Introduction

This post covers the most frequently asked Ab Initio interview questions. Ab Initio Software is a well-known business intelligence and data processing platform. It is used to build a wide range of business applications, including data warehousing and data quality management systems, operational systems, distributed application integration, and complex event processing.

Ab Initio Interview Questions For Freshers

If you're looking for Ab Initio interview questions for experienced candidates or freshers, you've come to the right place. Many reputable companies around the world offer Ab Initio roles. Ab Initio reportedly has a market share of roughly 2.2 percent.

So there is still plenty of room to build a career in Ab Initio development.

1. What is Ab Initio?

Ab Initio is a robust data processing and analysis tool used for ETL (Extract, Transform, Load) tasks in data warehousing and business intelligence environments. It offers a graphical development environment for creating, deploying, and managing complex data processing applications.

2. What is the architecture of Ab Initio?

Ab Initio follows a client-server architecture comprising components like Co>Operating System, Graphical Development Environment (GDE), Enterprise Metadata Environment (EME), and a scheduler. These components collaborate to design, develop, and execute data processing applications efficiently.

3. What does dependency analysis mean in Ab Initio?

Dependency analysis in Ab Initio involves identifying and managing dependencies between different components of a data processing application. It ensures the correct execution sequence and data integrity by analyzing relationships between graph components like data flows, transformations, and conditions.
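The idea of deriving an execution sequence from dependencies can be sketched in plain Python. This is only an illustration of the concept, not how Ab Initio or the EME implements it; the component names and the helper functions `execution_order` and `downstream_of` are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical component names; each key maps to the components it depends on.
dependencies = {
    "read_orders": set(),
    "read_customers": set(),
    "join_orders_customers": {"read_orders", "read_customers"},
    "rollup_by_region": {"join_orders_customers"},
    "write_report": {"rollup_by_region"},
}


def execution_order(deps):
    """Return one valid run order in which every component follows its inputs."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(deps, changed):
    """Return every component that directly or indirectly depends on `changed`."""
    affected = set()
    frontier = [changed]
    while frontier:
        current = frontier.pop()
        for name, inputs in deps.items():
            if current in inputs and name not in affected:
                affected.add(name)
                frontier.append(name)
    return affected


order = execution_order(dependencies)
impact = downstream_of(dependencies, "read_customers")
print(order)
print(sorted(impact))
```

The second helper answers the typical impact question: if one input changes, which downstream components need to be reviewed.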

4. How can you connect EME to Ab Initio Server?

To connect the Enterprise Metadata Environment (EME) to the Ab Initio Server, you must configure the EME server details in Ab Initio's configuration files. This includes specifying the EME server hostname, port number, and authentication credentials. Once configured, users can access and manage metadata within the EME environment directly from Ab Initio tools.

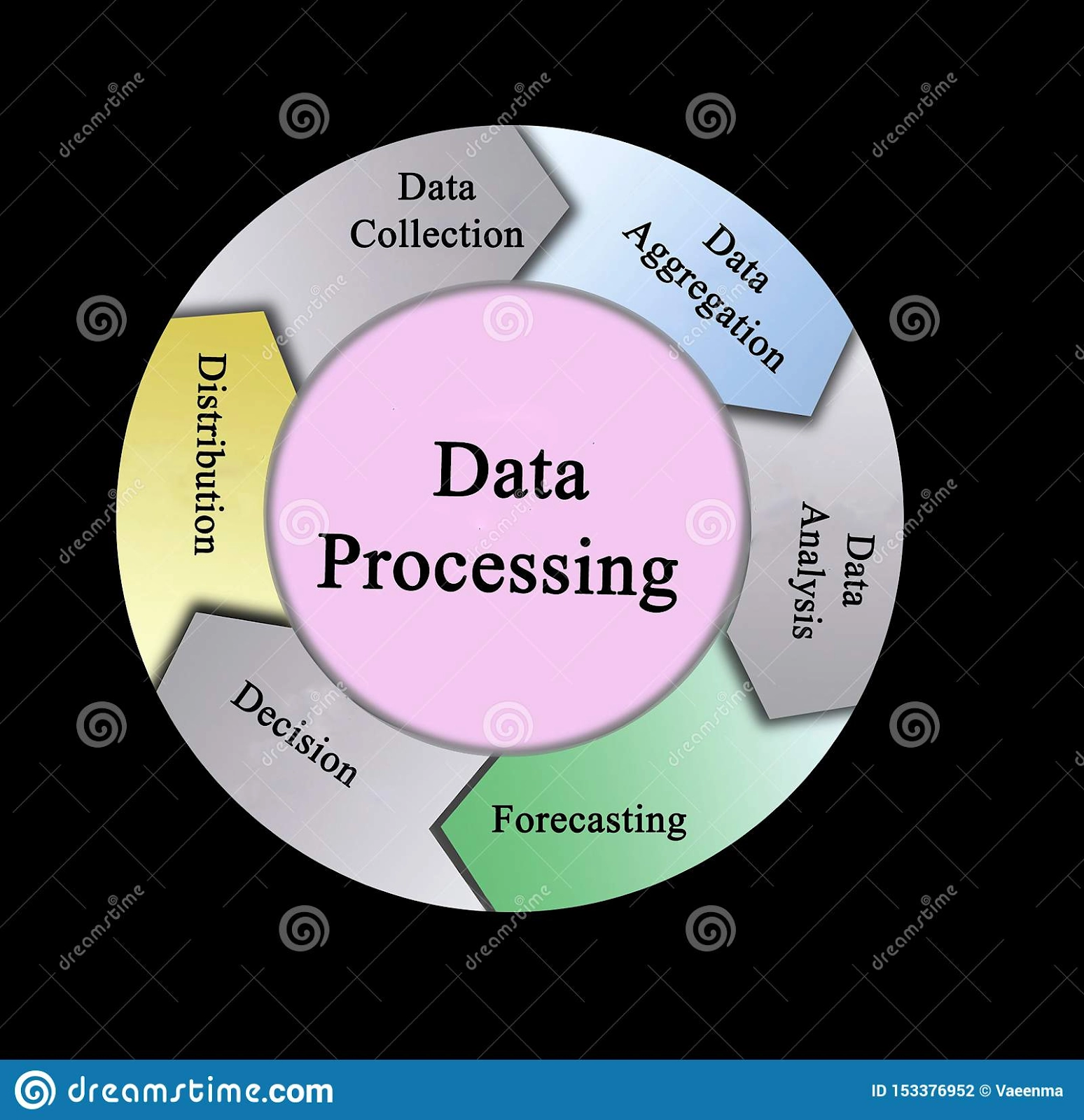

5. What advantages do you see in data processing?

Data processing offers many advantages. It lets users separate out the factors that matter to them. It also makes it possible to extract data from a completely unstructured format and organize it into various structures without losing pace.

Processing also helps remove defects in the data that would otherwise cause problems at a later stage. This is why data processing is so widely used across many kinds of work.

6. How precisely do you define the phrase "data processing," and can businesses rely on this method?

Data processing quickly converts data from an unusable form into a useful one. How this happens can vary with factors such as the size and format of the data. The work usually follows a sequence of steps, and depending on the kind of data, these steps may be automatic or manual.

Because computers now carry out most of this work, automated approaches are more common than ever. Users can receive the processed data in various forms, including tables, graphs, charts, images, and vectors. These are among the main benefits that business owners can count on.

7. How is data handled, and what are the underlying principles of this method?

Processing depends heavily on gathering the data that a particular task calls for. In practice, data must first be stored and examined before it is processed. These are the key stages that shape this work:

- Data collection

- Presentation

- Final results

- Analysis

- Sorting

These are also regarded as the core principles to rely on for keeping the work on track.

8. After gathering the data, what would come next?

Once the data has been collected, the next step is to enter it into the appropriate system. The days when storage depended on paper documents are long gone. Data volumes are now very large, and data entry must be handled reliably.

A digital approach is a good choice because it lets users do this work quickly and without compromising anything. After that, a number of operations must be carried out for the relevant analysis. Conversion often matters a great deal here, and users are free to choose the output that best fits their expectations.

9. What exactly is a data processing cycle, and what relevance does it have?

Data is used often and has to be processed continually. This repeating sequence is called the data processing cycle. Depending on the type, size, and nature of the data, the cycle may deliver results quickly or may take more time.

As data grows more complex, more dependable and advanced methods are needed than the ones in use today. A well-defined data cycle helps keep that complexity to a minimum without requiring extra work.

10. What are the variables that affect data storage?

It depends mainly on sorting and filtering. It also depends heavily on the software being used.

11. Is effective communication essential for data processing, in your opinion? What is your area of strength in this regard?

The most important ability in this field is being able to rely on the data or information. Effective communication is certainly essential for many critical activities, including the presentation of information. An organization has many departments, and good communication keeps the information reliable and usable for all of them.

12. Imagine that we provide you with a new project. What would be your starting point and the crucial actions you take?

Before the team begins, the objective of the task must be stated clearly. This gives the work a firm direction, which is especially important when working with a brand-new data set. The next important step is effective data modeling, which includes validating the data and locating missing values. The final step is monitoring the results.

13. What does "validation" mean in the context of data?

It means the data in question is accurate and clean, so it can be used without concern. Data validation is widely regarded as one of the key components of a processing system.
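A minimal sketch of what a validation step might look like, written in Python for illustration. The field names `customer_id` and `amount` and the rules themselves are assumptions chosen for the example, not part of any Ab Initio component.

```python
def validate_record(record):
    """Return a list of problems found in one record (empty list means valid)."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    amount = record.get("amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(amount, (int, float)) or isinstance(amount, bool):
        problems.append("amount is not numeric")
    elif amount < 0:
        problems.append("amount is negative")
    return problems


# Hypothetical sample records.
records = [
    {"customer_id": "C001", "amount": 120.5},
    {"customer_id": "", "amount": 40},
    {"customer_id": "C003", "amount": -5},
]

valid = [r for r in records if not validate_record(r)]
rejected = [(r, validate_record(r)) for r in records if validate_record(r)]
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected records together with their reasons makes it easier to route them to a reject file or a review step later.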

14. How does data sorting work?

Data does not always arrive in a well-defined order; in practice, records often come in randomly. Sorting simply means arranging the data items in a desired order or into desired sets.
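As a small illustration, the following Python sketch sorts a handful of records on a two-part key. The records and field names are made up for the example.

```python
from operator import itemgetter

# Hypothetical records arriving in no particular order.
records = [
    {"region": "west", "customer_id": "C003", "amount": 75},
    {"region": "east", "customer_id": "C001", "amount": 120},
    {"region": "east", "customer_id": "C002", "amount": 40},
]

# Sort by region first, then by amount within each region.
by_region_then_amount = sorted(records, key=itemgetter("region", "amount"))
sorted_ids = [r["customer_id"] for r in by_region_then_amount]
print(sorted_ids)
```

Sorting on the same key that a later grouping or join step uses is what allows that step to process records group by group.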

15. What strategy can you use to combine numerous data sets quickly?

It's called Aggregation.

16. What distinguishes scientific data processing from commercial data processing?

Scientific data processing involves a large amount of computation, that is, arithmetic operations. It typically takes a small amount of input data and produces a large volume of output. Commercial data processing is different: the output is small relative to the input data, and the set of computational operations is limited.

17. What advantages does data analysis offer?

It guarantees the following:

- It helps explain developments related to the core tasks.

- It makes it possible to test hypotheses using an integrated approach.

- It detects patterns reliably.

18. What constitutes a data processing system's essential components?

These include a sorter, converter, aggregator, validator, analyzer, and summarizer.

19. What two stages of the data processing cycle can you think of, and how would you compare them?

The first is data collection, and the second is data preparation. In a data processing cycle, collection comes first and preparation follows. The first stage provides the baseline for the second, and the success and ease of the second stage depend on how accurately the first has been completed. Preparation is mainly the manipulation of important data. Collection breaks data sets apart, while preparation joins them together.

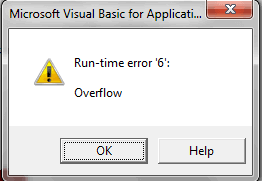

20. What do you mean by overflow errors, exactly?

Data processing often involves large computations, and the results do not always fit in the memory allotted for them. An overflow error occurs when a value needs more space than its storage location provides, for example when a value that requires more than 8 bits is stored in an 8-bit location.
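The 8-bit case can be demonstrated with Python's standard library. This is a sketch for illustration: `ctypes.c_uint8` silently wraps an out-of-range value, while `struct.pack` with the `"B"` (unsigned byte) format refuses it. The helper `fits_in_unsigned_byte` is a hypothetical name.

```python
import ctypes
import struct


def fits_in_unsigned_byte(value):
    """Return True if the value can be stored in 8 unsigned bits."""
    return 0 <= value <= 255


# ctypes does no overflow checking, so the value wraps around modulo 256.
wrapped = ctypes.c_uint8(300).value

# struct checks the range and raises an error instead of wrapping.
try:
    struct.pack("B", 300)
    packed_ok = True
except struct.error:
    packed_ok = False

print(wrapped, packed_ok)
```

Silent wrap-around is usually the more dangerous outcome, because the processing continues with a wrong value instead of stopping.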

21. What factors can compromise data integrity?

A number of faults can cause this problem and lead to many other issues. They are:

- Malware and bugs

- Human error

- Hardware errors

- Transfer errors, which usually include data compression beyond an acceptable limit

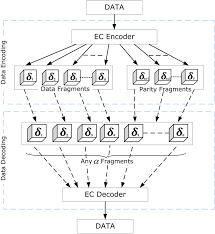

22. What does data encoding mean?

In many situations data must be kept confidential, and encoding can help with that. It ensures that information is kept in a form that only the sender and the recipient can understand.

23. What does EDP stand for?

It stands for Electronic Data Processing.

24. Name one method that remote workstations generally use for processing.

Distributed processing.

25. Describe a transaction file and explain how it differs from a sort file.

A transaction file generally holds the input data for the period during which a transaction is being processed. It can be used to update the master files. A sort file, on the other hand, is used in sorting to give the data a fixed position or order.
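How a transaction file updates a master file can be sketched as follows. This is a simplified Python illustration; the account numbers, the field names, and the function `apply_transactions` are hypothetical.

```python
def apply_transactions(master, transactions):
    """Return a new master mapping with each transaction applied in order."""
    updated = dict(master)  # leave the original master untouched
    for txn in transactions:
        account = txn["account"]
        updated[account] = updated.get(account, 0) + txn["amount"]
    return updated


# Hypothetical master file: account -> current balance.
master_balances = {"A-100": 500, "A-200": 250}

# Hypothetical transaction file: the changes collected during processing.
transaction_file = [
    {"account": "A-100", "amount": -120},
    {"account": "A-200", "amount": 75},
    {"account": "A-300", "amount": 40},  # account not yet in the master
]

new_master = apply_transactions(master_balances, transaction_file)
print(new_master)
```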

26. What is the use of Aggregate when Rollup is already available? The Rollup component is commonly used to summarize groups of data records, so where would Aggregate be used?

Both Aggregate and Rollup can summarize data, but Rollup is far more convenient to use, and a summarization written as a rollup is much easier to follow than the same logic written as an aggregate. Rollup also offers extra functionality, such as filtering records on both input and output. The two perform the same basic task; however, Rollup can expose intermediate results in main memory, whereas Aggregate does not support intermediate results.
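To make key-based summarization with input and output filtering concrete, here is a rough Python sketch. It is not Ab Initio DML and does not reproduce the Rollup component's actual parameters; the function `rollup`, its arguments, and the sample data are assumptions for illustration only.

```python
from collections import defaultdict


def rollup(records, key, value, input_filter=None, output_filter=None):
    """Summarize records per key, with optional filters before and after."""
    totals = defaultdict(lambda: {"count": 0, "total": 0})
    for record in records:
        # Input filter: drop records before they reach the summary.
        if input_filter and not input_filter(record):
            continue
        group = totals[record[key]]
        group["count"] += 1
        group["total"] += record[value]
    summary = dict(totals)
    # Output filter: drop summary rows after grouping.
    if output_filter:
        summary = {k: v for k, v in summary.items() if output_filter(v)}
    return summary


# Hypothetical sales records.
sales = [
    {"region": "east", "amount": 120},
    {"region": "east", "amount": 40},
    {"region": "west", "amount": 75},
    {"region": "west", "amount": -10},  # refund, removed by the input filter
    {"region": "north", "amount": 5},   # small group, removed by the output filter
]

result = rollup(
    sales,
    key="region",
    value="amount",
    input_filter=lambda r: r["amount"] > 0,
    output_filter=lambda g: g["total"] >= 50,
)
print(result)
```

The running `count` and `total` per key play the role of the intermediate results that are held in memory while records are still arriving.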

27. What types of layouts does Ab Initio support?

Ab Initio supports serial and parallel layouts, and a graph can contain both at the same time. A parallel layout depends on the degree of data parallelism. If the multifile system is 4-way parallel, a component in a graph can run 4-way parallel when its layout is defined to match that degree of parallelism.
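The idea of 4-way data parallelism can be imitated in Python: split the records into four partitions by key, process each partition independently, then combine the partial results. This is only a conceptual sketch; the partitioning function, the thread pool, and the sample data are assumptions and do not reflect how the Co>Operating System actually schedules work.

```python
from concurrent.futures import ThreadPoolExecutor
from zlib import crc32

DEGREE_OF_PARALLELISM = 4  # assumed to match a 4-way multifile system


def partition_by_key(records, key, ways):
    """Distribute records across `ways` partitions using a stable hash of the key."""
    partitions = [[] for _ in range(ways)]
    for record in records:
        index = crc32(str(record[key]).encode("utf-8")) % ways
        partitions[index].append(record)
    return partitions


def total_amount(partition):
    """The per-partition work: each copy sees only its own slice of the data."""
    return sum(r["amount"] for r in partition)


# Hypothetical input: 100 records with amounts 1..100.
records = [{"customer_id": f"C{i:03d}", "amount": i} for i in range(1, 101)]

partitions = partition_by_key(records, "customer_id", DEGREE_OF_PARALLELISM)
with ThreadPoolExecutor(max_workers=DEGREE_OF_PARALLELISM) as pool:
    partial_totals = list(pool.map(total_amount, partitions))

grand_total = sum(partial_totals)
print(partial_totals, grand_total)
```

A serial layout corresponds to running `total_amount` once over the whole record list; the result is the same, only the degree of parallelism differs.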

28. How can default rules be added in a transform?

Double-click the transform parameter on the parameter tab page of the component properties to open the transform editor. In the transform editor, open the Edit menu and select Add Default Rules from the dropdown. It presents two options:

1) Match Names

2) Wildcard.

29. What exactly is a local lookup?

If your lookup file is a multifile that is partitioned and sorted on a particular key, the local lookup function can be used in place of the ordinary lookup function. The lookup is then local to a particular partition, determined by the key.

A lookup file consists of data records that can be held in main memory.

As a result, a transform function can retrieve records much faster than it could from disk. This lets the transform component process data records from multiple files quickly.
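The difference between searching one partition and searching everything can be sketched in Python. This is a conceptual illustration rather than Ab Initio DML; the functions `partition_index`, `build_partitioned_lookup`, and `lookup_in_partition`, as well as the customer data, are hypothetical.

```python
from zlib import crc32

WAYS = 4  # assumed number of partitions in the multifile


def partition_index(key, ways=WAYS):
    """Map a key to its partition with a stable hash."""
    return crc32(str(key).encode("utf-8")) % ways


def build_partitioned_lookup(rows, key, ways=WAYS):
    """Split lookup rows into one in-memory dictionary per partition."""
    partitions = [{} for _ in range(ways)]
    for row in rows:
        partitions[partition_index(row[key], ways)][row[key]] = row
    return partitions


def lookup_in_partition(partitions, partition_number, key):
    """Search only one partition's slice, as a partition-local lookup would."""
    return partitions[partition_number].get(key)


# Hypothetical lookup data.
customers = [
    {"customer_id": "C001", "name": "Asha"},
    {"customer_id": "C002", "name": "Ben"},
]
lookup_parts = build_partitioned_lookup(customers, "customer_id")

home = partition_index("C001")
hit = lookup_in_partition(lookup_parts, home, "C001")
miss = lookup_in_partition(lookup_parts, (home + 1) % WAYS, "C001")
print(hit, miss)
```

The local search only finds the record when the main data is partitioned on the same key as the lookup data, which is why the partitioning condition matters.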

30. Describe the differences between a lookup file and a lookup and provide an example.

The lookup file represents one or more serial files (flat files). The amount of data is small enough to be held in memory, which lets transform functions retrieve records much more quickly than they could from disk.
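As an example, the sketch below loads a small flat file into memory once and then uses it to enrich records by key. It is written in Python for illustration only; the file contents, the field names, and the functions `load_lookup` and `enrich` are assumptions.

```python
import csv
import io

# Hypothetical flat file contents, small enough to keep in memory.
FLAT_FILE = "country_code,country_name\nIN,India\nDE,Germany\nBR,Brazil\n"


def load_lookup(text, key_field):
    """Read the flat file once and index its rows by the key field."""
    reader = csv.DictReader(io.StringIO(text))
    return {row[key_field]: row for row in reader}


country_lookup = load_lookup(FLAT_FILE, "country_code")


def enrich(order):
    """Add the country name to an order using the in-memory lookup."""
    match = country_lookup.get(order["country_code"])
    name = match["country_name"] if match else "UNKNOWN"
    return {**order, "country_name": name}


enriched = enrich({"order_id": 1, "country_code": "DE"})
unmatched = enrich({"order_id": 2, "country_code": "ZZ"})
print(enriched, unmatched)
```

Each call to `enrich` is a dictionary access in memory, which is the reason a lookup is faster than rereading the file from disk for every record.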