Introduction

Intelligence is the ability to learn and solve problems. Artificial intelligence (AI) is the set of techniques that gives machines this ability, so that they can reason about and solve problems in ways that resemble human thinking. It is applied across many industries, and you may encounter artificial intelligence questions in interviews or in daily life. In this blog, we will look at some of the popular artificial intelligence questions.

Artificial Intelligence Questions and Answers

Artificial intelligence is a broad topic, and you can be asked many different artificial intelligence questions in technical interviews. To help you get a solid grasp of the topic, we have selected some of the top artificial intelligence questions that one must know.

1. What is artificial intelligence?

Artificial intelligence aims to mimic human behaviour and thinking. Many modern AI systems are built from machine learning and deep learning models, which are trained on large datasets with suitable algorithms. Once trained, these models can make human-like predictions and decisions.

2. What are some of the applications of artificial intelligence?

The applications of artificial intelligence are:

- Speech Recognition: Speech recognition is the technology that converts spoken language into written text. It involves analyzing audio signals to identify and transcribe spoken words accurately. It is used in virtual assistants (e.g., Siri, Google Assistant), dictation software, and voice-activated systems.

- Automated Chatbots: Chatbots are computer programs designed to simulate conversation with human users. Automated chatbots use predefined responses or artificial intelligence to understand and generate human-like responses. They are deployed in customer support, online assistance, and various websites to engage and assist users.

- Natural Language Processing (NLP): NLP is a field of artificial intelligence that focuses on the interaction between computers and human languages. It involves the ability of machines to understand, interpret, and generate human-like language. It is used in language translation, sentiment analysis, chatbots, and voice recognition systems.

- Recommendation Engine: A recommendation engine, also known as a recommender system, is a software algorithm that suggests items to users based on their preferences, behavior, or past interactions. It is commonly found in e-commerce platforms (e.g., Amazon recommendations), streaming services (e.g., Netflix suggestions), and social media (e.g., personalized content feeds).

3. Why do we need Artificial Intelligence?

Artificial Intelligence (AI) has become important in today's technological landscape for several reasons. It automates repetitive tasks, which improves efficiency and lets people focus on the creative and complex parts of their work. It can analyze datasets that are far too large for people to review by hand and extract valuable insights from them. Decision-making becomes more informed when AI systems analyze data and assess probabilities. The personalized experiences offered by AI, from tailored recommendations to customized services, also improve user satisfaction.

4. What is machine learning? What are the different types of machine learning?

Machine learning is a type of artificial intelligence that enables computers to learn and make decisions without being explicitly programmed for every possible scenario. Instead, a machine learning system uses algorithms to analyze data, identify patterns, and improve its performance over time by learning from examples and experience. The main types are:

| Type | Description |
|---|---|
| Supervised | Trained on labeled data. Primarily used for prediction (classification and regression). |
| Unsupervised | Trained on unlabeled data. The algorithm discovers the relationships in the data on its own and is generally used for clustering. |
| Reinforcement | A feedback-based technique in which an agent learns to behave in an environment by performing actions and observing the results. |

5. What is deep learning?

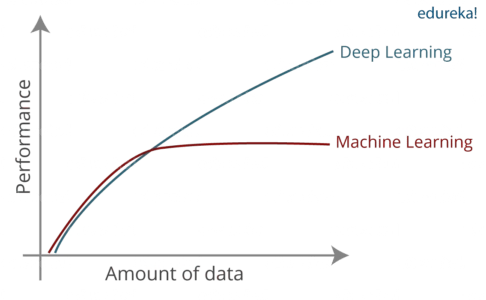

Deep Learning is a subset of Machine Learning, and it is based on Neural Networks. A neural network tries to mimic the brain's functioning by learning from experience. It is made up of layers of connected neurons (nodes), each of which passes information on to the nodes in the next layer. As the network is trained over successive epochs, its parameters are tuned to get the highest accuracy. Deep learning usually works much better than traditional machine learning when the dataset is very large.

7. What is the difference between artificial intelligence, machine learning, and deep learning?

| Artificial Intelligence (AI) | Machine Learning (ML) | Deep Learning (DL) |
|---|---|---|
| General concept of machines mimicking human intelligence. | Subset of AI, involves systems learning from data to improve performance. | Subset of ML, involves neural networks with multiple layers for learning intricate patterns. |
| Broader concept encompassing various techniques and approaches. | Specific subset focusing on algorithms that enable machines to learn from data. | Specialized subset using neural networks with deep architectures for complex tasks. |
| Can involve rule-based systems, knowledge representation, and various techniques. | Learns from data, identifying patterns and making predictions or decisions. | Learns hierarchical features from data through multiple layers of neural networks. |
| Speech recognition, robotics, expert systems. | Regression, classification, clustering. | Image and speech recognition, natural language processing. |
| May or may not heavily rely on large datasets. | Relies on training data, labeled or unlabeled depending on the task. | Often requires large labeled datasets for training complex models. |
| Can be rule-based or require explicit programming. | Involves human intervention for feature engineering and model selection. | Initially human-guided, but can automatically learn intricate features. |
| Variable, can be simple rule-based systems or complex knowledge representation. | Complexity depends on the chosen algorithms and model architecture. | Involves complex architectures with many layers, suitable for intricate tasks. |
| Broad range, including robotics, expert systems, and game playing. | Predictive analytics, recommendation systems, fraud detection. | Image and speech recognition, natural language processing, autonomous vehicles. |

8. What are the types of AI?

Artificial intelligence can be divided into different types on the basis of capabilities and functionalities. Based on capability, the types are:

- Narrow or Weak AI: This type of AI is designed and trained for a specific task or a narrow set of tasks. For example, virtual personal assistants (e.g., Siri, Alexa), image and speech recognition systems.

- General or Strong AI: This refers to machines with the ability to understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence.

- Artificial Superintelligence (ASI): ASI surpasses human intelligence in every aspect and is capable of outperforming the best human minds in virtually every field.

9. Explain some of the commonly used artificial neural networks.

Some commonly used artificial neural networks (ANNs) are:

- Feedforward Neural Network (FNN): FNN is the simplest form of artificial neural network where information travels in one direction, from input nodes through hidden layers to output nodes without forming cycles or loops.

- Feedback Neural Network: "Feed backward neural network" is not a standard term. Networks with feedback connections are usually called recurrent neural networks (RNNs); their connections form cycles, which allows information to persist.

- Convolutional Neural Network (CNN): CNN is designed for image processing and pattern recognition. It uses convolutional layers to automatically and adaptively learn spatial hierarchies of features.

- Recurrent Neural Network (RNN): RNN has connections that form cycles, allowing information to persist. It is well-suited for tasks involving sequential data, as it maintains a memory of previous inputs. It is used in natural language processing, speech recognition, and time series analysis.

- Long Short-Term Memory (LSTM): LSTM is a type of RNN designed to overcome the vanishing gradient problem. It uses a more complex structure with memory cells and gates to retain and update information over long sequences. It is effective in tasks requiring modeling long-term dependencies, such as language translation and speech recognition.

- Autoencoders: Autoencoders are unsupervised learning models that learn efficient representations of data by training to reconstruct input data from a reduced, intermediate representation (encoder-decoder structure). They are used for data compression, denoising, and feature learning, as well as in generative models.
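To make the feedforward case from the list above concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the hand-picked weights, and the choice of a sigmoid activation are all assumptions made for illustration; a real network would learn its weights during training.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W x + b), one row of W per neuron."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z))
    return outputs

def forward(x, layers):
    """Pass the input through each layer in order; there are no cycles or loops."""
    for weights, biases in layers:
        x = dense(x, weights, biases, sigmoid)
    return x

# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([1.0, 2.0], layers)
print(output)
```

Information flows strictly from the input list to the output list, which is what distinguishes this network from the recurrent variants in the same list.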

10. What are parameters in deep learning?

Parameters are the coefficients of the model, such as the weights and biases of a neural network. They are learned from the data rather than set by hand: during training, the optimization algorithm adjusts them step by step to minimize the error. The only thing you have to do with these parameters is initialize them.

11. What are hyperparameters in deep learning models?

Hyperparameters are the settings that control the training process. They are adjustable and directly affect how well the model trains. Unlike parameters, they are not learned from the data; we set them before training starts. Some common hyperparameters are the learning rate, the number of epochs, and the batch size.
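The difference between parameters and hyperparameters is easiest to see in code. The sketch below fits a straight line with gradient descent; the toy data and the function name `train_linear` are made up for illustration. Here `w` and `b` are the parameters (we only initialize them), while `learning_rate` and `epochs` are hyperparameters that we choose beforehand.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by minimizing the mean squared error."""
    w, b = 0.0, 0.0  # parameters: initialized by us, then learned from the data
    n = len(xs)
    for _ in range(epochs):  # hyperparameter: how many passes over the data
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w  # hyperparameter: size of each update
        b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (an assumption for this example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

With these settings the learned values end up very close to the slope 2 and intercept 1 used to generate the data. Changing the hyperparameters changes how quickly, or whether, training gets there.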

12. What is "Q-Learning"?

Q-Learning is a reinforcement learning algorithm used in machine learning. It enables an agent to make decisions in an environment by learning a Q-value for each state-action pair. The agent explores the environment, updating Q-values based on rewards and iteratively improving decision-making. Q-Learning is commonly applied in scenarios with discrete actions and states, such as games or robotic control tasks.
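A small tabular example can make the update rule concrete. The sketch below is only an illustration: the five-state corridor environment, the reward of 1 at the right end, and the values of `alpha`, `gamma`, and `epsilon` are all assumptions chosen to keep it short.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the terminal goal
ACTIONS = [0, 1]  # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical corridor: reward 1.0 only when the goal state is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # one Q-value per state-action pair
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy exploration, with ties broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward reward + gamma * max Q(s', .)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train_q_table()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

After training, the greedy policy picks "move right" in every non-terminal state, because that action has the higher Q-value on the way to the goal.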

13. What do you mean by overfitting?

Overfitting occurs when a machine learning model is so complex that it fits the training data almost perfectly, noise included. Because such a model has memorized the training data instead of learning the general pattern, it performs poorly on unseen data.

14. How is overfitting fixed?

Overfitting can be reduced by:

- Cross-validation: We split the data into several folds, train on some of them, and validate on the fold that was held out. A validation score that is much worse than the training score reveals overfitting early.

- Regularization: This shrinks the coefficient estimates towards zero, which discourages the model from becoming overly complex.

- Early stopping: When we train a model with an iterative process, we stop the training before the model starts to overfit.

- Pruning: This technique is used for decision trees. It removes branches that add little predictive value.

- Dropout: Randomly selected neurons are ignored during training.
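Two of the techniques above are simple enough to sketch in a few lines. The helper names `early_stopping_epoch` and `k_fold_indices`, the `patience` setting, and the validation losses are hypothetical and only serve the illustration; libraries offer more complete versions of both ideas.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch with the best validation loss, stopping once the
    loss has failed to improve for `patience` epochs in a row."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss stopped improving
    return best_epoch

def k_fold_indices(n_samples, k):
    """Split the indices 0..n_samples-1 into k (train, validation) pairs."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]
    splits = []
    for i in range(k):
        validation = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        splits.append((train, validation))
    return splits

# Made-up validation losses: they improve, then start rising again.
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.7, 0.5]))  # prints 2
print(k_fold_indices(6, 3)[0])
```

Note that the early-stopping helper never looks at the final loss of 0.5, because training would already have been stopped two epochs after the best one.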

15. Which programming language is used for AI?

Several programming languages are used in AI development, each with its strengths. Common languages for AI include:

- Python: Widely used for its simplicity, extensive libraries (e.g., TensorFlow, PyTorch), and community support.

- Java: Known for its portability and scalability, commonly used in enterprise-level AI applications.

- C++: Offers high performance and is used in AI applications where efficiency is critical.

- R: Popular for statistical analysis and data visualization in AI research.

- Lisp: Historically significant in AI development, especially for symbolic reasoning.

- Prolog: Suitable for rule-based systems and symbolic reasoning in AI.

- Julia: Known for its high-performance numerical computing, gaining popularity in AI research.

16. What do you mean by vanishing and exploding gradient?

During backpropagation, the gradient that reaches an early layer is a product of many per-layer terms. The vanishing gradient problem happens when these terms are repeatedly smaller than one: the gradient shrinks at each step until it becomes infinitesimally small, and the early layers stop learning. On the other hand, if the terms are repeatedly larger than one, the gradient keeps growing and becomes so large that it makes the network unstable. This is the exploding gradient problem.
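The effect of repeated multiplication is easy to check numerically. The sketch below is a simplification that assumes every layer scales the gradient by the same constant factor, which real networks do not do exactly; the factors 0.5 and 1.5 and the depth of 50 layers are arbitrary choices.

```python
def backprop_scale(per_layer_factor, n_layers):
    """Multiply a starting gradient of 1.0 by the same factor once per layer."""
    gradient = 1.0
    for _ in range(n_layers):
        gradient *= per_layer_factor
    return gradient

vanishing = backprop_scale(0.5, 50)  # factor below one: shrinks towards zero
exploding = backprop_scale(1.5, 50)  # factor above one: grows without bound
print(vanishing, exploding)
```

Even with factors this close to one, fifty layers are enough to push the gradient below 1e-12 in the first case and above 1e6 in the second.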

17. What is meant by gradient descent?

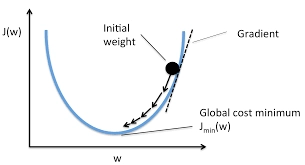

Gradient descent is an optimization algorithm used in machine learning and deep learning to minimize the error or loss function during the training of a model. It works by iteratively adjusting the model's parameters in the opposite direction of the gradient (slope) of the loss function with respect to those parameters. This process continues until the algorithm converges to a minimum where the gradient is close to zero. Gradient descent is a fundamental technique for updating the parameters of a model to find the optimal values that result in the best performance.
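As a minimal sketch, assume the loss is the one-dimensional function f(x) = (x - 3)^2, whose gradient is 2(x - 3). The function name `gradient_descent`, the learning rate, and the stopping tolerance below are illustrative choices, not fixed parts of the algorithm.

```python
def gradient_descent(grad, start, learning_rate=0.1, tolerance=1e-8, max_steps=10_000):
    """Step against the gradient until it is close to zero."""
    x = start
    for _ in range(max_steps):
        g = grad(x)
        if abs(g) < tolerance:  # converged: the slope is nearly flat
            break
        x -= learning_rate * g  # move in the opposite direction of the gradient
    return x

# Gradient of f(x) = (x - 3)^2, which has its minimum at x = 3.
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
print(minimum)
```

Starting from 0, each step moves `x` a little closer to 3, and the loop stops once the slope is practically zero.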

18. What is an intelligent agent in AI, and where is it used?

An intelligent agent in AI is a system that perceives its environment and takes actions to achieve specific goals. It receives input from the environment through sensors, processes this information internally, and then produces actions through actuators. Intelligent agents can be applied in various domains, including robotics, gaming, recommendation systems, and autonomous vehicles, where they interact with and adapt to their surroundings to accomplish tasks efficiently.

19. What is a Markov Decision Process?

A Markov Decision Process (MDP) is a mathematical framework used in artificial intelligence and decision-making problems. It consists of states, actions, transition probabilities, rewards, and a discount factor. The key assumption is the Markov property, meaning that the future state depends only on the current state and action, not the sequence of previous states and actions. MDPs are employed in reinforcement learning to model decision-making processes, enabling agents to learn optimal policies for sequential decision tasks.
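To see how these pieces fit together, here is a sketch that solves a tiny MDP with value iteration, one standard way of computing optimal state values. The two-state MDP, its action names, and its rewards are invented for this example.

```python
def value_iteration(transitions, gamma=0.9, tolerance=1e-9):
    """transitions[state][action] is a list of (probability, next_state, reward).
    A state with no actions is treated as terminal."""
    values = {state: 0.0 for state in transitions}
    while True:
        delta = 0.0
        for state, actions in transitions.items():
            if not actions:
                continue  # terminal state keeps value 0
            # Best expected return over all actions available in this state.
            best = max(
                sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - values[state]))
            values[state] = best  # updated in place during the sweep
        if delta < tolerance:
            return values

# Hypothetical MDP: a safe action with a small reward, or a risky action that
# either pays more or sends the agent back to the start.
mdp = {
    "start": {
        "safe": [(1.0, "goal", 1.0)],
        "risky": [(0.5, "goal", 3.0), (0.5, "start", 0.0)],
    },
    "goal": {},
}
values = value_iteration(mdp)
print(values)
```

The value of `start` converges to about 2.727, which is the return of always choosing the risky action. That is higher than the return of 1 for the safe action, so the optimal policy here is to take the risk.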

20. What do we mean by deep learning frameworks such as Keras, TensorFlow, and PyTorch?

A deep learning framework is a library or a tool that helps us build deep learning models easily and quickly. Deep learning frameworks provide a straightforward way to use a collection of pre-built and optimized components. This allows us to create deep learning models without going into the details of underlying algorithms.

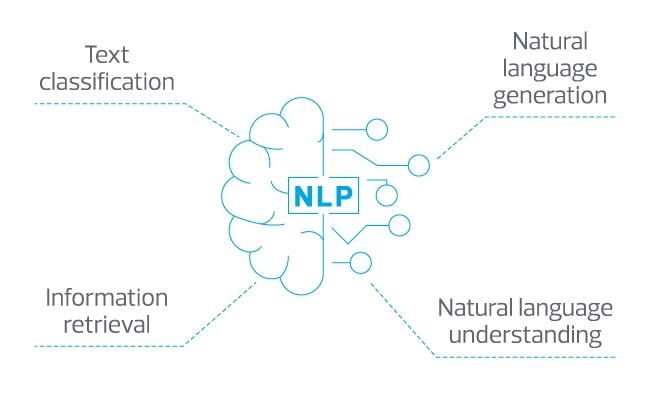

22. What do we mean by NLP?

NLP stands for natural language processing. It aims to enable computers to understand text and speech the way humans do. Some of the tasks performed by NLP are speech recognition, word sense disambiguation, named entity recognition, and natural language generation.

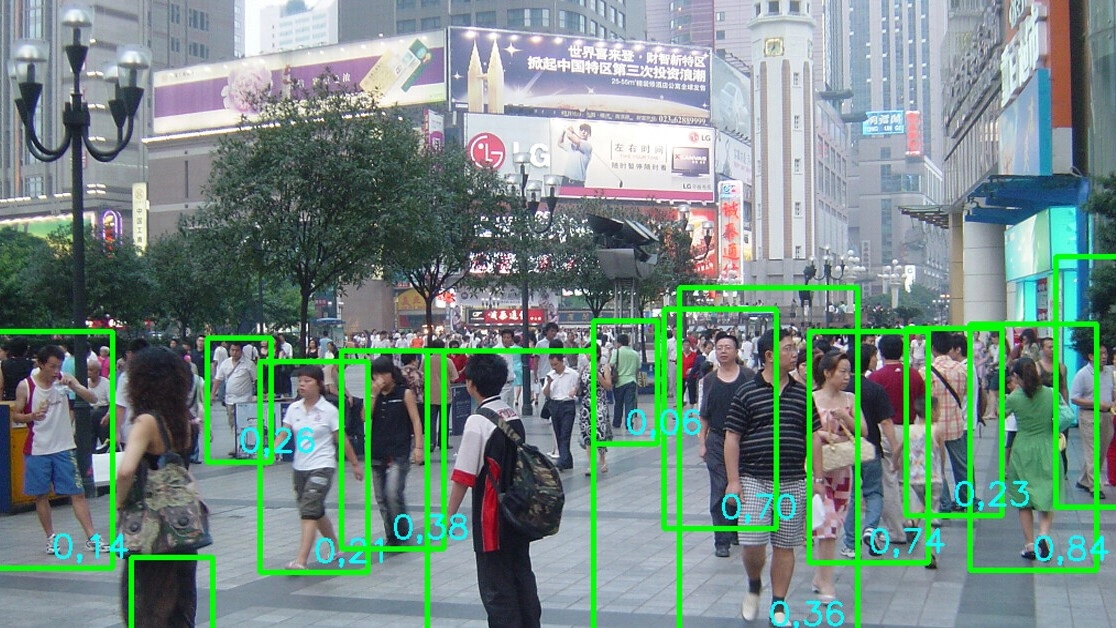

23. What do we mean by computer vision?

Computer vision is a field of Artificial Intelligence that aims to teach machines to see and perceive images. In computer vision, the machine processes the pixels of an image and extracts meaningful information from them.

24. What are some of the tasks performed by computer vision?

Some of the popular tasks performed by computer vision are:

- Facial Recognition

- Fingerprint recognition

- Object detection

- Character Recognition

- Medical imaging

- Automotive safety

- Deep space imaging

- Surveillance for security

25. What are parametric and non-parametric models?

A parametric model makes specific assumptions about the functional form of the underlying data distribution. It has a fixed number of parameters: collecting more data refines their values but does not add new parameters. For example, linear regression is a parametric model that assumes a linear relationship between input features and the output.

On the other hand, a non-parametric model does not make explicit assumptions about the functional form of the data distribution. It is more flexible, allowing the model complexity to grow with the amount of data. For example, k-Nearest Neighbors (k-NN) is a non-parametric model that classifies data points based on the majority class of their k nearest neighbors.
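The k-NN idea fits in a few lines of Python. In the sketch below, the two-dimensional toy points, their labels "a" and "b", and the function name `knn_predict` are made up for illustration. Notice that there is no training step that compresses the data into a fixed set of coefficients; the model is the stored training set itself, so it grows with the data.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Toy data: one cluster near (1, 1) and another near (6, 6).
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(points, labels, (1.5, 1.5)))  # prints a
print(knn_predict(points, labels, (6.5, 6.5)))  # prints b
```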

26. What is the role of attention mechanisms in neural networks?

Attention mechanisms enhance neural network performance by allowing the model to focus on specific parts of input data. They assign varying levels of importance to different elements, improving the model's ability to capture intricate patterns and relationships.
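One widely used form is scaled dot-product attention, sketched below in plain Python for a single query. The answer above does not name a specific variant, so this choice, along with the toy query, keys, and values, is an assumption made for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# The query matches the first key, so the first value gets more weight.
weights, output = attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, output)
```

The weights are the "levels of importance" mentioned above: they are all positive, sum to one, and are larger for the inputs that are most relevant to the query.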

27. Explain the concept of federated learning and its applications in decentralized environments.

Federated learning enables model training across decentralized devices without sharing raw data. Applications include scenarios where data privacy is crucial, such as mobile devices, Internet of Things (IoT), and edge computing, allowing models to be trained collaboratively while preserving individual data privacy.
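A common way to combine the locally trained models is to average their weights, with each device weighted by how much data it holds (often called federated averaging). The sketch below shows only that aggregation step; the client weights and dataset sizes are made-up numbers, and the local training that would produce them is left out.

```python
def federated_average(client_weights, client_sizes):
    """Average the clients' model weights, weighting each client by its data size.
    Only the weights reach the server; the raw data stays on each device."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical devices: the second has three times as much data.
global_weights = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
print(global_weights)  # prints [2.5, 3.5]
```

The server would then send the averaged weights back to the devices for the next round of local training.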

28. Explain the concept of explainable AI (XAI) and its importance.

Explainable AI focuses on making AI models transparent and interpretable. It's essential for building trust and understanding model decisions, particularly in applications where accountability and ethical considerations are paramount, such as healthcare and finance.

29. What are GANs (Generative Adversarial Networks) and how do they work?

GANs consist of a generator creating realistic data and a discriminator distinguishing real from generated data. They engage in a competitive process, improving the generator's ability to create realistic outputs. GANs are used in image generation, style transfer, and data augmentation.

30. Explain the concept of transfer learning in deep learning.

Transfer learning involves taking a model that was pre-trained on a source task and reusing it to boost performance on a related target task. The idea is to leverage knowledge gained from the source task, speeding up learning and requiring less data for the target task.

31. What role does AI play in personalized medicine, and what challenges are associated with it?

AI in personalized medicine analyzes individual patient data to tailor treatment plans. Challenges include ensuring patient data privacy, interpreting complex AI-generated decisions, and addressing regulatory compliance to guarantee the ethical use of AI in healthcare.

32. Discuss the potential ethical implications of AI and machine learning in society.

Ethical concerns in AI include bias, privacy issues, job displacement, and transparency. Addressing these concerns requires responsible development practices, transparent decision-making processes, and adherence to ethical guidelines to ensure fair and equitable AI deployment.

33. Describe the challenges and advancements in natural language processing (NLP).

NLP faces challenges in understanding context and nuances. Advances include transformer architectures like BERT, which capture contextual relationships in language, significantly improving the performance of NLP models in tasks such as sentiment analysis and question answering.

34. How do adversarial attacks impact machine learning models, and how can they be mitigated?

Adversarial attacks involve manipulating input data to mislead machine learning models. Mitigation techniques include adversarial training (training models with adversarial examples) and input preprocessing to enhance robustness against adversarial perturbations.
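To illustrate how small the manipulation can be, the sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a tiny logistic-regression classifier. The choice of attack, the classifier's weights, the input, and the value of `epsilon` are all assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """Nudge every feature by epsilon in the direction that increases the loss."""
    p = predict(weights, bias, x)
    # Gradient of the cross-entropy loss with respect to the input x.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

weights, bias = [2.0, -1.0], 0.0  # hypothetical trained classifier
x, label = [0.3, 0.2], 1          # an input that is correctly classified as 1
x_adv = fgsm(weights, bias, x, label, epsilon=0.3)
print(predict(weights, bias, x), predict(weights, bias, x_adv))
```

The original input is classified as class 1 (probability above 0.5), while the perturbed input falls below 0.5 and is misclassified. Adversarial training would add examples like `x_adv`, with their correct labels, to the training set.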

35. Explain the concept of self-supervised learning and its advantages.

Self-supervised learning involves training models without manually labeled data. It leverages inherent structures within the data to generate pseudo-labels for training. This approach is advantageous in scenarios where labeled data is scarce, as the model can learn meaningful representations from the data itself.
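One simple way to generate such pseudo-labels for text is to hide a word and ask the model to predict it. The sketch below builds these training pairs from a raw sentence; the `[MASK]` placeholder and the function name `masked_pairs` are illustrative assumptions, and no model is trained here.

```python
def masked_pairs(tokens, mask_token="[MASK]"):
    """Create (input, pseudo-label) pairs by hiding one token at a time.
    The labels come from the text itself, so no human annotation is needed."""
    pairs = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        pairs.append((masked, target))
    return pairs

pairs = masked_pairs("the cat sat".split())
for masked, target in pairs:
    print(masked, "->", target)
```

Each pair can then be used like an ordinary supervised example, even though nobody labeled the sentence by hand.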