Introduction

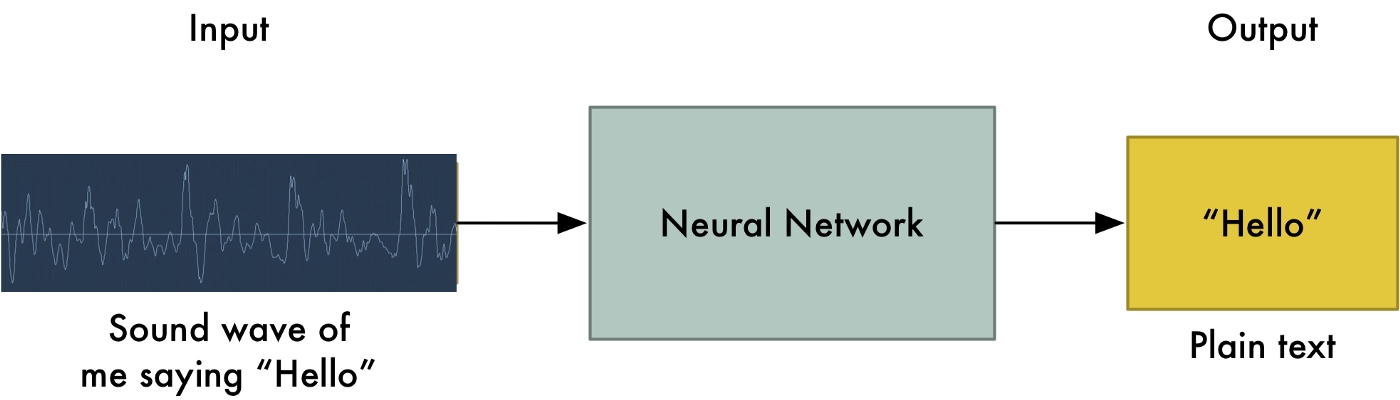

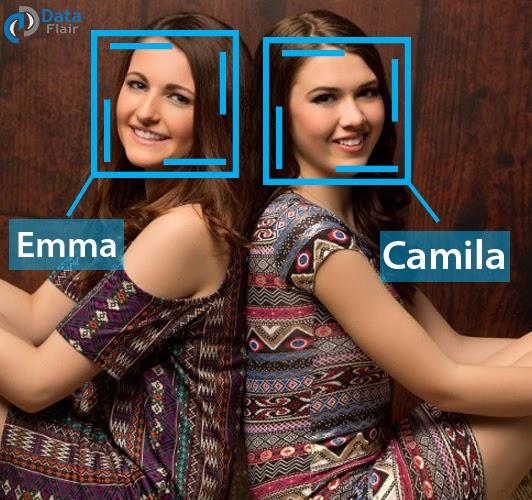

AI, as we know, is one of the most in-demand technologies today and is likely to remain so for several more years. Artificial Neural Networks (ANNs) are one of the subfields of Artificial Intelligence. A common example of an ANN at work is Google Lens processing and recognising the object it captures. Many of you must have used Google Lens for a variety of tasks, such as translating some text on a wall or identifying the breed of a dog you instantly fell in love with. But have you ever wondered about the sophisticated engineering behind this? We’ll find out in this blog.


ANN and how it works

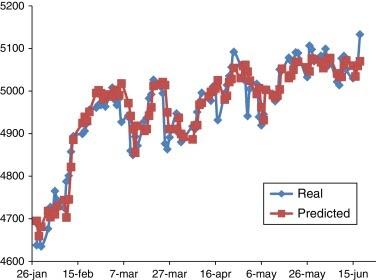

The best way to understand an ANN and how it works under the hood is to think about how you learn and perceive your surroundings. Remember the first time you rode a bicycle? It took countless iterations of riding, falling, and getting back up again, each time picking up some minute detail from your errors for the next time you hit the pedals. At a high level, that is what an ANN is all about: executing a task and learning from its mistakes.

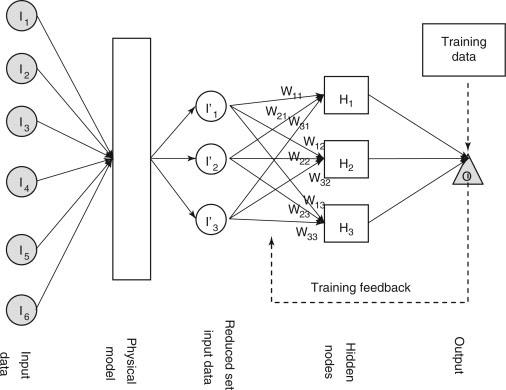

ANNs loosely simulate the neural networks in the human brain. A human brain contains billions of neurons, and it is these neurons that give humans the ability to learn and to recognise things from past experience. The neurons work closely with each other towards a common objective. ANNs simulate this neural structure with a layered architecture that consists of three major components: the input layer, the hidden layer, and the output layer.


The input layer

The input layer is the first component in the architecture of an ANN. It receives the values of the various explanatory attributes (the input variables) and includes the bias term as well. So if we have n input variables or attributes, the size of the input layer will be n+1, where the extra node is the bias term.
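To make the n+1 size concrete, here is a minimal sketch of building the input layer for a single sample. It assumes the common convention of modelling the bias as an extra constant input fixed at 1.0; the function name `input_layer` and the sample values are hypothetical, chosen only for illustration.

```python
def input_layer(features: list[float], bias: float = 1.0) -> list[float]:
    """Return the n + 1 values fed into the network: the n attributes plus the bias.

    Assumption: the bias is represented as a constant extra input (1.0 by default).
    """
    return list(features) + [bias]


x = [0.7, 0.2, 0.9]   # hypothetical sample with n = 3 attributes
layer = input_layer(x)
print(len(layer))     # n + 1 = 4
print(layer)
```

Treating the bias as just another input means it can later be given a weight and learned in exactly the same way as the weights on the real attributes.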


The hidden layer

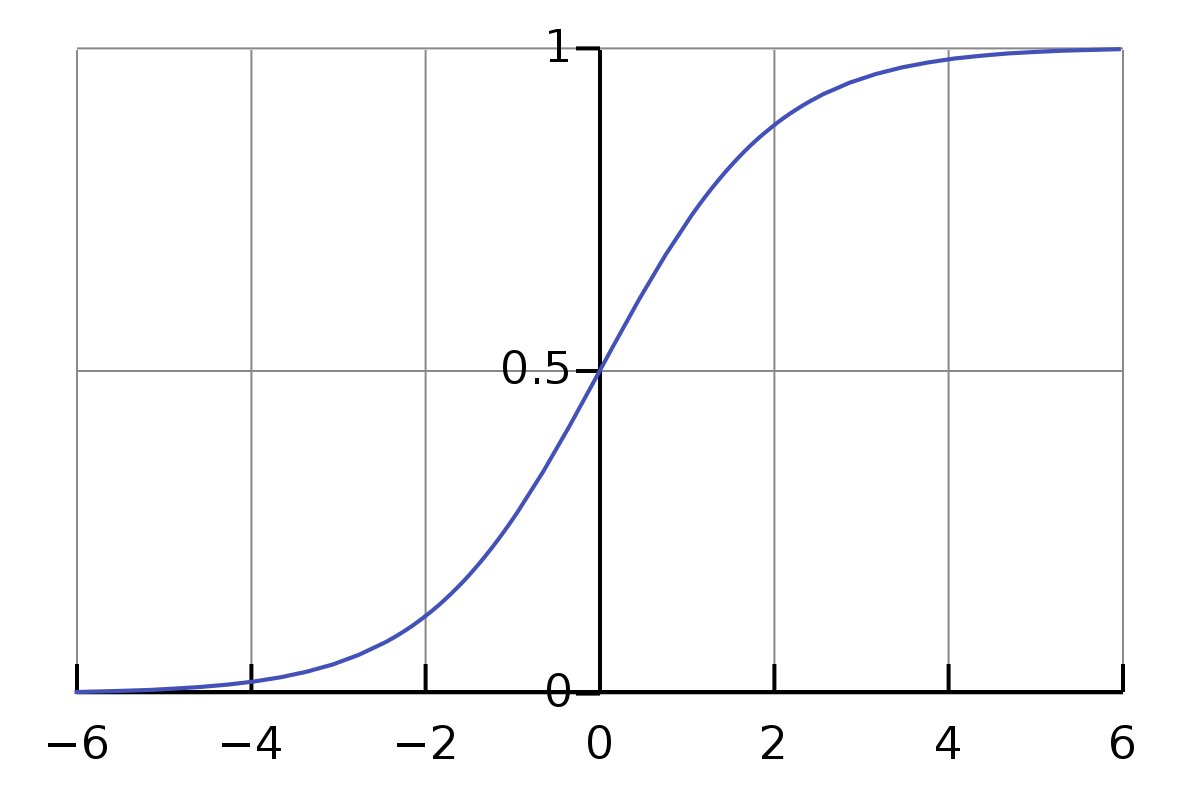

This is where the majority of the computation happens. The hidden layer receives data from the input layer, and this data is nothing but the input variables. Now, not all of these variables are necessarily equally important for computing the final prediction. For example, let’s say we want to train an ANN model to recognise Siberian tigers. Some features of a Siberian tiger are crucial for the prediction, like its golden fur with prominent dark stripes and its canines. These features would be given more importance than other features of the input. Other features, like the ears or the tail, are relatively less crucial: they may still be used to make the prediction, but they wouldn’t be considered defining features of a tiger. To differentiate between the variables based on the impact they have on the final prediction, we assign weights to them. Initially, random weights are assigned, and these weights are adjusted as the network learns. This process of adjusting the weights is called ‘backward propagation of errors’, more commonly known as ‘backpropagation’. We’ll discuss this in detail later in the blog.
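The idea of weighting inputs can be sketched in a few lines of plain Python. This is an illustrative forward pass for one hidden layer, not how any particular framework implements it: each hidden neuron takes a weighted sum of all inputs (bias input included) and passes it through an activation function. The choice of the sigmoid activation, the uniform(-1, 1) range for the random starting weights, and the helper names are all assumptions made for this sketch.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Squash a weighted sum into the range (0, 1). Assumed activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(n_inputs: int, n_neurons: int, seed: int = 0) -> list[list[float]]:
    """Random starting weights: one row per hidden neuron, one weight per input."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_neurons)]


def hidden_layer(inputs: list[float], weights: list[list[float]]) -> list[float]:
    """Each neuron computes a weighted sum of all inputs and applies the sigmoid."""
    outputs = []
    for row in weights:
        if len(row) != len(inputs):
            raise ValueError("each weight row needs one weight per input")
        # A larger weight gives the corresponding input more influence on the neuron.
        z = sum(w * x for w, x in zip(row, inputs))
        outputs.append(sigmoid(z))
    return outputs


# Hypothetical sample: 3 attributes plus the constant bias input 1.0
inputs = [0.7, 0.2, 0.9, 1.0]
W = init_weights(n_inputs=len(inputs), n_neurons=2)
print(hidden_layer(inputs, W))
```

In the tiger example, training would ideally push the weights attached to stripe- and canine-related inputs up relative to those for less defining features such as the tail.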

The output layer

The output layer is the final component in the ANN architecture. The hidden layers link to the ‘output layer’, which receives connections from the hidden layers (or directly from the input layer, in a network without a hidden layer). It returns the output corresponding to the input variables: the nodes in the output layer combine the values they receive, using their own weights, to produce the final output values.
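As a rough sketch of that last step, the snippet below combines hypothetical hidden-layer activations into a single output for a yes/no task such as "Siberian tiger or not". Several details are assumptions for illustration only: a single output node, a sigmoid so the output reads as a score between 0 and 1, a separate `bias` parameter, a 0.5 decision threshold, and the made-up weights and activations.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def output_layer(hidden: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the hidden activations plus a bias, squashed to (0, 1)."""
    z = sum(w * h for w, h in zip(weights, hidden)) + bias
    return sigmoid(z)


def label(p: float, threshold: float = 0.5) -> str:
    """Turn the output score into a class label (assumed 0.5 threshold)."""
    return "Siberian tiger" if p >= threshold else "not a Siberian tiger"


hidden = [0.8, 0.3]  # hypothetical hidden-layer activations
p = output_layer(hidden, weights=[1.5, -0.5], bias=-0.2)
print(round(p, 3), label(p))
```

For multi-class problems, networks commonly use one output node per class instead of a single sigmoid node.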

The key to an effective ANN lies in finding an appropriate set of weights.
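To show what "finding the weights" can look like in practice, here is a minimal sketch of learning weights from random starting values by gradient descent. It deliberately uses the simplest possible case: a single sigmoid neuron with no hidden layer, trained with a cross-entropy loss on the logical OR function. Full backpropagation applies the same error-driven update, using the chain rule, through every hidden layer. The dataset, learning rate, epoch count and function names are assumptions for illustration.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], x: list[float]) -> float:
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))


def loss(weights: list[float], data) -> float:
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = predict(weights, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(data, epochs: int = 2000, lr: float = 0.5, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(len(data[0][0]))]  # random start
    for _ in range(epochs):
        for x, y in data:
            # For a sigmoid output with cross-entropy loss, dLoss/dz = prediction - target.
            error = predict(weights, x) - y
            # Nudge each weight against its gradient (error * input).
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


# Logical OR; the last value of each sample is the constant bias input 1.0
data = [([0.0, 0.0, 1.0], 0), ([0.0, 1.0, 1.0], 1),
        ([1.0, 0.0, 1.0], 1), ([1.0, 1.0, 1.0], 1)]
w = train(data)
print([round(predict(w, x), 2) for x, _ in data])
```

The loss falls from its value at the random starting weights as the updates accumulate, which is the "learning from mistakes" loop described earlier, reduced to one neuron.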