Introduction

The auxiliary classifier GAN (AC-GAN) is an extension of the class-conditional GAN in which the discriminator must not only predict whether an image is 'real' or 'fake' but also predict the class label of that image. It is useful for judging whether an image is real, and it is a fun extension of the basic GAN.

Auxiliary Classifier GAN

The Auxiliary Classifier GAN, or AC-GAN for short, is an extension of the conditional GAN that changes the discriminator to predict the class label of a given image instead of receiving it as input. It stabilizes the training process and allows the generation of large, high-quality images while learning a representation in the latent space that is independent of the class label.

Here we will discuss the following in detail:

- How the auxiliary classifier GAN is a conditional GAN that requires the discriminator to predict the class label of a given image.

- How to develop generator, discriminator, and composite models for the AC-GAN.

- How to train, evaluate, and use an AC-GAN to generate images from a suitable dataset.

For example, suppose the generator creates an image of a shoe. The discriminator has to predict whether the image is real or fake, and it also has to predict the class labels of both the real and the generated images.

AC-GAN Architecture

The AC-GAN architecture contains two models:

- Generator: It takes random points from a latent space as input and generates images.

- Discriminator: It classifies images as either real (from the dataset) or fake (generated) and also predicts the class label.

In AC-GAN, the training of the basic GAN model has been improved.

Here, the generator is given two inputs rather than one. It receives random points from the latent space and a class label, and uses them to generate an image for that class. Adding the class label as input makes image generation conditional on the class, which is why such models are called class-conditional. With this generator, the training process becomes more stable, and the model can be used to generate images of a specific type by choosing the class label.
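One common way to feed both inputs to a generator is to concatenate the noise vector with a one-hot encoding of the class label. The sketch below illustrates that idea only; the 100-dimensional noise size and the helper name `make_generator_input` are assumptions, chosen so that ten classes give a 110-value input.

```python
import torch
import torch.nn.functional as F

NOISE_DIM = 100   # assumed noise size (not stated in the text)
NUM_CLASSES = 10  # ten classes, as in the dataset used later

def make_generator_input(labels: torch.Tensor) -> torch.Tensor:
    """Concatenate random noise with one-hot class labels (hypothetical helper)."""
    noise = torch.randn(labels.size(0), NOISE_DIM)
    one_hot = F.one_hot(labels, num_classes=NUM_CLASSES).float()
    return torch.cat([noise, one_hot], dim=1)

labels = torch.tensor([0, 3, 9])   # the classes we want images for
z = make_generator_input(labels)
print(z.shape)  # torch.Size([3, 110])
```

The last ten values of each row carry the requested class, so choosing the label at sampling time selects which kind of image the generator is asked to produce.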

The discriminator here is given only an image; the class label is not provided as input. It has to classify the image as real or fake (as before), and it also has to predict the class label of the image. During training, the true class label serves as the target for this second prediction.

The objective function now has two parts:

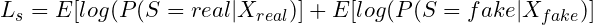

where,

LS = log-likelihood of predicting the correct source (real or fake).

S = Source

X = Input image

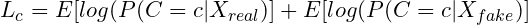

where,

LC = log-likelihood of predicting the correct class.

c = class label

X = Input image
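For reference, and assuming the two figures show the standard AC-GAN objective, the two terms are usually written as follows, with the expectations taken over real and generated images:

```latex
L_S = \mathbb{E}\left[\log P(S = \text{real} \mid X_{\text{real}})\right]
    + \mathbb{E}\left[\log P(S = \text{fake} \mid X_{\text{fake}})\right]

L_C = \mathbb{E}\left[\log P(C = c \mid X_{\text{real}})\right]
    + \mathbb{E}\left[\log P(C = c \mid X_{\text{fake}})\right]
```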

The models are trained with the following objectives:

- The discriminator is trained to maximize LC + LS.

- The generator is trained to maximize LC − LS.

As in a standard GAN, a 'minimax game' takes place here: the discriminator tries to maximize its reward (LC + LS), while the generator tries to maximize LC − LS, that is, to reduce the source term LS of the discriminator's reward and so increase the discriminator's loss. The additional class information helps train the model better and produces much better results than the earlier model.

Given the loss functions above, the generator and the discriminator 'fight' over these losses. Both try to maximize the class term LC. The source term LS, however, is a min-max problem: the generator tries to minimize it and fool the discriminator, while the discriminator tries to maximize it and keep the generator from gaining an advantage.
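In code, the two objectives are typically expressed as losses to minimize. The sketch below is one possible formulation, not a definitive one: it assumes the discriminator returns a sigmoid probability for the source and softmax probabilities for the class, and the function names are hypothetical. The generator term uses the common non-saturating substitute (labelling generated images as real) rather than literally maximizing LC − LS.

```python
import torch
from torch import nn

bce = nn.BCELoss()  # source loss, applied to sigmoid probabilities
nll = nn.NLLLoss()  # class loss, applied to log-probabilities
EPS = 1e-8          # avoids log(0) when converting softmax output to logs

def discriminator_loss(rf_real, c_real, y_real, rf_fake, c_fake, y_fake):
    """Minimizing this corresponds to maximizing LS + LC (batch averages)."""
    source = bce(rf_real, torch.ones_like(rf_real)) + bce(rf_fake, torch.zeros_like(rf_fake))
    cls = nll(torch.log(c_real + EPS), y_real) + nll(torch.log(c_fake + EPS), y_fake)
    return source + cls

def generator_loss(rf_fake, c_fake, y_fake):
    """Non-saturating variant: fakes should look real and carry the requested class."""
    source = bce(rf_fake, torch.ones_like(rf_fake))
    cls = nll(torch.log(c_fake + EPS), y_fake)
    return source + cls

# Dummy discriminator outputs for a batch of 4 images and 10 classes
torch.manual_seed(0)
rf_real, rf_fake = torch.rand(4, 1), torch.rand(4, 1)
c_real = torch.softmax(torch.randn(4, 10), dim=1)
c_fake = torch.softmax(torch.randn(4, 10), dim=1)
y_real = torch.randint(0, 10, (4,))
y_fake = torch.randint(0, 10, (4,))

d_loss = discriminator_loss(rf_real, c_real, y_real, rf_fake, c_fake, y_fake)
g_loss = generator_loss(rf_fake, c_fake, y_fake)
print(d_loss.item(), g_loss.item())
```

Both networks share the class term, which is why they cooperate on classification while competing on the source prediction.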

Comparing efficiency with earlier models:

In earlier models, increasing the number of classes while using the same model reduced the quality of the generated outputs. The AC-GAN approach allows a large dataset to be split into class-wise subsets, with generator and discriminator models trained on each subset separately. Structurally, this model is not very different from existing GAN models. However, the changes described above to the base GAN model tend to give excellent results and stabilize the training process.

A PyTorch model example to understand the code

Let's take a dataset as an example to help write our code. The dataset (CIFAR-10, as noted in the code comments below) contains 60,000 images of size 32x32. There are ten classes, each with 6,000 images.

The generator, written as a module:

    import torch
    from torch import nn

    class generator(nn.Module):
        # Generator model: maps a latent vector of length in_channels (e.g. 110)
        # to a 3x32x32 image with values in [-1, 1].
        def __init__(self, in_channels):
            super(generator, self).__init__()
            self.in_channels = in_channels
            self.fc1 = nn.Linear(in_channels, 384)
            # 384x1x1 -> 192x4x4
            self.t1 = nn.Sequential(
                nn.ConvTranspose2d(in_channels=384, out_channels=192, kernel_size=(4, 4), stride=1, padding=0),
                nn.BatchNorm2d(192),
                nn.ReLU()
            )
            # 192x4x4 -> 96x8x8
            self.t2 = nn.Sequential(
                nn.ConvTranspose2d(in_channels=192, out_channels=96, kernel_size=(4, 4), stride=2, padding=1),
                nn.BatchNorm2d(96),
                nn.ReLU()
            )
            # 96x8x8 -> 48x16x16
            self.t3 = nn.Sequential(
                nn.ConvTranspose2d(in_channels=96, out_channels=48, kernel_size=(4, 4), stride=2, padding=1),
                nn.BatchNorm2d(48),
                nn.ReLU()
            )
            # 48x16x16 -> 3x32x32
            self.t4 = nn.Sequential(
                nn.ConvTranspose2d(in_channels=48, out_channels=3, kernel_size=(4, 4), stride=2, padding=1),
                nn.Tanh()
            )

        def forward(self, x):
            # Flatten to (batch, in_channels); the original hard-coded 110 here.
            x = x.view(-1, self.in_channels)
            x = self.fc1(x)
            x = x.view(-1, 384, 1, 1)
            x = self.t1(x)
            x = self.t2(x)
            x = self.t3(x)
            x = self.t4(x)
            return x  # output of generator

Note that the transposed-convolution layers in the generator have carefully chosen parameters so that the output tensor has the same dimensions as a tensor from the training set. This matters because both are passed to the discriminator for evaluation.
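The size arithmetic can be checked by hand. The sketch below applies the standard output-size formula for a transposed convolution (with dilation 1 and no output padding) to the kernel, stride, and padding values used in the generator; the helper name `conv_transpose_out` is made up for illustration.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output side length of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) for the four transposed-convolution stages
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the generator reshapes its input to 384 x 1 x 1
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32]
```

The final side length of 32 matches the 32x32 training images, so real and generated batches have the same shape.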

The discriminator, also written as a module:

    import torch
    from torch import nn

    class discriminator(nn.Module):
        def __init__(self, classes=10):
            # we have 10 classes in the CIFAR dataset with 6000 images per class.
            super(discriminator, self).__init__()
            # 3x32x32 -> 16x16x16
            self.c1 = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=16, kernel_size=(3, 3), stride=2, padding=1),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            # 16x16x16 -> 32x16x16
            self.c2 = nn.Sequential(
                nn.Conv2d(in_channels=16, out_channels=32, kernel_size=(3, 3), stride=1, padding=1),
                nn.BatchNorm2d(32),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            # 32x16x16 -> 64x8x8
            self.c3 = nn.Sequential(
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=(3, 3), stride=2, padding=1),
                nn.BatchNorm2d(64),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            # 64x8x8 -> 128x8x8
            self.c4 = nn.Sequential(
                nn.Conv2d(in_channels=64, out_channels=128, kernel_size=(3, 3), stride=1, padding=1),
                nn.BatchNorm2d(128),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            # 128x8x8 -> 256x4x4
            self.c5 = nn.Sequential(
                nn.Conv2d(in_channels=128, out_channels=256, kernel_size=(3, 3), stride=2, padding=1),
                nn.BatchNorm2d(256),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            # 256x4x4 -> 512x4x4
            self.c6 = nn.Sequential(
                nn.Conv2d(in_channels=256, out_channels=512, kernel_size=(3, 3), stride=1, padding=1),
                nn.BatchNorm2d(512),
                nn.LeakyReLU(0.2),
                nn.Dropout(0.5)
            )
            self.fc_source = nn.Linear(4 * 4 * 512, 1)
            self.fc_class = nn.Linear(4 * 4 * 512, classes)
            self.sig = nn.Sigmoid()
            self.soft = nn.Softmax(dim=1)  # normalize over the class dimension

        def forward(self, x):
            x = self.c1(x)
            x = self.c2(x)
            x = self.c3(x)
            x = self.c4(x)
            x = self.c5(x)
            x = self.c6(x)
            x = x.view(-1, 4 * 4 * 512)
            # source of the data: generated (fake) or from the training set (real)
            rf = self.sig(self.fc_source(x))
            # class (label) of the data, i.e. which CIFAR10 label it belongs to
            c = self.soft(self.fc_class(x))
            return rf, c
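The `4*4*512` input size of the two linear heads follows from the convolution settings. As a rough check, the sketch below applies the usual convolution output-size formula to a 32x32 input using the six stride values from the discriminator (kernel 3, padding 1 throughout); `conv_out` is a hypothetical helper.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output side length of a standard convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

strides = [2, 1, 2, 1, 2, 1]  # strides of c1 .. c6
size = 32                     # input images are 32x32
for stride in strides:
    size = conv_out(size, kernel=3, stride=stride, padding=1)

flat_features = size * size * 512  # 512 channels after the last block
print(size, flat_features)  # 4 8192
```

Only the three stride-2 blocks halve the resolution (32 to 16 to 8 to 4); the stride-1 blocks keep it unchanged while widening the channels.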

For training, the number of epochs is set to 100, the learning rate to 0.0002, and the batch size to 100.
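A single training step with these settings might look roughly like the sketch below. It is only an illustration: `TinyG` and `TinyD` are small hypothetical stand-ins so the example runs quickly, the Adam optimizer and its `betas=(0.5, 0.999)` are assumptions (the text does not name an optimizer), and the stand-in discriminator returns log-probabilities for the class head rather than softmax probabilities.

```python
import torch
from torch import nn
import torch.nn.functional as F

EPOCHS, LR, BATCH_SIZE = 100, 0.0002, 100  # values quoted in the text
NOISE_DIM, NUM_CLASSES = 100, 10           # assumptions for this sketch

class TinyG(nn.Module):
    """Hypothetical stand-in generator: 110 inputs -> 3x32x32 image."""
    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(NOISE_DIM + NUM_CLASSES, 3 * 32 * 32), nn.Tanh())

    def forward(self, z):
        return self.net(z).view(-1, 3, 32, 32)

class TinyD(nn.Module):
    """Hypothetical stand-in discriminator with a source head and a class head."""
    def __init__(self):
        super().__init__()
        self.source = nn.Linear(3 * 32 * 32, 1)
        self.cls = nn.Linear(3 * 32 * 32, NUM_CLASSES)

    def forward(self, x):
        x = x.flatten(1)
        return torch.sigmoid(self.source(x)), torch.log_softmax(self.cls(x), dim=1)

gen, disc = TinyG(), TinyD()
opt_g = torch.optim.Adam(gen.parameters(), lr=LR, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(disc.parameters(), lr=LR, betas=(0.5, 0.999))
bce, nll = nn.BCELoss(), nn.NLLLoss()

def train_step(real_images, real_labels):
    n = real_images.size(0)
    ones, zeros = torch.ones(n, 1), torch.zeros(n, 1)
    fake_labels = torch.randint(0, NUM_CLASSES, (n,))
    z = torch.cat([torch.randn(n, NOISE_DIM),
                   F.one_hot(fake_labels, NUM_CLASSES).float()], dim=1)

    # Discriminator update: real batch targets 1, generated batch targets 0
    opt_d.zero_grad()
    rf_real, c_real = disc(real_images)
    rf_fake, c_fake = disc(gen(z).detach())  # detach: do not update the generator here
    d_loss = (bce(rf_real, ones) + bce(rf_fake, zeros)
              + nll(c_real, real_labels) + nll(c_fake, fake_labels))
    d_loss.backward()
    opt_d.step()

    # Generator update: fakes should be judged real and classified as requested
    opt_g.zero_grad()
    rf_fake, c_fake = disc(gen(z))
    g_loss = bce(rf_fake, ones) + nll(c_fake, fake_labels)
    g_loss.backward()
    opt_g.step()
    return d_loss.item(), g_loss.item()

# One step on a random batch standing in for real data
images = torch.rand(BATCH_SIZE, 3, 32, 32) * 2 - 1
labels = torch.randint(0, NUM_CLASSES, (BATCH_SIZE,))
d_loss, g_loss = train_step(images, labels)
print(d_loss, g_loss)
```

In a full run, this step would be repeated over every batch of the dataset for each of the `EPOCHS` epochs.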

Ideally, the number of epochs should be higher for proper image synthesis.

The beauty of GANs is that you can watch the model train through the images it produces. You can see structures taking shape across epochs as the model gradually learns the data distribution.