Introduction

Before we dive into the AWS Lambda foundations, let us first understand what AWS Lambda is.

Lambda is a compute service that allows you to run your code without the need for server provisioning or management. Lambda runs your code on a high-availability compute infrastructure and manages all aspects of it, including operating system and server maintenance, capacity provisioning and automatic scaling, code monitoring, and logging. You can use Lambda to run code for almost any type of application or backend service. All you have to do is write your code in one of Lambda's supported languages and you are ready to go.

Source: AWS

Next, we’ll discuss the AWS Lambda Foundations:

AWS Lambda Foundations

AWS Lambda Foundations covers several concepts, each of which is essential to running your application on AWS.

In this section, we discuss the major concepts, features, and architecture of Lambda. Let's start:

Source: Yupeng tang

Lambda Concepts

Function: In Lambda, a function is a resource that you can use to run your code. A function contains code that processes events that you pass into the function or that other AWS services send to it.

Events: A Lambda function processes an event, which is a JSON-formatted document containing data. The event is converted to an object by the runtime and passed to your function code. When you call a function, you get to choose the event's structure and content.

Example - Student Data
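As a minimal sketch of a custom event, the snippet below defines a student record as JSON and passes it to a handler. The field names (`studentId`, `name`, `course`, `marks`) and the handler logic are hypothetical and chosen only for illustration; the `json.loads` call stands in for the conversion that the Lambda runtime performs before it calls your code.

```python
import json

# Hypothetical custom event: you decide the structure when you invoke the function.
student_event_json = """
{
  "studentId": 101,
  "name": "Asha",
  "course": "Cloud Computing",
  "marks": {"theory": 82, "practical": 91}
}
"""


def lambda_handler(event, context):
    """Return the student's total marks from the custom event."""
    total = sum(event["marks"].values())
    return {"studentId": event["studentId"], "totalMarks": total}


# Locally we convert the JSON ourselves; in Lambda the runtime does this step.
event = json.loads(student_event_json)
result = lambda_handler(event, None)
print(result)
```

Because the caller controls the event here, the handler can rely on whatever fields the caller agrees to send.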


When an AWS service calls your function, the shape of the event is defined by the service.

Example service event – Amazon SNS notification
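A trimmed sketch of such an event is shown below. The overall layout (a `Records` list whose entries carry an `Sns` object with a `Message`) follows the shape Amazon SNS uses, but every value is a placeholder, and real events contain more fields than shown here (for example timestamps and signature data).

```python
# Trimmed Amazon SNS notification event with placeholder values.
sns_event = {
    "Records": [
        {
            "EventSource": "aws:sns",
            "EventVersion": "1.0",
            "EventSubscriptionArn": "arn:aws:sns:us-east-1:123456789012:example-topic:placeholder-id",
            "Sns": {
                "Type": "Notification",
                "MessageId": "placeholder-message-id",
                "TopicArn": "arn:aws:sns:us-east-1:123456789012:example-topic",
                "Subject": "TestInvoke",
                "Message": "Hello from SNS!",
            },
        }
    ]
}


def lambda_handler(event, context):
    """Collect the message text from every SNS record in the event."""
    return [record["Sns"]["Message"] for record in event["Records"]]


messages = lambda_handler(sns_event, None)
print(messages)
```

Here the service, not the caller, fixes the structure, so the handler reads the fields that SNS provides.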


Trigger: A Lambda function is invoked by a trigger, which is a resource or configuration. AWS services that you can configure to call a function and event source mappings are examples of triggers. In Lambda, an event source mapping is a resource that reads from a stream or queue and then calls a function.

Execution Environment: An execution environment provides a secure and isolated runtime environment for Lambda functions. It manages the processes and resources needed to run the function, and it supports the function's lifecycle as well as any extensions associated with the function.

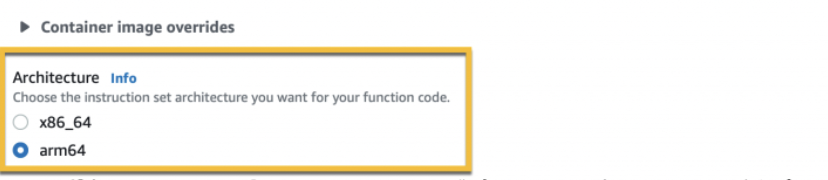

Instruction set architecture: The type of computer processor Lambda uses to run the function is determined by the instruction set architecture. Lambda offers the following instruction set architectures:

- for the AWS Graviton2 processor => arm64 – 64-bit ARM architecture.

- for x86-based processors => x86_64 – 64-bit x86 architecture.
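If function code needs to know which architecture it is running on, one possible approach is to map the machine name reported by the operating system to Lambda's architecture names. The helper `lambda_architecture` and its lookup table are assumptions made for this sketch, not part of any Lambda API.

```python
import platform

# Assumed mapping from OS-reported machine names to Lambda architecture names.
_MACHINE_TO_LAMBDA_ARCH = {
    "aarch64": "arm64",
    "arm64": "arm64",
    "x86_64": "x86_64",
    "amd64": "x86_64",
}


def lambda_architecture(machine=None):
    """Return 'arm64', 'x86_64', or 'unknown' for a machine name.

    When no name is given, the current machine is inspected.
    """
    machine = (machine or platform.machine()).lower()
    return _MACHINE_TO_LAMBDA_ARCH.get(machine, "unknown")


print(lambda_architecture())
```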

Deployment package: A deployment package is used to deploy Lambda function code. There are two types of deployment packages that Lambda supports:

- A .zip file archive that contains your function code and its dependencies. Lambda provides the operating system and runtime for your function.

- A container image that is compatible with the Open Container Initiative (OCI) specification. The Open Container Initiative is a non-profit organization dedicated to developing open industry standards for container formats and runtimes.

With a container image, you add your function code and dependencies to the image, and you must also include the operating system and a Lambda runtime.

Layer: A Lambda layer is a .zip file archive that can include additional code or content. A layer can contain libraries, a custom runtime, data, or configuration files.

Layers make it simple to package libraries and other dependencies that you can use with Lambda functions. The use of layers reduces the size of uploaded deployment archives and speeds up the deployment process. Layers also encourage code sharing and responsibilities separation, allowing you to iterate on business logic faster.

Extension: Lambda extensions allow you to extend the functionality of your functions. You can use extensions to connect your functions to your favourite monitoring, observability, security, and governance tools, for example. You can choose from a wide range of tools provided by AWS Lambda Partners or create your own Lambda extensions.

Concurrency: The number of requests served by your function at any given time is known as concurrency. Lambda creates an instance of your function to process the event when it is called. When the function code has completed its execution, it is ready to handle another request. If a request is still being processed when the function is called again, another instance is created, increasing the function's concurrency.
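To make the idea concrete, the sketch below computes peak concurrency from a list of request start and end times. It is a simplified local model (the function name `peak_concurrency` and the time values are made up), and it assumes an instance that finishes at time t can pick up a request that starts at time t.

```python
def peak_concurrency(requests):
    """Return the maximum number of overlapping requests.

    requests: list of (start, end) times for each invocation.
    """
    events = []
    for start, end in requests:
        events.append((start, 1))   # a request begins: one more instance busy
        events.append((end, -1))    # a request ends: that instance is free again
    # At equal timestamps, -1 sorts before +1, so a freed instance is reused.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


# Three requests, each lasting two time units, arriving one unit apart.
print(peak_concurrency([(0, 2), (1, 3), (2, 4)]))
```

In this example the third request arrives just as the first one finishes, so two instances are enough to serve all three.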

Qualifier: You can use a qualifier to specify a version or alias when calling or viewing a function. A version is an immutable snapshot of a function's code and configuration that has a numerical qualifier, for example my-function:1. An alias is a pointer to a version that you can update to map to a different version or to split traffic between two versions, for example my-function:BLUE. You can combine versions and aliases to give clients a consistent way to call your function.
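The naming convention can be illustrated with a small helper that splits a qualified name into its parts. `split_qualifier` is a hypothetical function written for this sketch; it handles plain function names only (not full ARNs, which contain additional colons) and treats a missing qualifier as `$LATEST`, the unpublished version.

```python
def split_qualifier(name):
    """Split 'function:qualifier' into (function_name, qualifier, kind)."""
    function_name, separator, qualifier = name.partition(":")
    if not separator or qualifier == "$LATEST":
        return function_name, "$LATEST", "unpublished"
    # Published versions are numbered; anything else is treated as an alias.
    kind = "version" if qualifier.isdigit() else "alias"
    return function_name, qualifier, kind


print(split_qualifier("my-function:1"))
print(split_qualifier("my-function:BLUE"))
print(split_qualifier("my-function"))
```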

Destination: An AWS resource to which Lambda can send events from an asynchronous invocation is known as a destination. For events that fail to process, you can specify a destination. Some services also provide a destination for successfully processed events.

Next, we’ll discuss the AWS Lambda Features:

AWS Lambda Features

For managing and invoking functions, Lambda provides a management console and API. It provides runtimes that support a common set of features, allowing you to switch quickly between languages and frameworks based on your requirements. In addition to functions, you can also create versions, aliases, layers, and custom runtimes.

Let us discuss each of these one by one:


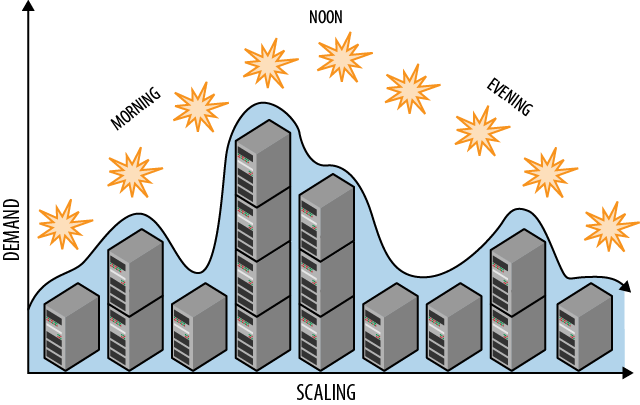

Scaling

Lambda is a service that manages the infrastructure that runs your code and scales automatically in response to requests. When your function is called more frequently than a single instance can process events, Lambda scales up by launching more instances. Inactive instances are frozen or stopped as traffic decreases. You only pay for the time your function takes to start up or process events.


Concurrency Controls

To make sure your production applications are highly available and responsive, use concurrency settings. Use reserved concurrency to set aside a portion of your account's available concurrency for a function and to prevent that function from consuming too much concurrency. Reserved concurrency divides the pool of available concurrency into subsets, and a function with reserved concurrency only uses concurrency from its own pool.
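The pool arithmetic can be sketched as follows. The account limit and the per-function reservations are illustrative numbers, and `unreserved_concurrency` is a hypothetical helper; the real service also enforces a minimum amount of unreserved concurrency, which this sketch ignores.

```python
def unreserved_concurrency(account_limit, reserved_by_function):
    """Return the concurrency left for functions without a reservation."""
    reserved_total = sum(reserved_by_function.values())
    if reserved_total > account_limit:
        raise ValueError("reservations exceed the account concurrency limit")
    return account_limit - reserved_total


# Illustrative values: an account limit of 1000 and two reservations.
reservations = {"orders": 200, "emails": 50}
shared_pool = unreserved_concurrency(1000, reservations)
print(shared_pool)
```

Under these assumptions, `orders` can never use more than 200 concurrent instances, and the remaining functions share whatever is left.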

Function URLs

Lambda has built-in support for HTTP(S) endpoints in the form of function URLs. A function URL gives your Lambda function a dedicated HTTP endpoint. After you've set up a function URL, you can use it to invoke your function from a web browser, curl, Postman, or any other HTTP client.

You can add a function URL to an existing function or create a new function with one.
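A handler behind a function URL might look like the sketch below. It assumes the HTTP request arrives as an event with `queryStringParameters` and a `requestContext.http.method` field, and that the handler answers with a `statusCode`, `headers`, and `body`; the greeting logic and the sample event are made up for illustration.

```python
import json


def lambda_handler(event, context):
    """Answer an HTTP request with a small JSON greeting."""
    method = event.get("requestContext", {}).get("http", {}).get("method", "GET")
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!", "method": method}),
    }


# Trimmed sample event standing in for a request like GET /?name=Lambda.
sample_event = {
    "rawPath": "/",
    "queryStringParameters": {"name": "Lambda"},
    "requestContext": {"http": {"method": "GET"}},
}
response = lambda_handler(sample_event, None)
print(response["body"])
```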

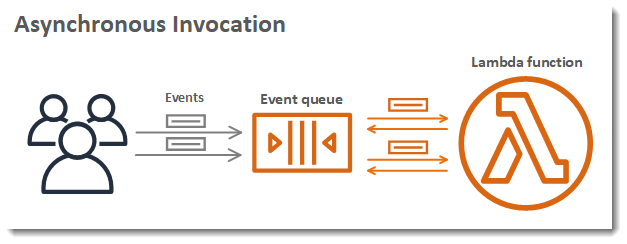

Asynchronous invocation

When you invoke a function, you can choose to call it synchronously or asynchronously. With synchronous invocation, you wait for the function to process the event and return a response. With asynchronous invocation, Lambda queues the event for processing and returns a response immediately.

Lambda handles retries for asynchronous invocations if the function returns an error or is throttled. You can configure error handling settings on a function, version, or alias to customize this behaviour. You can also tell Lambda to send events to a dead-letter queue if they fail to process or to send a record of any invocation to a destination.
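The queue-and-retry behaviour can be modelled locally with a few lines of code. This is a simplified in-memory simulation, not the Lambda service itself: `run_async_queue`, the sample handler, and the dead-letter list are all hypothetical, and the default of two retries is an assumption of the sketch that mirrors Lambda's default for function errors.

```python
from collections import deque


def run_async_queue(handler, events, max_retries=2):
    """Process queued events, retrying failures, and collect dead letters."""
    queue = deque((event, 0) for event in events)
    succeeded, dead_letters = [], []
    while queue:
        event, attempts = queue.popleft()
        try:
            succeeded.append(handler(event))
        except Exception as error:
            if attempts < max_retries:
                queue.append((event, attempts + 1))  # put back for a retry
            else:
                dead_letters.append(
                    {"event": event, "error": str(error), "attempts": attempts + 1}
                )
    return succeeded, dead_letters


def handler(event):
    """Sample handler that rejects events flagged as bad."""
    if event.get("bad"):
        raise ValueError("cannot process")
    return event["id"]


ok, dead = run_async_queue(handler, [{"id": 1}, {"id": 2, "bad": True}])
print(ok, dead)
```

The caller of `run_async_queue` never sees the handler's exception, which is the key difference from a synchronous call: failures surface only through the dead-letter list.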

Event Source Mappings

You can create an event source mapping to process items from a stream or queue. An event source mapping in Lambda is a resource that reads items from an Amazon Simple Queue Service (Amazon SQS) queue, an Amazon Kinesis stream, or an Amazon DynamoDB stream in batches and sends them to your function. Each event that your function handles can contain hundreds or thousands of items.
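A batch handler for an SQS event source mapping could be sketched like this. The event layout (`Records` entries with `messageId` and `body`) follows the SQS event shape, while `process_order` and the sample messages are hypothetical. Returning `batchItemFailures` only has an effect when partial batch responses (`ReportBatchItemFailures`) are enabled on the mapping.

```python
import json


def process_order(order):
    """Hypothetical business logic: reject orders without a positive quantity."""
    if order.get("quantity", 0) <= 0:
        raise ValueError("quantity must be positive")


def lambda_handler(event, context):
    """Process each record in the batch and report the ones that failed."""
    failures = []
    for record in event["Records"]:
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


# Trimmed sample batch; real SQS records carry more fields.
sample_batch = {
    "Records": [
        {"messageId": "m1", "body": '{"item": "book", "quantity": 2}'},
        {"messageId": "m2", "body": "not json"},
        {"messageId": "m3", "body": '{"item": "pen", "quantity": 0}'},
    ]
}
batch_result = lambda_handler(sample_batch, None)
print(batch_result)
```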

Destinations

A destination is an Amazon Web Services resource that receives function invocation records. You can configure Lambda to send invocation records to a queue, topic, function, or event bus for asynchronous invocation. You can set up different destinations for successful invocations and failed invocations. The invocation record describes the event, the function's response, and the reason for sending the record.
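A consumer of invocation records might summarize them as in the sketch below. The record is a trimmed example modelled on the invocation record format, with placeholder values throughout; `summarize_invocation_record` is a hypothetical helper, and it assumes a failed invocation is marked by a `functionError` entry in `responseContext`.

```python
def summarize_invocation_record(record):
    """Reduce an invocation record to the fields most useful for triage."""
    request_context = record["requestContext"]
    failed = "functionError" in record.get("responseContext", {})
    return {
        "request_id": request_context["requestId"],
        "outcome": "failure" if failed else "success",
        "reason": request_context["condition"],
        "attempts": request_context["approximateInvokeCount"],
    }


# Trimmed sample record for a failed invocation (placeholder values).
sample_record = {
    "version": "1.0",
    "requestContext": {
        "requestId": "example-request-id",
        "functionArn": "arn:aws:lambda:us-east-1:123456789012:function:my-function:$LATEST",
        "condition": "RetriesExhausted",
        "approximateInvokeCount": 3,
    },
    "requestPayload": {"id": 2},
    "responseContext": {"statusCode": 200, "functionError": "Unhandled"},
    "responsePayload": {"errorMessage": "cannot process"},
}
summary = summarize_invocation_record(sample_record)
print(summary)
```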

Function Blueprints

You can start from scratch, use a blueprint, use a container image, or deploy an application from the AWS Serverless Application Repository when creating a function in the Lambda console. A blueprint is a piece of code that demonstrates how to use Lambda with an AWS service or a well-known third-party app. Blueprints for Node.js and Python runtimes include sample code and function configuration presets.

Tools for testing and deployment

Lambda allows you to deploy code either as a .zip file archive or as a container image. AWS services and popular community tools, such as the Docker command line interface (CLI), provide a rich ecosystem for authoring, building, and deploying Lambda functions.

Templates for applications

The Lambda console can be used to build an application with a continuous delivery pipeline. Application templates in the Lambda console include code for one or more functions, an application template that defines functions and supporting AWS resources, and an infrastructure template that defines an AWS CodePipeline pipeline. Every time you push changes to the included Git repository, the pipeline's build and deploy stages run.

This list is not exhaustive; AWS Lambda provides more functionality and features.

Now, let us discuss the Programming Model of AWS Lambda:

Lambda Programming Model

Lambda offers a programming model that is shared across all runtimes. The programming model specifies how your code interacts with the Lambda system. By defining a handler in the function configuration, you tell Lambda where your function starts. The handler receives objects from the runtime that contain the invocation event as well as context information like the function name and request ID.
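A minimal sketch of a Python handler that reads both arguments is shown below. In the Python runtime the context object exposes attributes such as `function_name` and `aws_request_id`; the `SimpleNamespace` object here is only a local stand-in so the handler can be exercised outside Lambda, and its values are placeholders.

```python
from types import SimpleNamespace


def lambda_handler(event, context):
    """Echo context details together with the keys of the incoming event."""
    return {
        "function": context.function_name,
        "request_id": context.aws_request_id,
        "keys": sorted(event),
    }


# Local stand-in for the context object that the runtime would supply.
fake_context = SimpleNamespace(
    function_name="my-function",
    aws_request_id="example-request-id",
)
handler_result = lambda_handler({"b": 1, "a": 2}, fake_context)
print(handler_result)
```

In the function configuration, a handler like this would typically be referenced by module and function name, for example `lambda_function.lambda_handler` if the file is named `lambda_function.py`.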

After the handler finishes processing the first event, the runtime sends it another. Your function's class stays in memory, so clients and variables that are declared outside of the handler method, in initialization code, can be reused.

Create reusable resources like AWS SDK clients during initialization to save processing time on subsequent events. Each instance of your function, once initialised, can handle thousands of requests as we've discussed in the Lambda features.
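The reuse pattern can be sketched as follows. `_create_client` is a hypothetical stand-in for an expensive resource such as an AWS SDK client; the point is only that module-level code runs once per function instance, while the handler runs once per event.

```python
import time


def _create_client():
    """Stand-in for an expensive resource such as an AWS SDK client."""
    return {"created_at": time.time()}


# Initialization code: runs once when the function instance starts.
CLIENT = _create_client()
invocation_count = 0


def lambda_handler(event, context):
    """Reuse the module-level client instead of rebuilding it per event."""
    global invocation_count
    invocation_count += 1
    return {"client_id": id(CLIENT), "invocation": invocation_count}


first = lambda_handler({}, None)
second = lambda_handler({}, None)
print(first["client_id"] == second["client_id"])
```

Both invocations see the same client object, which is why work done during initialization is not repeated for later events on the same instance.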

Moreover, your function also has access to the /tmp directory for local storage. Request-serving instances of your function are kept active for a few hours before being recycled.

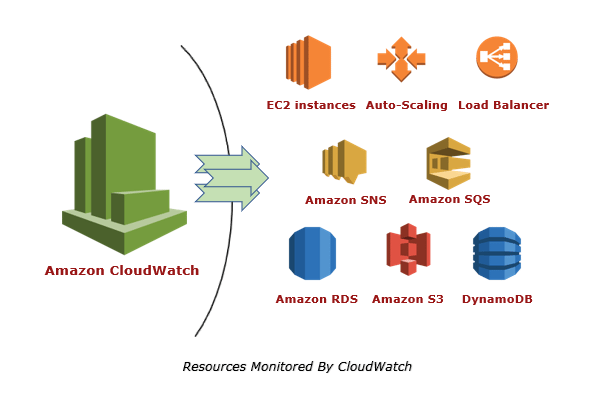

Your function's logging output is captured by the runtime and sent to Amazon CloudWatch Logs.

The runtime logs entries when function invocation begins and ends, in addition to logging the output of your function. A report log with the request ID, billed duration, initialization duration, and other information is included.
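When analysing logs, it can be handy to pull the numbers out of a report line. The sketch below assumes the line has the form `REPORT RequestId: ... Duration: ... ms Billed Duration: ... ms ...`; the sample line uses placeholder values, and `parse_report_line` is a hypothetical helper rather than an AWS-provided function.

```python
import re

# Longer labels are listed before "Duration" so they are matched as a whole.
_FIELD = re.compile(
    r"(RequestId|Billed Duration|Init Duration|Duration|Memory Size|Max Memory Used): (\S+)"
)


def parse_report_line(line):
    """Extract the request ID and numeric metrics from a REPORT log line."""
    if not line.startswith("REPORT"):
        raise ValueError("not a REPORT line")
    fields = {}
    for label, value in _FIELD.findall(line):
        fields[label] = value if label == "RequestId" else float(value)
    return fields


sample_line = (
    "REPORT RequestId: example-request-id\tDuration: 12.34 ms\t"
    "Billed Duration: 13 ms\tMemory Size: 128 MB\t"
    "Max Memory Used: 67 MB\tInit Duration: 223.10 ms"
)
report = parse_report_line(sample_line)
print(report)
```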

You may visit the official AWS Lambda programming model documentation to learn more about the programming interface and supported languages.

Next, we’ll discuss the Lambda instruction set architecture:

Recall from the Lambda Concepts section above that Lambda offers two instruction set architectures:

- for the AWS Graviton2 processor => arm64 – 64-bit ARM architecture.

- for x86-based processors => x86_64 – 64-bit x86 architecture.

In this section, we’ll be exploring these two in detail:

Lambda Instruction Set Architecture

arm64 – 64-bit ARM architecture

ARM originally stood for Acorn RISC Machine. It is a family of RISC (Reduced Instruction Set Computer) architectures for computer processors that can be configured for a variety of environments. ARM processor cores are used in the majority of mobile devices and embedded systems.

The ARM64 processor is a development of the ARM architecture that is used in servers, desktop computers, and the internet of things (IoT). New technologies such as high-resolution displays, realistic 3D gaming, and voice recognition all place increased processing demands on ARM64 processors.

x86_64 – 64-bit x86 architecture, for x86-based processors

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The Intel 8086 microprocessor was released in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than a simple 16-bit address could cover. The term "x86" was coined because the names of several successors to Intel's 8086 processor end in "86", such as the 80186, 80286, 80386, and 80486 processors.

Advantages of using arm64 architecture over x86 architecture

- Lambda functions built on the arm64 architecture (AWS Graviton2 processor) can offer significantly better price and performance than those built on the x86_64 architecture. For compute-intensive applications like high-performance computing, video encoding, and simulation workloads, arm64 is a good choice.

- The Graviton2 CPU is based on the Neoverse N1 core and supports Armv8.2 (with CRC and crypto extensions) as well as a number of other architectural extensions.

- Graviton2 improves the latency performance of web and mobile backends, microservices, and data processing systems by providing a larger L2 cache per vCPU, which reduces memory read time. Graviton2 also improves encryption performance and includes instruction sets that reduce CPU-based machine learning inference latency.

Now, let us discuss some FAQs based on the above discussion: