Introduction

Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. In this article, we will learn what Azure Databricks is and what its main features are, and then see how to create an Azure Databricks service.

Azure Databricks

Azure Databricks is an Apache Spark-based platform that provides the latest Apache Spark versions and integrates easily with open-source libraries. We can spin up clusters and build quickly in a fully managed Apache Spark environment with the global scale and availability of Azure.

A few of the most important features of Azure Databricks are:

- Workspace - Azure Databricks provides a collaborative workspace that allows different people to work simultaneously.

- Runtime - The Azure Databricks runtime includes Apache Spark along with regularly released updates that improve security and performance for big data workloads and analytics.

- Databricks File System (DBFS) - This is an abstraction layer on top of object storage that lets us mount storage such as Azure Blob Storage and access the data as if it were stored on our local file system.
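To make the DBFS idea a little more concrete, here is a minimal Python sketch of how a `dbfs:/` path corresponds to the local-style `/dbfs/...` path that Databricks exposes on cluster nodes. The helper name `dbfs_to_local`, the mount point `/mnt/demo`, and the file name are hypothetical and used only for illustration.

```python
def dbfs_to_local(dbfs_path: str) -> str:
    """Translate a 'dbfs:/...' URI into the '/dbfs/...' local-style path."""
    prefix = "dbfs:/"
    if not dbfs_path.startswith(prefix):
        raise ValueError(f"not a DBFS path: {dbfs_path!r}")
    # Drop the scheme and any extra leading slashes, then re-root under /dbfs.
    return "/dbfs/" + dbfs_path[len(prefix):].lstrip("/")


# Hypothetical mount point and file name, for illustration only.
print(dbfs_to_local("dbfs:/mnt/demo/sales.csv"))
```

On a real cluster, the translated path could then be opened with ordinary file APIs, which is what "accessing data as if it were local" amounts to in practice.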


Create an Azure Databricks service

To create a Databricks service, we need an Azure subscription, but we can use it for free by registering for a free trial.

Sign in to the Azure portal at https://portal.azure.com, open the create resource box, and search for Azure Databricks in the search bar as indicated below:

Now, click on the Create button and fill in the following settings:

- Subscription - Choose the subscription that we have from the drop-down.

- Resource group - We are using the resource group azsqlshackrg, which we have already created. We can also create a new one as per our needs.

- Workspace name - Here, we have to specify the workspace name (azdatabricks in this example).

- Location - We have to specify the region where we want to deploy our service (East US in this example). It has little impact for this demo, but it is important for the premium tier and large business deployments.

- Pricing Tier - The pricing tier that we are interested in (Premium in this example).
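The same settings could also be supplied from the command line instead of the portal. The sketch below only assembles, and does not run, an `az databricks workspace create` command from the values used in this walkthrough. It assumes the Azure CLI and its Databricks extension are installed and that the desired subscription is already active in the CLI. The helper name `workspace_create_command` is hypothetical.

```python
import shlex


def workspace_create_command(resource_group, name, location, sku):
    """Build (but do not run) an Azure CLI command that creates a workspace."""
    return [
        "az", "databricks", "workspace", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--location", location,
        "--sku", sku,
    ]


# Values from the walkthrough; "eastus" is the CLI name for East US.
cmd = workspace_create_command("azsqlshackrg", "azdatabricks", "eastus", "premium")
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, so it could later be handed to `subprocess.run` without any shell quoting issues.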

Now, to create the service, we need to click on the Review + Create button; the review section will then show us all the settings we have made.

Now click on the Create button as shown below:

Source: Microsoft

Once the service has been created, select "Go to resource" from the notification tab to open the service we just created:

On the portal, we can see information about our Databricks service, such as the URL, pricing details, etc.

Now, we will create a cluster; for this, we must launch the Workspace from the Azure Databricks page in the portal.


The homepage of the Databricks portal is shown in the screenshot below. From here, we can create notebooks and manage our documents on the Workspace tab. We can also create tables and databases using the Data tab as shown below:

We can also connect to Kafka, Azure Blob Storage, Cassandra, and other data sources. In the vertical list of options, select Clusters:


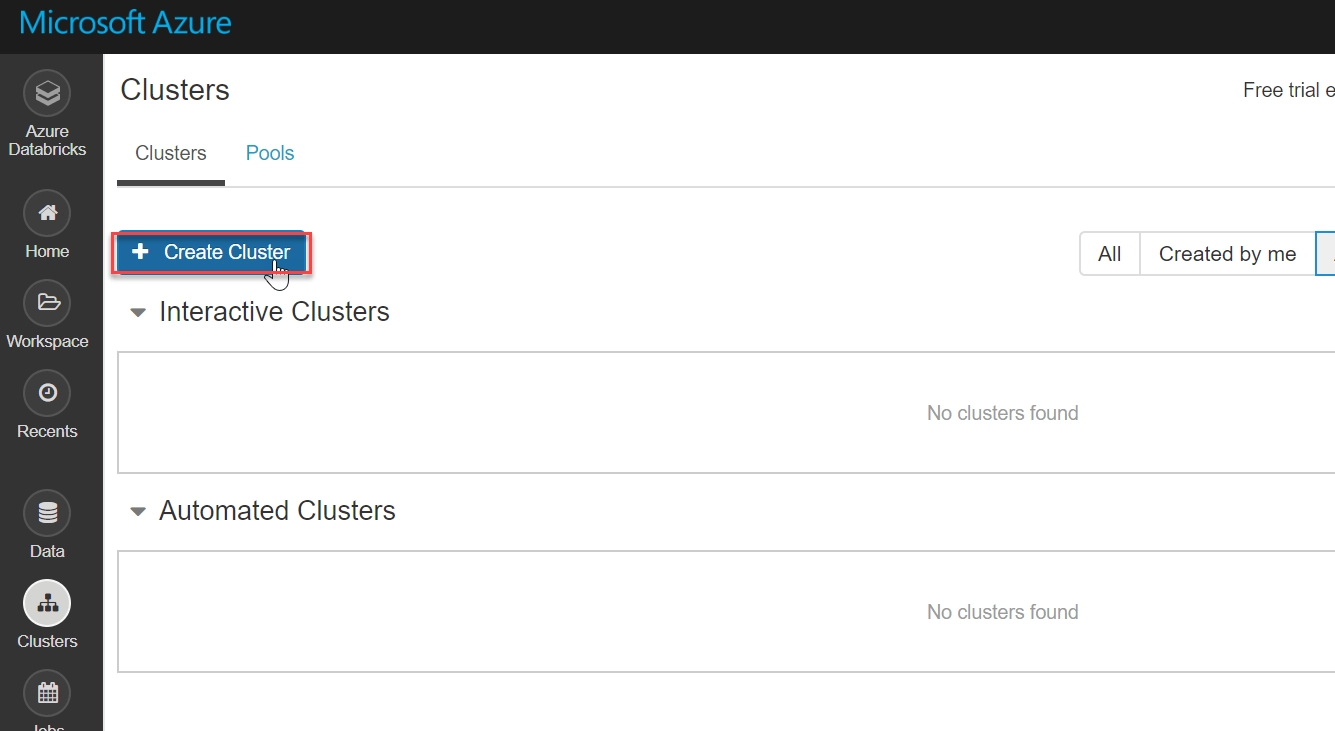

As a next step, we need to create a Spark cluster. Databricks offers autoscaling, which adjusts the cluster's resources to match demand. Now go to the Clusters page and click Create Cluster, as shown in the screenshot below:


A few setup options for creating a Databricks cluster are shown in the following screenshot. The settings I use to create the cluster are:

- Runtime 5.5 (data processing engine).

- Python 2.

- The Standard F4s series, which is enough for a light workload.

I do not enable autoscaling because this is a demo, and I also leave disabled the option that terminates the cluster after it has been idle for 120 minutes.
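For readers who prefer scripting over the UI, the settings above map roughly onto a request body for the Databricks Clusters API create endpoint. The sketch below only builds that body and does not send it. The cluster name, the worker count, and the version key `5.5.x-scala2.11` are assumptions made for illustration, and the Python 2 choice from the UI is not represented here.

```python
import json


def build_cluster_spec(name, spark_version, node_type_id, num_workers,
                       autotermination_minutes=0):
    """Return a request body for the Clusters API create endpoint."""
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Fixed worker count, i.e. autoscaling is not used.
        "num_workers": num_workers,
        # 0 explicitly disables automatic termination.
        "autotermination_minutes": autotermination_minutes,
    }


spec = build_cluster_spec(
    name="demo-cluster",               # hypothetical name
    spark_version="5.5.x-scala2.11",   # assumed version key for runtime 5.5
    node_type_id="Standard_F4s",
    num_workers=1,                     # assumed; not stated in the walkthrough
)
print(json.dumps(spec, indent=2))
```

To turn autoscaling on instead, the fixed `num_workers` entry would be replaced by an `autoscale` object with `min_workers` and `max_workers`, and `autotermination_minutes` could be set to 120 to match the idle timeout mentioned above.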

Finally, on the New Cluster page, click on the Create Cluster button to get started:


We can configure our cluster the way we like. Many of the cluster configurations, including Advanced Options, are described on this Microsoft reference page in great detail.

The status of the cluster is shown as Pending in the screenshot below. It takes only a short time to create because it is provisioned on cloud infrastructure.
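When cluster creation is scripted, the pending phase is usually handled by polling the cluster state until it changes. The following is a minimal sketch of such a loop. It takes any function that returns the current state string, so it makes no assumptions about how the state is fetched. The helper name `wait_until_running` is hypothetical, and the state names are the ones the Clusters API reports as far as I know.

```python
import time


def wait_until_running(get_state, poll_seconds=10.0, timeout_seconds=600.0,
                       sleep=time.sleep):
    """Poll get_state() until it returns 'RUNNING'.

    get_state is any zero-argument callable returning the cluster state string.
    """
    waited = 0.0
    while True:
        state = get_state()
        if state == "RUNNING":
            return state
        if state in ("TERMINATED", "ERROR"):
            raise RuntimeError(f"cluster ended in state {state}")
        if waited >= timeout_seconds:
            raise TimeoutError(f"cluster still {state} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


# Stand-in for a real status lookup: two PENDING answers, then RUNNING.
fake_states = iter(["PENDING", "PENDING", "RUNNING"])
print(wait_until_running(lambda: next(fake_states), sleep=lambda s: None))
```

Passing the `sleep` function as a parameter keeps the loop easy to try out without actually waiting.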


Ta-da! We have successfully created a cluster that is up and running.


Databricks is a fully managed service, which means that the cluster's resources are deployed to a locked resource group, databricks-RG-azdatabricks-3 ... As shown in the screenshot below, the VM, disk, and other network-related resources for the azdatabricks service are created there:


In this managed resource group, we can also see that a dedicated storage account has been deployed:


Create a notebook in the Spark cluster

A notebook is a web-based interface that runs on the Spark cluster and allows us to write code and display results in various languages.

We can create notebooks and run Spark jobs once the cluster starts.

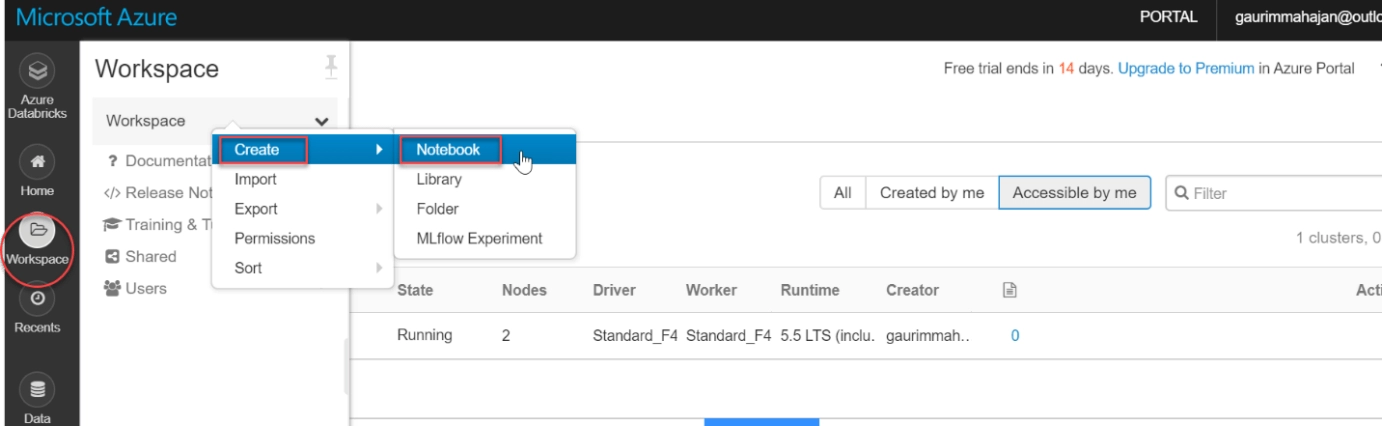

Now we have to click on the Create button under the Workspace tab in the left-hand menu bar and select the Notebook option. For reference, see the image below:


Now we have to give the notebook a name. It should be descriptive so that anyone can get an idea of what the notebook does just by reading the name. After this, in the Create Notebook dialog box, we need to select a language (SQL, Scala, Python, or R) and the cluster name. Then we click on the Create button, which adds a notebook to the Spark cluster that we just created.
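The notebook name and language chosen in the dialog have a rough equivalent in the Databricks Workspace API, which can import a notebook from source text. The sketch below only builds the request body and does not send it. The workspace path, the notebook name, and the one-line notebook content are hypothetical, and attaching the notebook to a cluster is not covered by this body.

```python
import base64
import json


def build_notebook_import(path, language, source_code):
    """Return a request body for importing a notebook from source text."""
    allowed = {"PYTHON", "SCALA", "SQL", "R"}
    if language not in allowed:
        raise ValueError(f"language must be one of {sorted(allowed)}")
    return {
        "path": path,
        "format": "SOURCE",
        "language": language,
        # The API expects the notebook content base64-encoded.
        "content": base64.b64encode(source_code.encode("utf-8")).decode("ascii"),
        "overwrite": False,
    }


body = build_notebook_import(
    path="/Users/someone@example.com/daily-sales-report",  # hypothetical path
    language="PYTHON",
    source_code="print('hello from the cluster')\n",
)
print(json.dumps(body, indent=2))
```

A descriptive path such as the one above serves the same purpose as a descriptive notebook name in the dialog box.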
