Working of Backpropagation Algorithm

The backpropagation algorithm works in the following steps:

1. Forward Propagation: In this step, the input data is fed into the neural network and passed through its layers of neurons. Each layer computes a weighted sum of its inputs and applies an activation function, and the output of the final layer is the network's prediction.

2. Error Calculation: In this step, a loss function measures the difference between the predicted output and the actual target values.

3. Backward Propagation: In this step, the algorithm computes the gradient of the loss function with respect to each weight by applying the chain rule. This involves:

- Calculating Gradients: Derivatives of the loss function with respect to the network's weights are computed layer by layer, starting at the output layer and moving backward through the network.

- Updating Weights: The weights are then adjusted using gradient descent or one of its variants (e.g., Adam) based on the calculated gradients, which reduces the loss.

4. Iteration: Forward propagation, error calculation, and backward propagation are repeated for many epochs until the loss stops improving or a fixed number of epochs is reached. In practice, this yields a set of weights with low loss, usually a good local minimum rather than a guaranteed global optimum.
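To make the four steps concrete, here is a minimal sketch in plain Python (standard library only). It trains a single sigmoid neuron with mean squared error and full-batch gradient descent. The toy OR dataset, the starting weights, the learning rate of 1.0, and the function name `train` are illustrative assumptions, not part of the algorithm description above. The comments map each line back to the steps.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=1000, lr=1.0):
    """Train one sigmoid neuron with mean squared error and plain gradient descent."""
    w1, w2, b = 0.1, -0.1, 0.0  # small non-zero starting weights (assumed values)
    history = []
    for _ in range(epochs):
        loss = 0.0
        g_w1 = g_w2 = g_b = 0.0
        for (x1, x2), y in data:
            # 1. Forward propagation: weighted sum, then activation
            z = w1 * x1 + w2 * x2 + b
            y_hat = sigmoid(z)
            # 2. Error calculation: squared error for this example
            loss += (y_hat - y) ** 2
            # 3a. Backward propagation (chain rule):
            #     dL/dz = dL/dy_hat * dy_hat/dz = 2(y_hat - y) * y_hat(1 - y_hat)
            dz = 2.0 * (y_hat - y) * y_hat * (1.0 - y_hat)
            g_w1 += dz * x1  # dz/dw1 = x1
            g_w2 += dz * x2  # dz/dw2 = x2
            g_b += dz        # dz/db = 1
        n = len(data)
        # 3b. Update weights with gradient descent on the averaged gradient
        w1 -= lr * g_w1 / n
        w2 -= lr * g_w2 / n
        b -= lr * g_b / n
        # 4. Iterate, recording the mean loss measured during this epoch
        history.append(loss / n)
    return (w1, w2, b), history

# Hypothetical toy task: the logical OR function
OR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
params, history = train(OR_DATA)
print(f"first epoch loss {history[0]:.4f}, last epoch loss {history[-1]:.4f}")
```

The loss in the first epoch is close to 0.25, because every prediction starts near 0.5. It then falls steadily as the gradients push the weights toward a separating solution.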

How to Set the Model Components for a Backpropagation Neural Network

To set up a backpropagation neural network, follow these steps:

- Define Network Architecture: Specify the number of layers, the number of neurons in each layer, and the type of activation functions (e.g., ReLU, sigmoid, tanh).

- Initialize Weights: Set initial values for weights and biases, typically using random values to break symmetry and promote effective learning.

- Choose a Loss Function: Select an appropriate loss function for the task (e.g., cross-entropy loss for classification, mean squared error for regression).

- Select an Optimization Algorithm: Choose an optimizer (e.g., stochastic gradient descent, Adam) to update weights based on the computed gradients.

- Set Hyperparameters: Define the learning rate, batch size, and number of epochs. These parameters control the training process and convergence.

- Prepare Data: Split the dataset into training, validation, and test sets. Normalize or preprocess the data as needed to ensure effective training.

- Train the Model: Execute the backpropagation algorithm through multiple iterations, updating weights and monitoring performance metrics (e.g., accuracy, loss) to assess progress.
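The setup steps above can be sketched as follows. This is a hypothetical configuration, not a fixed recipe. The `TrainConfig` fields, the layer sizes, and the 70/15/15 split are assumptions chosen for illustration. The He-style weight scaling is also an assumption: it is a common choice for ReLU layers, swapped in here as one example of random initialization.

```python
import random
from dataclasses import dataclass

@dataclass
class TrainConfig:
    """Hypothetical settings for a small classifier (illustrative values only)."""
    layer_sizes: tuple = (4, 8, 3)   # architecture: input, hidden, output sizes
    hidden_activation: str = "relu"
    loss: str = "cross_entropy"      # classification task assumed
    optimizer: str = "sgd"
    learning_rate: float = 0.01
    batch_size: int = 32
    epochs: int = 20

def init_weights(layer_sizes, seed=0):
    """Random weights break symmetry between neurons; biases can start at zero."""
    rng = random.Random(seed)
    params = []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        std = (2.0 / fan_in) ** 0.5  # He-style scaling, often paired with ReLU
        W = [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]
        params.append((W, [0.0] * fan_out))
    return params

def split(items, train=0.7, val=0.15, seed=0):
    """Shuffle, then split into training, validation, and test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

def standardize(values):
    """Scale one feature to zero mean and unit variance (a zero std is treated as 1)."""
    mean = sum(values) / len(values)
    std = (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5 or 1.0
    return [(v - mean) / std for v in values]

cfg = TrainConfig()
params = init_weights(cfg.layer_sizes)
train_set, val_set, test_set = split(range(100))
print(len(train_set), len(val_set), len(test_set))
```

In a real project, compute the normalization statistics on the training split only. Then reuse those statistics for the validation and test splits so that no information leaks from held-out data.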

Advantages of Using the Backpropagation Algorithm in Neural Networks

- Efficiency: Backpropagation uses the chain rule to compute the gradient of the loss with respect to every weight in a single backward pass, reusing intermediate results from the forward pass. This makes it feasible to train large and deep neural networks.

- Convergence: The gradients it provides let an optimizer such as gradient descent move the weights toward values that reduce the error, improving the model's performance.

- Flexibility: The algorithm can be adapted to various types of neural networks, including feedforward, convolutional, and recurrent networks.

- Scalability: It can handle large datasets and complex models, thanks to advances in optimization techniques and computational resources.

Limitations of Using the Backpropagation Algorithm in Neural Networks

- Computational Intensity: Training large networks can be computationally expensive and time-consuming, requiring significant processing power and memory.

- Vanishing and Exploding Gradients: In deep networks, gradients may become too small or too large during backpropagation, hindering learning and affecting convergence.

- Local Minima: The algorithm may get stuck in local minima, leading to suboptimal solutions. Techniques like momentum and advanced optimizers can help mitigate this issue.

- Overfitting: Without proper regularization and validation, the model may overfit the training data, resulting in poor generalization to new, unseen data.
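The vanishing and exploding gradient problem follows directly from the chain rule. The gradient that reaches an early layer is a product of one factor per later layer. The sketch below assumes a simplified chain of single sigmoid units that all share the same weight and pre-activation (a hypothetical setup for illustration), so each factor is `weight * sigmoid'(z)`. Because the sigmoid derivative is at most 0.25, the product shrinks quickly with depth unless the weights are large. With large weights, it can grow instead.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # at most 0.25, reached at z = 0

def backprop_factor(depth, weight=1.0, z=0.0):
    """Product of the per-layer chain-rule factors weight * sigmoid'(z) over `depth` layers.

    Assumes a toy chain of single-unit layers that share the same weight and
    pre-activation z; real networks multiply Jacobian matrices instead.
    """
    factor = 1.0
    for _ in range(depth):
        factor *= weight * sigmoid_grad(z)
    return factor

for depth in (1, 5, 10, 20):
    print(depth, backprop_factor(depth), backprop_factor(depth, weight=5.0))
```

With `weight=1.0` and `z=0.0`, the factor drops from 0.25 at depth 1 to below 1e-12 at depth 20 (vanishing). With `weight=5.0`, each factor becomes 1.25, and the product grows with depth (exploding).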

Types of Backpropagation

There are two types of backpropagation:

Static Backpropagation

This type of backpropagation maps a static input to a static output. Networks trained this way are used to solve problems such as optical character recognition.

Recurrent Backpropagation

This type of backpropagation is used in networks for fixed-point learning. The activations are fed forward until they reach a fixed value. An error is then calculated and propagated backward.
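As a rough illustration of fixed-point learning, the sketch below reduces the idea to one recurrent tanh unit. Its activation is fed back until it settles at `a = tanh(w*a + x)`. The error is then propagated backward by relaxing an error signal to its own fixed point. The scalar setup, the squared-error loss, and the values of `w`, `x`, and `target` are assumptions for this example, and the result is checked against a finite-difference estimate. Keeping `|w| < 1` guarantees that both relaxation loops converge here.

```python
import math

def relax(w, x, tol=1e-12, max_iter=10_000):
    """Forward phase: feed the activation back in until it settles at a = tanh(w*a + x)."""
    a = 0.0
    for _ in range(max_iter):
        a_next = math.tanh(w * a + x)
        if abs(a_next - a) < tol:
            return a_next
        a = a_next
    raise RuntimeError("activation did not reach a fixed point")

def fixed_point_grad(w, x, target, tol=1e-12, max_iter=10_000):
    """dL/dw for L = 0.5 * (a* - target)**2, where a* is the fixed point found by relax()."""
    a = relax(w, x, tol, max_iter)
    d = 1.0 - a * a  # tanh'(w*a + x), written in terms of a
    # Backward phase: relax the error signal delta = (a - target) + w * d * delta.
    # Its fixed point, (a - target) / (1 - w * d), matches what implicit
    # differentiation of a = tanh(w*a + x) gives.
    delta = 0.0
    for _ in range(max_iter):
        delta_next = (a - target) + w * d * delta
        if abs(delta_next - delta) < tol:
            break
        delta = delta_next
    # da/dz = d, and the explicit dependence of z = w*a + x on w is a
    return delta_next * d * a

def loss_at(w_, x, target):
    return 0.5 * (relax(w_, x) - target) ** 2

w, x, target = 0.5, 0.3, 0.9
eps = 1e-6
numeric = (loss_at(w + eps, x, target) - loss_at(w - eps, x, target)) / (2 * eps)
print(fixed_point_grad(w, x, target), numeric)
```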

Essential Derivatives

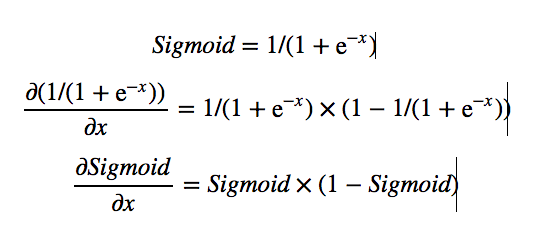

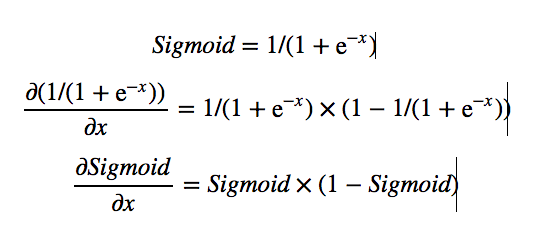

Sigmoid

The derivative of the sigmoid is a key formula in backpropagation. The main reason to use the sigmoid function is that its output always lies between 0 and 1. That is why it is used in models that must output a probability: since a probability can only take values between 0 and 1, sigmoid is a natural choice. The formula is:


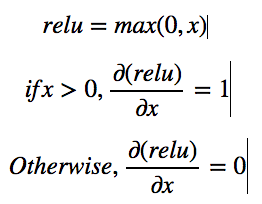

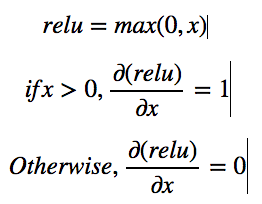
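The sigmoid σ(x) = 1 / (1 + e^(-x)) and its derivative σ'(x) = σ(x)(1 - σ(x)) take only a few lines of Python. This is a minimal sketch. The branch on the sign of `x` is one common way (assumed here, not taken from the source) to avoid an overflow in `math.exp` for large negative inputs. The derivative reuses the forward value, which is why backpropagation can cache activations from the forward pass.

```python
import math

def sigmoid(x: float) -> float:
    """sigma(x) = 1 / (1 + e^(-x)), arranged so math.exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # x < 0, so this stays in (0, 1)
    return e / (1.0 + e)

def sigmoid_derivative(x: float) -> float:
    """sigma'(x) = sigma(x) * (1 - sigma(x)); reuses the forward value."""
    s = sigmoid(x)
    return s * (1.0 - s)

print(sigmoid(0.0), sigmoid_derivative(0.0))  # 0.5 0.25
```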

ReLU

ReLU is short for Rectified Linear Unit, also called the rectified linear activation function. It is a piecewise linear function that returns the input directly if it is positive and returns zero otherwise. ReLU is a common default activation when developing multilayer perceptrons and convolutional neural networks. Mathematically, it is represented as:


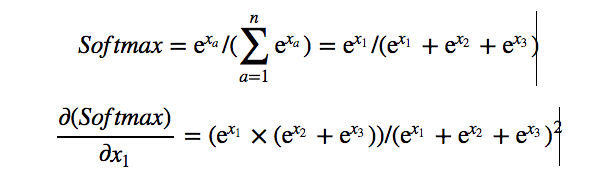

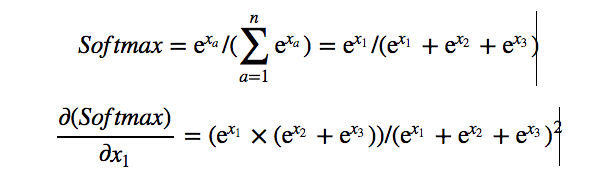
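Here is a minimal Python sketch of ReLU and its derivative. ReLU is not differentiable at exactly 0, and this sketch assumes the common convention of returning 0 there. The helper `relu_backward` (a hypothetical name) shows how the derivative is used during backpropagation. The upstream gradient passes through unchanged where the input was positive and is blocked elsewhere.

```python
def relu(x: float) -> float:
    """Return the input if it is positive, otherwise 0."""
    return x if x > 0 else 0.0

def relu_derivative(x: float) -> float:
    """1 for positive inputs, 0 otherwise (using 0 at x == 0 is a convention)."""
    return 1.0 if x > 0 else 0.0

def relu_backward(upstream_grad: float, x: float) -> float:
    """Chain rule through ReLU: pass the gradient where x > 0, block it elsewhere."""
    return upstream_grad * relu_derivative(x)

inputs = [-2.0, 0.0, 3.5]
print([relu(v) for v in inputs])                # [0.0, 0.0, 3.5]
print([relu_backward(0.8, v) for v in inputs])  # [0.0, 0.0, 0.8]
```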

Softmax

Softmax is used as the activation function in the output layer of neural network models that predict a multinomial probability distribution. In other words, softmax is the activation function for multi-class classification problems. It is generally not used in hidden layers. Its outputs are forced to sum to 1, so the nodes are linearly dependent, which can cause many problems and poor generalization. The mathematical representation, for a vector $z$ of $K$ inputs, is:

$$\text{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$
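Here is a minimal Python sketch of softmax, paired with cross-entropy loss as is usual for multi-class classification. Subtracting the largest input before exponentiating is a standard trick for numerical stability and does not change the result. For this pairing, the gradient of the loss with respect to the softmax inputs simplifies to the predicted probabilities minus the one-hot target. The function names are illustrative.

```python
import math

def softmax(logits):
    """Numerically stable softmax: subtracting the max leaves the result unchanged."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_grad(logits, target_index):
    """Gradient of -log(softmax(logits)[target_index]) w.r.t. the logits: p - one_hot."""
    p = softmax(logits)
    return [p_i - (1.0 if i == target_index else 0.0) for i, p_i in enumerate(p)]

probs = softmax([2.0, 1.0, 0.1])
grads = cross_entropy_grad([2.0, 1.0, 0.1], target_index=0)
print(probs, sum(probs))
print(grads)
```

Because the probabilities sum to 1, the gradient entries sum to 0. This is another view of the dependence between softmax outputs.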

Here is the pseudocode for backpropagation:

- Initialize Parameters: Start with random weights and biases for the network.

- Forward Pass: Pass the input data through the network to get the output.

- Compute Loss: Calculate the error between the network's prediction and the actual target.

- Backward Pass: Calculate the gradient of the loss with respect to each weight and bias by propagating the error backward through the network.

- Update Weights and Biases: Adjust the weights and biases using the calculated gradients to minimize the error.

- Repeat: Repeat the process with multiple iterations over the training data until the network learns the desired patterns.

- Evaluate: Test the trained network on new data to ensure it performs well.
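The pseudocode above can be turned into a small runnable sketch. This is a toy built on several assumptions, not a production implementation. It uses plain Python lists, a 2-3-1 network with sigmoid activations, mean squared error, full-batch gradient descent, a fixed random seed, and the XOR dataset. The numbered comments match the pseudocode steps.

```python
import math
import random

XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass through one hidden sigmoid layer and a sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(W1, b1)]
    out = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, out

def train(data, hidden=3, epochs=5000, lr=0.5, seed=1):
    rng = random.Random(seed)
    # 1. Initialize parameters: random weights break symmetry, biases start at zero
    W1 = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):  # 6. Repeat over the training data
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, y in data:
            h, out = forward((W1, b1, W2, b2), x)  # 2. Forward pass
            loss += (out - y) ** 2                 # 3. Compute loss (squared error)
            # 4. Backward pass: output unit first, then each hidden unit
            d_out = 2.0 * (out - y) * out * (1.0 - out)
            gb2 += d_out
            for j in range(hidden):
                gW2[j] += d_out * h[j]
                d_h = d_out * W2[j] * h[j] * (1.0 - h[j])
                gW1[j][0] += d_h * x[0]
                gW1[j][1] += d_h * x[1]
                gb1[j] += d_h
        n = len(data)
        # 5. Update weights and biases with the averaged gradients
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
            b1[j] -= lr * gb1[j] / n
        b2 -= lr * gb2 / n
        losses.append(loss / n)
    return (W1, b1, W2, b2), losses

params, losses = train(XOR_DATA)
# 7. Evaluate: XOR has no held-out data, so this only checks the training points
for x, y in XOR_DATA:
    print(x, y, round(forward(params, x)[1], 3))
print("loss:", losses[0], "->", losses[-1])
```

All gradients for an epoch are accumulated before any weight changes. As a result, the backward pass for the hidden layer uses the same `W2` values that produced the forward output.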

Frequently Asked Questions

What is the first step of backpropagation?

Initialize the network's weights and biases with random values before training begins.

What are the five steps in the backpropagation learning algorithm?

- Forward pass

- Compute loss

- Backward pass

- Update weights and biases

- Repeat

What is the chain rule and backpropagation in neural networks?

The chain rule calculates gradients for backpropagation by breaking down complex derivatives into simpler, manageable parts.
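As a small worked example of the chain rule, take a = σ(z) with z = w·x + b. Then da/dw = (da/dz)(dz/dw) = a(1 - a)·x. The sketch below computes this derivative with the chain rule and compares it with a central finite-difference estimate. The values x = 2, b = -1, and w = 0.7 are arbitrary choices for illustration. Backpropagation applies the same step repeatedly, layer by layer, and needs only one backward pass for all weights. Finite differences, by contrast, need two extra forward passes per weight.

```python
import math

def forward(w, x=2.0, b=-1.0):
    """Composition w -> z = w*x + b -> a = sigmoid(z)."""
    z = w * x + b
    a = 1.0 / (1.0 + math.exp(-z))
    return z, a

def chain_rule_grad(w, x=2.0, b=-1.0):
    """da/dw = da/dz * dz/dw = a(1 - a) * x."""
    _, a = forward(w, x, b)
    da_dz = a * (1.0 - a)
    dz_dw = x
    return da_dz * dz_dw

w = 0.7
eps = 1e-6
numeric = (forward(w + eps)[1] - forward(w - eps)[1]) / (2 * eps)
print(chain_rule_grad(w), numeric)
```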

Do CNNs use backpropagation?

Yes, Convolutional Neural Networks (CNNs) use backpropagation to train by adjusting weights and biases based on the calculated gradients.

Conclusion

This article gave a brief explanation of backpropagation. We discussed how it works, its types, its advantages and limitations, and the activation functions commonly used with it, such as sigmoid, ReLU, and softmax. We also presented the mathematical representation of the derivatives used in backpropagation. If you are interested in learning more, check out our industry-oriented deep learning course curated by our faculty from Stanford University and industry experts.
