Cloud Characteristics

Cost

Up-front costs can be greatly reduced because you are not buying large amounts of hardware or leasing new space to handle your big data on-premises. Pay-as-you-go is a billing option offered to cloud customers in which you are billed only for the resources you use, based on instance pricing. This is useful when you are not sure how many resources a big data project will need.
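To make the pay-as-you-go idea concrete, here is a minimal sketch that bills only for the hours each instance actually ran and compares that with buying a server up front. The instance sizes, the hourly prices in `HOURLY_PRICE` and the $5,000 server cost are all hypothetical placeholders, not real provider pricing.

```python
from dataclasses import dataclass

# Hypothetical hourly prices in USD per instance size (illustrative only, not real pricing).
HOURLY_PRICE = {"small": 0.05, "large": 0.40}


@dataclass
class UsageRecord:
    instance_type: str  # key into HOURLY_PRICE
    hours: float        # hours the instance actually ran


def pay_as_you_go_cost(records: list[UsageRecord]) -> float:
    """Bill only for the hours each instance actually ran."""
    return sum(HOURLY_PRICE[r.instance_type] * r.hours for r in records)


def break_even_hours(upfront_cost: float, hourly_price: float) -> float:
    """Usage hours after which buying hardware up front would have cost the same."""
    return upfront_cost / hourly_price


usage = [UsageRecord("large", 120), UsageRecord("small", 300)]
print(f"Pay-as-you-go bill: ${pay_as_you_go_cost(usage):.2f}")  # 0.40*120 + 0.05*300
print(f"Break-even against a $5,000 server: {break_even_hours(5000, 0.40):,.0f} hours")
```

With these made-up numbers the bill is $63.00, and a large instance would have to run for 12,500 hours before the hypothetical server paid for itself. The break-even figure deliberately ignores on-premises costs such as power, cooling and staff, which would push it further in the cloud's favour.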

Scalability

Scalability refers to the ability to vary the amount of processing power while keeping the same architecture. With regard to software, it refers to how consistently performance per unit of computing power holds up as hardware resources increase. Large volumes of structured and unstructured data require more processing power and storage. The cloud provides readily available infrastructure and the ability to scale it quickly to handle large spikes in traffic or usage.
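The "performance per unit of power" idea can be measured as scaling efficiency: throughput per node divided by single-node throughput. The sketch below is one simple way to compute it; the benchmark figures in `measurements` are invented for illustration.

```python
def scaling_efficiency(throughput_by_nodes: dict[int, float]) -> dict[int, float]:
    """Per-node throughput relative to the single-node baseline.

    1.0 means performance per node stayed constant as nodes were added (linear scaling).
    """
    baseline = throughput_by_nodes[1]
    return {n: (t / n) / baseline for n, t in sorted(throughput_by_nodes.items())}


# Hypothetical benchmark: records processed per second with 1, 2, 4 and 8 nodes.
measurements = {1: 1000.0, 2: 1950.0, 4: 3800.0, 8: 7200.0}
for nodes, eff in scaling_efficiency(measurements).items():
    print(f"{nodes} node(s): {eff:.1%} of single-node throughput per node")
```

Here efficiency slips from 100% on one node to 90% on eight. A workload that scales well keeps this number close to 1.0 as nodes are added.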

Elasticity

Elasticity refers to the ability to grow or shrink computing resources in real time, based on the customer’s need. Within the account quotas a provider sets, the cloud places few practical limits on how much of a service a customer can utilise when needed. This is extremely helpful for big data projects, where the amount of computing resources required depends on the volume and velocity of the data.
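A minimal sketch of elasticity is a threshold rule that adds capacity when utilisation is high and releases it when utilisation is low. The thresholds, the doubling/halving policy and the instance limits below are hypothetical; real autoscaling services offer richer policies, so treat this only as an illustration of the idea.

```python
def desired_instances(current: int, utilisation: float,
                      scale_out_at: float = 0.75, scale_in_at: float = 0.25,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """One step of a simplified threshold-based scaling rule (thresholds are hypothetical)."""
    if utilisation > scale_out_at:
        target = current * 2   # demand spike: double the capacity
    elif utilisation < scale_in_at:
        target = current // 2  # demand dropped: release half the capacity
    else:
        target = current       # load is within the comfortable band
    # Clamp to the allowed range so we never scale to zero or past a quota.
    return max(min_instances, min(max_instances, target))


# Simulated average CPU utilisation over six monitoring intervals (made-up data).
instances = 2
for load in [0.30, 0.80, 0.90, 0.50, 0.10, 0.05]:
    instances = desired_instances(instances, load)
    print(f"utilisation {load:.0%} -> {instances} instance(s)")
```

In this run capacity grows from 2 to 8 instances during the spike and falls back to 2 as the load subsides, so the customer pays for extra machines only while they are needed.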

Resource Pooling

Multiple organizations can share the same physical resources. Computing resources are pooled to serve many consumers through a multi-tenant model, and they are dynamically assigned and reassigned according to consumer demand. This makes the cloud economically viable for big data projects.
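The following sketch models resource pooling as a shared set of CPU cores that tenants request and release, so capacity freed by one organization can be reassigned to another. The `ResourcePool` class, the tenant names and the core counts are hypothetical and greatly simplified compared with a real multi-tenant scheduler.

```python
class ResourcePool:
    """A shared pool of CPU cores that several tenants draw from (simplified)."""

    def __init__(self, total_cores: int) -> None:
        self.total_cores = total_cores
        self.allocations: dict[str, int] = {}  # tenant -> cores currently held

    def free_cores(self) -> int:
        return self.total_cores - sum(self.allocations.values())

    def request(self, tenant: str, cores: int) -> bool:
        """Grant cores to a tenant if the pool has enough free capacity."""
        if cores <= 0:
            raise ValueError("cores must be positive")
        if cores > self.free_cores():
            return False
        self.allocations[tenant] = self.allocations.get(tenant, 0) + cores
        return True

    def release(self, tenant: str, cores: int) -> None:
        """Return cores to the pool so other tenants can reuse them."""
        remaining = max(0, self.allocations.get(tenant, 0) - cores)
        if remaining:
            self.allocations[tenant] = remaining
        else:
            self.allocations.pop(tenant, None)


pool = ResourcePool(total_cores=64)   # hypothetical shared capacity
print(pool.request("org-a", 48))      # org-a's nightly batch job is granted
print(pool.request("org-b", 32))      # only 16 cores free, so refused for now
pool.release("org-a", 40)             # org-a's job winds down
print(pool.request("org-b", 32))      # the freed cores are reassigned to org-b
```

Neither organization owns the 64 cores outright; each holds only what it currently needs, which is why pooling keeps utilisation, and therefore cost per tenant, favourable.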

Self-Service

Customers are given an interface for choosing the services they want. A consumer can provision computing capabilities, such as server time and network storage, as required and without human interaction with the provider. In a traditional data center, by contrast, one would have to request the resources from IT operations.

Fault Tolerance and Disaster Recovery

The cloud has provisions for recovery when part of the cloud system fails to respond. Data is a valuable asset, and losing it can disrupt operations and analysis. In emergencies and disasters such as earthquakes, floods and fires, data loss needs to be kept to a minimum, which is why cloud providers typically keep copies of data in more than one physical location.
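Keeping copies in several locations is what lets data survive the loss of one site. This sketch assumes a toy store that writes every object to each of several hypothetical zones and reads from any surviving replica; it is an illustration of replication, not how any particular provider implements storage.

```python
class ReplicatedStore:
    """Keeps a copy of every object in several independent zones (simplified)."""

    def __init__(self, zones: list[str]) -> None:
        self.replicas: dict[str, dict[str, bytes]] = {zone: {} for zone in zones}
        self.failed: set[str] = set()

    def put(self, key: str, value: bytes) -> None:
        """Write the object to every zone that is currently online."""
        for zone, store in self.replicas.items():
            if zone not in self.failed:
                store[key] = value

    def fail_zone(self, zone: str) -> None:
        """Simulate a disaster (fire, flood, earthquake) taking a zone offline."""
        self.failed.add(zone)

    def get(self, key: str) -> bytes:
        """Read from the first surviving zone that holds the object."""
        for zone, store in self.replicas.items():
            if zone not in self.failed and key in store:
                return store[key]
        raise KeyError(f"{key!r} lost: no surviving replica")


store = ReplicatedStore(["zone-a", "zone-b", "zone-c"])  # hypothetical zone names
store.put("sales.csv", b"region,revenue\n")
store.fail_zone("zone-a")      # e.g. a flood takes one data center offline
print(store.get("sales.csv"))  # still readable from zone-b or zone-c
```

Data is lost only if every zone fails at once. A real system would also re-replicate objects onto healthy zones after a failure, which this sketch leaves out.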

FAQs

Name some providers in the Big Data cloud market.

Amazon Elastic Compute Cloud (EC2) is one of the most high-profile IaaS offerings from Amazon Web Services. Google offers Google Compute Engine, BigQuery, and the Prediction API to deal with Big Data. Microsoft provides Windows Azure (now Microsoft Azure) and HDInsight, which support connections to Microsoft Excel and other business intelligence (BI) tools.

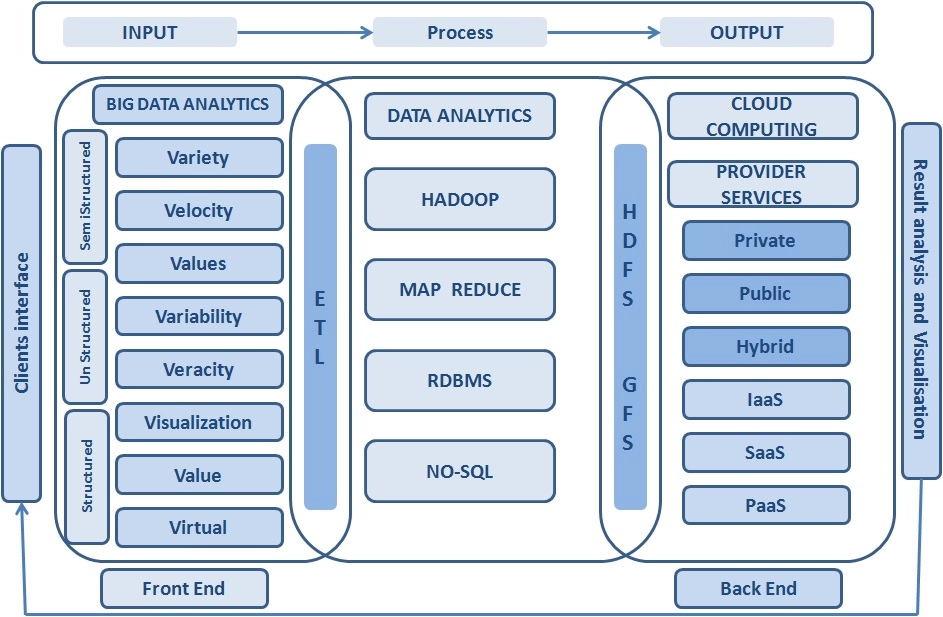

What are the different cloud service models?

There are three main cloud service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS).

Conclusion

This article discusses the characteristics of the cloud that are favourable for Big Data. We hope it has helped you deepen your understanding of the relationship between Big Data and Cloud Computing. Also, check out our articles on Cloud Computing Infrastructure and Cloud Architecture, and learn more about Big Data, Microsoft Azure, AWS and Google Cloud.

Explore our Coding Ninjas Library and upvote our blog to help other ninjas grow. Happy Coding!