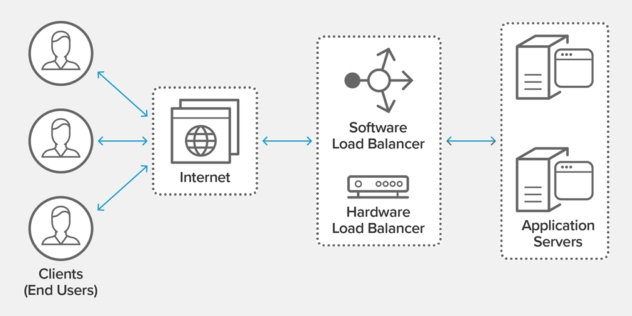

Software load balancer

A software load balancer sits in front of a group of servers and directs traffic to them, but it runs as software rather than as a physical appliance. It distributes network load and client requests across all appropriate servers. If one server fails, it redirects traffic to the remaining online servers, and it automatically starts sending requests to any new server added to the server group.

There are two main types of software load balancers: installable load balancers and Load Balancer as a Service (LBaaS). Nginx, Varnish, HAProxy, and Linux Virtual Server (LVS) are examples of installable software load balancers, which you must install, configure, and manage yourself. Amazon Web Services Elastic Load Balancing (AWS ELB) and Stratoscale LBaaS are examples of LBaaS. The cloud provider installs and manages these for you, and they come with built-in fault tolerance and flexibility.

Layer 4 load balancer

Layer 4 load balancing decides how to distribute client requests across servers using information from the transport layer (Layer 4 of the OSI model). A Layer 4 load balancer makes its decisions based on the source and destination Internet Protocol (IP) addresses and ports in the packet headers, not on the contents of the packet's payload.
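To make the idea concrete, here is a minimal Python sketch of a Layer 4 decision. It assumes a raw IPv4/TCP packet as input, reads only the addresses and ports from the headers, and picks a backend by hashing that connection tuple. The `POOLS` table, the backend addresses, and the `make_packet` helper are hypothetical and for illustration only; production Layer 4 balancers such as LVS do this work inside the kernel, not in Python.

```python
import socket
import struct
import zlib

# Hypothetical mapping from destination port to a pool of backend servers.
POOLS = {
    80: ["10.0.0.11", "10.0.0.12"],
    5432: ["10.0.1.21"],
}


def parse_l4_tuple(packet: bytes) -> tuple:
    """Return (src_ip, src_port, dst_ip, dst_port) from a raw IPv4/TCP packet."""
    ihl = (packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    if packet[9] != 6:  # IPv4 protocol field: 6 means TCP
        raise ValueError("not a TCP packet")
    src_ip = socket.inet_ntoa(packet[12:16])
    dst_ip = socket.inet_ntoa(packet[16:20])
    # The first four bytes of the TCP header are the source and destination ports.
    src_port, dst_port = struct.unpack("!HH", packet[ihl:ihl + 4])
    return src_ip, src_port, dst_ip, dst_port


def choose_backend(packet: bytes) -> str:
    """Pick a backend using only header fields; the payload is never read."""
    src_ip, src_port, dst_ip, dst_port = parse_l4_tuple(packet)
    pool = POOLS[dst_port]
    # Hashing the connection tuple keeps every packet of one connection on one backend.
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return pool[zlib.crc32(key) % len(pool)]


def make_packet(src: str, sport: int, dst: str, dport: int, payload: bytes = b"") -> bytes:
    """Build a minimal IPv4 + TCP packet for demonstration (checksums left as zero)."""
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 40 + len(payload), 0, 0, 64, 6, 0,
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    tcp_header = struct.pack("!HH", sport, dport) + bytes(16)  # rest of a 20-byte TCP header
    return ip_header + tcp_header + payload
```

Because only header bytes are read, two packets that differ only in their payload are always sent to the same backend.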

Layer 7 load balancer

Layer 7 load balancing operates at the application layer, the highest layer of the OSI model, which deals with the actual message content. Hypertext Transfer Protocol (HTTP) is the most widely used Layer 7 protocol for website traffic. Because they can inspect that content (for example, URLs and headers), Layer 7 load balancers can make far more sophisticated routing decisions than Layer 4 load balancers, which makes them especially useful for TCP (Transmission Control Protocol)-based application traffic such as HTTP.
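By contrast, a Layer 7 balancer has to parse the message itself. The sketch below, a simplified illustration rather than a complete HTTP parser, reads the request line and headers of an HTTP/1.1 request and chooses a backend pool from the `Host` header and the URL path. The routing table and pool names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HttpRequest:
    method: str
    path: str
    headers: dict  # header names are lower-cased


def parse_request(raw: bytes) -> HttpRequest:
    """Parse the request line and headers of a raw HTTP/1.1 request."""
    head = raw.split(b"\r\n\r\n", 1)[0].decode("iso-8859-1")
    request_line, *header_lines = head.split("\r\n")
    method, path, _version = request_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return HttpRequest(method, path, headers)


# Hypothetical routing rules: (host, path prefix, backend pool), most specific first.
ROUTES = [
    ("api.example.com", "/v2/", "api-v2-pool"),
    ("api.example.com", "/", "api-v1-pool"),
    ("www.example.com", "/static/", "static-pool"),
    ("www.example.com", "/", "web-pool"),
]


def route(request: HttpRequest) -> str:
    """Choose a backend pool from the Host header and the URL path."""
    host = request.headers.get("host", "").split(":")[0].lower()  # drop any :port
    for route_host, prefix, pool in ROUTES:
        if host == route_host and request.path.startswith(prefix):
            return pool
    return "default-pool"
```

Rules are checked in order, so more specific path prefixes must come before the catch-all `/` rule for the same host.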

Global server load balancing (GSLB)

Global server load balancing (GSLB) distributes traffic across application servers in geographically distant locations, such as data centers in different parts of the world. GSLB is typically used to achieve one or more application goals: cloud bursting, disaster recovery, custom content, and performance.
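A minimal sketch of the performance and disaster-recovery goals: send each client to the lowest-latency region, and fail over to the next best region when one becomes unhealthy. The region names and latency figures are hypothetical; in practice, GSLB is often implemented by answering DNS queries with the address of the chosen site.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    healthy: bool


# Hypothetical measured round-trip times (ms) from client areas to each region.
LATENCY_MS = {
    "europe": {"eu-west": 20, "us-east": 90, "ap-south": 150},
    "north-america": {"eu-west": 85, "us-east": 15, "ap-south": 210},
}


def pick_region(client_area: str, regions: list) -> str:
    """Send the client to the lowest-latency region that is currently healthy."""
    latencies = LATENCY_MS[client_area]
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: latencies[r.name]).name
```

If `eu-west` goes down, European clients are automatically sent to `us-east`, the next closest healthy region.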

Load-balancing algorithms

An effective load balancer must decide which server in a server farm is best suited to handle each incoming request. It makes this decision with a load-balancing algorithm, which distributes the load according to a specific strategy.

Round Robin

Round robin is a simple mechanism that sends each client request to the next server in a rotating list, returning to the first server after the last. It is easy for load balancers to implement, but it ignores each server's current load, so a server may become overloaded if it receives many processor-intensive requests.
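A minimal Python sketch of round robin, assuming the server list is fixed; the class and server names are hypothetical. Note that `next_server` never looks at server load, which is exactly the weakness described above.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order, ignoring their current load."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = itertools.cycle(list(servers))

    def next_server(self) -> str:
        return next(self._rotation)
```

With servers `app-1`, `app-2`, and `app-3`, five consecutive calls return `app-1`, `app-2`, `app-3`, `app-1`, `app-2`.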

Least Connection Method

Least connection is a dynamic load-balancing algorithm: when a client request arrives, it is sent to the application server with the fewest active connections at that moment. Even when all application servers have identical specifications, one server can become overwhelmed because some connections last much longer than others. Because this method accounts for each server's active connection load, it avoids piling new requests onto such a server.
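A minimal sketch of least connection, assuming the load balancer is told when each connection opens (`acquire`) and closes (`release`); the class and method names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server in insertion order when there is a tie.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a connection to `server` closes."""
        if self.active[server] == 0:
            raise ValueError(f"{server} has no active connections")
        self.active[server] -= 1
```

If a long-lived connection stays open on `app-1`, new requests keep going to `app-2` as its short connections finish, which is how the method avoids overloading the busier server.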

Least Response Time Method

The least response time method chooses which application server receives the next request based on the servers' response times. Each server's weight is calculated from its response time to a health check, and the next request is sent to the server that responds fastest.
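A sketch of least response time, assuming health-check response times are fed in as they arrive. Smoothing each server's times with an exponentially weighted moving average (weight `alpha`) is our own choice to damp one-off spikes, not something the method prescribes; the class and parameter names are hypothetical.

```python
class LeastResponseTimeBalancer:
    """Routes to the server with the lowest smoothed health-check response time."""

    def __init__(self, servers, alpha: float = 0.3):
        self.alpha = alpha  # weight of the newest sample in the moving average
        self.avg_ms = {server: None for server in servers}

    def record_health_check(self, server: str, response_ms: float) -> None:
        previous = self.avg_ms[server]
        if previous is None:
            self.avg_ms[server] = response_ms
        else:
            # Exponentially weighted moving average smooths out one-off spikes.
            self.avg_ms[server] = self.alpha * response_ms + (1 - self.alpha) * previous

    def pick(self) -> str:
        measured = {s: t for s, t in self.avg_ms.items() if t is not None}
        if not measured:
            raise RuntimeError("no health-check results yet")
        return min(measured, key=measured.get)
```

For example, if `app-2` averages 20 ms and then records a 200 ms check, its smoothed time becomes 0.3 × 200 + 0.7 × 20 = 74 ms, so a server averaging 50 ms becomes the preferred choice.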

Least Bandwidth Method

The least bandwidth method, a relatively simple algorithm, selects the server that is currently serving the least traffic, measured in megabits per second (Mbps). Similarly, the least packets method chooses the server that has received the fewest packets over a specific time period.
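Both variants need per-server traffic counters over a recent time window. The sketch below keeps a sliding window of `(timestamp, bytes)` samples per server and derives Mbps and packet counts from it. The 10-second window, the class name, and the injectable `clock` parameter are assumptions made for illustration and testing.

```python
import time
from collections import deque


class TrafficMeter:
    """Tracks bytes and packets sent to each server over a sliding time window."""

    def __init__(self, servers, window_s: float = 10.0, clock=time.monotonic):
        self.window_s = window_s
        self.clock = clock
        self.samples = {server: deque() for server in servers}  # (timestamp, size_bytes)

    def record_packet(self, server: str, size_bytes: int) -> None:
        self.samples[server].append((self.clock(), size_bytes))

    def _prune(self, server: str) -> None:
        """Drop samples older than the window."""
        cutoff = self.clock() - self.window_s
        samples = self.samples[server]
        while samples and samples[0][0] < cutoff:
            samples.popleft()

    def mbps(self, server: str) -> float:
        self._prune(server)
        total_bytes = sum(size for _, size in self.samples[server])
        return total_bytes * 8 / 1_000_000 / self.window_s

    def packet_count(self, server: str) -> int:
        self._prune(server)
        return len(self.samples[server])

    def least_bandwidth(self) -> str:
        return min(self.samples, key=self.mbps)

    def least_packets(self) -> str:
        return min(self.samples, key=self.packet_count)
```

The two methods can disagree: a server that received two 1,500-byte packets has used less bandwidth but received more packets than a server that received one 9,000-byte packet.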

Hashing Methods

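In hashing methods, the load balancer computes a hash of data from the incoming packet and uses the result to select a server. The hashed data can include the source or destination IP address, port number, URL, domain name, and connection or header information. Because the same input always produces the same hash, requests with the same key always reach the same server.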
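A minimal sketch of a hash-based choice, assuming the key is a string such as the client IP address or the request URL. SHA-256 from Python's standard `hashlib` is used so that the mapping is stable across processes; the function name is hypothetical.

```python
import hashlib


def pick_by_hash(key: str, servers: list) -> str:
    """Map a request key (e.g. a client IP or URL) to a server deterministically."""
    if not servers:
        raise ValueError("need at least one server")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]
```

Because the index is computed modulo the number of servers, adding or removing a server remaps most keys. Consistent hashing is a common refinement that moves only a small fraction of keys when the server set changes.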

Custom Load Balancing Method

With the custom load method, the load balancer queries the load on individual servers through the Simple Network Management Protocol (SNMP). The administrator specifies which load metrics to query, such as CPU usage, memory usage, and response time, and then combines them to suit their needs.
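How the metrics are combined is up to the administrator. The sketch below assumes the CPU, memory, and response-time values have already been collected (for example by an SNMP poll, which is not shown) and mixes them into one weighted score. The weights, the 500 ms ceiling, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServerMetrics:
    cpu_pct: float      # CPU usage, 0-100
    memory_pct: float   # memory usage, 0-100
    response_ms: float  # recent response time


# Hypothetical weights chosen by the administrator (they sum to 1).
WEIGHTS = {"cpu": 0.5, "memory": 0.3, "response": 0.2}
RESPONSE_CEILING_MS = 500.0  # assumed response time treated as 100% load


def load_score(m: ServerMetrics, weights: dict = WEIGHTS) -> float:
    """Combine several metrics into one 0-100 load score."""
    response_pct = min(m.response_ms / RESPONSE_CEILING_MS, 1.0) * 100
    return (weights["cpu"] * m.cpu_pct
            + weights["memory"] * m.memory_pct
            + weights["response"] * response_pct)


def pick_least_loaded(metrics: dict) -> str:
    """`metrics` maps server name -> ServerMetrics, e.g. from an SNMP poll."""
    return min(metrics, key=lambda server: load_score(metrics[server]))
```

With these weights, a server at 90% CPU, 40% memory, and 100 ms scores 61, while one at 30% CPU, 70% memory, and 250 ms scores 46, so the second server receives the next request.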

Features of Cloud load balancing

Cloud load balancing offers many useful features. It improves application resilience and performance and protects cloud applications and services from failures. Let's go over some of its key features.

Seamless Autoscaling

Cloud load balancers make it easy to autoscale our applications to handle a surge or drop in workload. Autoscaling is cost-effective because it reduces the resources in use when demand on the application falls.
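As an illustration of the sizing decision behind autoscaling, here is a hypothetical target-tracking rule: keep enough servers that each handles roughly a target number of requests per second, within fixed bounds. The function name, parameters, and bounds are assumptions; managed cloud services apply their own scaling policies, and the load balancer then starts sending traffic to new servers and stops sending it to removed ones.

```python
import math


def desired_server_count(current_rps: float, target_rps_per_server: float,
                         min_servers: int = 2, max_servers: int = 20) -> int:
    """Size the pool so each server handles about `target_rps_per_server` requests/s."""
    if target_rps_per_server <= 0:
        raise ValueError("target_rps_per_server must be positive")
    needed = math.ceil(current_rps / target_rps_per_server)
    # Clamp to the configured bounds so the pool never shrinks to zero or grows unbounded.
    return max(min_servers, min(max_servers, needed))
```

At 950 requests per second with a target of 100 per server, the rule asks for 10 servers; at 50 requests per second it keeps the minimum of 2.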

Active health checks

Cloud load balancers regularly send configured health check requests to each of an application's servers to track the health of these upstream servers. The load balancer checks each response and stops routing traffic to any server that fails its health checks.
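A minimal sketch of active health checking. `http_probe` sends an HTTP GET and treats a `200` response as healthy, and `HealthChecker` only changes a server's state after several consecutive results agree (`fall` failures to mark it down, `rise` successes to bring it back), so a single bad response does not remove a server from rotation. The thresholds, names, and the idea of a `/health` endpoint are assumptions for illustration.

```python
import http.client
import urllib.request


def http_probe(url: str, timeout_s: float = 2.0) -> bool:
    """Return True if `url` answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):  # URLError, HTTPError, timeouts, bad replies
        return False


class HealthChecker:
    """Marks servers down after `fall` failed checks and up after `rise` passing checks."""

    def __init__(self, servers, probe, fall: int = 3, rise: int = 2):
        self.probe = probe  # callable: server -> bool
        self.fall, self.rise = fall, rise
        self.healthy = {server: True for server in servers}
        self._streak = {server: 0 for server in servers}  # results contradicting current state

    def run_once(self) -> None:
        for server in self.healthy:
            ok = self.probe(server)
            if ok == self.healthy[server]:
                self._streak[server] = 0
                continue
            self._streak[server] += 1
            threshold = self.rise if ok else self.fall
            if self._streak[server] >= threshold:
                self.healthy[server] = ok
                self._streak[server] = 0

    def in_rotation(self) -> list:
        """Servers that should currently receive traffic."""
        return [server for server, up in self.healthy.items() if up]
```

In use, a scheduler would call `run_once()` every few seconds with a probe such as `lambda s: http_probe(f"http://{s}/health")`, and the balancer would route only to the servers returned by `in_rotation()`.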

Peak performance during high traffic

Cloud load balancing services divide the workload across servers in real time, regardless of how many requests or how much traffic our cloud application receives.

Supports Several Protocols

Cloud load balancing software is designed specifically for cloud applications, and it supports a range of protocols, including modern ones such as HTTP/2, as well as TCP and UDP load balancing.

The benefits of cloud load balancing

Here are some of the most significant benefits of using a cloud load balancer.

Ability to Handle Traffic Surges

Rather than relying on several IT specialists to handle traffic surges manually, cloud load balancers distribute requests so that each server works at an efficient, high-performing capacity. With this even distribution, servers can respond to requests as quickly as possible.

Flexibility

Traffic is routed through cloud load balancers and distributed across multiple servers and network units. As a result, if a single node cannot handle the workload, the load is immediately shifted to another active node. This makes application traffic easy and flexible to control.

High Performing Applications

With the right load-balancing solution, we can scale our services and ensure that additional traffic does not reduce efficiency. Load balancers distribute the workload to keep the system running smoothly.

Cost-Effectiveness

Companies that use a good cloud load balancing service can deliver better performance to their clients, which makes them more reliable in the long run. As a bonus, this is often achieved at a significantly lower cost of ownership.

Reliability and Increased Scalability

Cloud load balancers adapt quickly to traffic surges, which makes scaling easier. When a cloud resource goes down, they route traffic away from it and shift the workload to other healthy cloud resources.

FAQs


How much time does it take to provision a load balancer?

Provisioning a load balancer usually takes less than a minute after the API request is submitted. When the system is under heavy load, provisioning should still finish within a few minutes.


What is the load balancer health API?

The load balancer health API provides a programmatic way to determine the health of a load balancer instance with respect to its backend servers.


Can we specify a range of TCP ports to load balance?

No. We need to specify each individual TCP port we want to load balance, but we can load balance any port from 1 to 65535.


What are the advantages of using the Cloud Load Balancer's SSL termination?

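With SSL termination, traffic is decrypted at the Cloud Load Balancer, and the unencrypted traffic can then be sent to one or more Cloud Servers for processing. This offloads the CPU-intensive decryption work from the backend servers and lets certificates be managed in one place, at the load balancer.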


When should we use the load balancer health API?

We should use the health API when we want to build a notification and monitoring system or integrate with one we already have.

Key Takeaways

In this article, we discussed the concepts of cloud load balancing in detail. We started with an introduction to cloud load balancing and its types, then covered load-balancing algorithms and the features of cloud load balancing, and concluded with its benefits.

We hope this blog has helped you enhance your knowledge of cloud load balancing. If you would like to learn more, check out our articles on different cloud service providers. Happy Coding!