Introduction

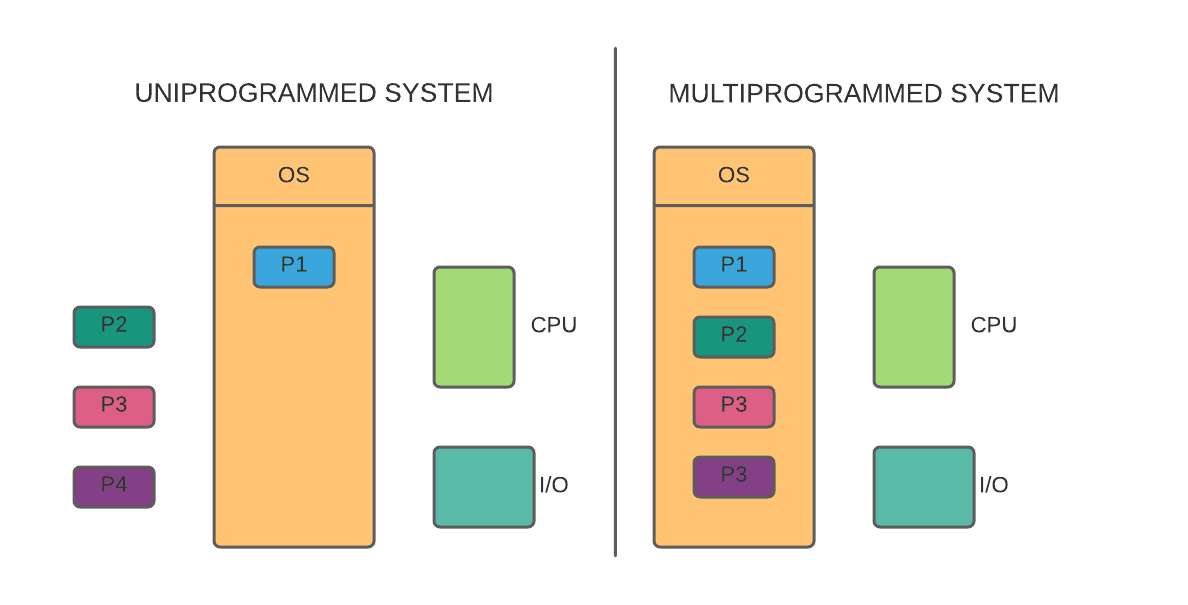

As you know there are two types of systems - uniprogramming systems and multiprogramming systems. Earlier systems were uniprogrammed like MS-DOS, where only one process can reside in the main memory. So, the CPU sits idle when a process goes for an I/O operation. This results in the wastage of CPU since a process goes for an I/O operation frequently. Moreover, the other processes that are waiting for the CPU time have to wait a lot, resulting in their starvation.

On the other hand, today’s systems are multiprogrammed. The benefit of having a multiprogrammed system is that more than one process can reside in the main memory. So, here the operating system doesn’t keep the CPU idle. As soon as a process goes for an I/O operation, the operating system allocates the CPU to another process. Thus increasing the CPU utilization and saving the processes from starvation.

The procedure of achieving the maximum CPU utilization is known as CPU scheduling. In this article, we will study some concepts of CPU scheduling and several CPU scheduling algorithms.

Recommended Topic, FCFS Scheduling Algorithm, Multiprogramming vs Multitasking

What is CPU scheduling?

As stated above, CPU scheduling is the procedure of achieving the maximum utilization of the CPU. In simple words, instead of keeping the CPU idle like in a uni-programming computer system and wasting its time, the CPU is allocated to some other processes which are waiting for the CPU to execute them. Thus, this pattern continues to keep the CPU busy.

Moreover, the operating system can determine which process will get the CPU when another process has gone for an I/O operation through CPU scheduling.

CPU scheduling occurs in either of the following cases:

- When a process switches from the running state to the waiting state (e.g. I/O request).

- When a process switches from the running state to the ready state.

- When a process switches from the waiting state to the ready state (e.g. completion of an I/O request).

- When a process terminates.

This kind of scheduling is a fundamental function of the operating system. Almost all computer resources are scheduled before use. The CPU is, of course, one of the primary computer resources. Thus, its scheduling is central to operating system design.

Before understanding the CPU scheduling further, let us know some terminologies related to the CPU scheduling.

Necessary CPU scheduling terminologies

- Arrival Time: The time at which a process arrives in the ready queue or ready state.

- Exit Time: The time the process completes its execution and exit from the system.

- Execution Time: The total time a process takes to complete its execution. Also known as burst time or running time.

- CPU-I/O Burst Cycle: The process execution consists of a cycle that includes CPU burst and I/O burst. A process begins its execution with a CPU burst. That is followed by an I/O burst, followed by another CPU burst, then I/O and so on. Finally, the process’ execution is completed from a CPU burst. This is known as the CPU-I/O burst cycle.

Now, we will explore some concepts of CPU scheduling.

CPU Scheduler

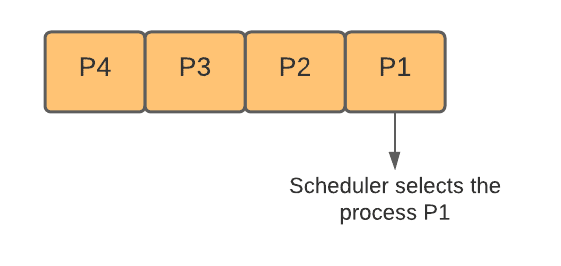

Whenever the CPU becomes idle, it must be allocated to some other process present in the ready queue by the operating system. This selection of the process for allocation is carried out by the CPU scheduler or short-term scheduler. The CPU scheduler selects a process from the main memory, which is in its ready state, which is ready for execution and allocates the CPU to that process.

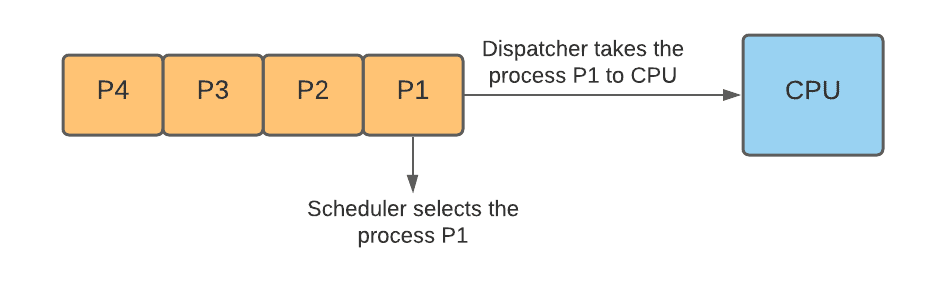

The below figure shows four processes, namely P1, P2, P3 and P4. Out of these, the process P1 is selected by the CPU scheduler for execution. How this selection is made depends on which scheduling algorithm is employed.

Let us now discuss the various CPU scheduling algorithms:

Types of CPU Scheduling

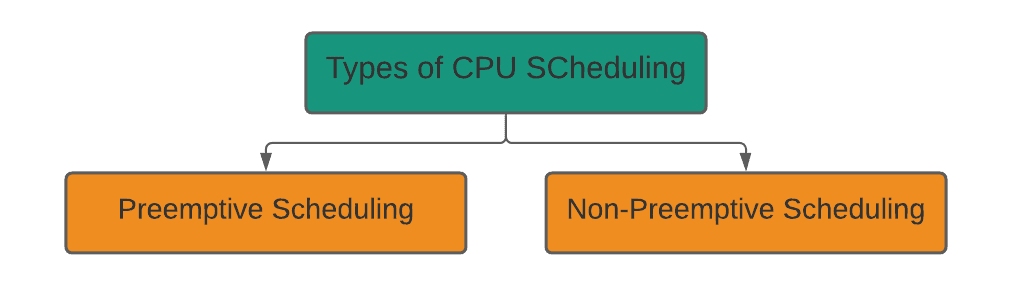

There are two types of CPU scheduling methods.

We will discuss these two types one by one.

Preemptive Scheduling

In preemptive scheduling, the tasks are assigned based on their priorities. For example, a low-priority task is running. After some time, a high-priority task comes into the system for execution. Then since the new task is of high priority, the operating system will keep the low priority task on hold and allocate the CPU to the high priority task. As the high-priority task will finish its execution, the CPU will be reallocated to the low-priority task. This is known as preemptive scheduling.

Preemptive scheduling also occurs when a process switches from the running state to the ready state and switches from the waiting state to the ready state.

Non-Preemptive Scheduling

In non-preemptive scheduling, once the CPU is assigned to a process, it is released only when the process switches its state from the running state to the waiting state or terminates. It is the only method that works across multiple hardware platforms. This is because, unlike preemptive scheduling, it does not need special hardware (such as a timer).

Also read, Free Space Management in OS

Dispatcher

The dispatcher is another component involved in CPU scheduling. The duty of the dispatcher is to give control of the CPU to the process selected by the CPU scheduler for execution. The dispatcher should work very fast since it is invoked every time the CPU is allocated to a process. The time taken by the dispatcher to stop one process and start another process is known as the dispatch latency. Thus, the functions of the dispatcher are-

- Switching context (switching from one process to another process)

- Switching to user mode (In dual-mode, switching the system from kernel mode to user mode)

- In the newly loaded program, navigate to the correct location.

In the figure below, the process P1 selected by the CPU scheduler is taken to the CPU by the dispatcher.

Scheduling Criteria

To achieve maximum CPU utilization, there are some criteria defined. These criteria include:

- CPU Utilization: CPU utilization is the main work of the operating system. The operating system tries to keep the CPU as busy as possible. The CPU utilization ranges from 0 to 100 percent, depending on the type of the system. For a lightly loaded system (a system in which few processes are running), CPU utilization should be nearly 40 per cent. And for a heavily loaded system (a system in which many processes are running), CPU utilization should be near 90 per cent.

- Throughput: The number of completed processes per unit time is called the system's throughput. For short processes, the throughput can be ten processes per second. And for long processes, the throughput can be one process per hour.

- Turnaround Time: Turnaround time is the time from the arrival of a process to the finishing of the process. We can also say that turnaround time is the time taken by the process to execute. This includes the sum of the time spent by the process to get into the memory, time spent in the ready queue, time spent in the execution and time spent in I/O operations.

- Waiting Time: Waiting time of a process is the time spent by a process in the ready queue. This includes whether the process is waiting for the CPU for the first time or after the completion of an I/O operation.

- Response Time: Response time is the time from the submission of a process and the generation of the first response.

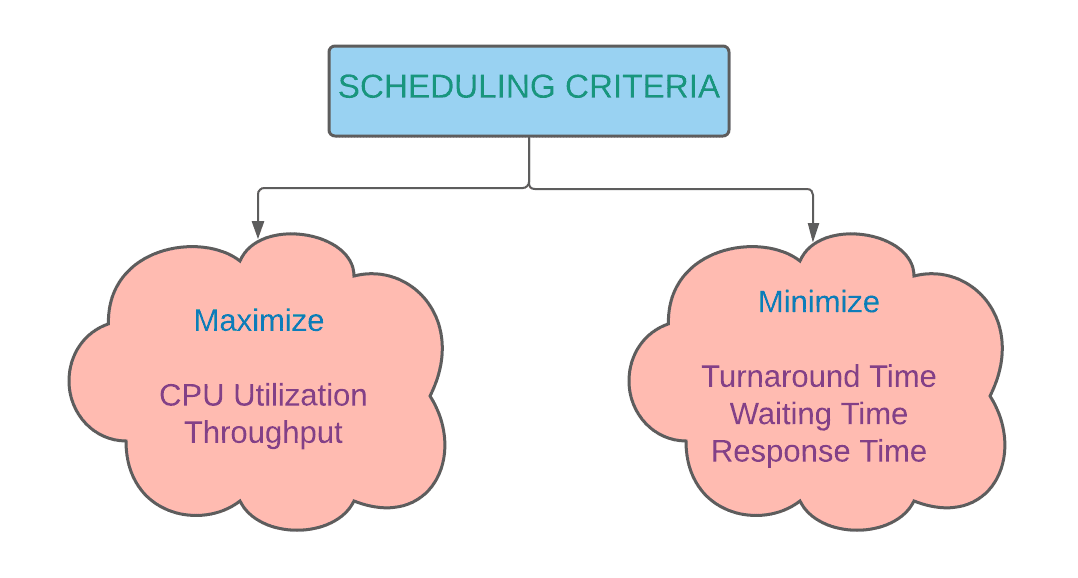

For doing the best CPU utilization possible, the operating system tries to maximize and minimize the above criteria:

- CPU Utilization and Throughput should be maximum.

- Turnaround time, waiting time and response time should be minimum.

CPU Scheduling Algorithms

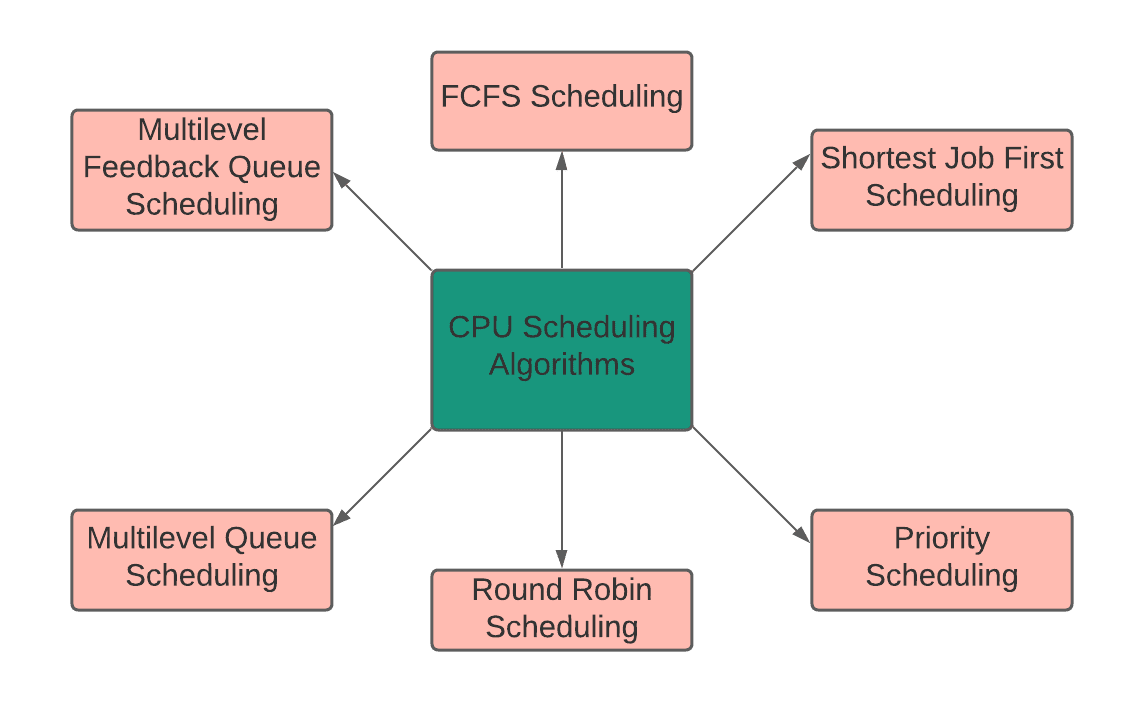

There are six types of CPU scheduling algorithms for CPU utilization. These are-

We will now discuss these algorithms one by one:

FCFS Scheduling Algorithm

The first comes first serve, or FCFS scheduling algorithm is the simplest CPU scheduling algorithm. In this algorithm, the first process (whose arrival time is least) gets the CPU first. This algorithm is implemented using a FIFO queue. When a process enters the ready state or the ready queue, its process control block (PCB) is added at the end of the FIFO queue. As soon as that process gets the CPU, it starts running, and its PCB is removed from the FIFO queue. This algorithm works for the non-preemptive type of scheduling.

Advantages-

- This algorithm is easy to understand and implement.

- This algorithm offers fair scheduling.

- There is no starvation in the FCFS scheduling algorithm.

Disadvantages-

- This algorithm has poor performance.

- This algorithm results in a higher average waiting time.

- This algorithm suffers the convoy effect.

- This algorithm is not optimal.

Shortest Job First Algorithm

In the shortest job first or SJF algorithm, the processes are allocated to the CPU in the increasing order of their execution time. The process which has the shortest execution time gets the CPU first. Likewise, it happens for all the processes. If two processes have the same execution time, the FCFS scheduling algorithm breaks the tie. This algorithm works for the preemptive and non-preemptive type of scheduling.

Advantages-

- This algorithm gives the minimum possible average waiting time.

- This algorithm is optimal.

Disadvantages-

- This algorithm can result in starvation.

- This algorithm is complex to implement.

Priority Scheduling Algorithm

In this algorithm, the processes are allocated to the CPU according to their priorities. The process which has the highest priority gets the CPU first. Likewise, it happens for all the processes. The priority of a process can be decided according to the system requirements. If two processes have the same priorities, the FCFS scheduling algorithm breaks the tie. This algorithm works for the preemptive and the non-preemptive type of schedules.

Advantages-

- The priority of a process can be selected based on the system requirements like memory requirement, time requirement, etc.

Disadvantages-

- This algorithm can result in starvation.

- This algorithm is complex to implement.

Round Robin Scheduling Algorithm

This algorithm is specially designed for time-sharing systems. Here, a time quantum or time slice is defined, and the ready queue is treated as a circular queue. A time quantum is a small unit of time ranging from 10 to 100 milliseconds in length. The CPU scheduler traverses this circular ready queue and allocates the CPU to each process till the defined time quantum. As soon as the time quantum is reached, the CPU is allocated to the next process. This cycle goes on until all the processes are completed with their execution. This algorithm works for the preemptive type of scheduling.

Advantages-

- This algorithm offers fair scheduling.

- This algorithm gives a better response time.

- There is no starvation in this algorithm.

Disadvantages-

- This algorithm is not suitable for priority-based systems.

- This algorithm gives more turnaround time.

Multilevel Queue Scheduling Algorithm

This Multilevel Queue Scheduling Algorithm algorithm partitions the ready queue into separate queues. Each separated queue has its CPU scheduling algorithm according to which the process gets the CPU. Moreover, each separated ready queue is assigned a priority. The separated queue with the highest priority gets the CPU first.

For example, there are seven processes in the ready queue P1, P2, P3, P4, P5, P6, and P7. This ready queue is partitioned into three queues.

Queue Q1 has processes P1 and P2 along with the FCFS scheduling algorithm. Queue Q2 has processes P3, P4, and P5 along with a Round-Robin scheduling algorithm.

Queue Q3 has processes P6 and P7 along with the FCFS scheduling algorithm.

If Queue Q1 has priority 2, Queue Q2 has priority 1, and Queue Q3 has priority 3.

So, first, all the processes of Q2 will execute, then the processes of Q1 and then of Q3.

Note: For n-ready queues, we have to assign n+1 scheduling algorithms.

Multilevel Feedback Queue Scheduling Algorithm

This algorithm is an enhancement of the Multilevel Queue Scheduling Algorithm. This algorithm enables the process to switch between the queues. The idea behind this algorithm is to classify processes based on the characteristics of their CPU bursts. For example, if a process consumes too much CPU time, it is kept in a low-priority queue.

You can also read about layered structure of operating system.