In parallel programming, concurrent access to shared resources can produce unexpected or incorrect results, so the parts of a program that use a shared resource must be protected against concurrent access. Such a protected code segment is called a critical section, also known as a critical region.

When several processes access or modify the same data concurrently, the data can become inconsistent. Maintaining consistency therefore requires mechanisms that coordinate the execution of cooperating processes. Cooperating processes are those that can affect, or be affected by, the execution of other processes.
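To make the inconsistency concrete, the sketch below replays two possible orderings of two processes that each add 1 to a shared counter. It is a deterministic simulation rather than real concurrency, and the names `run_interleaved`, `shared`, and `local` are illustrative assumptions, not part of any operating system API.

```python
# Deterministic replay of a "lost update": two processes each perform
# read -> add 1 -> write on the same shared counter.

def run_interleaved(schedule):
    """Run the read/write steps of processes 'A' and 'B' in the given order."""
    shared = {"counter": 0}
    local = {"A": None, "B": None}   # each process's private copy of the value

    for pid, step in schedule:
        if step == "read":
            local[pid] = shared["counter"]
        elif step == "write":
            shared["counter"] = local[pid] + 1
    return shared["counter"]

# One process finishes before the other starts: both updates survive.
serial = run_interleaved([("A", "read"), ("A", "write"),
                          ("B", "read"), ("B", "write")])

# Both processes read before either writes: one update is overwritten.
racy = run_interleaved([("A", "read"), ("B", "read"),
                        ("A", "write"), ("B", "write")])

print(serial, racy)   # prints: 2 1
```

The second schedule is the kind of interleaving that a critical section is meant to rule out.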


What is a Critical Section Problem in OS?

The critical-section problem is the starting point for our discussion of process synchronization in operating systems. Consider a system with n processes (P0, P1, …, Pn-1). Each process has a segment of code, called its critical section, in which it may change common variables, update a table, write a file, and so on. When one process is executing in its critical section, no other process is permitted to execute in its own critical section.

The term "critical section" refers to a code segment that accesses variables or resources shared by several processes. Access to these shared variables or resources must be synchronized in order to ensure data consistency.

A shared resource can be any resource in a computer, such as a CPU, a data structure, an I/O device, or a memory location.

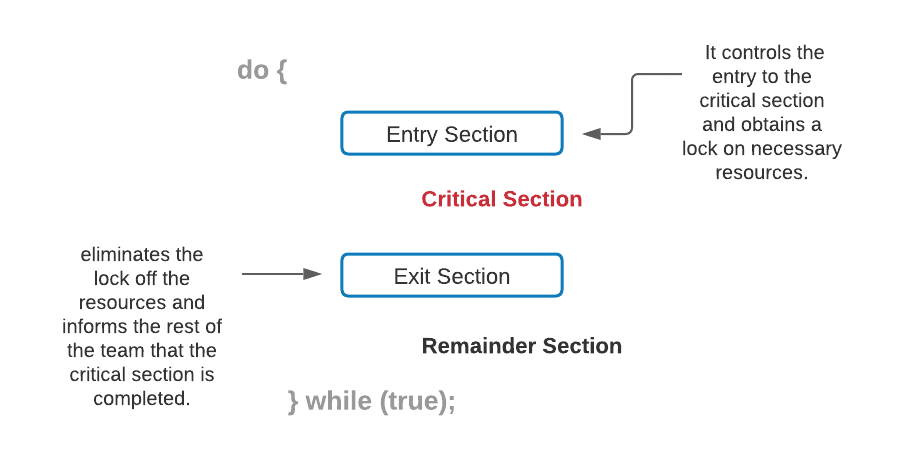

There are two methods that control the entry and exit from the critical section:

The wait() method controls the entry to the critical section, whereas the signal() function controls the exit.

The following is a schematic that depicts the critical section:

The following are the four most important parts of the critical section:

- Entry Section: The part of the code in which a process requests permission to enter the critical section, and which decides whether or not the process may enter.

- Critical Section: This section enables a single process to access and modify a shared variable.

- Exit Section: The part of the code that a process runs when it leaves the critical section. It lets other processes waiting in the Entry Section enter the Critical Section, and it records that the process that has finished is no longer inside.

- Remainder Section: The Remainder Section refers to the rest of the code that isn't in the Critical, Entry, or Exit sections.
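The four parts can be sketched in a few lines of Python. This is a minimal illustration, assuming a binary semaphore from the standard `threading` module stands in for the entry and exit mechanism. The helper names `wait` and `signal` are illustrative aliases chosen to match the text, and `shared_total` is a hypothetical shared variable.

```python
import threading

mutex = threading.Semaphore(1)   # binary semaphore guarding the shared data
shared_total = 0                 # hypothetical shared variable

def wait(sem):
    """Illustrative alias for the entry operation described in the text."""
    sem.acquire()

def signal(sem):
    """Illustrative alias for the exit operation described in the text."""
    sem.release()

def process(rounds):
    global shared_total
    for _ in range(rounds):
        wait(mutex)                  # entry section
        current = shared_total       # critical section: read ...
        shared_total = current + 1   # ... and update the shared data
        signal(mutex)                # exit section
        _ = sum(range(10))           # remainder section: unrelated local work

threads = [threading.Thread(target=process, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(shared_total)
```

Because every update happens between `wait(mutex)` and `signal(mutex)`, each of the four threads contributes all of its 1000 increments.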

Solutions to the Critical Section Problem

Any proposed synchronization technique dealing with the critical section problem must fulfill the following requirements:

1. Mutual Exclusion

The system must ensure that:

- The processes have mutually exclusive access to the critical section.

- At any one time, only one process should be present inside the critical section.

Mutual exclusion is commonly enforced with a mutex (mutual exclusion lock), which behaves like a binary semaphore used to manage access to a shared resource. Many mutex implementations also include a priority inheritance mechanism to prevent prolonged priority inversion.

2. Progress

If no process is executing in its critical section and one or more processes want to enter, then only the processes that are not in their remainder sections may take part in deciding which one enters next, and this decision cannot be postponed indefinitely. In other words, if the critical section is free and one or more threads want to execute it, one of these threads must be permitted to do so.

3. Bounded Waiting

After a process has requested entry to its critical section, there is a limit on the number of times other processes may enter their critical sections before that request is granted. Once this limit has been reached, the system must allow the waiting process to enter its critical section.

Note: Mutual Exclusion and Progress are the essential requirements, and every synchronization mechanism must meet them. Bounded Waiting is treated here as an optional criterion, but it should be met whenever feasible.
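One way to see bounded waiting in practice is a ticket-style lock, in which processes enter strictly in the order in which they asked. The sketch below is an illustration under stated assumptions: `TicketLock` is a hypothetical class built on `threading.Condition`, not a standard library type, and it is only one of several ways to obtain a bound.

```python
import threading

class TicketLock:
    """FIFO lock: callers enter in the order in which they took a ticket."""

    def __init__(self):
        self._cond = threading.Condition()
        self._next_ticket = 0   # next ticket number to hand out
        self._now_serving = 0   # ticket number currently allowed to enter

    def acquire(self):
        with self._cond:
            ticket = self._next_ticket
            self._next_ticket += 1
            while self._now_serving != ticket:
                self._cond.wait()
            return ticket

    def release(self):
        with self._cond:
            self._now_serving += 1
            self._cond.notify_all()

lock = TicketLock()
entry_order = []   # tickets in the order they entered the critical section

def worker():
    for _ in range(50):
        ticket = lock.acquire()      # entry section
        entry_order.append(ticket)   # critical section
        lock.release()               # exit section

threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

With n competing threads, a thread that holds a ticket can be overtaken only by threads holding earlier tickets, so it waits for at most n - 1 other entries.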

The following are the key approaches to solving the critical section problem in process synchronization:

Peterson’s Solution

Peterson's solution is a widely used software-based solution to the critical section problem. As the name suggests, it was devised by the computer scientist Gary L. Peterson.

While one process is in its critical section, this approach lets the other process execute only its remaining code, and vice versa. This ensures that only one process can execute in the critical section at any given moment.

All three requirements are preserved in this solution:

- Mutual exclusion is ensured because only one process has access to the critical section at any one moment.

- Progress is ensured because a process that is not in the critical section cannot prevent other processes from entering it.

- Bounded waiting is ensured because every process is given a fair chance to enter the critical section.

Example:

ANY PROCESS Pi

FLAG[i] = true

while( (count != i) AND (CS is !free) ){ wait; }

// critical section

FLAG[i] = false

count = j; // after this, choose another process to access CS

Explanation:

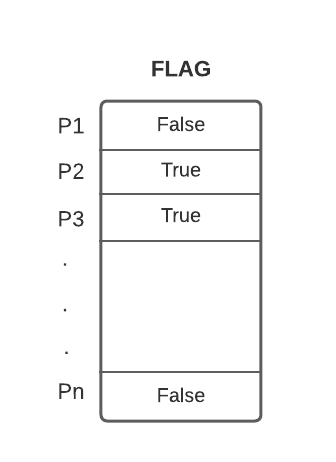

- Suppose there are N processes (P1, P2,... PN) and each process must enter the Critical Section at some point.

- A FLAG[] array of size N is kept, with every entry false by default. Whenever a process needs to enter the critical section, it must set its own flag to true. If process Pi wishes to enter, for example, it sets FLAG[i] = true.

- The count variable holds the number of the process whose turn it is to enter the CS.

- On exit, the process that was in the critical section sets count to the number of another process from the list of ready processes.

- Example: When count equals 2, P2 enters the critical section. On exit it sets count to 3, and hence P3 leaves the wait loop.
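For comparison, the classic form of Peterson's algorithm handles exactly two processes and uses a `turn` variable where the pseudocode above uses `count`. The sketch below is a minimal illustration under stated assumptions: the names `enter`, `leave`, and `counter` are hypothetical, and the code relies on CPython's global interpreter lock to keep the reads and writes in program order. On real hardware or in a compiled language, memory barriers or atomic variables would be needed as well.

```python
import threading
import time

flag = [False, False]   # flag[i] is True while process i wants to enter
turn = 0                # which process has priority when both want to enter
counter = 0             # shared data protected by the algorithm

def enter(i):
    """Entry section for process i (0 or 1)."""
    global turn
    j = 1 - i
    flag[i] = True      # announce interest
    turn = j            # give the other process priority
    while flag[j] and turn == j:
        time.sleep(0)   # busy-wait, yielding the interpreter to the other thread

def leave(i):
    """Exit section for process i."""
    flag[i] = False

def process(i, rounds):
    global counter
    for _ in range(rounds):
        enter(i)
        value = counter       # critical section: read ...
        counter = value + 1   # ... then write
        leave(i)

ROUNDS = 500
threads = [threading.Thread(target=process, args=(i, ROUNDS)) for i in (0, 1)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)
```

A process waits only while the other process is interested and holds priority, which is how the three requirements listed above are met for two processes.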

Synchronization Hardware

Many systems provide hardware support for implementing critical sections. On a single-processor system, we could solve the critical section problem simply by preventing interrupts from occurring while a shared variable or resource is being updated.

That way, we could be certain that the current sequence of instructions would run in order without being preempted. Unfortunately, this method is not feasible in a multiprocessor system.

In a multiprocessor environment, disabling interrupts can be time-consuming because the message must be sent to all processors. This message-passing delay postpones the entry of threads into their critical sections, and system efficiency decreases as a result.

Mutex Locks

Because hardware-based synchronization is difficult for most programmers to use directly, mutex locks were introduced as a simpler software tool.

In this method, a lock is acquired on the shared resources used inside the critical section in the entry section of the code. This lock is released in the exit section.
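The acquire-then-release pattern can be sketched with Python's standard `threading.Lock`. The `balance` variable and `withdraw` function are hypothetical names used only for illustration.

```python
import threading

lock = threading.Lock()
balance = 100    # shared resource (hypothetical account balance)
results = []     # outcome of each withdrawal attempt

def withdraw(amount):
    global balance
    lock.acquire()                # entry section: acquire the lock
    try:
        if balance >= amount:     # critical section: check ...
            balance -= amount     # ... and update as one protected step
            return True
        return False
    finally:
        lock.release()            # exit section: always release the lock

def worker():
    results.append(withdraw(30))

threads = [threading.Thread(target=worker) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(balance, results.count(True))
```

Placing the release in a `finally` clause means the lock is given up even if the critical section raises an exception. In everyday Python the same pattern is usually written as `with lock:`.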

Semaphore Solution

A semaphore is a non-negative integer variable that is shared across threads. It offers another solution to the critical section problem: a thread that is waiting on a semaphore can be signaled by another thread.

For process synchronization, it employs two atomic operations: 1) wait() and 2) signal().
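A short sketch of the two operations, assuming Python's standard `threading.Semaphore`, where `acquire()` plays the role of wait() and `release()` plays the role of signal(). The initial value 2 and the bookkeeping names `inside` and `peak` are illustrative assumptions. With an initial value of 1 the semaphore would behave as a binary semaphore that admits a single thread.

```python
import threading
import time

slots = threading.Semaphore(2)   # at most 2 threads may be inside at once
state_lock = threading.Lock()    # protects the bookkeeping counters below
inside = 0                       # threads currently holding a slot
peak = 0                         # highest value of `inside` observed

def worker():
    global inside, peak
    slots.acquire()              # wait(): decrement, or block if the value is 0
    with state_lock:
        inside += 1
        peak = max(peak, inside)
    time.sleep(0.01)             # simulated work while holding a slot
    with state_lock:
        inside -= 1
    slots.release()              # signal(): increment and wake a waiting thread

threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(peak)
```

Six threads compete, but the recorded peak never exceeds the semaphore's initial value.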