Steps

Writing and running a program in TensorFlow involves the following steps:

- Create Tensors (variables) that are not yet executed/evaluated.

- Write operations between those Tensors.

- Initialise your Tensors.

- Create a Session.

- Run the Session.

Implementation:

Below is a basic TensorFlow program written in plain Python. It uses the TensorFlow 1.x graph-and-session API (tf.Session, tf.placeholder).

import tensorflow as tf

y_hat = tf.constant(36, name='y_hat') # Define y_hat constant. Set to 36.

y = tf.constant(39, name='y') # Define y. Set to 39

loss = tf.Variable((y - y_hat)**2, name='loss') # Create a variable for the loss

init = tf.global_variables_initializer() # When init is run later (session.run(init)),

# the loss variable will be initialised and ready to be computed

with tf.Session() as session: # Create a session and print the output

    session.run(init) # Initialises the variables

    print(session.run(loss)) # Prints the loss: (39 - 36)**2 = 9

a = tf.constant(2)

b = tf.constant(10)

c = tf.multiply(a, b)

print(c)

The output here will not be 20. All we did was add the operations to the 'computation graph'; we have not run the computation yet, so print(c) shows a description of the tensor rather than its value. To actually multiply the two numbers, we have to create a session and run it.

sess = tf.Session()

print(sess.run(c))

A placeholder is an object whose value we specify only later. To supply a value for a placeholder, we pass it in through a "feed dictionary" (the feed_dict argument). Below, we create a placeholder for x. This allows us to pass in a number later when we run the session.

x = tf.placeholder(tf.int64, name='x')

print(sess.run(2 * x, feed_dict = {x: 3}))

sess.close()

When we specify the operations needed for a computation, we are telling TensorFlow how to construct a computation graph. Finally, when we run the session, we are telling TensorFlow to execute the computation graph.
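The split between building a graph and running it can be illustrated without TensorFlow. The following is a toy sketch only: the classes Node, Constant, Placeholder and Multiply and the function run are hypothetical names invented for this illustration, and they do not reflect how TensorFlow is implemented internally.

```python
# Toy illustration only: hypothetical classes, not TensorFlow's real implementation.

class Node:
    """A node in a tiny computation graph; building it computes nothing."""

    def __mul__(self, other):
        return Multiply(self, as_node(other))

    __rmul__ = __mul__  # lets plain numbers appear on the left, as in 2 * x


class Constant(Node):
    def __init__(self, value):
        self.value = value


class Placeholder(Node):
    """A value that is supplied later through feed_dict."""

    def __init__(self, name):
        self.name = name


class Multiply(Node):
    def __init__(self, left, right):
        self.left, self.right = left, right


def as_node(value):
    """Wrap plain Python numbers so they can take part in the graph."""
    return value if isinstance(value, Node) else Constant(value)


def run(node, feed_dict=None):
    """Walk the graph and compute the value of `node`, similar in spirit to a session run."""
    feed_dict = feed_dict or {}
    if isinstance(node, Constant):
        return node.value
    if isinstance(node, Placeholder):
        return feed_dict[node]  # raises KeyError if no value was fed
    if isinstance(node, Multiply):
        return run(node.left, feed_dict) * run(node.right, feed_dict)
    raise TypeError(f"unknown node type: {type(node).__name__}")


a = Constant(2)
b = Constant(10)
c = a * b                  # only builds a Multiply node, nothing is multiplied yet
print(type(c).__name__)    # Multiply
print(run(c))              # 20

x = Placeholder("x")
print(run(2 * x, feed_dict={x: 3}))  # 6
```

As in the TensorFlow snippets above, the product only appears once run is called, and the placeholder gets its value through the feed dictionary at that point.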

The Python code above is meant to convey the basic ideas of working with TensorFlow. Implementing a neural network here would take a lot of time and divert us from the main topic, so you can always refer here for more information and reading.

Keras:

Keras is a powerful and easy-to-use free, open-source Python library for developing and evaluating deep learning models. It was developed as part of project ONEIROS (Open-ended Neuro-Electronic Intelligent Robot Operating System), with François Chollet as the primary author. It runs on top of efficient numerical computation libraries such as Theano and TensorFlow, and lets you define and train neural network models in just a few lines of code.

Advantages of using Keras:

- Allows users to productise deep models on smartphones (Android and iOS).

- Contains numerous implementations of commonly used neural-network building blocks.

- Includes several prelabelled datasets.

- Offers multiple methods for data pre-processing.

- Modularity.

Building and running a model in Keras involves the following steps:

- Load Data.

- Define Keras Model.

- Compile Keras Model.

- Fit Keras Model.

- Evaluate Keras Model.

- Tie It All Together.

- Make Predictions.

Implementation

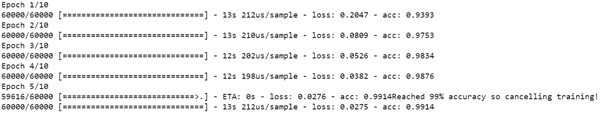

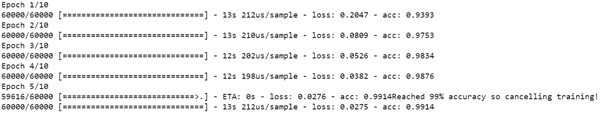

In this section, we will create a first deep learning neural network model in Python using Keras, accessed through its TensorFlow API (tf.keras). We will build a simple model for handwritten digit recognition and train it to 99% accuracy using two dense layers with 512 and 10 neurons. The first layer uses the ReLU activation function, and the last layer, with 10 neurons, classifies the 10 digits. I used a Jupyter notebook to build the model because it makes the project easier to visualise; you could also use Google Colab. We will go through the process step by step below.
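To get a feel for the size of this model, the number of trainable parameters can be worked out by hand. This is a minimal sketch, assuming 28x28 pixel input images (the input shape used in this section's model); dense_param_count is a helper name made up for this illustration.

```python
# Layer sizes taken from the description above: 512 hidden units, 10 outputs.
# The 28x28 input size is an assumption based on the model's input_shape.
input_size = 28 * 28        # flattening a 28x28 image gives 784 values
layer_sizes = [512, 10]


def dense_param_count(n_inputs, n_units):
    """A dense layer has one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


total = 0
n_inputs = input_size
for n_units in layer_sizes:
    count = dense_param_count(n_inputs, n_units)
    print(f"Dense({n_units}): {count} parameters")
    total += count
    n_inputs = n_units      # this layer's outputs feed the next layer

print(f"Total trainable parameters: {total}")  # 407050
```

Almost all of the parameters sit in the first dense layer, which connects each of the 784 pixel values to each of the 512 neurons.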

import tensorflow as tf

path = " " # path of the dataset

def train_mnist():

    class myCallback(tf.keras.callbacks.Callback): # callback to stop the training when a certain accuracy is reached

        def on_epoch_end(self, epoch, logs=None):

            # 'acc' is the key used by TensorFlow 1.x; TensorFlow 2.x logs it as 'accuracy'

            if logs and logs.get('acc', 0) > 0.99:

                print("Reached 99% accuracy so cancelling training!")

                self.model.stop_training = True

    mnist = tf.keras.datasets.mnist

    (x_train, y_train), (x_test, y_test) = mnist.load_data(path=path) # loading the dataset

    x_train, x_test = x_train/255, x_test/255 # scaling pixel values to the range [0, 1]

    callbacks = myCallback() # making a callback object

    # creating model

    model = tf.keras.models.Sequential([

        tf.keras.layers.Flatten(input_shape=(28, 28)), # flattening each 28*28 image into a vector of 784 values

        tf.keras.layers.Dense(512, activation=tf.nn.relu), # making a dense layer of 512 neurons with relu activation

        tf.keras.layers.Dense(10, activation=tf.nn.softmax) # making a dense layer of 10 neurons with softmax activation

    ])

    model.compile(optimizer='adam', # using adam optimizer

                  loss='sparse_categorical_crossentropy',

                  metrics=['accuracy'])

    # model fitting

    history = model.fit(

        x_train, y_train, epochs=10, callbacks=[callbacks] # training for at most 10 epochs

    )

    return history.epoch, history.history['acc'][-1]

train_mnist()
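The stopping rule used by the callback above can be sketched in plain Python, without TensorFlow. The function name epochs_until_stop and the accuracy values are made up for illustration; they are not results from training this model.

```python
def epochs_until_stop(accuracies, threshold=0.99):
    """Return how many epochs run before training stops.

    Mirrors the callback's rule: after each epoch, stop if accuracy > threshold.
    """
    for epoch, acc in enumerate(accuracies, start=1):
        if acc > threshold:
            print(f"Reached {threshold:.0%} accuracy after epoch {epoch}, stopping.")
            return epoch
    return len(accuracies)  # threshold never reached, so every epoch ran


# Made-up per-epoch training accuracies, for illustration only.
made_up_accuracies = [0.94, 0.975, 0.984, 0.989, 0.992, 0.994]
print(epochs_until_stop(made_up_accuracies))  # 5
```

Because the comparison is strict, an accuracy of exactly 0.99 would not stop training; only a value above the threshold does.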

Training can take time depending on the hardware (processor and RAM), but you can always use hosted notebooks such as Google Colab, which are free and provide decent speed. From the model history we can see that training stopped once the model reached 99% accuracy, because of the callback class. Across epochs, the loss decreases and the accuracy increases as the model learns. If the accuracy is not good enough, you can tune your hyperparameters to improve it, for example by changing the number of epochs.
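To see why the loss falls as the model learns, it helps to compute softmax and sparse categorical cross-entropy by hand for a single example. This is a plain-Python sketch of the standard formulas, not Keras's own code, and the scores below are made up for a small 3-class problem.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def sparse_categorical_crossentropy(true_label, probabilities):
    """Loss for one example: negative log of the probability given to the true class."""
    return -math.log(probabilities[true_label])


# Made-up scores where the true class is index 2.
early = softmax([1.0, 1.0, 1.5])   # early in training: the model is unsure
later = softmax([0.2, 0.1, 4.0])   # later: the model is confident and correct

early_loss = sparse_categorical_crossentropy(2, early)
later_loss = sparse_categorical_crossentropy(2, later)
print(early_loss)
print(later_loss)
print(later_loss < early_loss)     # True
```

The label is passed as an integer class index rather than a one-hot vector, which is what "sparse" refers to. The loss reported during training is an average of this per-example quantity.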

Conclusion

You have now seen that with a few lines of code you can create a neural network model that reaches 99% accuracy in training, without implementing the underlying mathematics yourself. This is possible because of frameworks like Keras and TensorFlow. You are, of course, always free to implement the core ideas and algorithms of machine learning from scratch using TensorFlow. TensorFlow is also used for scientific computation because of its computational graphs. It offers rich libraries, makes good use of hardware acceleration, and is maintained by Google, which adds to its reliability.