Architecture of autoencoders

An autoencoder is composed of three parts:

-

Encoder: The input image is compressed into a latent space representation and encoded as a compressed representation in a lower dimension by an encoder, which is a fully connected, feedforward neural network. The compressed image is a deformed reproduction of the original image.

-

Code: The reduced representation of the input supplied into the decoder is stored in this network section.

- Decoder: The decoder, like the encoder, is a feedforward network with the same structure as the encoder. This network is responsible for reassembling the Code's input to its original proportions.

Source

The input is compressed and stored in the layer called Code by the encoder, and then the initial information is decompressed from the Code by the decoder. The primary purpose of the Autoencoder is to provide an output that is identical to the input.

It's worth mentioning that the architecture of the decoder is the inverse of that of the encoder. This isn't required, but it's standard procedure. The sole requirement is that the input and output dimensions are the same.

Denoising autoencoders

Simple autoencoders have been extended to include denoising autoencoders; nonetheless, it's worth noting that denoising autoencoders were not designed to denoise a picture automatically.

Source

Encoding autoencoders introduce noise to the input image and then learn to eliminate it. As a result, instead of copying the input to the output, features of the data are understood. While training, these autoencoders use a partially damaged input to retrieve the original undistorted input. The model learns a vector field to cancel out the extra noise for translating the input data toward a lower-dimensional manifold that describes the raw data. The encoder will learn a more robust representation of the data by extracting the most relevant features.

Source

Noises partially contaminate the data randomly added to the input vector in the case of a Denoising Autoencoder. After that, the model is trained to predict the original, uncorrupted data point as its output.

The encoder function converts the original data X into a latent space that exists at the bottleneck. The decoder function transfers the bottleneck's latent space to the output. In this example, the output function is the same as the input function. As a result, we're attempting to reconstruct the original image using generalized non-linear compression.

The encoding network can be represented by a conventional neural network function that has been activated, where h represents the latent dimension.

h = σ(W x + b)

Where the W represents the layer matrix.

In the same way, the decoding network can be represented in the same way, but with various weights, biases, and perhaps activation functions. We can then write the loss function in terms of these network functions, and we'll utilize that loss function to train the neural network using the conventional backpropagation process.

xˆ = σ(transpose(W)* h + c)

h is similar to some compressed input data represented by C(x).

And the loss function given by:

When we use Auto-Encoders, we get an advantage since the dimensionality of the data we use is reduced, as is the learning time for your situations. Another advantage of backpropagation coding is its compactness and speed.

The static topics of abstract topics are interesting in Auto-Encoders, resulting in a better data transition than training CNNs. Other things to consider are: Unlabeled Auto-Encoders may be trained; Auto-Encoders are unsupervised models, and CNNs already require them.

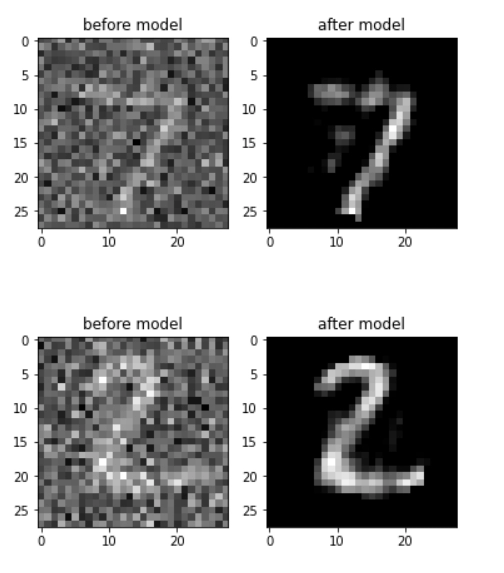

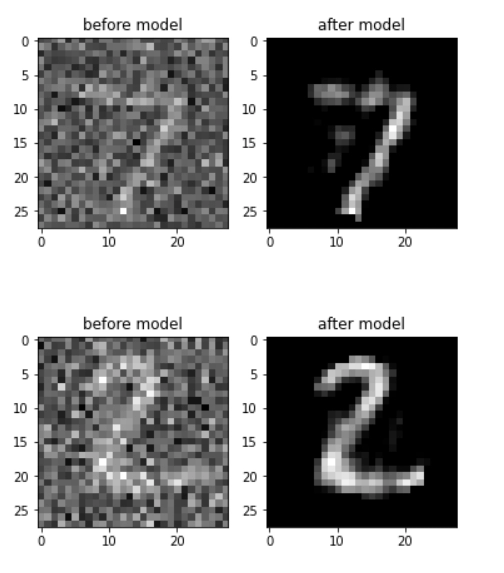

Implementation and training of a DAE(Denoising autoencoders) with Keras

We must use noisy input data to train a denoising autoencoder. We'll need to add noise to the original image to do so. The amount of corrupted data is determined by the amount of information in the data. In most cases, 25-30% of data is corrupted. If your data has less information, this number may be larger.

Using MNIST data, I constructed a denoising autoencoder in Keras, which will provide you with an understanding of how a denoising autoencoder works.

Importing libraries:

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

Noise generator function:

def generate_noise(images, noise_factor=0.5):

return images + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=images.shape)

# network output data

(y_train, _), (y_test, _) = keras.datasets.mnist.load_data()

y_train = y_train.astype("float32") / 255.

y_test = y_test.astype("float32") / 255.

# network input data

x_train = generate_noise(y_train)

x_test = generate_noise(y_test)

# dataset sizes

print(f"x_train.shape:{x_train.shape} y_train.shape:{y_train.shape}")

print(f"x_test.shape:{x_test.shape} y_test.shape:{y_test.shape}")

Resizing the image:

# image size

input_dim = x_train[0].shape + (1,)# + 1 color channel

output_dim = y_train[0].shape + (1,)# + 1 color channel

# build model

model = keras.Sequential([

keras.Input(shape=input_dim),

layers.Flatten(),# (28, 28, 1) -> (784, )

layers.Dense(784, activation="relu"),

layers.Reshape((28, 28, 1))# (784, ) -> (28, 28, 1)

])

Training the model:

model.compile(loss="mse", optimizer="adam", metrics=["accuracy"])

# print(model.summary())

epochs = 15

batch_size = 128

# train model

model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs)

predictions = model.predict(x_test)

# display model [input <> output]

for i in range(2): #for easy calculations

fig, (ax1, ax2) = plt.subplots(1, 2)

ax1.set_title("before model")

ax1.imshow(x_test[i], cmap=plt.get_cmap("gray"))

ax2.set_title("after model")

ax2.imshow(predictions[i], cmap=plt.get_cmap("gray"))

plt.show()

Output:

Frequently Asked Questions

What is the difference between autoencoders that are overcomplete and those that are undercomplete?

When the dimension of the code or latent representation is greater than the dimension of the input, the autoencoder is referred to as an overcomplete autoencoder. When the dimension of the code or latent representation is less than the dimension of the input, the autoencoder is referred to as an undercomplete autoencoder.

What is a bottleneck, exactly?

The autoencoders convert data from a high-dimensional format to a low-level format. The term "latent representation" or "bottleneck" refers to this low-level data representation. Only useful and important features that represent the input are included in the bottleneck.

What makes autoencoders and PCA so different?

The distinction between the autoencoder and the PCA is that the PCA utilizes a linear transformation to reduce dimensionality, whereas the autoencoder uses a nonlinear transformation.

What are Variational Autoencoders?

A variational autoencoder (VAE) describes an observation in latent space in a probabilistic manner. Rather than creating an encoder that produces a single value to describe each latent state characteristic, we'll create an encoder that produces a probability distribution for each latent state property.

Conclusion

Denoising Autoencoders are an essential tool for feature extraction and selection. You saw an autoencoder model in this blog that can successfully clean extremely noisy photos that it has never seen before (we used the test dataset). Our algorithm does a good job of recovering them.

Here you can check the implementation where they utilize Theano to create a very rudimentary Denoising Autoencoder and train it on the MNIST dataset.

The world of machine learning is vast, to learn more about machine learning check out our machine learning course at coding ninjas.