Introduction

Anyone learning maths or physics has probably come across matrices at some point. Matrices are vital in many situations, such as solving differential equations or describing linear transformations.

In these applications, however, we often have to use the same matrix again and again, each time with a different scalar constant. Recomputing the matrices from scratch every time wastes a lot of effort, so is there a more efficient way to handle this?

The answer is yes. We can use EigenVectors and EigenValues to solve this problem.

We will learn more about these terms as we move through the blog, so let's get straight to the topic.

EigenValues and Their Properties

EigenValues and EigenVectors are closely related. EigenValues are a special set of scalars associated with a square matrix, and they show up when solving systems of linear equations and differential equations. "Eigen" is a German word meaning "own", "characteristic", or "proper".

So EigenValues are also called the characteristic values (or characteristic roots) of a matrix.

Put simply, an EigenValue is the scalar factor by which a matrix stretches or shrinks its EigenVectors.

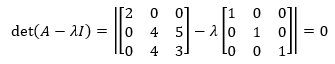

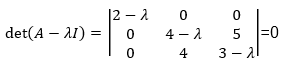

The basic equation representing these values is:

Ax = λx

The scalar λ is called an EigenValue of the matrix A.
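To see the equation in action, here is a minimal sketch in plain Python that only handles the 2×2 case. It assumes the matrix is stored as nested lists, and the helper names `mat_vec` and `eigenvalues_2x2` are illustrative, not taken from any library. The EigenValues are found as the roots of the characteristic equation det(A − λI) = 0, and then Ax = λx is checked for one matching vector.

```python
import cmath


def mat_vec(A, x):
    """Multiply a 2x2 matrix (nested lists) by a 2-element vector."""
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def eigenvalues_2x2(A):
    """Roots of the characteristic equation det(A - λI) = 0.

    For a 2x2 matrix this is λ^2 - trace(A)·λ + det(A) = 0.
    """
    trace = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    # cmath.sqrt also handles a negative discriminant (complex EigenValues)
    disc = cmath.sqrt(trace * trace - 4 * det)
    return (trace + disc) / 2, (trace - disc) / 2


A = [[2, 1],
     [1, 2]]
print(eigenvalues_2x2(A))  # ((3+0j), (1+0j))

# x = [1, 1] satisfies Ax = λx with λ = 3: both sides are [3, 3].
x = [1, 1]
print(mat_vec(A, x), [3 * xi for xi in x])  # [3, 3] [3, 3]
```

For this A, the transformation leaves the direction of [1, 1] alone and simply multiplies it by 3.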

EigenVectors

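EigenVectors are the vectors whose direction does not change when the linear transformation represented by a matrix is applied to them; they are only scaled by a scalar factor. (A negative factor flips the vector, but it still stays on the same line through the origin.)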

An EigenVector must be a non-zero vector. EigenVectors give the directions in which the transformation stretches, whereas EigenValues give the factor by which it stretches along each of those directions.
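A quick way to check the "direction does not change" idea in 2D: two vectors lie on the same line through the origin exactly when their 2D cross product u₀v₁ − u₁v₀ is zero. The sketch below is plain Python with illustrative helper names, and the diagonal matrix is just a hypothetical example chosen so the stretching is easy to see. It applies A to an EigenVector and to an ordinary vector and compares their directions.

```python
def mat_vec(A, x):
    """Multiply a 2x2 matrix (nested lists) by a 2-element vector."""
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def is_parallel(u, v, tol=1e-12):
    """True if 2-vectors u and v lie on the same line through the origin."""
    return abs(u[0] * v[1] - u[1] * v[0]) < tol


A = [[3, 0],
     [0, 1]]  # stretches the x-axis by 3 and leaves the y-axis unchanged

eigvec = [1, 0]  # EigenVector with EigenValue 3
other = [1, 1]   # not an EigenVector of A

print(is_parallel(mat_vec(A, eigvec), eigvec))  # True: [3, 0] is 3 * [1, 0]
print(is_parallel(mat_vec(A, other), other))    # False: [3, 1] is not a multiple of [1, 1]
```

The EigenVector keeps its direction and is stretched by the EigenValue 3, while [1, 1] is rotated towards the x-axis.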

The Eigenspace of an EigenValue λ consists of all the EigenVectors that have that EigenValue, together with the zero vector.

In the equation:

Ax = λx

A is the matrix, x is the EigenVector corresponding to A, and λ is the EigenValue.

For a single EigenValue there are infinitely many EigenVectors, because any non-zero scalar multiple of an EigenVector is also an EigenVector.
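We can check this numerically for the 2×2 matrix A = [[2, 1], [1, 2]], which has the EigenVector [1, 1] with EigenValue 3. The `is_eigenpair` helper is a hypothetical name used only for this sketch, and a loop can only sample a few multiples, so it illustrates the claim rather than proving it.

```python
def mat_vec(A, x):
    """Multiply a 2x2 matrix (nested lists) by a 2-element vector."""
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def is_eigenpair(A, x, lam, tol=1e-9):
    """True if x is non-zero and Ax = λx up to floating-point tolerance."""
    if all(abs(xi) < tol for xi in x):
        return False  # the zero vector is never an EigenVector
    Ax = mat_vec(A, x)
    return all(abs(a - lam * xi) < tol for a, xi in zip(Ax, x))


A = [[2, 1],
     [1, 2]]
x = [1, 1]  # EigenVector for λ = 3

for c in [1, 2, -0.5, 10]:
    scaled = [c * xi for xi in x]
    print(c, is_eigenpair(A, scaled, 3))  # True for every non-zero c

print(is_eigenpair(A, [0, 0], 3))  # False: the zero vector is excluded
```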

EigenVectors that correspond to different EigenValues are linearly independent.
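For two vectors in 2D, linear independence is easy to test: they are independent exactly when the determinant of the 2×2 matrix with those vectors as columns is non-zero. This small sketch uses illustrative helper names and the matrix A = [[2, 1], [1, 2]], whose EigenVectors [1, 1] and [1, -1] belong to the different EigenValues 3 and 1.

```python
def mat_vec(A, x):
    """Multiply a 2x2 matrix (nested lists) by a 2-element vector."""
    return [A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1]]


def det2(u, v):
    """Determinant of the 2x2 matrix with columns u and v.

    It is non-zero exactly when u and v are linearly independent.
    """
    return u[0] * v[1] - u[1] * v[0]


A = [[2, 1],
     [1, 2]]
x1 = [1, 1]   # A·x1 = 3·x1
x2 = [1, -1]  # A·x2 = 1·x2

assert mat_vec(A, x1) == [3, 3]
assert mat_vec(A, x2) == [1, -1]
print(det2(x1, x2))  # -2: non-zero, so x1 and x2 are linearly independent
```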

Properties of EigenValues

- EigenValues of Hermitian and real symmetric matrices are always real.

- EigenVectors corresponding to different EigenValues are linearly independent.

- A square matrix is singular if and only if 0 is one of its EigenValues.

- For an invertible (non-singular) square matrix, λ = 0 is never an EigenValue.

- If λ is an EigenValue of the square matrix A and a is a scalar, then aλ is an EigenValue of the scalar multiple aA.

- If λ is an EigenValue of the square matrix A and n ≥ 0 is an integer, then λⁿ is an EigenValue of Aⁿ.

- If λ is an EigenValue of the square matrix A and p(x) is a polynomial in the variable x, then p(λ) is an EigenValue of p(A).

- If λ is an EigenValue of an invertible square matrix A, then λ⁻¹ = 1/λ is an EigenValue of A⁻¹.

- If λ is an EigenValue of the square matrix A, then λ is also an EigenValue of its transpose Aᵀ.

- The sum of the EigenValues of A, counted with multiplicity, equals the trace of A (the sum of its diagonal entries).

- For two square matrices A and B of the same order, AB and BA have the same EigenValues.
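Several of these properties can be spot-checked numerically. Below is a minimal pure-Python sketch restricted to 2×2 matrices, where the EigenValues come straight from the characteristic equation λ² − trace(A)·λ + det(A) = 0. The helper names (`eigenvalues_2x2`, `mat_mul`, `close`, `sort_c`) and the example matrices are our own illustrative choices. A handful of examples is a sanity check, not a proof; for larger matrices you would normally reach for a numerical linear algebra library instead.

```python
import cmath


def sort_c(zs):
    """Sort (possibly complex) numbers by real part, then imaginary part."""
    return sorted(zs, key=lambda z: (z.real, z.imag))


def eigenvalues_2x2(A):
    """EigenValues of a 2x2 matrix: roots of λ^2 - trace(A)·λ + det(A) = 0."""
    trace = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = cmath.sqrt(trace * trace - 4 * det)
    return sort_c([(trace + disc) / 2, (trace - disc) / 2])


def mat_mul(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def close(xs, ys, tol=1e-9):
    """True if two equal-length lists of numbers agree element-wise within tol."""
    return len(xs) == len(ys) and all(abs(x - y) < tol for x, y in zip(xs, ys))


A = [[4, 1],
     [2, 3]]  # EigenValues 2 and 5
B = [[1, 2],
     [0, 3]]
lams = eigenvalues_2x2(A)

# Trace: 2 + 5 equals 4 + 3.
print(abs(sum(lams) - (A[0][0] + A[1][1])) < 1e-9)  # True

# Scalar multiple: EigenValues of 2A are 2λ.
two_A = [[2 * v for v in row] for row in A]
print(close(eigenvalues_2x2(two_A), sort_c([2 * l for l in lams])))  # True

# Power: EigenValues of A² are λ².
print(close(eigenvalues_2x2(mat_mul(A, A)), sort_c([l ** 2 for l in lams])))  # True

# Inverse: det(A) = 10 is non-zero, so A⁻¹ exists and its EigenValues are 1/λ.
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
A_inv = [[A[1][1] / det, -A[0][1] / det],
         [-A[1][0] / det, A[0][0] / det]]
print(close(eigenvalues_2x2(A_inv), sort_c([1 / l for l in lams])))  # True

# Transpose: A and Aᵀ share their EigenValues.
A_T = [[A[j][i] for j in range(2)] for i in range(2)]
print(close(eigenvalues_2x2(A_T), lams))  # True

# AB and BA share their EigenValues.
print(close(eigenvalues_2x2(mat_mul(A, B)), eigenvalues_2x2(mat_mul(B, A))))  # True

# A singular matrix (det = 0) has 0 as an EigenValue.
S = [[1, 2],
     [2, 4]]
print(any(abs(l) < 1e-12 for l in eigenvalues_2x2(S)))  # True
```

The 2×2 restriction keeps everything readable: the characteristic equation is a quadratic, so no iterative solver is needed.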