Introduction

You may have heard of hallucinations in people: the apparent perception of something that is not actually present. But what does hallucination mean in computer vision? A vision model hallucinates when its output describes something that is not supported by the input, such as an object that does not appear in the image.

To understand hallucination in computer vision better, let us look at one task where it has been studied closely: image captioning.

Image Captioning And Hallucinations

Image captioning has been the subject of extensive research, yet even strong models hallucinate. For example, Neural Baby Talk (NBT), an image captioning model, incorrectly mentions a "bench" in its captions for numerous images. Mentioning an object that is not in the image is called object hallucination. One of the most important uses of image captioning is helping blind and visually impaired people understand the images and the world around them. Several studies have found that visually impaired users value the accuracy of a caption more than how much of the image it covers. As a result, object hallucination is a serious concern: a caption that names objects that are not there can mislead exactly the people who rely on it most.

Hallucination also points to a deeper problem: a model that hallucinates tends to build an inaccurate internal representation of the visual scene. Researchers are investigating several questions about it, including:

- Which models are most likely to experience hallucinations?

- What are some of the most common causes of hallucination?

- How well do established captioning metrics capture hallucination?

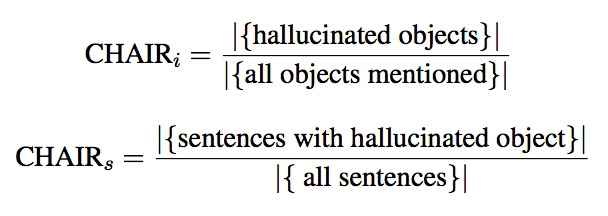

By analyzing a range of captioning models, the researchers were able to address each of these questions. They introduced CHAIR (Caption Hallucination Assessment with Image Relevance), a new metric that measures how well the objects mentioned in generated captions are grounded in the image. Because hallucinations can have several causes, they also proposed image consistency and language consistency scores, which indicate whether a model's errors are better explained by what it sees or by what its language model expects. These scores help reveal how much of the problem stems from the language model. Finally, the researchers point out that many of the standard metrics the field relies on fail to capture and account for hallucination.
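To make the idea behind CHAIR concrete, here is a minimal Python sketch of its two commonly reported forms: CHAIR_i, the fraction of mentioned objects that are hallucinated, and CHAIR_s, the fraction of captions that contain at least one hallucinated object. It is a simplified illustration, not the researchers' implementation. The tiny `SYNONYMS` table, the regex tokenizer, and the function names `mentioned_objects` and `chair_scores` are hypothetical; the published metric works over the MSCOCO object categories with a much larger synonym list and takes ground-truth objects from dataset annotations. This sketch also counts each object category at most once per caption.

```python
import re

# Hypothetical synonym table mapping caption words to canonical object
# categories. The real CHAIR setup uses the MSCOCO categories and a far
# larger synonym list; this small map exists only for illustration.
SYNONYMS = {
    "bench": "bench",
    "benches": "bench",
    "woman": "person",
    "man": "person",
    "person": "person",
    "people": "person",
    "phone": "cell phone",
    "cellphone": "cell phone",
    "dog": "dog",
    "dogs": "dog",
}


def mentioned_objects(caption: str) -> set[str]:
    """Return the canonical object categories a caption mentions (hypothetical helper)."""
    words = re.findall(r"[a-z]+", caption.lower())
    return {SYNONYMS[w] for w in words if w in SYNONYMS}


def chair_scores(captions: list[str], ground_truth: list[set[str]]) -> tuple[float, float]:
    """Compute (CHAIR_i, CHAIR_s) for parallel lists of captions and
    ground-truth object sets, one set per image (hypothetical helper)."""
    total_mentions = 0          # objects mentioned across all captions
    hallucinated_mentions = 0   # mentioned objects absent from the ground truth
    hallucinated_captions = 0   # captions with at least one hallucinated object
    for caption, gt_objects in zip(captions, ground_truth):
        objects = mentioned_objects(caption)
        hallucinated = objects - gt_objects
        total_mentions += len(objects)
        hallucinated_mentions += len(hallucinated)
        if hallucinated:
            hallucinated_captions += 1
    chair_i = hallucinated_mentions / total_mentions if total_mentions else 0.0
    chair_s = hallucinated_captions / len(captions) if captions else 0.0
    return chair_i, chair_s


if __name__ == "__main__":
    # Illustrative data: the first caption mentions a bench that is not in the image.
    captions = [
        "A woman talking on a phone while sitting on a bench.",
        "A dog running on the grass.",
    ]
    ground_truth = [{"person", "cell phone"}, {"dog"}]
    print(chair_scores(captions, ground_truth))  # prints (0.25, 0.5)
```

In this toy example, one of the four mentioned objects ("bench") is hallucinated, giving CHAIR_i = 0.25, and one of the two captions contains a hallucination, giving CHAIR_s = 0.5. Lower values mean fewer hallucinations.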