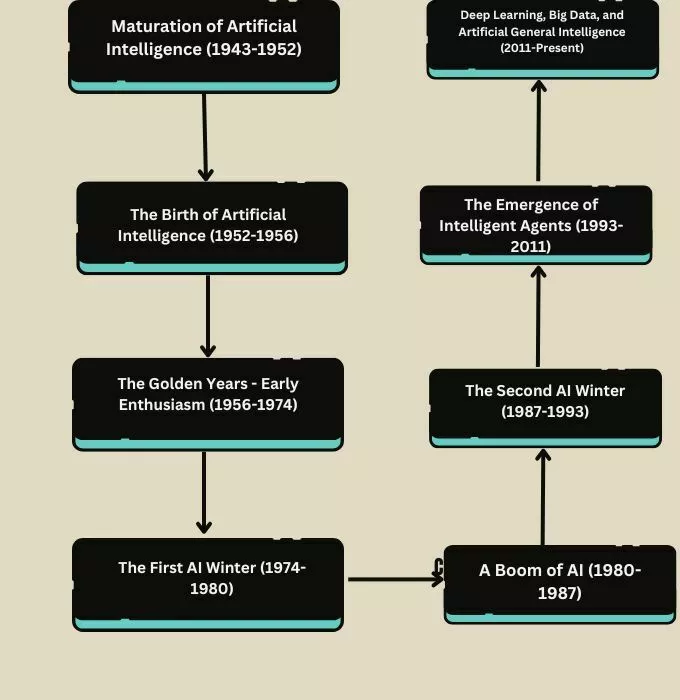

Maturation of Artificial Intelligence (1943-1952)

The journey of Artificial Intelligence began way back in the 1940s, not with fancy robots or talking machines, but with a group of scientists, mathematicians, and philosophers pondering a simple yet profound question: Can machines think? A key development of this period was the creation of the first electronic computers, which laid the groundwork for AI.


One notable figure was Alan Turing, a British mathematician. In his 1950 paper "Computing Machinery and Intelligence," he proposed what is now called the Turing Test, a way to judge whether a machine can exhibit intelligent behavior indistinguishable from a human's. This was a big deal because it gave people a practical way to think about, and try to measure, intelligence in machines.


Even earlier, in 1943, Warren McCulloch and Walter Pitts proposed a mathematical model of the artificial neuron, the idea behind today's neural networks. Their model showed how simple neuron-like units, each either "firing" or staying silent, could be connected to compute logical functions, suggesting that machines could potentially mimic how the brain processes information.
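To make the idea concrete, here is a minimal sketch of a McCulloch-Pitts-style threshold unit in Python. It takes binary inputs, refuses to fire if any inhibitory input is active, and otherwise fires (outputs 1) only when the number of active excitatory inputs reaches a threshold. The function names (`mp_neuron`, `and_gate`, `or_gate`, `not_gate`) and the use of a constant "always on" input for NOT are illustrative choices, not the notation of the 1943 paper.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0.

    excitatory / inhibitory: lists of binary (0 or 1) inputs.
    Any active inhibitory input vetoes firing.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# With two excitatory inputs, threshold 2 acts like AND and threshold 1 like OR.
def and_gate(a, b):
    return mp_neuron([a, b], [], threshold=2)


def or_gate(a, b):
    return mp_neuron([a, b], [], threshold=1)


# Assumption for illustration: a constant active input plus one inhibitory input gives NOT.
def not_gate(a):
    return mp_neuron([1], [a], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
print(not_gate(0), not_gate(1))
```

Changing only the threshold turns the same unit from AND into OR, which is the kind of observation that made these simple units look like building blocks for more complex "thinking" machines.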


Despite these groundbreaking ideas, the technology needed to make AI a reality was still in its infancy. Computers were huge, expensive, and not widely available. Plus, they didn't have the processing power needed for complex calculations. So, while the ideas were there, turning them into working AI was still a bit out of reach.

- In essence, the period from 1943 to 1952 was all about planting seeds. Scientists and thinkers laid the foundational theories and concepts of AI, setting the stage for the incredible advancements that would follow.

The Birth of Artificial Intelligence (1952-1956)

Between 1952 and 1956, we saw what you might call the first real steps of artificial intelligence. This is when AI moved from just an idea to something people actually started working on. It's like when you've been planning a big project, and you finally start putting those plans into action.


One of the key moments came when Arthur Samuel, working at IBM, created a program that could play checkers. Now, this might not sound like a big deal, but it was huge at the time. The program could not only play the game but also learn from experience, getting better over time. This was one of the first examples of what we now call machine learning, where a computer program improves through experience; Samuel himself coined the term "machine learning" in 1959.


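Then came the Dartmouth Conference in 1956, which is often considered the official birthdate of artificial intelligence as a field. A group of researchers, including John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, got together at Dartmouth College around a bold idea: that every aspect of learning or intelligence could, in principle, be described so precisely that a machine could be made to simulate it. McCarthy's proposal for the event is also where the term "artificial intelligence" was coined. It was an ambitious claim, but it kicked off serious research into making machines that could think and learn.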


During these years, the focus was on creating programs that could solve problems and learn. Researchers were optimistic, and there was a sense that AI could quickly tackle complex problems. Programs such as the Logic Theorist (1956), built by Allen Newell, Herbert Simon, and Cliff Shaw, proved theorems from Whitehead and Russell's Principia Mathematica, and early projects also tried to get computers to translate between languages. These were big steps forward.

- So, the period from 1952 to 1956 was when AI took its first baby steps. People began to create actual AI programs, and the field of artificial intelligence officially got its name and its mission.

The Golden Years - Early Enthusiasm (1956-1974)

After the Dartmouth Conference, the field of artificial intelligence entered a period of high hopes and big dreams, often called the "golden years." During this time, researchers made significant advances, and there was a lot of excitement about what AI could achieve.


One of the standout projects was ELIZA, developed by Joseph Weizenbaum at MIT in the mid-1960s. Its best-known script, DOCTOR, played the role of a psychotherapist chatting with a patient. ELIZA worked by matching keywords in what the user typed and turning the user's own words back into questions. Although it was very basic by today's standards, it was one of the first programs to process natural language, and some users felt as if they were talking to a human, which was pretty impressive back then.
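ELIZA spotted keywords or patterns in the user's input and reflected the user's own words back as a question. The Python sketch below imitates that style with a handful of regular-expression rules. The rules, the pronoun-swapping table, and the function names are invented for illustration; they are far simpler than Weizenbaum's original DOCTOR script.

```python
import re

# Swap first- and second-person words so echoed phrases read naturally.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "are": "am",
}

# (pattern, response template) pairs, tried in order. Illustrative rules only.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT_REPLY = "Please go on."


def reflect(fragment):
    """Swap pronouns word by word using REFLECTIONS; other words pass through."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())


def respond(text):
    """Reply using the first rule whose pattern matches the whole input."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_REPLY


print(respond("I need a holiday"))        # Why do you need a holiday?
print(respond("I am sad about my job."))  # How long have you been sad about your job?
print(respond("My mother called."))       # Tell me more about your family.
print(respond("The weather is nice"))     # Please go on.
```

There is no understanding here at all, only pattern matching and string substitution, which is why ELIZA's apparent intelligence surprised even its creator.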


Around 1970, another program called SHRDLU, created by Terry Winograd at MIT, could understand and respond to English commands within a simulated "blocks world." If you told SHRDLU to move a block or asked where something was, it could understand and act accordingly. This was a big step forward in getting computers to understand and use human language, even if only inside a tightly controlled environment.


Researchers were also making progress in solving complex math problems and improving how computers could learn and make decisions. There was a feeling that AI could soon replicate many human abilities, and the possibilities seemed endless.


This period was marked by generous funding for AI research, much of it from government agencies such as the U.S. Advanced Research Projects Agency (ARPA, later renamed DARPA), which were eager to see where this new technology could go. Optimism was high, and it felt like AI was on the brink of becoming part of everyday life.

- The golden years of AI were a time of groundbreaking developments and growing confidence in the field. Researchers were pushing the boundaries of what machines could do, fueled by the belief that AI could transform the world.

The First AI Winter (1974-1980)

After the initial excitement, the field of Artificial Intelligence hit its first major setback, a period often called the "AI Winter." This was a time when the high hopes and big promises of AI started to crumble under the weight of reality. Funding dried up, and the public's interest waned as the challenges of AI became more apparent.


One of the main reasons for this downturn was the realization that AI research had underestimated the complexity of mimicking human intelligence. Early AI systems could perform well on specific tasks but couldn't adapt to new or broader challenges. It was like having a tool that worked perfectly for one job but was useless for anything else.


Another issue was the limitations of the technology at the time. Computers in the 1970s were far less powerful than what we have today. They were slower, had less memory, and couldn't process information as efficiently. This made it difficult to develop more advanced AI systems that could learn and adapt.


Funding for AI research also took a hit. Both government and private investors became skeptical of AI's potential; in the UK, for example, the 1973 Lighthill Report sharply criticized AI research for failing to deliver on its promises, and funding was cut back. Without the necessary funds, many AI projects couldn't continue, and progress in the field slowed down.


Despite these challenges, the "AI Winter" wasn't all bad. It served as a wake-up call for the AI community. Researchers realized they needed to adjust their expectations and approaches. They began to focus on more achievable goals and started laying the groundwork for the AI systems we have today.

- In summary, the First AI Winter was a tough time for artificial intelligence. But it was also a period of reflection and recalibration, helping to set the stage for future advancements.

A Boom of AI (1980-1987)

Just when things seemed difficult for Artificial Intelligence, the 1980s brought a fresh wave of optimism and progress, marking a significant rebound. This period is often seen as a mini-boom for AI, thanks to several key developments that reignited interest and investment in the field.


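One of the game-changers was the commercial rise of expert systems. These were AI programs designed to mimic the decision-making of a human expert in a specific field, such as medicine or engineering, by encoding that expert's knowledge as if-then rules. A famous early example was MYCIN, developed at Stanford University in the 1970s, which could identify bacteria causing serious infections and recommend antibiotics. In the 1980s, businesses put the idea to work; Digital Equipment Corporation's XCON, for instance, automatically configured customer orders for its computer systems. Expert systems showed the practical value of AI in solving real-world problems, which attracted attention from businesses and industries.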
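The core of an expert system is a set of if-then rules plus an inference engine that applies them. The Python sketch below shows a tiny forward-chaining engine: it keeps firing any rule whose conditions are all known facts until no new conclusions appear. The facts and rules are invented toy examples, not real medical knowledge. Note that MYCIN itself reasoned by backward chaining from goals and attached certainty factors to its conclusions, both of which this sketch omits.

```python
# Each rule: (set of facts that must all be known, fact to conclude).
# Hypothetical toy rules for illustration only.
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "bacterial_culture_positive"}, "bacterial_infection"),
    ({"bacterial_infection"}, "recommend_antibiotic_review"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts are derived; return all known facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule only if every condition is already a known fact.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


result = forward_chain({"fever", "cough", "bacterial_culture_positive"}, RULES)
print(sorted(result))
```

Because the knowledge lives in the `RULES` list rather than in the engine, adding expertise means adding rules. That separation is what made expert systems attractive, and, as the Second AI Winter showed, hand-maintaining thousands of such rules is also what made them expensive.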


The hardware also got a significant upgrade during this time. The development of faster and more powerful computers meant that AI systems could handle more complex tasks and process larger amounts of data. This was crucial for running more sophisticated AI programs.


Another boost came from increased funding, especially from governments and corporations that started to see the potential benefits of AI. Japan's Fifth Generation Computer Systems project, launched in 1982, spurred other countries to invest, and in the United States the Defense Advanced Research Projects Agency (DARPA) invested heavily in AI research, contributing to significant advancements.


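This period also saw renewed interest in machine learning, a subset of AI that focuses on algorithms that let computers learn from data and make predictions or decisions based on it. In 1986, David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm, which made it practical to train multi-layer neural networks from examples. This data-driven approach would eventually move the field away from the hand-written, rule-based systems that dominated the early years of AI research.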

- The mini-boom of the 1980s was an important time for AI, showing the world that AI had practical applications and could bring real value to society. It set the stage for the rapid advancements in AI technology that we see today.

The Second AI Winter (1987-1993)

Following the excitement of the 1980s, Artificial Intelligence faced another challenging period known as the Second AI Winter. This was a time when expectations for AI once again exceeded its actual capabilities, leading to disappointment and a significant reduction in funding and interest.


One of the key issues was the limitations of the expert systems that had been so popular in the early '80s. While these systems were useful in specific contexts, they struggled with tasks outside their narrow area of expertise. They required a lot of manual effort to update and maintain, which made them less appealing to businesses as the initial excitement wore off.


Another problem was the hardware limitations of the time. Even though computers had become more powerful, they still couldn't handle the complex computations needed for more advanced AI applications. This limited the progress that could be made in developing more sophisticated AI systems.


The hype from the previous boom also set unrealistic expectations. Stories of what AI could achieve led to a bubble of sorts, with investments flowing into projects that weren't yet feasible. When these projects failed to deliver, both public and private funding dried up, leading to a downturn in AI research.


During this winter, the progress in AI slowed significantly, but it wasn't all bad news. The challenges faced during this period forced researchers to rethink their approaches to AI, leading to new ideas and methodologies that would later contribute to the resurgence of the field.

- Despite the downturn, a dedicated group of researchers continued to work on AI, laying the groundwork for future advancements. This period was a time of reflection and recalibration, reminding the AI community of the importance of setting realistic goals and expectations.

The Emergence of Intelligent Agents (1993-2011)

As the Second AI Winter passed, the late '90s and early 2000s marked a significant shift in the AI landscape. This period is characterized by the emergence of intelligent agents, systems designed to operate autonomously, observe their environment, and take actions to achieve specific goals. These agents became the building blocks for more complex AI applications, laying the groundwork for the smart technologies we use today.
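The observe-decide-act cycle that defines an intelligent agent can be sketched with the classic two-square "vacuum world" toy example often used in AI textbooks. The agent perceives its location and whether that square is dirty, then chooses an action. The `VacuumWorld` class, action names, and agent function below are illustrative assumptions; real agents work with far richer perceptions and decision-making.

```python
class VacuumWorld:
    """A toy environment: two squares, 'A' and 'B', each dirty or clean."""

    def __init__(self, dirty, location="A"):
        self.dirty = dict(dirty)  # e.g. {"A": True, "B": True}
        self.location = location

    def percept(self):
        """What the agent can observe: its current square and whether it is dirty."""
        return self.location, self.dirty[self.location]

    def execute(self, action):
        """Change the environment according to the agent's action."""
        if action == "Suck":
            self.dirty[self.location] = False
        elif action == "Right":
            self.location = "B"
        elif action == "Left":
            self.location = "A"


def reflex_vacuum_agent(percept):
    """A simple reflex agent: choose an action from the current percept alone."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"


def run(world, steps):
    """Run the perceive-act loop for a fixed number of steps; return the actions taken."""
    actions = []
    for _ in range(steps):
        action = reflex_vacuum_agent(world.percept())
        world.execute(action)
        actions.append(action)
    return actions


world = VacuumWorld({"A": True, "B": True})
print(run(world, 4))  # ['Suck', 'Right', 'Suck', 'Left']
print(world.dirty)    # {'A': False, 'B': False}
```

Keeping the environment and the agent separate mirrors the agent view of AI: the same loop works with a smarter agent function, such as one that remembers past percepts or plans ahead, without changing the environment code.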


One of the key developments was the rise of the internet and the exponential increase in digital data. This abundance of information became a rich playground for AI systems, allowing them to learn and adapt in ways that weren't previously possible. Search engines, for example, began using AI to improve how they indexed and retrieved information, making the web more accessible and useful.


Another significant advancement was in the field of robotics. Robots started becoming smarter and more autonomous, capable of performing tasks without constant human oversight. From manufacturing lines to exploration rovers on Mars, intelligent agents began to take on roles that were dangerous, tedious, or impossible for humans.


During this time, AI also made significant progress in natural language processing (NLP), the field concerned with understanding and generating human language. This led to more sophisticated chatbots and virtual assistants, capable of helping with a range of tasks from customer service to scheduling appointments.


The emergence of intelligent agents also saw AI integrating more seamlessly into everyday devices and applications. From personalized recommendations on streaming services to predictive text on smartphones, AI began to subtly enhance our daily interactions with technology, making it more intuitive and user-friendly.

- This era set the stage for the next wave of AI advancements, with intelligent agents becoming an integral part of our digital lives. It was a period of growth and integration, where AI started to move out of research labs and into the real world.

Deep Learning, Big Data, and Artificial General Intelligence (2011-Present)

In the most recent chapter of AI's story, starting around 2011, we've seen an explosion in AI capabilities, largely thanks to two key developments: deep learning and big data. Many believe this era has brought us closer to the long-standing dream of Artificial General Intelligence (AGI) - a type of AI that can understand, learn, and apply knowledge in a way that's similar to how humans do.


Deep learning is a machine learning technique that uses neural networks with many layers (hence "deep"), loosely inspired by the human brain. These networks learn from large amounts of data, picking up complex patterns and using them to make predictions. Given enough data and computing power, much of it supplied by graphics processing units (GPUs), they generally get better at tasks like recognizing speech, translating languages, and identifying objects in images.
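The basic idea of "learning from data" can be shown with a single artificial neuron trained by gradient descent. In the sketch below, a sigmoid neuron learns logical OR from four labelled examples instead of having the rule written by hand: after each example it nudges its weights in the direction that reduces the prediction error (per-example, or stochastic, gradient descent on the log loss). This is an assumption-laden toy: a deep network stacks many layers of such units and trains them together with backpropagation, which this sketch does not attempt, and the learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs=2000, lr=0.5):
    """Fit the weights and bias of one sigmoid neuron by per-example gradient descent.

    data: list of (inputs, target) pairs with targets 0 or 1.
    """
    n = len(data[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            prediction = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
            # For a sigmoid output with log loss, the gradient w.r.t. the
            # pre-activation is simply (prediction - target).
            error = prediction - target
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Classify as 1 if the neuron's output is at least 0.5, else 0."""
    return 1 if sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias) >= 0.5 else 0


# Learn logical OR from labelled examples rather than hand-coding it.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_neuron(OR_DATA)
print([predict(w, b, x) for x, _ in OR_DATA])  # [0, 1, 1, 1]
```

A single neuron can only learn patterns that a straight line can separate; stacking many layers is what lets deep networks capture the far more complex patterns found in speech and images.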


The rise of big data has gone hand-in-hand with deep learning. In our digital world, we generate huge amounts of data every day - from social media posts to online transactions. This data is fuel for AI systems, allowing them to learn from a vast array of examples. It's this combination of deep learning and big data that's behind many of the AI breakthroughs we've seen in recent years.


One of the most publicized milestones in this era came in 2016, when AlphaGo, developed by Google's DeepMind, defeated Lee Sedol, one of the world's strongest Go players, four games to one. Go is a complex board game with far more possible positions than chess, and this victory was a clear sign of how far AI had come in mastering complex strategic tasks.


Today, AI is not just about performing specific tasks; it's about integrating intelligence into a wide range of applications, from self-driving cars to personalized medicine. These systems learn from data, and many of them keep improving as they are retrained on new data, opening up new possibilities for AI applications.


The current era is marked by a cautious optimism. While we celebrate the advancements, there's also an awareness of the ethical and societal implications of AI. As we inch closer to creating more advanced forms of AI, the focus has also shifted towards ensuring these technologies are developed responsibly and for the benefit of all.

- This period in AI's history is still being written, with each breakthrough bringing us closer to understanding what it means to create intelligent machines. It's an exciting time to be part of this journey, witnessing firsthand the unfolding future of AI. Today AI is a normal part of life, from famous tools like ChatGPT and Bard (since renamed Gemini) to more specialized AI systems; many of us rely on AI every day, and it keeps getting better. This journey was not easy, and we owe thanks to all the scientists and others who believed in the power of AI. Because of them, we are here today celebrating AI's power and its tools.

Frequently Asked Questions

What is the difference between AI and machine learning?

AI is a broad field focused on creating machines capable of performing tasks that typically require human intelligence. Machine learning is a subset of AI that involves teaching computers to learn from data and improve over time without being explicitly programmed for each task.

Can AI surpass human intelligence?

Currently, AI excels in specific tasks but lacks the general understanding and adaptability of human intelligence. The concept of Artificial General Intelligence (AGI), where AI matches or surpasses human intelligence across the board, remains a theoretical possibility and a subject of ongoing research.

How is AI impacting jobs?

AI is transforming many industries, automating routine tasks but also creating new job opportunities that require more complex human skills like creativity, problem-solving, and emotional intelligence. The impact on jobs is significant, emphasizing the need for skills adaptation and lifelong learning.

Conclusion

In this article, we talked briefly about the history of AI, from its early conceptual stages to the era of deep learning and big data. We've seen how AI evolved through periods of optimism and setbacks, leading to the sophisticated systems we interact with today. From the foundational work in neural networks to the breakthroughs in machine learning, each chapter of AI's history has built upon the last, pushing the boundaries of what's possible.

You can refer to our guided paths on Coding Ninjas. You can check our course to learn more about DSA, DBMS, Competitive Programming, Python, Java, JavaScript, etc. Also, check out some of the Guided Paths on topics such as Data Structures and Algorithms, Competitive Programming, Operating Systems, Computer Networks, DBMS, System Design, etc., as well as some Contests, Test Series, and Interview Experiences curated by top industry experts.