Introduction

Feedforward neural networks are inspired by biological neural networks, in which enormous numbers of interconnected neurons pass signals to one another. For regression or binary classification problems, the last layer of a neural network typically contains only one unit. A single unit on its own, however, can only represent simple input-output relationships, so feedforward networks arrange many such units into layers, loosely modeled on the architecture of human nerves.

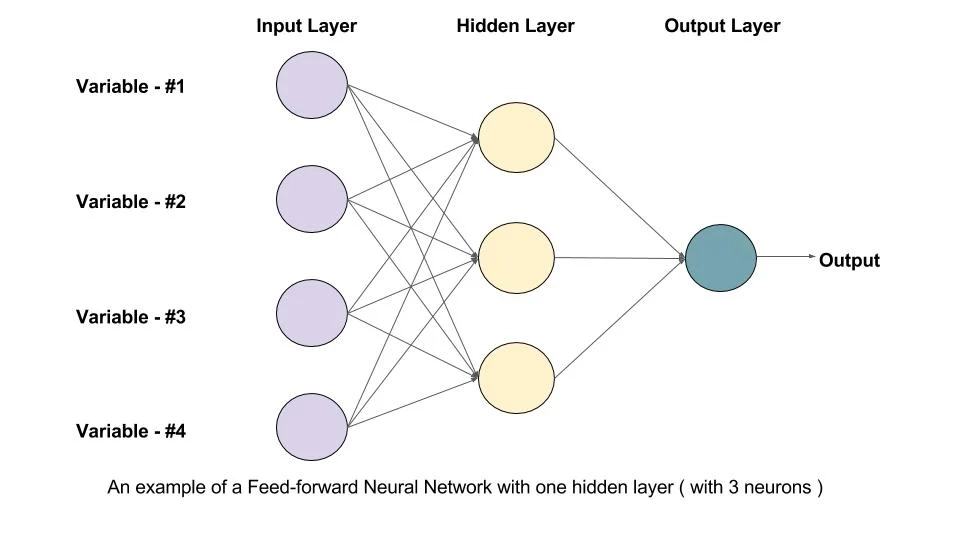

To understand the image above, consider an everyday example. Suppose you are walking down the road and suddenly encounter a snake in front of you. The sight of the snake is the input, which corresponds to the input layer. Your brain then processes this information and considers possible responses: running away, beating the snake with a stick, waiting until it moves off in another direction, or screaming. This processing corresponds to the hidden layers. Finally, from all the possible responses you select the one that best fits your situation; this corresponds to the output layer. Now let's explore the architecture and develop a simple model.

Feedforward Architecture

A Feedforward Neural Network is an artificial neural network in which the connections between nodes do not form a cycle. This contrasts with a recurrent neural network, in which some connections form cycles. The Feedforward model is the simplest form of neural network because information flows in only one direction: although data may pass through multiple hidden nodes, it always moves forward, from the input layer toward the output layer, and never backward.
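To make the one-directional flow concrete, here is a minimal sketch of a forward pass in plain Python. The helper names (`dense`, `feedforward`), the ReLU hidden activation, and the weight values are illustrative assumptions, not part of the original text. Each layer computes a weighted sum plus a bias for every unit and applies an activation, and its output becomes the next layer's input; nothing is ever passed back to an earlier layer.

```python
def relu(z):
    # Assumed activation for the hidden layer; the text does not prescribe one.
    return max(0.0, z)

def identity(z):
    return z

def dense(x, weights, biases, activation):
    """One fully connected layer: activation(weighted sum + bias) for every unit."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def feedforward(x, layers):
    """Pass x through the layers in order; no output is fed back to an earlier layer."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# A 2-input network with one hidden layer of 2 units and a single output unit.
layers = [
    ([[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu),  # hidden layer
    ([[1.0, 0.5]], [0.25], identity),                 # output layer
]
print(feedforward([1.0, 2.0], layers))  # prints [1.25]
```

The first hidden unit's weighted sum is -1.5, which ReLU clips to 0; the second is 2.0, so the output unit computes 0.5 * 2.0 + 0.25 = 1.25.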

A Feedforward Neural Network is commonly seen in its simplest form as a single-layer perceptron. In this model, a series of inputs enter the layer and each is multiplied by its weight. The products are then added together to give the weighted sum of the inputs. If this sum is above a specific threshold, usually set at zero, the output is 1; if it falls below the threshold, the output is -1.
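The threshold rule just described fits in a few lines. This is a minimal sketch; the function name `perceptron_output` is hypothetical, and mapping a sum exactly equal to the threshold to -1 is an assumption, since the text only covers sums above or below it.

```python
def perceptron_output(inputs, weights, threshold=0.0):
    """Single-layer perceptron: +1 if the weighted sum exceeds the threshold, else -1."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # A sum exactly equal to the threshold is mapped to -1 here (an arbitrary choice).
    return 1 if weighted_sum > threshold else -1

print(perceptron_output([1.0, 1.0], [0.5, 0.5]))   # weighted sum 1.0  -> prints 1
print(perceptron_output([1.0, -1.0], [0.5, 1.0]))  # weighted sum -0.5 -> prints -1
```

Changing the threshold changes what the unit computes: with weights `[1, 1]` and a threshold of 1.5, it outputs 1 only when both 0/1 inputs are 1, which is a logical AND.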

The single-layer perceptron is an important model of Feedforward neural networks and is often used in classification tasks. Furthermore, single-layer perceptrons can learn their weights from data. Using a learning rule known as the delta rule, the network compares the outputs of its nodes with the intended values and adjusts its weights during training to produce more accurate outputs. This training procedure is a form of gradient descent.
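A minimal sketch of the delta rule follows, assuming the Widrow-Hoff (LMS) form: the error is measured on the unit's linear weighted sum, and the threshold is applied only when making predictions, because the step function itself has no useful gradient. Each update moves the weights a small step down the gradient of the squared error. The function names, the learning rate of 0.05, the 300 epochs, and the AND dataset are illustrative choices, not taken from the text.

```python
def predict(weights, bias, x):
    # Threshold the weighted sum at zero, as in the single-layer perceptron.
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else -1

def train_delta_rule(samples, n_inputs, learning_rate=0.05, epochs=300):
    """Delta (Widrow-Hoff) rule: gradient descent on 0.5 * (target - output)**2."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            # Compare the unit's linear output with the intended value.
            output = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = target - output
            # Move each weight in proportion to the error and to its input.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias

# Logical AND with targets encoded as +1 / -1.
and_samples = [([0, 0], -1), ([0, 1], -1), ([1, 0], -1), ([1, 1], 1)]
weights, bias = train_delta_rule(and_samples, n_inputs=2)
print([predict(weights, bias, x) for x, _ in and_samples])  # prints [-1, -1, -1, 1]
```

For this dataset the least-squares weights are w1 = w2 = 1 with bias -1.5, and the trained values end up close to those, so thresholding the weighted sum at zero reproduces AND.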

In multi-layered perceptrons, the process of updating weights is analogous, but it is known more specifically as back-propagation. Here the error measured at the final layer is propagated backward through the network, and the weights of each hidden layer are adjusted according to their contribution to that error.
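The backward pass can be sketched for a small network. This minimal example assumes one hidden layer of sigmoid units, a single linear output unit, and a squared-error loss; these choices and all function names are illustrative assumptions. The error signal is computed at the output, then sent back to each hidden unit, scaled by that unit's outgoing weight and by the sigmoid derivative, and every weight takes one gradient-descent step.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, seed=0):
    """Small random weights for one sigmoid hidden layer and one linear output unit."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    b2 = 0.0
    return W1, b1, w2, b2

def forward(params, x):
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sum(w * hj for w, hj in zip(w2, h)) + b2
    return h, y

def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2

def backprop(params, x, target):
    """Gradients of the squared-error loss with respect to every weight and bias."""
    _, _, w2, _ = params
    h, y = forward(params, x)
    # Error signal at the final layer: derivative of 0.5 * (y - target)**2 w.r.t. y.
    delta_out = y - target
    grad_w2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # Propagate the error backward: each hidden unit's share is scaled by its
    # outgoing weight and by the sigmoid derivative h * (1 - h).
    delta_hidden = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(w2, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    grad_b1 = list(delta_hidden)
    return grad_W1, grad_b1, grad_w2, grad_b2

def sgd_step(params, grads, lr):
    """Move every parameter a small step against its gradient."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return W1, b1, w2, b2 - lr * gb2

params = init_params(n_in=2, n_hidden=3)
x, target = [0.5, -1.0], 1.0
before = loss(params, x, target)
params = sgd_step(params, backprop(params, x, target), lr=0.05)
print(before, loss(params, x, target))  # the second value is expected to be smaller
```

A useful sanity check for any hand-written backward pass is to compare one analytic gradient against a finite-difference estimate of the loss. The two should agree to many decimal places.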