Introduction

Before we start with today’s topic, process synchronization, let us understand its two terms individually. What is a process? A process is a program in execution and represents the fundamental unit of work to be carried out in the system. To put it simply, we write our programs in a text file, and when we execute them, they become processes that perform the tasks specified in the program. Now, what is synchronization? It is the coordination of two or more actions or events so that they happen in a well-defined order. Process synchronization is the technique used to handle the problems that arise when multiple cooperative processes execute concurrently.

In this article, we’ll look at what exactly happens when multiple processes run at the same time, what problems this can cause, and how to overcome them.

Process Synchronization

When two or more processes cooperate, the order in which they execute must be controlled; otherwise, their executions may conflict and produce incorrect output.

Let us look at the types of processes that exist and which of them can cause conflicts.

There are basically two types of processes:

- Independent processes

- Cooperative processes

Independent Processes

As the name implies, two or more processes are said to be Independent processes if they are independent of each other. In other words, if the execution of one process does not affect the execution of another, the processes fall under the category of Independent processes. From this, we can conclude that even if multiple independent processes are executed simultaneously, there is no impact on the final outcome.

Cooperative Processes

A cooperative process is one that can affect the execution of another process or be affected by the execution of another process. Such processes must be synchronized in order for their execution order to be guaranteed. Now a question may arise: how can a process be cooperative with another one? For example, two processes that share the same data (a variable, memory, and so on) at the same time are considered Cooperative processes.


Producer-Consumer Problem

A producer process produces information that is consumed by a consumer process. For example, a compiler may produce the assembly code, which is consumed by an assembler. The assembler, in turn, may produce object modules, which are consumed by the loader.

One solution to the producer-consumer problem uses shared memory. To allow producer and consumer processes to run concurrently, we must have available a buffer of items that can be filled by the producer and emptied by the consumer. There are two kinds of buffer:

- Unbounded buffer: It places no practical limit on the size of the buffer. The consumer may have to wait for new items, but the producer can always produce new items.

- Bounded buffer: It assumes a fixed buffer size. In this case, the consumer must wait if the buffer is empty, and the producer must wait if the buffer is full.
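As a rough illustration of the bounded case, here is a minimal single-threaded sketch of a fixed-size circular buffer. The class name `BoundedBuffer` and its fields (`counter`, `head`, `tail`) are hypothetical names chosen for this example, and no synchronization is attempted yet; the return values only mark the points where a real producer or consumer would have to wait.

```python
class BoundedBuffer:
    """Fixed-size circular buffer (single-threaded demo, no synchronization)."""

    def __init__(self, capacity):
        self.items = [None] * capacity
        self.capacity = capacity
        self.counter = 0   # number of items currently stored
        self.head = 0      # index of the next item to consume
        self.tail = 0      # index of the next free slot

    def is_full(self):
        return self.counter == self.capacity

    def is_empty(self):
        return self.counter == 0

    def put(self, item):
        """Producer side: returns False where the producer would have to wait."""
        if self.is_full():
            return False
        self.items[self.tail] = item
        self.tail = (self.tail + 1) % self.capacity
        self.counter += 1
        return True

    def get(self):
        """Consumer side: returns None where the consumer would have to wait."""
        if self.is_empty():
            return None
        item = self.items[self.head]
        self.head = (self.head + 1) % self.capacity
        self.counter -= 1
        return item


buf = BoundedBuffer(2)
results = [buf.put("a"), buf.put("b"), buf.put("c"),
           buf.get(), buf.get(), buf.get()]
print(results)  # [True, True, False, 'a', 'b', None]
```

The third `put` fails because the buffer is full, and the third `get` fails because it is empty; these are exactly the two waiting conditions described for the bounded buffer.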

Let us take an example of a bounded buffer:

In this example, we count the number of items present in the buffer using a variable counter. When the producer adds an item to the buffer, the variable is incremented (counter++), and when the consumer removes an item from the buffer, the variable is decremented (counter--).

Suppose that the value of the variable counter is currently 5.

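The producer and consumer processes execute the statements `counter++` and `counter--` concurrently.

Following the execution of these two statements, the value of the variable counter may be 4, 5, or 6. The only correct result, though, is counter == 5, which is what we get if the producer and consumer execute one after the other. The wrong values are possible because each statement is carried out as several machine-level steps (load the value, modify it, store it back), and the steps of the two processes can interleave.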

For instance, let’s say you have 5 candies and your friend gives you one more, making the total 6. If you then eat one candy, the total is 5 again.
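To see where the values 4 and 6 can come from, the following sketch replays interleavings of the two statements by hand. It assumes each statement breaks down into three steps (load into a private register, modify the register, store back); the function `run` and the schedule strings are hypothetical helpers for this illustration, not part of any real scheduler.

```python
from itertools import permutations


def run(schedule, counter=5):
    """Replay one interleaving of counter++ ("P") and counter-- ("C").

    Each character in `schedule` lets that process execute its next step:
    step 0 = load, step 1 = modify, step 2 = store.
    """
    reg = {"P": None, "C": None}   # private register of each process
    step = {"P": 0, "C": 0}        # next step of each process
    delta = {"P": +1, "C": -1}
    for who in schedule:
        if step[who] == 0:
            reg[who] = counter         # load the shared value
        elif step[who] == 1:
            reg[who] += delta[who]     # modify the private copy
        else:
            counter = reg[who]         # store the private copy back
        step[who] += 1
    return counter


print(run("PPPCCC"))  # 5: the producer finishes before the consumer starts
print(run("PPCCPC"))  # 4: the consumer's store lands last
print(run("PPCCCP"))  # 6: the producer's store lands last

# Every possible interleaving of the six steps:
outcomes = {run(s) for s in set(permutations("PPPCCC"))}
print(sorted(outcomes))  # [4, 5, 6]
```

In the two faulty schedules both processes load the old value 5 before either stores its result, so one update overwrites the other.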

Now let us summarize the above discussion:

What is Process Synchronization?

Process synchronization is the task of coordinating process execution so that no two processes access the same shared data or resources at the same time in a conflicting way.

Why is process synchronization important?

It is especially important in a multi-process system where multiple processes are running concurrently and multiple processes are attempting to access the same shared resource or data at the same time.

This can result in inconsistent shared data. As a result, changes made by one process may not be reflected when other processes access the same shared data. To avoid this type of data inconsistency, the processes must be synchronized with one another.

To conclude, processes must be synchronized to avoid inconsistencies caused by concurrent access to shared data. Unsynchronized concurrent access leads to what is known as a race condition.

Race Condition

When more than one process executes the same code or accesses the same memory or shared variable, the resulting output or value of the shared variable may be incorrect, because the processes effectively race each other and whichever writes last determines the result. This is commonly referred to as a race condition.

Since several processes access and process the same data in parallel, the outcome is determined by the order in which the data is accessed.

This condition mainly occurs in a critical section when the outcome of multiple thread executions differs depending on the order in which the threads execute.

However, this problem can be avoided if the critical section is treated as an atomic instruction. Atomic means indivisible: the critical section runs as a single uninterrupted unit, so only one process at a time is allowed to modify the shared resource, which prevents the race condition.

Critical Section Problem

A code segment in which a process accesses shared data that other processes also access is known as the Critical Section. Because the shared variables are accessed there, entry to the critical section must be synchronized so that the consistency of the data is maintained.

In other words, a Critical Section is a code segment or a collection of instructions/statements that must be executed atomically, such as code that accesses a resource file, global data, an input port, or an output port. It means that in a group of cooperating processes, at most one process may be executing its critical section at any given time. Any other process that wants to run its critical section must wait until the first one has finished.

For example, when we go to buy a movie ticket, we must follow the queue and purchase ours only after the person in front of us has purchased the ticket; in the meantime, the ticket counter will be updated with the correct number of tickets purchased by the customers. Likewise, each cooperative process must wait for the first process to complete its critical section in order to avoid the race condition.

When a semaphore is used, two operations control the entry to and exit from the critical section:

- The wait() operation controls the entry to the critical section and is represented by P().

- The signal() operation controls the exit from the critical section and is represented by V().

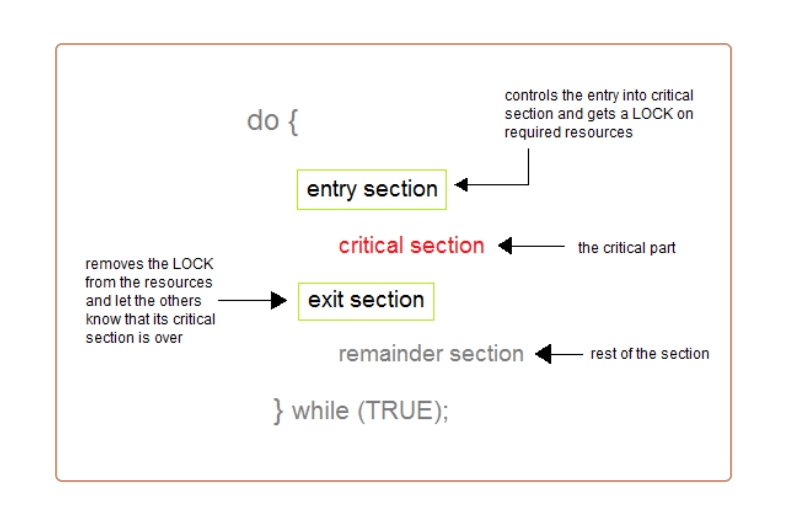

Sections of a Program with a Critical Section

Entry Section: The section of code in which a process requests permission to enter the Critical Section.

Critical Section: This part allows one process at a time to enter and alter the shared data.

Exit Section: The Exit Section permits the other processes that are waiting in the Entry Section to enter the Critical Section. It also records that the process that has finished its Critical Section has left it.

Remainder Section: All other parts of the code, which are not in the Critical, Entry, or Exit Section, are known as the Remainder Section.

There are certain rules that must be followed by each cooperative process to be in a critical section:

Rules for Critical Section

The rules for the Critical Section are as follows:

- Mutual Exclusion

- Progress

- Bounded Waiting

Mutual Exclusion

If there is a process that is executing in a critical section, then no other process is permitted to enter into the critical section. In practice, this is often enforced with a mutex, a lock that behaves like a form of binary semaphore and regulates access to shared resources. Some mutex implementations also provide priority inheritance, which helps avoid prolonged priority inversion.

Progress

This rule applies when the critical section is vacant and one or more processes want to enter it. Only the processes that are not in their remainder section take part in deciding who enters next, and this decision cannot be postponed indefinitely. Also, if a process doesn’t want to enter the critical section, then it should not prevent other processes from entering it.

Bounded Waiting

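The rule for bounded waiting says that after a process has requested entry to its critical section, there is a limit on the number of times other processes are allowed to enter their critical sections before that request is granted. This ensures that no process waits forever.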


Solutions to the Critical Section Problem

Some common solutions to the critical section problem are as follows:

1. Peterson Solution: This is a classic software-based solution for two processes. If one process is in its critical section, the other process can only execute its remaining (non-critical) code, and vice versa. The approach proposed by Peterson ensures that only one process runs in the critical section at a time.

Example: Consider two processes, P0 and P1, each of which must pass through the Critical Section at some point. There is an array FLAG[ ] of size 2 whose entries are set to false by default. If a process intends to enter the critical section, it sets its own flag to true.

A variable TURN indicates which process gets to enter the Critical Section when both want to. Before entering, a process sets TURN to the other process, and it waits only while the other process's flag is true and TURN still points to the other process. When it exits, it resets its own flag to false.

Below is the code snippet for the same:

Code:

    PROCESS Pi                       // j = 1 - i is the other process
    FLAG[i] = true                   // Pi wants to enter
    TURN = j                         // let Pj go first if it also wants to
    while (FLAG[j] AND TURN == j) {  // entry section: busy-wait
        wait;
    }
    CRITICAL SECTION
    FLAG[i] = false                  // exit section
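The classic two-process form of Peterson's algorithm (set your own flag, hand the turn to the other process, wait while the other flag is set and it is the other's turn) is small enough to check mechanically. The sketch below is a toy model, not a production lock: it assumes each of the steps is atomic and that memory is sequentially consistent, and the names `step` and `explore` are hypothetical. It explores every reachable interleaving and looks for a state in which both processes are in the critical section.

```python
from collections import deque


def step(state, i):
    """Advance process i by one atomic step of Peterson's algorithm.

    A state is (pc, flag, turn), where pc[i] is the program counter of
    process i: 0/1 = entry section, 2 = busy-wait test, 3 = critical section.
    """
    pc, flag, turn = list(state[0]), list(state[1]), state[2]
    j = 1 - i
    if pc[i] == 0:                      # announce intent to enter
        flag[i] = True
        pc[i] = 1
    elif pc[i] == 1:                    # give priority to the other process
        turn = j
        pc[i] = 2
    elif pc[i] == 2:                    # busy-wait test
        if not (flag[j] and turn == j):
            pc[i] = 3                   # enter the critical section
    else:                               # exit section: leave and start over
        flag[i] = False
        pc[i] = 0
    return (tuple(pc), tuple(flag), turn)


def explore():
    """Breadth-first search over all states reachable by any interleaving."""
    start = ((0, 0), (False, False), 0)
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for i in (0, 1):
            nxt = step(state, i)
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


states = explore()
violations = [s for s in states if s[0] == (3, 3)]
print(len(violations))  # 0: both processes are never in the critical section
```

On real hardware the same guarantee additionally depends on memory ordering, which this model deliberately ignores.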

2. Hardware Synchronization: Hardware can also help solve the critical section problem. Many processors provide atomic instructions, such as test-and-set and compare-and-swap, that read and modify a memory word as one indivisible step. A lock built on such an instruction is set when a process enters its critical section and cleared when it exits. As a result, when a process is already inside a critical section, other processes cannot enter.

3. Mutex Locks: A mutex lock is a software mechanism in which a process acquires a LOCK on the critical resources in the entry section of its code. The process holds this LOCK while inside the critical section and releases it in the exit section.
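As a minimal sketch of the mutex approach, the following uses Python's standard `threading.Lock` with two threads standing in for the producer and the consumer. The function name `worker` and the iteration count are arbitrary choices for this illustration.

```python
import threading

counter = 0
lock = threading.Lock()


def worker(delta, repeats):
    """Add `delta` to the shared counter `repeats` times under the lock."""
    global counter
    for _ in range(repeats):
        lock.acquire()          # entry section: block until the lock is free
        try:
            counter += delta    # critical section: update the shared data
        finally:
            lock.release()      # exit section: let a waiting thread proceed
        # remainder section: work that does not touch shared data goes here


producer = threading.Thread(target=worker, args=(+1, 50_000))
consumer = threading.Thread(target=worker, args=(-1, 50_000))
producer.start()
consumer.start()
producer.join()
consumer.join()
print(counter)  # 0
```

Because every update happens while the lock is held, the increments and decrements cannot interleave, so the final value is always 0. In everyday Python code the same pattern is usually written as `with lock:`.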

4. Semaphore Solution: A semaphore is a non-negative integer variable shared by multiple threads or processes. It is a signalling mechanism in which a thread waiting on a semaphore is woken up when another thread signals it. It uses two atomic operations, wait (P) and signal (V), to synchronize processes.
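One common way to apply semaphores to the bounded-buffer producer-consumer problem is sketched below with Python's standard `threading.Semaphore`, where `acquire()` plays the role of wait() and `release()` the role of signal(). The names `empty_slots`, `full_slots`, and `CAPACITY` are assumptions made for this example.

```python
import threading
from collections import deque

CAPACITY = 3
buffer = deque()
empty_slots = threading.Semaphore(CAPACITY)  # counts free slots
full_slots = threading.Semaphore(0)          # counts stored items
mutex = threading.Lock()                     # protects the buffer itself
consumed = []


def producer(n):
    for item in range(n):
        empty_slots.acquire()    # wait(): block while the buffer is full
        with mutex:
            buffer.append(item)
        full_slots.release()     # signal(): one more item is available


def consumer(n):
    for _ in range(n):
        full_slots.acquire()     # wait(): block while the buffer is empty
        with mutex:
            item = buffer.popleft()
        empty_slots.release()    # signal(): one more slot is free
        consumed.append(item)


threads = [threading.Thread(target=producer, args=(10,)),
           threading.Thread(target=consumer, args=(10,))]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(consumed)  # the items 0 through 9, in the order they were produced
```

The two counting semaphores make the producer wait on a full buffer and the consumer wait on an empty one, while the lock keeps the buffer updates themselves mutually exclusive.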



Let us now look at some FAQs based on the above discussion:


Frequently Asked Questions

What is the need for process synchronization?

The need for synchronization arises when cooperating processes execute concurrently and share data or resources. The primary goal of synchronization is to allow resources to be shared without interference.

What is the race condition in OS?

When two or more threads have access to shared data and attempt to change it at the same time, a race condition occurs. Because the thread scheduling algorithm can switch between threads at any time, you don't know which threads will try to access the shared data first.

What is the producer-consumer problem in OS?

The producer-consumer problem entails filling and depleting a bounded buffer. The producer inserts entries into the buffer, and if the buffer is full, the producer sleeps until a slot becomes free. The consumer retrieves entries from the buffer, and if the buffer is empty, the consumer sleeps until the producer adds an item.