Basic Terminologies

Tensor- A tensor is an n-dimensional array that can run on either the CPU or the GPU.

Variables- Variables are nodes in the computation graph that store the data and the gradients. Since PyTorch 0.4, this role is handled by tensors created with requires_grad=True.

Module- A module is a building block of a neural network, such as a layer, that stores state. This state consists of learnable weights.
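To make these three terms concrete, here is a minimal sketch. The variable names (t, w, layer) are only for illustration, and the example assumes a recent PyTorch version where tensors track gradients directly.

```python
import torch
from torch import nn

# A tensor is an n-dimensional array; it lives on the CPU unless moved.
t = torch.ones(2, 3)
device = "cuda" if torch.cuda.is_available() else "cpu"
t = t.to(device)

# requires_grad=True asks autograd to track gradients for this tensor.
w = torch.tensor([1.0, 2.0], requires_grad=True)
loss = (w ** 2).sum()
loss.backward()  # fills w.grad with d(loss)/dw = 2 * w
print(w.grad)

# A module stores its learnable state (weights and biases) as parameters.
layer = nn.Linear(in_features=3, out_features=1)
for name, param in layer.named_parameters():
    print(name, tuple(param.shape))
```

The last loop lists the parameters that the module keeps as its state.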

PyTorch v/s TensorFlow

PyTorch

- PyTorch offers dynamic computation graph.

- PyTorch can make use of standard Python flow control.

- PyTorch supports native Python debugger.

- PyTorch ensures dynamic inspection of Variables and Gradients.

- PyTorch is mainly used for research at this point in time.
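The dynamic graph and Python flow control points above can be sketched as follows. The function repeated_double is a hypothetical helper written for this illustration, not part of PyTorch. The number of loop iterations depends on the data, and autograd still records whatever operations actually ran.

```python
import torch

def repeated_double(x, limit):
    """Double x until its norm reaches limit; the loop count depends on the data."""
    steps = 0
    while x.norm() < limit:  # ordinary Python loop, evaluated at run time
        x = x * 2
        steps += 1
    return x, steps

x = torch.tensor([1.0, 1.0], requires_grad=True)
y, steps = repeated_double(x, limit=10.0)

# The graph was built while the loop ran, so backward() works as usual.
y.sum().backward()
print(steps, x.grad)
```

Because the graph is rebuilt on every call, a breakpoint or print statement inside the loop shows real tensor values.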

TensorFlow

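- TensorFlow 1.x builds a static computation graph by default; TensorFlow 2.x added eager execution.

- In static graph mode, TensorFlow cannot make use of common Python flow control and relies on graph operations such as tf.cond and tf.while_loop instead.

- A static graph cannot be stepped through with native Python debuggers in the same way.

- A static graph is fixed once it is defined, so it does not support the same dynamic inspection of values and gradients.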

- TensorFlow is widely used in production.

Basic Implementations

The first step is to install PyTorch and import the torch package. Let's start by constructing an uninitialized 5x3 matrix.

from __future__ import print_function

import torch

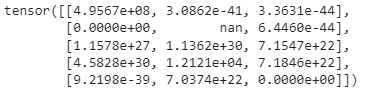

# constructing a 5x3 matrix, uninitialized:

x = torch.empty(5,3)

print(x)

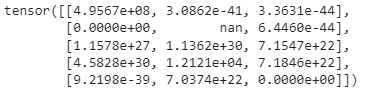

Output

From the output, we have the empty tensor. Its values are whatever happened to be in the allocated memory, so they are arbitrary. Similarly, let's construct another 5x3 matrix. But this time, we will fill it with random values and check the output.

x = torch.rand(5, 3)

print(x)

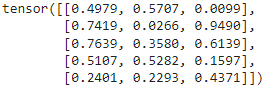

Output

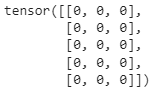

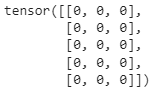

Every time we run the code, we'll get a different output. Next, let's construct a matrix filled with zeros, and this time we'll explicitly set the data type to long.

# constructing a matrix filled with zeroes and of dtype long

x = torch.zeros(5,3,dtype=torch.long)

print(x)

Output

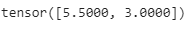

We can even construct the tensor directly from the data.

x = torch.tensor([5.5, 3])

print(x)

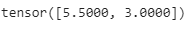

Output

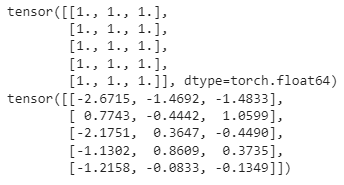

Here 5.5 and 3 are not dimensions but the tensor data. Next, we will create a tensor that is based on an existing tensor. These methods reuse the properties of the input tensor, such as its data type, unless the user provides new values.

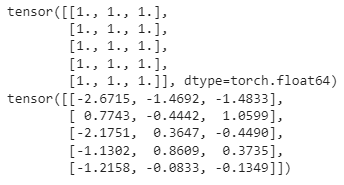

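# new_ones creates a tensor of ones that reuses x's properties; dtype is overridden to double
x = x.new_ones(5, 3, dtype=torch.double)

print(x)

# randn_like keeps the size of x but overrides the dtype to float
x = torch.randn_like(x, dtype=torch.float)

print(x)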

Output

Here we first create a tensor filled with ones, using the double data type. We then create a tensor of the same size filled with random values, overriding the data type from double to float. So the final output is a float tensor with new values.

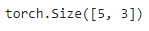

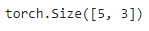
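To check which properties are reused and which are overridden, a small sketch like the following can help. The names base, ones, and noise are chosen only for this illustration.

```python
import torch

base = torch.zeros(2, 2, dtype=torch.double)

# new_ones takes its dtype and device from `base` unless told otherwise;
# the size is given explicitly.
ones = base.new_ones(5, 3)

# randn_like takes its size from `ones`; the dtype is overridden here.
noise = torch.randn_like(ones, dtype=torch.float)

print(ones.dtype, ones.shape)
print(noise.dtype, noise.shape)
```

The first tensor stays double because nothing overrides it, while the second one becomes float.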

We can print the size of the tensor like:

print(x.size())

Output

The size is 5x3. The returned torch.Size is in fact a tuple, so it supports all the tuple operations. Tensors themselves support many operations as well. For simplicity, let's consider a basic one: addition.

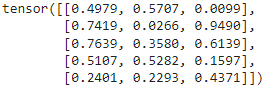
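Since the size behaves like a tuple, the usual tuple operations apply to it. A short sketch, with variable names chosen only for illustration:

```python
import torch

x = torch.rand(5, 3)
size = x.size()

# torch.Size is a subclass of tuple
print(isinstance(size, tuple))

# tuple unpacking and indexing both work
rows, cols = size
print(rows, cols)
print(size[0] * size[1])

# x.shape is an alias that returns the same information
print(x.shape == size)
```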

We'll construct another 5x3 tensor, fill it with random values, and perform the addition.

y = torch.rand(5, 3)

print(x+y)

Output

In this way, we can perform various operations on tensor objects.
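Addition itself can be written in a few equivalent ways. The sketch below uses fixed values instead of random ones so that the results are easy to check; the names a, b, and out are only for illustration.

```python
import torch

x = torch.ones(5, 3)
y = torch.full((5, 3), 2.0)

a = x + y            # operator syntax
b = torch.add(x, y)  # function syntax

# write the result into a preallocated tensor
out = torch.empty(5, 3)
torch.add(x, y, out=out)

# methods ending in an underscore modify the tensor in place
y.add_(x)

print(a)
print(y)
```

All four results hold the same values. The in-place version changes y itself, which saves memory but overwrites the original data.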

Frequently Asked Questions

- How is PyTorch designed?

PyTorch is a Python-based successor to the Lua-based Torch framework. It was developed primarily by Facebook's AI research group and is actively used there.

- What are the main elements of PyTorch?

1) PyTorch tensors

2) PyTorch NumPy bridge (conversion between tensors and NumPy arrays)

3) Mathematical operations

4) Autograd Module

5) Optim Module

6) nn Module
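Here is a rough sketch of how several of these elements fit together in one small training loop. The toy data (y = 2x), the learning rate, and the number of steps are assumptions made for this illustration only.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)

# Toy data for illustration: the target is simply 2 * input.
inputs = torch.linspace(-1, 1, 20).unsqueeze(1)  # tensor of shape (20, 1)
targets = 2 * inputs

model = nn.Linear(1, 1)                            # nn module: holds weight and bias
optimizer = optim.SGD(model.parameters(), lr=0.1)  # optim module: updates parameters
loss_fn = nn.MSELoss()

first_loss = None
for step in range(200):
    optimizer.zero_grad()                   # clear gradients from the last step
    loss = loss_fn(model(inputs), targets)
    loss.backward()                         # autograd: compute gradients
    optimizer.step()                        # apply the update
    if first_loss is None:
        first_loss = loss.item()

print(first_loss, loss.item())
print(model.weight.item(), model.bias.item())
```

The loss should shrink over the steps, and the learned weight should move close to 2.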

- What are Tensors in PyTorch?

Tensors in PyTorch are similar to NumPy arrays. A tensor is a multi-dimensional array whose elements all have the same data type. Unlike a NumPy array, a tensor can also run on the GPU.
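The close relationship with NumPy can be seen by converting in both directions. This is a minimal sketch that assumes NumPy is installed and the tensor lives on the CPU.

```python
import numpy as np
import torch

arr = np.array([[1.0, 2.0], [3.0, 4.0]])

# from_numpy shares memory with the array, so changes are visible in both
t = torch.from_numpy(arr)
arr[0, 0] = 10.0
print(t[0, 0])

# .numpy() converts a CPU tensor back to a NumPy array
back = t.numpy()
print(type(back), t.dtype)
```

The tensor keeps the array's data type, which is float64 here.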

- What are the Features of PyTorch?

The main features of PyTorch are:

1) PyTorch offers dynamic computation graphs.

2) PyTorch can make use of standard Python flow control.

3) PyTorch ensures dynamic inspection of Variables and Gradients.

- What is an Activation function?

An activation function takes the weighted sum of a neuron's inputs plus a bias and applies a transformation, usually a non-linear one, to give the neuron's output.
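As a small worked example, the sketch below computes a weighted sum plus bias for one neuron and then applies two common activation functions. The input values, weights, and bias are made up for this illustration.

```python
import torch

inputs = torch.tensor([1.0, -2.0, 3.0])
weights = torch.tensor([0.5, 1.0, -1.0])
bias = torch.tensor(0.5)

# weighted sum plus bias: 0.5 - 2.0 - 3.0 + 0.5 = -4.0
z = torch.dot(inputs, weights) + bias

# the activation function is applied to that value
relu_out = torch.relu(z)        # negative values become 0
sigmoid_out = torch.sigmoid(z)  # squashes the value into (0, 1)

print(z, relu_out, sigmoid_out)
```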

Key Takeaways

This blog covered the concepts and the basic implementation behind PyTorch. PyTorch is widely used in research, and various models have been implemented using it. Check the link for advanced implementation. For a detailed concept in Machine Learning, visit here.

Check out my other blog: Logistic Regression.