Reinforcement Learning: The constituent elements

Before we dive into the workings of Reinforcement learning, let's understand a few technical terms that are used very frequently when talking about RL problems.

- Agent: An entity that can interpret its surroundings and act on them.

- Environment: The world, physical or simulated, in which the agent operates.

- Action: A move made by the agent.

- State: The agent's current situation within the environment.

- Reward: Feedback from the environment after evaluating the agent's action.

- Policy: The method the agent uses to choose the next action based on the current state.

- Value: The expected long-term reward an agent would earn by performing an action in a specific state.

How does Reinforcement Learning work?

Reinforcement Learning (RL) is a trial-and-error-based approach where an agent interacts with an environment, takes actions, and receives rewards or penalties based on those actions. Over time, the agent refines its strategy to maximize cumulative rewards by learning from past experiences.
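The interaction described above can be sketched as a simple loop. This is a minimal illustration, not a standard API: the `CoinFlipEnv` class, its `reset`/`step` methods, and the reward values of +1 and -1 are all hypothetical names and numbers chosen for this example.

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: the agent guesses a biased coin flip."""

    def __init__(self, p_heads=0.8, episode_length=10, seed=0):
        self.p_heads = p_heads
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # the state is simply the current time step

    def step(self, action):
        # action: 0 = guess tails, 1 = guess heads
        outcome = 1 if self.rng.random() < self.p_heads else 0
        reward = 1.0 if action == outcome else -1.0  # reward or penalty
        self.t += 1
        done = self.t >= self.episode_length
        return self.t, reward, done


def run_episode(env, policy):
    """Run one episode and return the cumulative reward."""
    state = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(state)                  # the agent acts
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
    return total_reward
```

For example, `run_episode(CoinFlipEnv(p_heads=1.0), lambda state: 1)` returns `10.0`, because a policy that always guesses heads is right on each of the 10 steps. A learning agent would additionally use the observed rewards to improve its policy between steps.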

Key Components of Reinforcement Learning

- Policy: The decision-making strategy that determines the next action based on the current state.

- Reward Function: Provides feedback on actions, guiding the agent toward its goal.

- Value Function: Estimates the expected future cumulative rewards from a given state.

- Model of the Environment: Predicts future states and rewards, aiding in strategic planning.
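To make "expected future cumulative rewards" concrete, here is a small sketch that computes the cumulative reward of a single observed reward sequence. It assumes a discount factor `gamma` (a common convention that this article does not introduce) which weights rewards that arrive later less than immediate ones. A value function would estimate the average of this quantity over many possible futures.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum the rewards, discounting the reward at step k by gamma**k."""
    g = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

For example, `discounted_return([1, 1, 1], gamma=0.5)` gives `1 + 0.5 + 0.25 = 1.75`.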

Example: Reinforcement Learning in PacMan

Games are one of the best ways to understand an RL problem.

Consider the classic game PacMan, in which the agent's (PacMan's) purpose is to consume the food in the grid while dodging the ghosts in its path.

In this example, the grid world is the environment in which the agent operates. The agent is rewarded for consuming food and punished if it is caught by a ghost (it loses the game).

The states represent the agent's position in the grid environment, while the overall cumulative reward represents the agent winning the game.

Mapped to the RL terms defined earlier, the game looks like this:

- Agent: PacMan

- Environment: The game grid

- Actions: Moving in different directions

- Rewards & Penalties: Eating food increases the score (positive reward), while getting caught by a ghost results in a penalty (game over).

- States: The agent’s position in the grid at any given time

- Objective: Maximize the cumulative score while minimizing the risk of losing
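The mapping above could be expressed in code roughly as follows. This is only a sketch of a simplified grid: the reward values, the `step` function, and its parameters are hypothetical, and the ghosts stay still to keep the example short.

```python
# Hypothetical reward values, chosen only for illustration.
FOOD_REWARD = 10
GHOST_PENALTY = -100

# Actions: moving in one of four directions, as (row, column) offsets.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(position, action, food, ghosts, size):
    """Apply one move and return (new_position, reward, game_over)."""
    rows, cols = size
    dr, dc = MOVES[action]
    # Clamp the move so the agent cannot leave the grid.
    r = min(max(position[0] + dr, 0), rows - 1)
    c = min(max(position[1] + dc, 0), cols - 1)
    new_position = (r, c)
    if new_position in ghosts:
        return new_position, GHOST_PENALTY, True   # caught: game over
    if new_position in food:
        food.remove(new_position)                  # food is eaten once
        return new_position, FOOD_REWARD, False
    return new_position, 0, False
```

Here the state is the `position` tuple, `food` and `ghosts` are sets of grid cells, and the objective corresponds to maximizing the sum of the rewards returned over a game.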

Now that we've defined each constituent, let's take a look at how we can solve the problem!


To construct an optimal strategy, the agent must balance exploring new states against exploiting what it already knows to maximize its total reward. This is referred to as the exploration vs. exploitation trade-off. Striking this balance may require short-term sacrifices: the agent sometimes takes an action that looks worse right now so that it gathers enough knowledge to make the best overall decision in the future.
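One common way to handle this trade-off is the epsilon-greedy rule, sketched below. It is one option among several, not necessarily the one intended by this article. The function name and the `q_values` list (the agent's current reward estimate for each action) are assumptions made for the example.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: try any action
    # exploit: choose the action with the highest current estimate
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0.0` the agent always exploits, so `epsilon_greedy([0.1, 0.9, 0.3], 0.0)` returns `1`. With `epsilon=1.0` it always explores. In practice, epsilon is often started high and reduced as the agent gains experience.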

Types of Reinforcements in Reinforcement Learning (RL)

Reinforcement Learning (RL) uses a reward-based system where an agent learns by interacting with an environment. The two main types of reinforcement are Positive Reinforcement and Negative Reinforcement.

1. Positive Reinforcement

Positive reinforcement occurs when an agent receives a reward for taking an action that leads to a desired outcome. It encourages the agent to repeat beneficial actions.

Example

- In video games, a player earns bonus points for completing a level quickly, reinforcing speed and efficiency.

- In robotics, a robot receives a higher score when successfully completing a task.

Advantages

- Encourages learning and optimizes performance.

- Helps the agent explore beneficial behaviors.

- Increases long-term efficiency in decision-making.

Disadvantages

- Over-reliance on rewards may lead the agent to exploit loopholes in the reward signal instead of learning the intended behavior.

- Can cause unnecessary exploration, slowing down learning.

2. Negative Reinforcement

Negative reinforcement involves removing a negative stimulus when the agent performs a desired action, making the behavior more likely to occur.

Example

- In self-driving cars, the system reduces penalties when it follows traffic rules correctly.

- In machine learning models, errors are penalized, forcing the model to adjust and improve.

Advantages

- Helps in avoiding mistakes and learning quickly.

- Reduces undesirable behaviors, making the system more stable.

- Increases efficiency by minimizing failures.

Disadvantages

- May lead to short-term learning without long-term strategy development.

- Excessive penalization can result in unpredictable behavior.

Both reinforcement types play a crucial role in training intelligent systems and improving decision-making in RL.

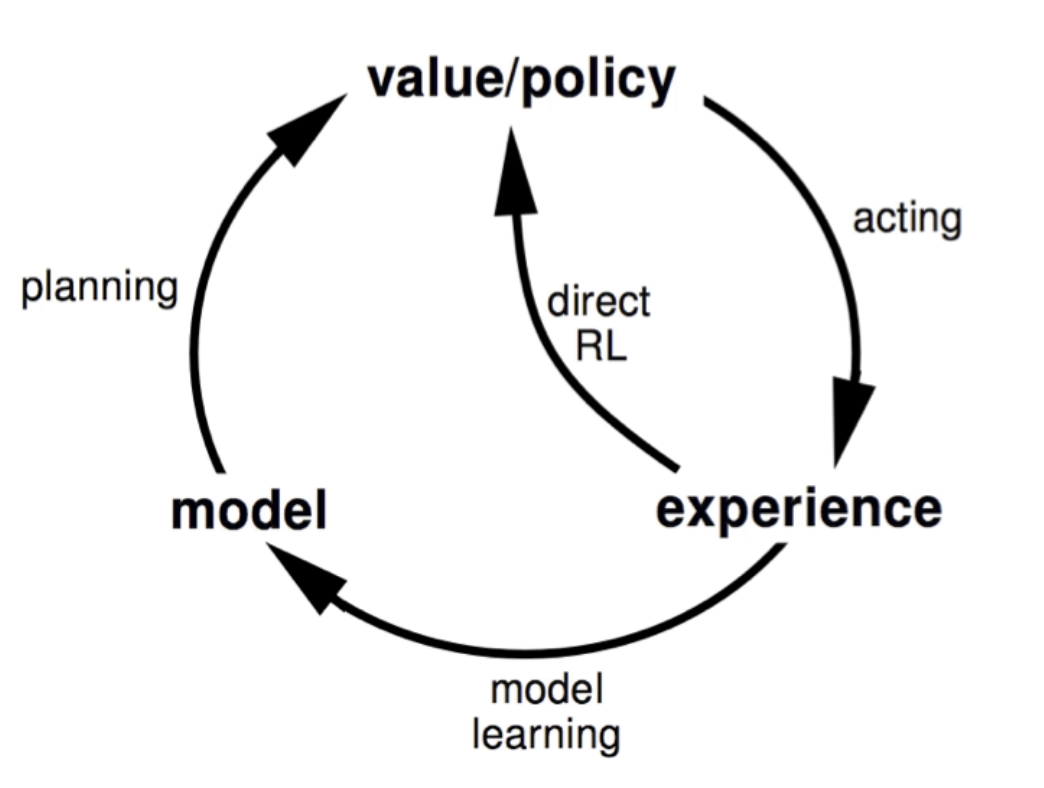

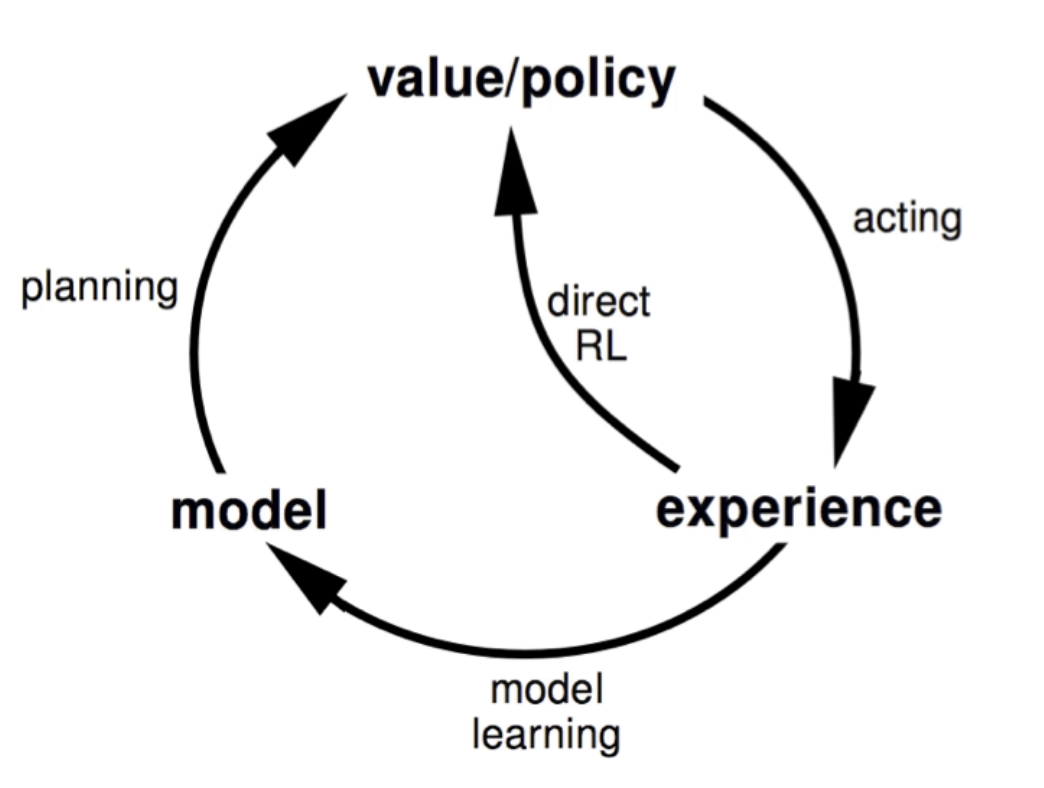

Reinforcement Learning: The approaches

There are primarily three approaches to implementing reinforcement learning in ML, and they are as follows:

- Value based

The value-based approach is concerned with determining the optimal value function, which is the maximum value achievable from a given state under any policy.

- Policy based

The policy-based approach seeks the best policy for the greatest future rewards without using a value function. In this technique, the agent tries to adopt a strategy in which the action taken at each step helps maximize the future reward. Policies can be further divided into two categories:

- Stochastic: The action is drawn from a probability distribution over the actions available in the given state.

- Deterministic: The same action is always produced for a given state.

- Model based

The model-based approach learns optimal behavior indirectly: the agent takes actions, observes the results (the next state and the immediate reward), and uses them to build a model of the environment that it can plan with.
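The difference between the two kinds of policy mentioned above can be sketched in a few lines. A deterministic policy always returns the same action for a given state, while a stochastic policy samples an action according to per-state probabilities. The function names and the dictionary layouts are hypothetical, chosen for this illustration.

```python
import random


def deterministic_policy(state, action_table):
    """Look up the single action assigned to this state."""
    return action_table[state]


def stochastic_policy(state, action_probs, rng=random):
    """Sample an action using the probabilities stored for this state."""
    actions, weights = zip(*action_probs[state].items())
    return rng.choices(actions, weights=weights, k=1)[0]
```

For instance, with `action_probs = {"s0": {"left": 0.7, "right": 0.3}}`, repeated calls to `stochastic_policy("s0", action_probs)` return `"left"` about 70% of the time.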


Reinforcement learning algorithms

The algorithms used most often in Reinforcement learning include:

- Q-Learning

Q-learning is a value-based, off-policy algorithm that uses temporal difference learning. Temporal difference methods learn by comparing temporally consecutive predictions.

- SARSA

SARSA (State-Action-Reward-State-Action) is an on-policy temporal difference algorithm. It selects the next action using the same policy that chose the current one, and updates its estimates based on that action.

- DQN

Deep Q-Network, or DQN, is Q-learning with the help of neural networks. Defining and updating a Q-table in an environment with a large state space is a daunting task. To solve this issue, DQN uses a neural network to approximate the Q-values for every action in a given state.
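The off-policy versus on-policy distinction shows up directly in the tabular update rules. The sketch below uses the standard textbook forms of the Q-learning and SARSA updates. The function names, the dictionary-based Q-table, and the default values of the learning rate `alpha` and discount factor `gamma` are assumptions for illustration, and terminal states are not handled.

```python
from collections import defaultdict


def q_learning_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """Off-policy: bootstrap from the best action available in s_next."""
    best_next = max(Q[(s_next, a2)] for a2 in actions)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])


def sarsa_update(Q, s, a, r, s_next, a_next, alpha=0.1, gamma=0.9):
    """On-policy: bootstrap from the action the policy actually chose next."""
    Q[(s, a)] += alpha * (r + gamma * Q[(s_next, a_next)] - Q[(s, a)])


# Usage: a Q-table that defaults every unseen (state, action) pair to 0.0
Q = defaultdict(float)
q_learning_update(Q, "s0", "right", 1.0, "s1", ["left", "right"])
```

In both cases the term in parentheses is the temporal difference: the gap between the new estimate (the reward plus the discounted value of the next step) and the previous prediction.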

Applications of Reinforcement Learning

- Self-driving cars

There are several factors to take into account with self-driving cars, such as speed limits in various locations, drivable zones, and collision avoidance, to name a few. Motion planning, trajectory optimization, and scenario-based policies for highways are some aspects that can be automated with the help of reinforcement learning.

- Robotics

There has been a lot of progress in using RL in robotics. Reinforcement learning is one of the primary machine learning approaches being explored in controlled environments where industrial robots may work from fixed positions under less hazardous conditions.

- Gaming

Reinforcement learning is often used in complex, interactive video games. We saw the example of PacMan earlier. AlphaGo, developed by Google DeepMind, is another popular RL-based program that shot to fame after defeating a professional human player in the game of Go.

- Traffic control

With the help of reinforcement learning, it's possible to create traffic systems that not only provide insights from historical data but also help city planners understand the population's behavioral trends.

Advantages of Reinforcement learning

- Reinforcement Learning is used to address complicated issues that conventional approaches cannot handle.

- The model can fix mistakes made during the training phase.

- In RL, training data is gathered through the agent's direct interaction with the environment. The training data is the learning agent's own experience, not a separate dataset that must be provided to the algorithm. This considerably reduces the workload of whoever supervises the training process.

- Traditional machine learning algorithms are built to excel at individual subtasks, with no regard for the larger picture. RL, on the other hand, does not break the problem down into subproblems; instead, it works straight to maximize the long-term goal.

Disadvantages of Reinforcement learning

- RL approaches produce training data on their own by interacting with the environment. As a result, the rate of data gathering is constrained by the environment's dynamics. High-latency environments slow down learning.

- The learning agent can trade off short-term benefits for long-term advantages. While this basic premise makes RL valuable, it also makes it difficult for the agent to find the best policy.


Frequently Asked Questions

How is reinforcement learning different from supervised learning?

In contrast to supervised learning, which learns from labeled examples, a Reinforcement Learning agent learns autonomously from the feedback it receives.

Is Reinforcement Learning only trial-and-error learning, or does it also require planning?

Modern reinforcement learning is concerned with both trial-and-error learning and deliberative planning with a model of the environment. Here, a model refers to anything that predicts the environment's future behavior in response to the agent's actions.

Is Q-Learning a subset of Reinforcement learning?

Q-learning is not a separate branch of Reinforcement Learning. It is one of the most widely used algorithms for solving RL problems. In Q-learning, an agent attempts to learn the best policy from its previous interactions with the environment. The agent's prior experience is a sequence of states, actions, and rewards.

Conclusion

Now we have a fair idea about Reinforcement Learning, its workings, the algorithms involved, and some of its wide applications. This is just the tip of the iceberg as far as reinforcement learning is concerned. To go further, you can check out articles on the other types of machine learning, such as supervised learning and unsupervised learning.