Introduction

A bivariate normal distribution describes two random variables jointly, whereas the "ordinary" normal distribution describes just one. Each of the two variables is normally distributed, and every linear combination of them is normally distributed as well; the two variables need not be independent. Visually, the joint density is a three-dimensional bell-shaped surface.

In this blog, we start from the basics and discuss the most commonly used definition of jointly Gaussian random variables. The Gaussian distribution can be defined in a variety of ways, and there is no universal agreement on a single concise description.

Jointly Gaussian Random Variables

Gaussian Random Variable

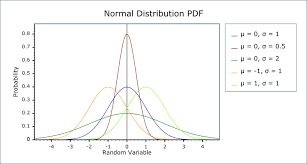

A Gaussian random variable is a continuous random variable whose probability density function has the form

$$f_X(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$$

where µ is the mean and σ² is the variance.

N(µ, σ²) refers to a Gaussian distribution with mean µ and variance σ².

X ∼ N(µ, σ²) ⇒ X is a Gaussian random variable with mean µ and variance σ².

If X ∼ N(0, 1), X is a standard Gaussian random variable.
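As a rough illustration, the density of N(µ, σ²) can be evaluated numerically. The sketch below assumes the usual closed form of the Gaussian density; the function name `gaussian_pdf` and the integration range are illustrative choices, not something taken from the text above.

```python
import math


def gaussian_pdf(x, mu=0.0, sigma2=1.0):
    """Density of N(mu, sigma2) at x; sigma2 is the variance (assumed > 0)."""
    if sigma2 <= 0:
        raise ValueError("variance must be positive")
    exponent = -((x - mu) ** 2) / (2.0 * sigma2)
    return math.exp(exponent) / math.sqrt(2.0 * math.pi * sigma2)


# Height of the standard Gaussian density at its mean: 1 / sqrt(2 * pi).
peak = gaussian_pdf(0.0)

# Crude Riemann sum over [-8, 8]; a valid density should integrate to about 1.
step = 0.001
area = sum(gaussian_pdf(-8.0 + k * step) for k in range(16001)) * step
```

With the defaults µ = 0 and σ² = 1 this is the standard Gaussian, so `peak` is the height of the bell curve at zero and `area` should come out very close to 1.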

The diagram below shows the density of a standard Gaussian random variable.

Jointly Gaussian Random Variable

Let X1, X2, . . . , Xd be real-valued random variables defined on the same sample space. They are said to be jointly Gaussian if their joint characteristic function is given by the equation below.

Here ρ is the correlation of X1 and X2.

Independent Gaussian random variables are always jointly Gaussian. The diagram below shows a two-dimensional joint Gaussian probability density function to illustrate this.

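The link between independence and joint Gaussianity can be checked numerically in the two-variable case. The sketch below assumes the standard bivariate Gaussian density with correlation ρ (passed as `rho`); the function names and the sample point are made up for illustration. When `rho` is 0, the joint density should equal the product of the two one-dimensional densities.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """One-dimensional Gaussian density; sigma is the standard deviation."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def bivariate_gaussian_pdf(x1, x2, mu1, mu2, sigma1, sigma2, rho):
    """Bivariate Gaussian density with correlation rho, where -1 < rho < 1."""
    if not -1.0 < rho < 1.0:
        raise ValueError("rho must lie strictly between -1 and 1")
    z1 = (x1 - mu1) / sigma1
    z2 = (x2 - mu2) / sigma2
    one_minus_rho2 = 1.0 - rho * rho
    quad = (z1 * z1 - 2.0 * rho * z1 * z2 + z2 * z2) / one_minus_rho2
    norm = 2.0 * math.pi * sigma1 * sigma2 * math.sqrt(one_minus_rho2)
    return math.exp(-0.5 * quad) / norm


# Uncorrelated case: the joint density factorises into the two marginals.
joint = bivariate_gaussian_pdf(0.5, -1.0, 0.0, 1.0, 1.0, 2.0, 0.0)
product = gaussian_pdf(0.5, 0.0, 1.0) * gaussian_pdf(-1.0, 1.0, 2.0)

# Correlated case: same marginals, but the joint density no longer factorises.
correlated = bivariate_gaussian_pdf(0.5, -1.0, 0.0, 1.0, 1.0, 2.0, 0.8)
```

Here `joint` and `product` agree up to rounding, while `correlated` differs from both even though the marginal parameters are unchanged.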

A linear combination of jointly Gaussian random variables is itself Gaussian, so its density is completely determined once its mean and variance are known.
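For two jointly Gaussian variables, the mean and variance of a linear combination a1·X1 + a2·X2 follow from linearity of expectation and the bilinearity of covariance. The sketch below is a minimal illustration under that assumption; the coefficient names `a1`, `a2` and all numeric values are made up.

```python
import math


def linear_combination_moments(a1, a2, mu1, mu2, sigma1, sigma2, rho):
    """Mean and variance of Y = a1*X1 + a2*X2 for jointly Gaussian X1, X2.

    sigma1 and sigma2 are standard deviations; rho is the correlation.
    """
    mean = a1 * mu1 + a2 * mu2
    variance = (
        a1 ** 2 * sigma1 ** 2
        + a2 ** 2 * sigma2 ** 2
        + 2.0 * a1 * a2 * rho * sigma1 * sigma2  # cross term: Cov(X1, X2)
    )
    return mean, variance


def gaussian_pdf(x, mu, sigma2):
    """Density of N(mu, sigma2) at x; sigma2 is the variance."""
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma2)) / math.sqrt(2.0 * math.pi * sigma2)


# Y = 2*X1 - X2 with illustrative parameters.
mean, variance = linear_combination_moments(2.0, -1.0, 1.0, 3.0, 1.0, 2.0, 0.5)

# Y is Gaussian, so these two numbers are enough to evaluate its density.
density_at_mean = gaussian_pdf(mean, mean, variance)
```

With these values the mean is -1 and the variance is 4, so Y ∼ N(-1, 4).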

Probability Density Function for Jointly Gaussian Random Variable

The two parameters µ and σ², the mean and the variance, are obtained from the first- and second-order moments of the pdf, and they completely define the Gaussian pdfs P(x1) and P(x2).


In the new basis, in which the covariance matrix is diagonal, the joint Gaussian probability density function is given by

This equation shows that, in the new basis, the marginal probability density functions are independent Gaussian pdfs, each with its own variance.

Covariance Matrix

A covariance matrix is a symmetric matrix, so it can be diagonalized by a change of basis. Consequently, for any jointly Gaussian random variables there is a basis in which the joint pdf is a product of one-dimensional Gaussian pdfs.
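For a 2×2 covariance matrix the change of basis can be written in closed form as a rotation. The sketch below is one way to do it, assuming a symmetric input; the helper names `diagonalize_2x2` and `change_basis` and the example matrix are hypothetical. A general-purpose numerical eigen-decomposition routine would normally be used for larger matrices.

```python
import math


def diagonalize_2x2(cov):
    """Return (rotation, variances) for a symmetric 2x2 covariance matrix.

    The columns of `rotation` are the new basis vectors, and `variances`
    holds the variances along them (larger one first).
    """
    (a, b), (_, c) = cov
    theta = 0.5 * math.atan2(2.0 * b, a - c)  # angle that removes the off-diagonal term
    co, si = math.cos(theta), math.sin(theta)
    rotation = [[co, -si], [si, co]]
    centre = (a + c) / 2.0
    half_gap = math.hypot((a - c) / 2.0, b)
    return rotation, (centre + half_gap, centre - half_gap)


def change_basis(cov, rotation):
    """Compute R^T * cov * R for 2x2 matrices stored as nested lists."""
    tmp = [[sum(cov[i][k] * rotation[k][j] for k in range(2)) for j in range(2)]
           for i in range(2)]
    return [[sum(rotation[k][i] * tmp[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


cov = [[2.0, 1.0], [1.0, 2.0]]  # illustrative covariance matrix
rotation, variances = diagonalize_2x2(cov)
cov_new_basis = change_basis(cov, rotation)
```

In the rotated basis the off-diagonal entries of `cov_new_basis` vanish up to rounding, so the two new coordinates are uncorrelated and the joint pdf factorises; the diagonal entries match `variances`, here 3 and 1.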

The covariance matrix Σ is shown in the diagram below:

Here the diagonal elements of Σ are the variances of the random variables Xi, and the generic element Σij = E[(Xi − mXi)(Xj − mXj)] is the covariance of the jointly Gaussian random variables Xi and Xj, where mXi denotes the mean of Xi.
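The definition of Σij can be turned into an estimate from data by replacing each expectation with an average over samples. The sketch below does this with a hypothetical helper `covariance_matrix` and a tiny made-up data set; it divides by n (not n − 1) to mirror the expectation in the definition.

```python
def covariance_matrix(samples):
    """Estimate the covariance matrix from a list of equal-length samples.

    Entry (i, j) is the average of (x_i - m_i) * (x_j - m_j), where m_i is
    the sample mean of coordinate i.
    """
    n = len(samples)
    dim = len(samples[0])
    means = [sum(row[i] for row in samples) / n for i in range(dim)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in samples) / n
            for j in range(dim)
        ]
        for i in range(dim)
    ]


# Three made-up observations of (X1, X2), with X2 exactly twice X1.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
sigma = covariance_matrix(samples)
```

The diagonal entries of `sigma` are the variances of the two coordinates, and the matrix is symmetric because the product inside the average does not depend on the order of i and j.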

When the covariance matrix is passed to a numerical routine as a parameter, here called cov, it must be a symmetric positive semi-definite matrix. Because the determinant and inverse of cov are computed as the pseudo-determinant and pseudo-inverse, respectively, cov does not need to have full rank.

The parameter cov can take one of three forms: a scalar, in which case the covariance matrix is the identity times that value; a vector, which gives the diagonal entries of the covariance matrix; or a two-dimensional array-like, which gives the full covariance matrix.
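The three accepted forms of cov can be illustrated with a small helper that expands each one into a full matrix. This is only a sketch: `expand_cov` and its `dim` argument are hypothetical names, and it does not reproduce any particular library's behaviour. The description of cov resembles the argument of routines such as SciPy's `scipy.stats.multivariate_normal`, but that attribution is an assumption, since no library is named here.

```python
from numbers import Real


def expand_cov(cov, dim):
    """Expand a scalar, a vector of diagonal entries, or a full matrix
    into a dim x dim covariance matrix stored as nested lists."""
    if isinstance(cov, Real):
        # Scalar: identity matrix times that value.
        return [[float(cov) if i == j else 0.0 for j in range(dim)] for i in range(dim)]
    rows = list(cov)
    if rows and all(isinstance(v, Real) for v in rows):
        # Vector: its entries become the diagonal.
        if len(rows) != dim:
            raise ValueError("diagonal vector must have length dim")
        return [[float(rows[i]) if i == j else 0.0 for j in range(dim)] for i in range(dim)]
    # Two-dimensional array-like: used as the full matrix.
    matrix = [[float(v) for v in row] for row in rows]
    if len(matrix) != dim or any(len(row) != dim for row in matrix):
        raise ValueError("matrix must be dim x dim")
    return matrix


from_scalar = expand_cov(2.0, 2)
from_vector = expand_cov([1.0, 3.0], 2)
from_matrix = expand_cov([[2.0, 1.0], [1.0, 2.0]], 2)
```

The helper only normalises the shape; it does not check symmetry or positive semi-definiteness, which a real routine would still need to verify.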