Introduction

When you create a log bucket and enable Log Analytics on it, Cloud Logging makes the log data available in the Log Analytics interface. With Log Analytics enabled, you don't have to route and keep a separate copy of the data in BigQuery. Log Analytics lets you analyze log data in much the same way you would in BigQuery, and you can still query and analyze the data using the Logging features you're used to.

You can configure a view of the data in the Log Analytics-enabled bucket directly in BigQuery if you want to combine your log data with other data in BigQuery. The same query that works in Log Analytics also works in BigQuery.

Let us learn how the data is queried using the logging query language.

Logging query language

You can use the Logging query language in the Google Cloud console's Logs Explorer, the Logging API, or the command-line interface. You can use it to query data and to write the filters that define sinks and log-based metrics.

A query is a Boolean expression that specifies a subset of all the log entries in the Google Cloud resource you have chosen, such as a Cloud project or folder.

You can build queries on the indexed LogEntry fields using the logical operators AND and OR. The following examples use the resource.type field to show the Logging query language syntax:

- Simple restriction: resource.type = "gae_app"

- Conjunctive restriction: resource.type = "gae_app" AND severity = ERROR

- Disjunctive restriction: resource.type = "gae_app" OR resource.type = "gce_instance"

- Alternatively: resource.type = ("gae_app" OR "gce_instance")

- Complex conjunctive/disjunctive expression: resource.type = "gae_app" AND (severity = ERROR OR "error")

Syntax notation

The following sections offer an introduction to the Logging query language syntax and go into depth about how queries are built and how matching is accomplished. Some of the examples include explanatory text in the form of comments.

Take note of the following:

- A query cannot be longer than 20,000 characters.

- Except for regular expressions, the Logging query language is case-insensitive.

Syntax summary

The syntax of the Logging query language can be thought of in terms of queries and comparisons.

A query is a string that contains the following expression:

expression = ["NOT"] comparison { ("AND" | "OR") ["NOT"] comparison }

A comparison can be expressed as a single value or as a Boolean expression:

"The cat in the hat"

resource.type = "gae_app"

The first line is an example of a single-value comparison. Comparisons of this kind are global restrictions. Each field of a log entry is implicitly compared to the value using the has operator. In this case, the comparison succeeds if any field in a LogEntry, or its payload, contains the phrase "The cat in the hat".

The second line is an example of a Boolean expression comparison of the form [FIELD_NAME] [OP] [VALUE]. The elements of the comparison are as follows:

- [FIELD_NAME]: a field in a log entry. For example, resource.type.

- [OP]: a comparison operator. For example, =.

- [VALUE]: a number, string, function, or parenthesized expression. For example, "gae_app". For JSON null values, use NULL_VALUE.

Boolean operators

The Boolean operators AND and OR are short-circuit operators. The NOT operator has the highest precedence, followed by OR and then AND. For example, the following two expressions are equivalent:

a OR NOT b AND NOT c OR d

(a OR (NOT b)) AND ((NOT c) OR d)
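This precedence differs from most programming languages, where AND usually binds tighter than OR. The following is a minimal Python sketch of a toy evaluator that follows the ordering described here (NOT first, then OR, then AND). It is an illustration only, not Logging's parser; the function name `evaluate` and the single-letter variables are hypothetical.

```python
import itertools
import re

# Tokens: parentheses, the three operators, and simple identifiers.
TOKEN = re.compile(r"\(|\)|[A-Za-z_]+")


def evaluate(query: str, env: dict[str, bool]) -> bool:
    """Evaluate a toy Boolean query where NOT binds tightest, then OR, then AND."""
    tokens = TOKEN.findall(query)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def take():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        return token

    def parse_and():
        # Lowest precedence: OR-groups joined by AND.
        value = parse_or()
        while peek() == "AND":
            take()
            rhs = parse_or()  # always parse, so every token is consumed
            value = value and rhs
        return value

    def parse_or():
        value = parse_not()
        while peek() == "OR":
            take()
            rhs = parse_not()
            value = value or rhs
        return value

    def parse_not():
        if peek() == "NOT":
            take()
            return not parse_not()
        return parse_atom()

    def parse_atom():
        token = take()
        if token == "(":
            value = parse_and()
            take()  # consume the closing ")"
            return value
        return env[token]

    return parse_and()


# Compare the two expressions for every combination of truth values.
same = all(
    evaluate("a OR NOT b AND NOT c OR d", dict(zip("abcd", values)))
    == evaluate("(a OR (NOT b)) AND ((NOT c) OR d)", dict(zip("abcd", values)))
    for values in itertools.product([False, True], repeat=4)
)
print(same)
```

With a, b, and c true and d false, this evaluator returns False for the unparenthesized expression, whereas Python's own `a or not b and not c or d` would return True. That difference is why explicit parentheses are worth adding whenever AND and OR are mixed.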

Between comparisons, you can omit the AND operator. You can also use the - (minus) operator in place of the NOT operator. For instance, the following two queries are equivalent:

a=b AND c=d AND NOT e=f

a=b c=d -e=f

This article always writes AND and NOT explicitly.

You can use the AND, OR, and NOT operators in all filters except those used by log views. Log views support only AND and NOT operations.

To combine AND and OR rules in the same expression, nest the rules using parentheses. If you don't use parentheses, your query might not work as intended.

Boolean operators must always be capitalized. Lowercase and, or, and not are parsed as search terms.


Comparisons

Comparisons are structured as follows:

[FIELD_NAME] [OP] [VALUE]

The elements of the comparison are as follows:

[FIELD_NAME]: is the path name of a log entry field. Examples of field names include:

- resource.type

- resource.labels.zone

- resource.labels.project_id

- insertId

- jsonPayload.httpRequest.protocol

- labels."compute.googleapis.com/resource_id"

If a path name component contains special characters, it must be double-quoted. For example, compute.googleapis.com/resource_id must be double-quoted because it contains a forward slash (/).

[OP]: is one of the following comparison operators.

- = -- equal

- != -- not equal

- > < >= <= -- numeric ordering

- : -- "has" matches any substring in the log entry field

- =~ -- regular expression search for a pattern

- !~ -- regular expression search not for a pattern

[VALUE]: can be a number, a string, a function, or a parenthesized expression. Strings can represent arbitrary text as well as Boolean, enumeration, and byte-string values. Before the comparison, [VALUE] is converted to the field's type. Use NULL_VALUE for JSON null values.

Use the following syntax to search for a JSON null value:

- jsonPayload.field = NULL_VALUE -- includes "field" with null value

- NOT jsonPayload.field = NULL_VALUE -- excludes "field" with null value

If [VALUE] is a parenthesized Boolean combination of comparisons, the field name and comparison operator are applied to each element. For example:

- jsonPayload.cat = ("longhair" OR "shorthair")

- jsonPayload.animal : ("lovely" AND "pet")

The first comparison checks whether the field cat has the value "longhair" or "shorthair". The second checks that the value of the field animal contains both of the words "lovely" and "pet", in any order.
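To make the distribution rule concrete, here is a small Python sketch that expands the compact parenthesized form into the long form with the field name and operator repeated for each element. The helper name `expand` is hypothetical, and the sketch assumes the values contain no double quotation marks.

```python
def expand(field: str, op: str, values: list[str], joiner: str) -> str:
    """Repeat `field op` in front of every value and join with AND or OR."""
    parts = [f'{field} {op} "{value}"' for value in values]
    return f" {joiner} ".join(parts)


# Long form of: jsonPayload.cat = ("longhair" OR "shorthair")
print(expand("jsonPayload.cat", "=", ["longhair", "shorthair"], "OR"))

# Long form of: jsonPayload.animal : ("lovely" AND "pet")
print(expand("jsonPayload.animal", ":", ["lovely", "pet"], "AND"))
```

The first call produces `jsonPayload.cat = "longhair" OR jsonPayload.cat = "shorthair"`, which reads the same as the compact form but is easier to audit in a long filter.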

Field path identifiers

Log entries are all instances of the LogEntry type. The identifier that is (or begins) the left-hand side of the comparison must be a LogEntry type field.

The following is the current list of log entry fields. Where applicable, each field is followed by the next level of names for that field:

- httpRequest { cacheFillBytes, cacheHit, cacheLookup, cacheValidatedWithOriginServer, latency, protocol, referer, remoteIp, requestMethod, requestSize, requestUrl, responseSize, serverIp, status, userAgent }

- insertId

- jsonPayload { variable }

- labels { variable }

- logName

- metadata { systemLabels, userLabels }

- operation { id, producer, first, last }

- protoPayload { @type, variable }

- receiveTimestamp

- resource { type, labels }

- severity

- sourceLocation { file, line, function }

- spanId

- textPayload

- timestamp

- trace

The following are some field path identifiers that you can use in your comparisons:

resource.type: If your first path identifier is resource, the next identifier must be a field in the MonitoredResource type.

httpRequest.latency: If your first path identifier is httpRequest, the next identifier must be a field in the HttpRequest type.

labels.[KEY]: If your first path identifier is labels, the next identifier, [KEY], must be one of the keys from the key-value pairs in the labels field.

logName: Because the logName field is a string, it cannot be followed by any subfield names.

Special characters

LogEntry fields that include special characters must be quoted. For example:

jsonPayload.":name":apple

jsonPayload."foo.bar":apple

jsonPayload."\"foo\"":apple
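When field paths are assembled programmatically, the quoting can be automated. The sketch below is one possible approach in Python. It makes a conservative assumption that is not taken from the Logging documentation: any path component that is not a plain identifier (letters, digits, and underscores) gets quoted, with embedded backslashes and double quotation marks escaped. The helper names `quote_segment` and `field_path` are hypothetical.

```python
import re

# Assumption: components made only of letters, digits, and underscores need no quotes.
_PLAIN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def quote_segment(segment: str) -> str:
    """Return the component unchanged if plain, otherwise double-quoted and escaped."""
    if _PLAIN.fullmatch(segment):
        return segment
    escaped = segment.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def field_path(*segments: str) -> str:
    """Join path components with dots, quoting each one where needed."""
    return ".".join(quote_segment(segment) for segment in segments)


print(field_path("jsonPayload", ":name"))
print(field_path("jsonPayload", "foo.bar"))
print(field_path("jsonPayload", '"foo"'))
print(field_path("labels", "compute.googleapis.com/resource_id"))
```

The four calls reproduce the quoted paths shown in this article, including the label key that contains a forward slash.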

Monitored resource types

Select a monitored resource type for quicker queries. See Monitored resource types for a list of resource types.

Compute Engine VMs, for example, use the resource type gce_instance, while Amazon EC2 instances use aws_ec2_instance. The example below explains how to limit your queries to both types of VMs:

resource.type = ("gce_instance" OR "aws_ec2_instance")

Missing fields

If you use a field name in a query and it doesn't appear in a log entry, the field is either missing, undefined, or defaulted:

- The field is missing if it is part of the log entry's payload (jsonPayload or protoPayload), or if it is a label in the log entry's labels section. Using a missing field does not produce an error, but all comparisons that use it fail silently.

  Examples: jsonPayload.nearest_store, protoPayload.name.nickname

- The field is defaulted if it is defined in the LogEntry type. Comparisons are carried out as though the field existed and had its default value.

  Examples: httpRequest.remoteIp, trace, operation.producer

- Otherwise, the field is undefined, which is an error that is detected before the query is run.

  Examples: thud, operation.thud, textPayload.thud

Object and array types

Each log entry field can store a scalar, object, or array.

- A scalar field stores a single value, such as 174.4 or -1. A string is also regarded as a scalar. Fields that can be converted to (or from) strings, such as Duration and Timestamp, are scalar types as well.

- An object field stores a collection of named values, such as the following JSON value:

  {"age": 24, "height": 67}

- An array field stores a collection of items of the same type. A field containing measurements, for example, may hold an array of numbers:

  {8.5, 9, 6}

Values and conversions

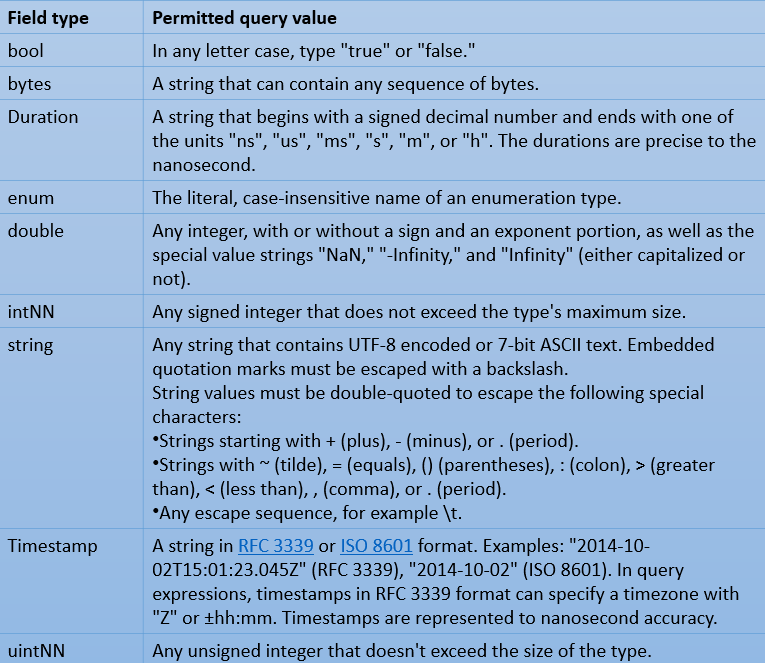

The first step in evaluating a comparison is to convert the right-hand side value to the type of the log entry field. Comparisons allow scalar field types, plus two additional types whose values are represented as strings: Duration and Timestamp.

Types of log fields

The type of a log entry field is decided as follows:

Log fields defined in the LogEntry type and in its component types are protocol buffer fields. Protocol buffer fields have explicit types.

Log fields in protoPayload objects are likewise protocol buffer fields with explicit types. The name of the protocol buffer type is stored in the "@type" field of protoPayload.

The types of log fields inside jsonPayload are inferred from the field's value when the log entry is received:

- Fields with unquoted numbers have the type double.

- Fields with true or false values are of type bool.

- Fields whose values are strings have the type string.

Long (64-bit) integers are stored in string fields because they cannot be represented exactly as double values.

Only protocol buffer fields recognize the Duration and Timestamp types. Those values are stored in string fields elsewhere.
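The inference rules for jsonPayload can be mimicked in a few lines of Python. This is only a sketch of the rules listed above, not how Logging is implemented; the payload keys (`count`, `ok`, `user`, `big_id`) are made up for illustration.

```python
import json


def infer_type(value) -> str:
    """Mirror the jsonPayload rules: numbers are double, true/false are bool, text is string."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int in Python
        return "bool"
    if isinstance(value, (int, float)):
        return "double"
    if isinstance(value, str):
        return "string"
    return type(value).__name__  # objects and arrays are outside this sketch


payload = json.loads(
    '{"count": 3, "ok": true, "user": "alex", "big_id": "9007199254740993"}'
)
types = {key: infer_type(value) for key, value in payload.items()}
print(types)

# Why large integers travel as strings: a double cannot hold this value exactly.
print(int(float(9007199254740993)))  # prints 9007199254740992
```

The last line shows the precision loss directly: 9007199254740993 is 2^53 + 1, which a double rounds to 2^53, so keeping such identifiers as strings preserves them.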

Comments

Comments begin with two dashes (--), and any text after the dashes is ignored until the end of the line. Comments can be placed at the start of a filter, between terms, or at the end.

Example:

-- All of our target users are emitted by Compute Engine instances.

resource.type = "gce_instance"

-- Looking for logs from "alex".

jsonPayload.targetUser = "alex"

Comparison operators

The equality (=, !=) and inequality (<, <=, >, >=) operators have different meanings depending on the underlying type of the left-hand field name.

- All numeric types: Equality and inequality have their normal meaning for numbers.

- bool: Equality means having the same Boolean value. Inequality is defined by true>false.

- enum: Equality means having the same enumeration value. Inequality uses the underlying numeric values of the enumeration literals.

- Duration: Equality means having the same length of time. Inequality is based on the length of the duration. As an example, "1s">"999ms" as durations.

- Timestamp: Equality means the same instant in time. If a and b are Timestamp values, a < b indicates that a occurred earlier in time than b.

- bytes: Operands are compared left to right, byte by byte.

- string: Letter case is ignored in comparisons.
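The Duration case is worth a closer look, because comparing the same two values as plain strings gives the opposite answer. The Python sketch below converts duration strings to milliseconds before comparing. The set of unit suffixes (ms, s, m, h) is an assumption made for this example, and `duration_ms` is a hypothetical helper.

```python
import re

# Assumed unit suffixes for this sketch, expressed in milliseconds.
_UNITS_MS = {"ms": 1, "s": 1000, "m": 60_000, "h": 3_600_000}
_DURATION = re.compile(r"(\d+(?:\.\d+)?)(ms|s|m|h)")


def duration_ms(text: str) -> float:
    """Convert a duration string such as "1s" or "999ms" to milliseconds."""
    match = _DURATION.fullmatch(text)
    if match is None:
        raise ValueError(f"not a duration: {text!r}")
    number, unit = match.groups()
    return float(number) * _UNITS_MS[unit]


# Compared as durations, one second is longer than 999 milliseconds.
print(duration_ms("1s") > duration_ms("999ms"))  # prints True

# Compared as plain strings, the result flips because "1" sorts before "9".
print("1s" > "999ms")  # prints False
```

This is why the type of the left-hand field matters: the same right-hand text can order differently depending on whether it is treated as a Duration or as a string.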

Global restrictions

When a comparison consists of a single value, it is called a global restriction. Logging uses the has (:) operator to determine whether any field in a log entry, or its payload, contains the global restriction. If it does, the comparison succeeds.

Example:

The most basic query stated in terms of a global restriction has only one value:

"The Cat in the Hat"

For a more interesting query, combine global restrictions with the AND and OR operators. For example, if you want to see all log entries with a field containing cat and a field containing either hat or bat, write the query as:

(cat AND (hat OR bat))

There are three global restrictions in this case: cat, hat, and bat. These global restrictions are applied separately, and the results are combined just as if the expression had been written without parentheses.
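As a rough mental model, each global restriction can be pictured as a case-insensitive substring search over every value in the log entry. The Python sketch below models that idea on a plain dictionary; it is an approximation for illustration, not Logging's matching algorithm, and the sample entries are invented.

```python
def leaf_values(value):
    """Yield every scalar inside nested dicts and lists as a string."""
    if isinstance(value, dict):
        for child in value.values():
            yield from leaf_values(child)
    elif isinstance(value, list):
        for child in value:
            yield from leaf_values(child)
    else:
        yield str(value)


def has(entry: dict, term: str) -> bool:
    """Model one global restriction: does any field contain the term?"""
    needle = term.lower()
    return any(needle in leaf.lower() for leaf in leaf_values(entry))


def matches(entry: dict) -> bool:
    """Model the query: cat AND (hat OR bat)."""
    return has(entry, "cat") and (has(entry, "hat") or has(entry, "bat"))


entry = {"textPayload": "The cat sat", "jsonPayload": {"note": "on the HAT"}}
print(matches(entry))                        # prints True
print(matches({"textPayload": "cat only"}))  # prints False
```

Each of the three terms is tested on its own across all fields, and only then are the three results combined with AND and OR.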

Functions

Built-in functions can be used as global restrictions in queries:

function = identifier ( [ argument { , argument } ] ), where argument is a value, field name, or parenthesized expression.

Below are the different functions:

log_id

The log_id function returns the log entries whose logName field matches the given [LOG_ID] argument:

Syntax:

log_id([LOG_ID])
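To find the [LOG_ID] for an entry you already have, you can take it from the entry's logName. The Python sketch below assumes the logName has the form `projects/[PROJECT_ID]/logs/[LOG_ID]` with the log ID URL-encoded, which is how the LogEntry reference describes it but is not stated in this article. The project name `my-project` is a placeholder and `log_id_of` is a hypothetical helper.

```python
from urllib.parse import unquote


def log_id_of(log_name: str) -> str:
    """Return the decoded log ID that follows "/logs/" in a logName."""
    _, _, encoded = log_name.partition("/logs/")
    return unquote(encoded)


log_name = "projects/my-project/logs/cloudaudit.googleapis.com%2Factivity"
log_id = log_id_of(log_name)
print(f'log_id("{log_id}")')
```

The printed text is the restriction you would place in a query, here `log_id("cloudaudit.googleapis.com/activity")`.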

source

The source function looks for log entries from a specific resource in the hierarchy of organizations, folders, and Cloud projects.

The source function does not match any of the child resources. For example, using source(folders/folder_123) matches logs from the folder_123 resource rather than logs from the Cloud project resources included within folder_123.

Syntax:

source(RESOURCE_TYPE/RESOURCE_ID)

sample

The sample function chooses a fraction of all log entries:

Syntax:

sample([FIELD], [FRACTION])

[FIELD] is the name of a log entry field, such as logName or jsonPayload.a_field. The field's value determines whether the log entry is included in the sample. The field type must be either string or numeric. Setting [FIELD] to insertId is a good choice because that field has a distinct value for each log entry.

[FRACTION] is the fraction of log entries that have values for [FIELD] to include. It is a number greater than 0.0 and no greater than 1.0. For example, if you enter 0.01, the sample contains around one percent of all log entries that have values for [FIELD]. If [FRACTION] is 1, all log entries that have values for [FIELD] are selected.
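One way to picture value-based sampling is to hash the field's value and keep the entry when the hash falls below the requested fraction. The Python sketch below does that with SHA-256. The hash function and the helper name `in_sample` are assumptions chosen for this example; the article does not say which method Logging uses.

```python
import hashlib


def in_sample(field_value: str, fraction: float) -> bool:
    """Decide deterministically whether a field value falls inside the sample."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be greater than 0.0 and no greater than 1.0")
    digest = hashlib.sha256(field_value.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return bucket < fraction * 2**64


# Hypothetical insertId values, one per log entry.
ids = [f"insert-id-{n}" for n in range(10_000)]
kept = [value for value in ids if in_sample(value, 0.01)]
print(len(kept))  # roughly one percent of 10,000

# The same value always gets the same answer, and a fraction of 1 keeps everything.
print(in_sample("insert-id-7", 0.01) == in_sample("insert-id-7", 0.01))
print(all(in_sample(value, 1.0) for value in ids))
```

Because the decision depends only on the field's value, a field with many distinct values, such as insertId, spreads entries evenly across the sample.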

ip_in_net

The ip_in_net function determines whether an IP address in a log entry belongs to a subnet. You can use this to determine whether a request came from an internal or external source.

Syntax:

ip_in_net([FIELD], [SUBNET])

[FIELD] is a string-valued log entry field containing an IP address or range. The field can be repeated, in which case only one of the repeated values needs to have an address or range in the subnet.

[SUBNET] is a string constant representing an IP address or range. It is an error if [SUBNET] is not a valid IP address or range.
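The same membership test can be reproduced locally with Python's standard ipaddress module, which is handy for checking a subnet string before putting it in a query. This is a sketch of the semantics described above, not the function Logging runs; `ip_in_net` and `any_ip_in_net` are hypothetical Python helpers named after the query function.

```python
import ipaddress


def ip_in_net(value: str, subnet: str) -> bool:
    """Return True if the address or range in `value` lies inside `subnet`."""
    net = ipaddress.ip_network(subnet, strict=False)  # raises ValueError if invalid
    candidate = ipaddress.ip_network(value, strict=False)
    if candidate.version != net.version:
        return False  # an IPv4 value can never sit inside an IPv6 subnet, or vice versa
    return candidate.subnet_of(net)


def any_ip_in_net(values: list[str], subnet: str) -> bool:
    """Repeated field: one matching value is enough."""
    return any(ip_in_net(value, subnet) for value in values)


print(ip_in_net("10.1.2.3", "10.0.0.0/8"))            # prints True
print(ip_in_net("192.168.1.0/24", "192.168.0.0/16"))  # prints True
print(ip_in_net("8.8.8.8", "10.0.0.0/8"))             # prints False
print(any_ip_in_net(["8.8.8.8", "10.9.9.9"], "10.0.0.0/8"))  # prints True
```

An invalid subnet string raises ValueError in this sketch, which parallels the rule that an invalid [SUBNET] is an error.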

Searching by time

You can set specific limits on the date and time of the log entries to display in the interface. For example, if you include the following conditions in your query, the preview displays only the log entries from the specified 30-minute period, and you won't be able to scroll outside of that time frame:

timestamp >= "2016-11-29T23:00:00Z" AND timestamp <= "2016-11-29T23:30:00Z"

When you write a query with a timestamp, you must use dates and times in the format shown above.
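If you generate time bounds in code, the timestamps need to come out in exactly this shape. The following Python sketch formats a start time and a window length into the two timestamp conditions. The helper names `rfc3339` and `time_window_filter` are hypothetical, and the sketch always renders times in UTC with a trailing Z.

```python
from datetime import datetime, timedelta, timezone


def rfc3339(moment: datetime) -> str:
    """Format a timezone-aware datetime as a UTC timestamp with second precision."""
    return moment.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def time_window_filter(start: datetime, length: timedelta) -> str:
    """Build the two timestamp conditions that bound a time window."""
    end = start + length
    return f'timestamp >= "{rfc3339(start)}" AND timestamp <= "{rfc3339(end)}"'


start = datetime(2016, 11, 29, 23, 0, tzinfo=timezone.utc)
print(time_window_filter(start, timedelta(minutes=30)))
```

For the start time used here, the output covers the same 30-minute period as the example conditions.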

Using regular expressions

Regular expressions can be used to build queries and filters for sinks, metrics, and anywhere log filters are used. Regular expressions can be used in the Query builder and with the Google Cloud CLI.

The following are the characteristics of regular expression queries:

- A regular expression can only match fields of the string type.

- String normalization is not used; for example, kubernetes is not the same as KUBERNETES.

- By default, queries are not anchored and are case-sensitive.

- Boolean operators can be used to join multiple regular expressions on the right-hand side of the regular expression comparison operators, =~ and !~.

The following is the structure of a regular expression query:

Match a pattern:

jsonPayload.message =~ "regular expression pattern"

Does not match a pattern:

jsonPayload.message !~ "regular expression pattern"

The =~ and !~ operators turn the query into a regular expression query, and the pattern to be matched must be enclosed in double quotation marks. To search for patterns that contain double quotation marks, escape them with a backslash.
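The "not anchored" and "case-sensitive" defaults are easy to try out locally. The Python sketch below uses the standard re module as a stand-in to show both behaviors, then builds a regular expression restriction with embedded double quotation marks escaped. Python's re is not the engine Logging uses, so treat this only as an illustration of the defaults; `regex_restriction` is a hypothetical helper, and it escapes double quotation marks only, as described above.

```python
import re

message = "Started Kubernetes pod"

# Case-sensitive by default: the lowercase pattern does not match.
print(re.search("kubernetes", message) is None)    # prints True

# Not anchored by default: the pattern can match in the middle of the text.
print(re.search("Kube", message) is not None)      # prints True

# Anchoring is opt-in: "^" requires the match to start at the beginning.
print(re.search("^Kube", message) is None)         # prints True


def regex_restriction(field: str, pattern: str, negate: bool = False) -> str:
    """Build FIELD =~ "PATTERN" (or !~), escaping double quotation marks in the pattern."""
    escaped = pattern.replace('"', '\\"')
    operator = "!~" if negate else "=~"
    return f'{field} {operator} "{escaped}"'


print(regex_restriction("jsonPayload.message", 'say "hi"'))
print(regex_restriction("jsonPayload.message", "^Started", negate=True))
```

The first restriction prints as `jsonPayload.message =~ "say \"hi\""`, with each inner quotation mark preceded by a backslash.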

Finding log entries quickly

Logging always indexes the following LogEntry fields:

- resource.type

- resource.labels.*

- logName

- severity

- timestamp

- insertId

- operation.id

- trace

- httpRequest.status

- labels.*

- split.uid

Custom indexed fields can also be added to any log bucket.

Optimize your queries

Reduce the number of logs, the number of log entries, or the time span of your searches to make them faster. Better yet, you can reduce all three.

Examples:

Use the right log name: Specify the log that contains the log entries you're interested in. Examine one of your log entries to ensure you have the correct log name.

Choose the right log entries: If you know the log entries you're looking for are coming from a particular VM instance, identify it. Examine one of the log entries you wish to search for to ensure that the label names are correct.

Choose the right time period: Set a time period for the search. The GNU/Linux date command is a simple way to determine relevant timestamps in RFC 3339 format.
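The three tips can be combined into a single query. The Python sketch below assembles one from a log ID, a Compute Engine instance ID, and a time range, and also enforces the 20,000-character query limit. The helper name `build_query` and the sample log ID and instance ID are hypothetical; check a real log entry for the values that apply to you.

```python
MAX_QUERY_LENGTH = 20_000  # a query cannot be longer than 20,000 characters


def build_query(log_id: str, instance_id: str, start: str, end: str) -> str:
    """Narrow a search by log name, by VM instance, and by time period."""
    clauses = [
        f'log_id("{log_id}")',                             # the right log name
        'resource.type = "gce_instance"',                  # the right resource type
        f'resource.labels.instance_id = "{instance_id}"',  # the right log entries
        f'timestamp >= "{start}"',                         # the right time period
        f'timestamp <= "{end}"',
    ]
    query = " AND ".join(clauses)
    if len(query) > MAX_QUERY_LENGTH:
        raise ValueError("query exceeds the 20,000-character limit")
    return query


print(
    build_query(
        "syslog",
        "1234567890",
        "2016-11-29T23:00:00Z",
        "2016-11-29T23:30:00Z",
    )
)
```

Every clause uses a field from the always-indexed list, so each one narrows the search before any payload is examined.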