Introduction

We will be using the Internet Movie Database for the sentiment analysis purpose, otherwise very well known as IMDb data. IF we want to watch a new movie, we always look at IMDb. We will be taking those movie reviews and using open-source sentiment, toolkit, and compute sentiment score for each study. We also have the actual manually tagged flag as positive or negative for a review in this data set. So, we can even compare how well these open-source tool kits are doing concerning a human label or tag provider. So, let us get started.

Implementation

We will use the standard libraries of Pandas, NumPy, Spacy, and TextBlob library, a widely used open-source sentiment toolkit. It is derived from Google. And then, we have a 'classification report' as well, which we can use to compare how the sentiment, TextBlob sentiment prediction fares concerning the human labels that we have. So, let us import these libraries.

import pandas as pd

import numpy as np

import spacy

from textblob import TextBlob

from sklearn.metrics import classification_report

Let us read the data set.

data = pd.read_csv('https://drive.google.com/uc?id=1_nbSPqf4a3x2gH38syU75gFh5JXojY6B',nrows=1000)

print(data.head())

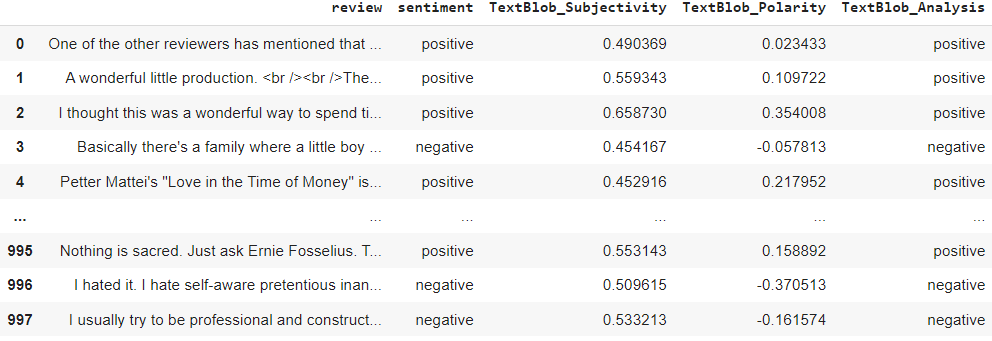

The data set looks like this. It has two columns, the review, and the sentiment. We have all these reviews here as documents. And for each study, we will have a human label tag as positive or negative, which we will not use right now; we can use it later to compare how good our prediction is.

data['TextBlob_Subjectivity'] = data['review'].apply(lambda x: TextBlob(x).sentiment.subjectivity)

data['TextBlob_Polarity'] = data['review'].apply(lambda x: TextBlob(x).sentiment.polarity)

I have written two 'apply' functions very straightforwardly. So, this TextBlob library outputs two scores. One score is called the Subjectivity score, and another score is the Polarity score. The polarity score is the sentiment score, and it ranges from -1 to +1. The closer it is to -1, the negative it is, and the closer it is to +1, the positive it is. And if it is a little bit around 0, it is neutral.

Then, the subjectivity score ranges between 0 to 1, and what gives us output is it just says how subjective a statement is. For example, the subjectivity score might be very high for a line like "you are gorgeous" because "gorgeous" is subjective. It is not an objective thing. So, it gives a score between 0 to 1; the closer it means that a particular line of text is very subjective.

We will be looking only at the polarity score because that is the sentiment score we are interested in. I have written the apply function where I am taking these reviews and applying TextBlob for that review.

We will convert the text into a TextBlob object. And then, I use the "dot sentiment dot subjectivity" function to get the subjectivity score. Similarly, for polarity, we will

convert the text into a TextBlob object.

data[['TextBlob_Subjectivity','TextBlob_Polarity']]

We can see the polarity score ranges from +1 to -1. This is the score that we are interested in. We will scale the scores as positive or negative because our human label tag is in that particular format.

data['TextBlob_Analysis'] = data['TextBlob_Polarity'].apply(lambda x: 'negative' if x<0 else 'positive')

I am getting another apply function where I flag the score as unfavourable if the score is less than 0, and if it is 0 or above 0, I am flagging that as positive. So, if we print the data, we have a new column called TextBlob Analysis. We can compare and see how many match the human-labelled data, which shows how powerful these toolkits are.

print(classification_report(data['sentiment'], data['TextBlob_Analysis']))

Here, I am using this classification report. If we see this matrix, some of us

might have heard of it as a confusion matrix because it is very confusing. It says that we have two matrices, precision, and recall.

When we say negative, and it says 88% precision, it just says, of all negative sentiment

scores, that TextBlob predicted, 88% of those scores are negative in the human label aspect, which is pretty good. It is almost 90%. And similarly, if I see the recall, it is 46%.

It means that we can take only about 46 or 50% of all the human-labeled negatives in TextBlob, which implies that TextBlob flagged the remaining 50%, where the human-labeled as unfavourable have flagged as positive in the prediction.

So, maybe they are sarcastic, or it is not capturing that part that a human can capture. So, otherwise, in positive, we see 64 and overall, we see about 76, this 76 is the overall

position. Out of all the positive and negative predicted by the TextBlob, 76% of them are positive and negative to the human label here,

Check out this problem - First Missing Positive