Introduction:

Before jumping to Optimal substructure and Overlapping subproblems, let us first see what does dynamic programming mean?

Dynamic programming is a technique we apply to recursive problems. Suppose in any recursive problem we compute the answer for the same subproblem multiple times. In that case, we can store the answer for that subproblem to save the time of re-calculating the same subproblem, which helps us to bring down the exponential time complexity to the polynomial-time complexity. Hence, to apply dynamic programming, recursive problems should have Optimal substructure or Overlapping subproblems, which we will discuss further.

Overlapping subproblems

As we know when we solve the recursive problems, we first solve the subproblem and then use the answer of the subproblem to solve the main problem. It might be possible that multiple problems require the answer to the same subproblem to solve them. Hence, here we can apply the dynamic programming concept to store the answer for all the subproblems to avoid re-calculating them again and again. Most of the time this helps us to reduce the time complexity from exponential to polynomial. On the other hand, if any problem has no subproblem or overlapping subproblem, then there is no need to apply dynamic programming because we don’t need to answer the same subproblem multiple times.

Let us understand this with an example.

We will solve the problem to find the Nth Fibonacci number.

Let us first see the recursive code for this problem.

|

class Main {

|

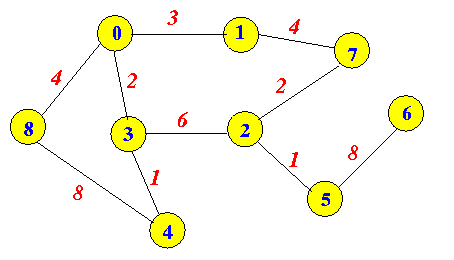

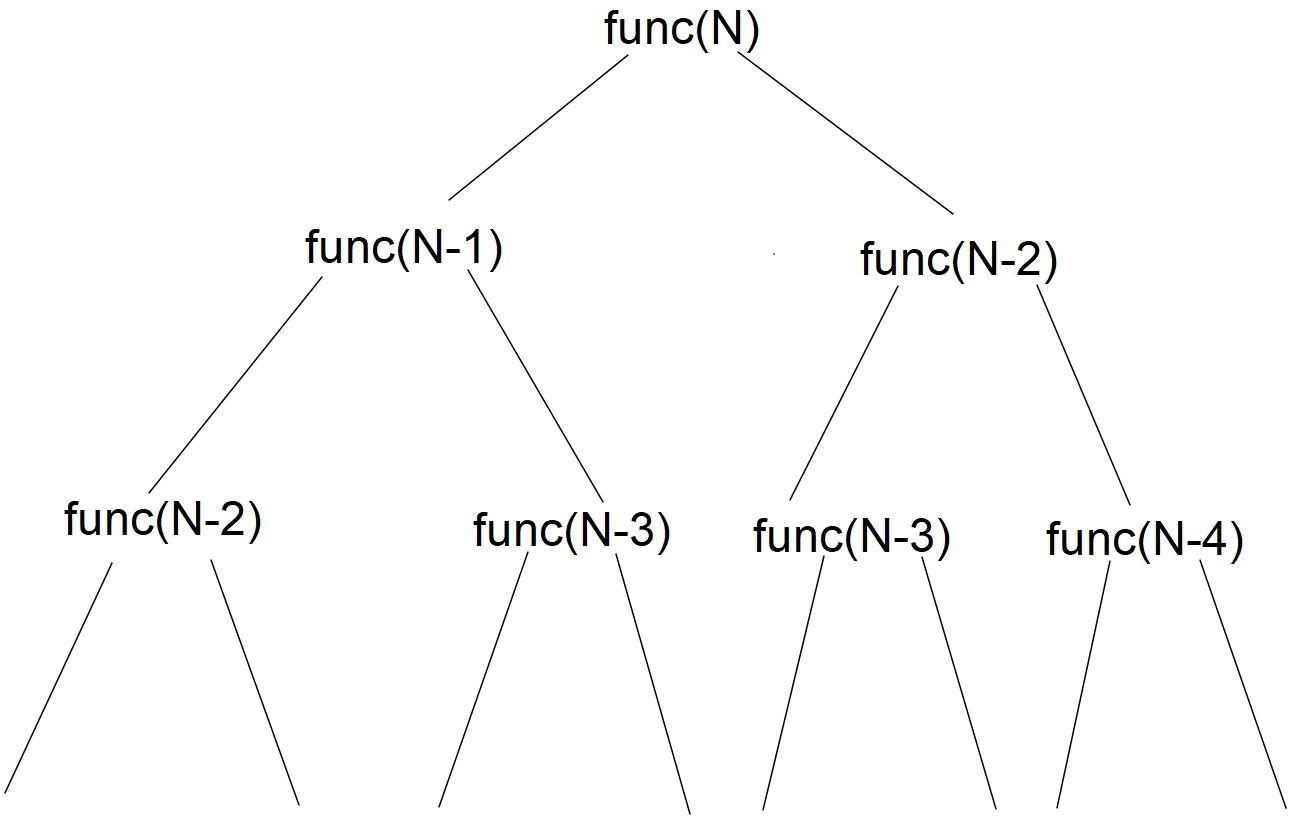

In the above code, we have a lot of subproblems that are being calculated a lot of times, making the overall time complexity exponential, i.e. O(2 ^ N). Let us make it more clear with a recursive tree.

In the above recursive tree, we can see that we are calculating the answer for two calls((N - 2) and (N - 3)) twice.

This is the reason why storing the answer for the overlapping subproblems reduces the time complexity.

There are two ways to solve this:

- Memoization method

- Tabulation method

Memoization Method:

In the memoization method, we do some updates on the recursive code. We store the answer for the current state in the D.P. table. At any moment if we encounter any pre-calculated state we return the answer from the D.P. table.

Refer to the below code for more understanding.

|

class Main { // Declaring a DP table. static int dp[]; public static int fib(int n) { if(n <= 1) return n; /* If the answer for the current recursive state is already calculated return it from the DP table. */ if(dp[n] != -1) return dp[n]; return dp[n] = fib(n - 1) + fib(n - 2); } public static void main (String[] args) { int n = 9; dp = new int[n]; /* -1 denotes that the answer for the current recursive state is not calculated yet. */ Arrays.fill(dp, -1); System.out.println(fib(n)); } } |

After memoizing the above recursive code we have decreased the time complexity from exponential time O(2N) to linear time O(N). We are also using O(N) space to maintain the D.P. table.

Tabulation Method:

In the tabulation method, we solve the problem recursively using the D.P. table. We first calculate the answer for the smaller subproblems and then using the answer of these smaller subproblems we calculate the answer for bigger subproblems.

Refer to the below code for more understanding.

|

class Main { //Declaring a DP table. static int dp[]; public static void main (String[] args) { int n = 9; dp = new int[n]; //Base case dp[0] = 0; dp[1] = 1; for(int i = 2;i <= n;i++){ dp[i] = dp[i - 1] + dp[i - 2]; } System.out.println(dp[n]); } } |

Using the tabulation method in the above code we have decreased the time complexity from exponential time O(2N) to linear time O(N). We are also using O(N) space to maintain the D.P. table.

In both types of methods, i.e., memoization and tabulation, we store the answer for the subproblems and use them to find the answer for the bigger problems.

Check out Longest Common Substring