Develop Event Receiver

This section of the "Overview of Eventarc" series covers how to develop an event receiver.

Event providers (sources) can deliver the following event types:

- Cloud Audit Logs events

- Direct events

- Messages published to Pub/Sub topics

Event Receiver Response

To inform the router that an event was successfully received, your receiver service should return an HTTP 2xx response. The router treats any other HTTP response as a delivery failure and resends the event.
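The acknowledgment rule above fits in a few lines of Python. This is only an illustrative sketch of the rule as stated; the function name `is_acknowledged` is hypothetical and not part of any Eventarc API.

```python
def is_acknowledged(status_code: int) -> bool:
    """Return True when a receiver's HTTP status counts as successful receipt."""
    # Any 2xx status tells the router that the event was received.
    return 200 <= status_code <= 299


# 200 and 204 acknowledge the event; 400 and 500 count as delivery failures
# and would cause the router to resend the event.
results = {code: is_acknowledged(code) for code in (200, 204, 400, 500)}
print(results)
```

One consequence is that a receiver should return a non-2xx status only when it actually wants the event to be delivered again.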

Open Source

The Google CloudEvents GitHub repository contains the structure of the HTTP body for every event.

The repository includes the following to help you understand and use CloudEvents data in your programming language:

- Google Protocol Buffers for CloudEvents data payloads

- Generated JSON schemas

- A public JSON schema catalog

Using a CloudEvents SDK library

You can develop event receiver services using a CloudEvents SDK library. The SDK is available for the following languages:

- C#

- Go

- Java

- Ruby

- JavaScript

- Python

Sample Receiver Source Code

The following sample shows how to read Cloud Storage events, delivered through Cloud Audit Logs, in a service deployed to Cloud Run.

from flask import Flask, request

app = Flask(__name__)

@app.route('/', methods=['POST'])
def index():
    # Gets the GCS bucket name from the CloudEvent header
    # Example: "storage.googleapis.com/projects/_/buckets/my-bucket"
    bucket = request.headers.get('ce-subject')
    print(f"Detected change in Cloud Storage bucket: {bucket}")
    return (f"Detected change in Cloud Storage bucket: {bucket}", 200)
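The `ce-subject` header in the sample holds a resource path rather than a bare bucket name. If only the bucket name is needed, it can be pulled out of that path. The sketch below assumes the subject follows the shape shown in the sample's comment; `bucket_from_subject` is a hypothetical helper and not part of any SDK.

```python
from typing import Optional


def bucket_from_subject(subject: str) -> Optional[str]:
    """Extract the bucket name from a ce-subject value, or None if absent."""
    parts = subject.split("/")
    # The bucket name is the path segment that follows "buckets".
    if "buckets" in parts:
        index = parts.index("buckets")
        if index + 1 < len(parts):
            return parts[index + 1]
    return None


print(bucket_from_subject("storage.googleapis.com/projects/_/buckets/my-bucket"))
```

Returning `None` instead of raising keeps the handler from failing on a subject it does not recognize.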


To read Pub/Sub events in a service deployed to Cloud Run, see the code below:

import base64

from flask import Flask, request

app = Flask(__name__)

@app.route('/', methods=['POST'])
def index():
    # Parse the JSON body of the incoming request
    data = request.get_json()
    if not data:
        msg = 'no Pub/Sub message received'
        print(f'error: {msg}')
        return f'Bad Request: {msg}', 400

    if not isinstance(data, dict) or 'message' not in data:
        msg = 'invalid Pub/Sub message format'
        print(f'error: {msg}')
        return f'Bad Request: {msg}', 400

    pubsub_message = data['message']

    # The message payload is base64-encoded; fall back to 'World' if absent
    name = 'World'
    if isinstance(pubsub_message, dict) and 'data' in pubsub_message:
        name = base64.b64decode(pubsub_message['data']).decode('utf-8').strip()

    resp = f"Hello, {name}! ID: {request.headers.get('ce-id')}"
    print(resp)

    return (resp, 200)
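To try the decoding step without deploying anything, you can build a request body by hand and run it through the same logic. The sketch below uses only the standard library; `make_envelope` and `extract_name` are hypothetical helpers that mirror the sample above, and the envelope contains only the `message.data` field that the sample reads.

```python
import base64
import json


def make_envelope(text: str) -> dict:
    """Build a body shaped like the one the sample handler expects."""
    encoded = base64.b64encode(text.encode("utf-8")).decode("ascii")
    return {"message": {"data": encoded}}


def extract_name(envelope: dict) -> str:
    """Mirror the sample's decoding logic, defaulting to 'World'."""
    message = envelope.get("message")
    if isinstance(message, dict) and "data" in message:
        return base64.b64decode(message["data"]).decode("utf-8").strip()
    return "World"


# Round-trip through JSON, as an HTTP request body would be.
body = json.dumps(make_envelope("Eventarc"))
print(extract_name(json.loads(body)))
```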


Receive events using the Cloud Audit Logs (gcloud CLI)

The first topic in the "Overview of Eventarc" series is how to receive events from Cloud Storage in an unauthenticated Cloud Run service using Eventarc.

Step 1: Make sure you have an account in Google Cloud; if not, create one.

Step 2: Select or create a Google Cloud project on the project selector page in the Google Cloud console.

Step 3: Ensure that billing is enabled for your project.

Step 4: Enable the Cloud Run, Cloud Logging, Eventarc, Pub/Sub, and Cloud Build APIs.

Step 5: Update the gcloud components with the following command:

gcloud components update

Step 6: Log in to your account.

gcloud auth login

Step 7: Set the configuration variables:

gcloud config set project PROJECT_ID

gcloud config set run/region us-central1

gcloud config set run/platform managed

gcloud config set eventarc/location us-central1


Step 8: In Google Cloud Storage, enable the following Cloud Audit Logs log types:

- Admin Read

- Data Read

- Data Write

Step 9: Give the Compute Engine service account the eventarc.eventReceiver role:

gcloud projects add-iam-policy-binding PROJECT_ID \
  --member=serviceAccount:PROJECT_NUMBER-compute@developer.gserviceaccount.com \
  --role='roles/eventarc.eventReceiver'

Step 10: Download and install the Git source code management tool.

Create a Cloud Storage Bucket

Create a Cloud Storage bucket to act as the event source:

gsutil mb -l us-central1 gs://events-quickstart-PROJECT_ID/

Once the event source is created, you can deploy the event receiver service on Cloud Run.

Deploy the event receiver service to Cloud Run

Set up a Cloud Run service to receive and log events. Samples are available in languages such as Java, Python, C#, PHP, and more. This section uses the Python sample. To deploy the sample event receiver service:

Step 1: Clone the repository

git clone https://github.com/GoogleCloudPlatform/python-docs-samples.git

cd python-docs-samples/eventarc/audit-storage

Step 2: Build the container image and upload it using Cloud Build.

gcloud builds submit --tag gcr.io/PROJECT_ID/helloworld-events

Step 3: Deploy the container image to Cloud Run.

gcloud run deploy helloworld-events \
  --image gcr.io/PROJECT_ID/helloworld-events \
  --allow-unauthenticated

After a successful deployment, the command line displays the service URL.

Create an Eventarc trigger

In this section of the "Overview of Eventarc" series, we create an Eventarc trigger. The trigger sends events from the Cloud Storage bucket to the helloworld-events Cloud Run service.

Step 1: Create a trigger that uses the Google Cloud project default service account and filters Cloud Storage events:

gcloud eventarc triggers create events-quickstart-trigger \
  --destination-run-service=helloworld-events \
  --destination-run-region=us-central1 \
  --event-filters="type=google.cloud.audit.log.v1.written" \
  --event-filters="serviceName=storage.googleapis.com" \
  --event-filters="methodName=storage.objects.create" \
  --service-account=PROJECT_NUMBER-compute@developer.gserviceaccount.com


This creates a trigger named events-quickstart-trigger.

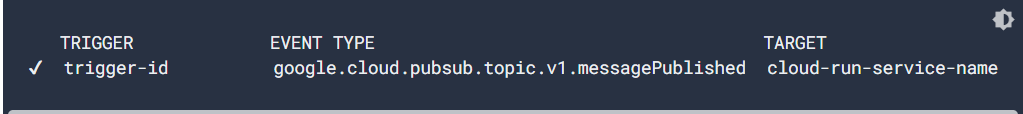

Step 2: Run the following command to confirm that events-quickstart-trigger was created successfully:

gcloud eventarc triggers list --location=us-central1
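The three `--event-filters` flags in Step 1 act together: an event is routed only when it matches every filter. The sketch below is a simplified model of that behaviour, not Eventarc's implementation. The `matches` function is hypothetical, and the attribute dictionaries reuse the filter keys from the command for readability.

```python
TRIGGER_FILTERS = {
    "type": "google.cloud.audit.log.v1.written",
    "serviceName": "storage.googleapis.com",
    "methodName": "storage.objects.create",
}


def matches(filters: dict, attributes: dict) -> bool:
    """Return True only if every filter equals the corresponding attribute."""
    return all(attributes.get(key) == value for key, value in filters.items())


# An object upload matches all three filters; a delete differs in methodName.
upload = dict(TRIGGER_FILTERS)
delete = {**TRIGGER_FILTERS, "methodName": "storage.objects.delete"}
print(matches(TRIGGER_FILTERS, upload), matches(TRIGGER_FILTERS, delete))
```

This is why uploading a file in the next section generates an event for this trigger, while other Cloud Storage operations do not.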

Generating and Viewing an Event

Step 1: Upload a text file to Cloud Storage to generate an event:

echo "Hello World" > random.txt

gsutil cp random.txt gs://events-quickstart-PROJECT_ID/random.txt

The Cloud Run service logs the event's message once the upload creates an event.

Step 2: View the event-related log entries created by your service:

gcloud logging read "resource.type=cloud_run_revision AND resource.labels.service_name=helloworld-events AND NOT resource.type=build"

This completes the first part of the "Overview of Eventarc" series, receiving events using Cloud Audit Logs. It covered creating a Cloud Storage bucket, deploying an event receiver service to Cloud Run, creating an Eventarc trigger, and generating and viewing an event.

Receive events using Pub/Sub messages

In this part of the "Overview of Eventarc" series, we walk through how to receive events using Pub/Sub messages, step by step.

If you are new to Google Cloud, create an account. Ensure that billing is enabled for your Cloud project, and then install and initialize the Google Cloud CLI.

Set up a Google Service Account

Create a user-provided service account and grant it specific roles so that the event forwarder component can retrieve events from Pub/Sub.

Step 1: Create a service account, referred to here as TRIGGER_GSA, that is used to create triggers:

TRIGGER_GSA=eventarc-gke-triggers

gcloud iam service-accounts create $TRIGGER_GSA

Step 2: Grant the monitoring.metricWriter and pubsub.subscriber roles to the service account:

PROJECT_ID=$(gcloud config get-value project)

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member "serviceAccount:$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com" \
  --role "roles/pubsub.subscriber"

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member "serviceAccount:$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com" \
  --role "roles/monitoring.metricWriter"
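The `--member` value in both commands is assembled from the service account name and the project ID. The sketch below shows that string construction in isolation. `service_account_member` is a hypothetical helper, and `my-project` is a placeholder project ID.

```python
def service_account_member(name: str, project_id: str) -> str:
    """Build the IAM member string for a user-managed service account."""
    email = f"{name}@{project_id}.iam.gserviceaccount.com"
    return f"serviceAccount:{email}"


# "eventarc-gke-triggers" is the TRIGGER_GSA value from Step 1.
print(service_account_member("eventarc-gke-triggers", "my-project"))
```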


Create a GKE Destination

We can use a prebuilt image, gcr.io/cloudrun/hello, to deploy a GKE service that will receive and log events:

Step 1: Create a Kubernetes deployment:

SERVICE_NAME=hello-gke

kubectl create deployment $SERVICE_NAME \
  --image=gcr.io/cloudrun/hello

Step 2: Expose it as a Kubernetes service:

kubectl expose deployment $SERVICE_NAME \
  --type LoadBalancer --port 80 --target-port 8080

Create a Pub/Sub Trigger

The Eventarc trigger sends an event to the hello-gke GKE service whenever a message is published to the Pub/Sub topic.

Step 1: To listen for Pub/Sub messages, create a GKE trigger. The following command creates the trigger with a new Pub/Sub topic:

gcloud eventarc triggers create gke-trigger-pubsub \
  --destination-gke-cluster=events-cluster \
  --destination-gke-location=us-central1 \
  --destination-gke-namespace=default \
  --destination-gke-service=hello-gke \
  --destination-gke-path=/ \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --service-account=$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com

To use an existing Pub/Sub topic instead, run the following command:

gcloud eventarc triggers create gke-trigger-pubsub \
  --destination-gke-cluster=events-cluster \
  --destination-gke-location=us-central1 \
  --destination-gke-namespace=default \
  --destination-gke-service=hello-gke \
  --destination-gke-path=/ \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --service-account=$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com \
  --transport-topic=projects/PROJECT_ID/topics/TOPIC_ID


- Replace PROJECT_ID with your Google Cloud project ID.

- Replace TOPIC_ID with the ID of the existing Pub/Sub topic.
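The `--transport-topic` value follows the pattern `projects/PROJECT_ID/topics/TOPIC_ID` shown in the command. The sketch below builds and validates a path of that shape. Both functions are hypothetical helpers, and `my-project` and `my-topic` are placeholder values.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Build the full resource name of a Pub/Sub topic."""
    return f"projects/{project_id}/topics/{topic_id}"


def topic_id_from_path(path: str) -> str:
    """Return the topic ID from a full topic path, or raise ValueError."""
    parts = path.split("/")
    if len(parts) != 4 or parts[0] != "projects" or parts[2] != "topics":
        raise ValueError(f"unexpected topic path: {path!r}")
    return parts[3]


path = topic_path("my-project", "my-topic")
print(path, topic_id_from_path(path))
```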

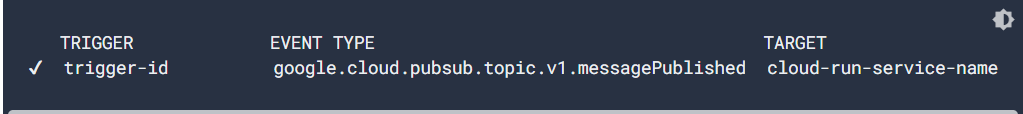

Step 2: Make sure that the trigger was created successfully:

gcloud eventarc triggers list

The output should look like this:

NAME: gke-trigger-pubsub

TYPE: google.cloud.pubsub.topic.v1.messagePublished

DESTINATION: GKE: hello-gke

ACTIVE: Yes
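If you want to check this output from a script, the `KEY: value` lines can be parsed into a dictionary. The sketch below assumes the output describes a single trigger in exactly the format shown above; `parse_fields` is a hypothetical helper.

```python
SAMPLE_OUTPUT = """\
NAME: gke-trigger-pubsub
TYPE: google.cloud.pubsub.topic.v1.messagePublished
DESTINATION: GKE: hello-gke
ACTIVE: Yes
"""


def parse_fields(text: str) -> dict:
    """Parse 'KEY: value' lines into a dict, splitting on the first colon."""
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            fields[key.strip()] = value.strip()
    return fields


trigger = parse_fields(SAMPLE_OUTPUT)
print(trigger["NAME"], trigger["ACTIVE"] == "Yes")
```

Splitting on the first colon only keeps values such as `GKE: hello-gke` intact.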

Generate and View an Event

Publishing a text message to the Pub/Sub topic generates an event that is delivered to the GKE service. You can then see the message in the pod logs.

Step 1: Find the Pub/Sub topic and set it as an environment variable:

TOPIC=$(gcloud eventarc triggers describe gke-trigger-pubsub --format='value(transport.pubsub.topic)')

Step 2: To generate an event, publish a message to the Pub/Sub topic:

gcloud pubsub topics publish $TOPIC --message="Hello World"

Step 3: View the event message:

a. Find the pod ID:

kubectl get pods

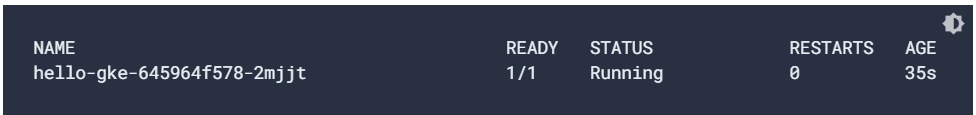

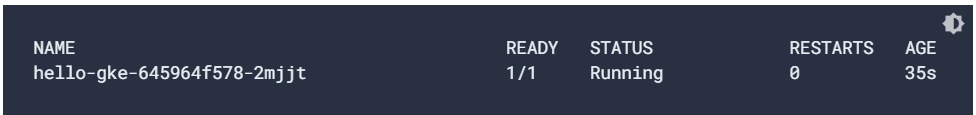

The output screen should look like the following:

b. Check the log of the pod:

kubectl logs NAME

Note: Replace NAME with the pod name that you copied.

c. Look for a log entry matching:

2022/02/24 22:23:49 Hello from Cloud Run! The container started successfully and is listening for HTTP requests on $PORT

{"severity":"INFO","eventType":"google.cloud.pubsub.topic.v1.messagePublished","message":"Received event of type google.cloud.pubsub.topic.v1.messagePublished. Event data: Hello World"[...]}
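The second log line is structured JSON, so the event payload can be extracted programmatically. In the sketch below, `LOG_LINE` keeps only the fields visible above and leaves out the elided part of the entry. `event_data` is a hypothetical helper.

```python
import json
from typing import Optional

# Only the fields shown in the log entry above; the elided fields are left out.
LOG_LINE = (
    '{"severity":"INFO",'
    '"eventType":"google.cloud.pubsub.topic.v1.messagePublished",'
    '"message":"Received event of type '
    'google.cloud.pubsub.topic.v1.messagePublished. Event data: Hello World"}'
)


def event_data(log_line: str) -> Optional[str]:
    """Return the text after 'Event data: ' in the message, or None."""
    entry = json.loads(log_line)
    _, found, data = entry.get("message", "").partition("Event data: ")
    return data if found else None


print(event_data(LOG_LINE))
```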

Clean Up

Although Cloud Run does not charge when the service is not in use, you might still be charged for other resources, such as the container image stored in Container Registry, Eventarc resources, Pub/Sub messages, and the GKE cluster.

Migrate Pub/Sub Triggers

The last topic in the "Overview of Eventarc" series is migrating Pub/Sub triggers.

Eventarc can be used to migrate event triggers so that a Cloud Run for Anthos service can accept Pub/Sub events. This section assumes that your Cloud Run for Anthos service runs in a Google Kubernetes Engine (GKE) cluster and that you are migrating existing Events for Cloud Run for Anthos triggers. The migration must be run for each cluster.

The migration path avoids event loss while minimizing event duplication. The migration process includes the following steps:

Enable GKE Destinations

Enable GKE destinations and bind the required roles to the Eventarc service account so that Eventarc can manage resources in the GKE cluster.

Step 1: Enable GKE destinations for Eventarc:

gcloud eventarc gke-destinations init

Step 2: Enter y to bind the required role at the prompt. The following roles are bound:

- roles/compute.viewer

- roles/container.developer

- roles/iam.serviceAccountAdmin

Enable Workload Identity on a cluster

You can skip this step if Workload Identity is already enabled on your cluster.

Workload Identity is the recommended way for applications running on Google Kubernetes Engine (GKE) to access Google Cloud services because of its improved security properties and manageability. It is also required to forward Cloud Run for Anthos events using Eventarc.

To enable Workload Identity on an existing cluster, refer to Using Workload Identity.

Identifying existing Events for Cloud Run for Anthos triggers

Before migrating any existing Events for Cloud Run for Anthos triggers, retrieve the trigger details.

Step 1: List the cluster's current Events for Cloud Run for Anthos triggers:

gcloud beta events triggers list --namespace EVENTS_NAMESPACE

The namespace of your events broker should be used in place of EVENTS_NAMESPACE.

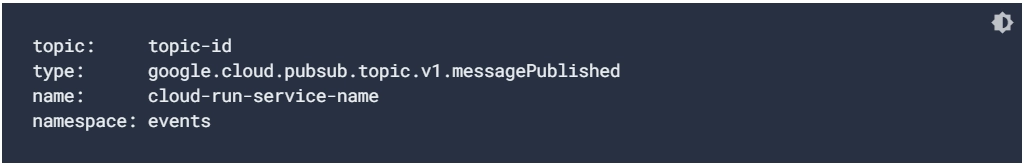

The output screen should look like the following:

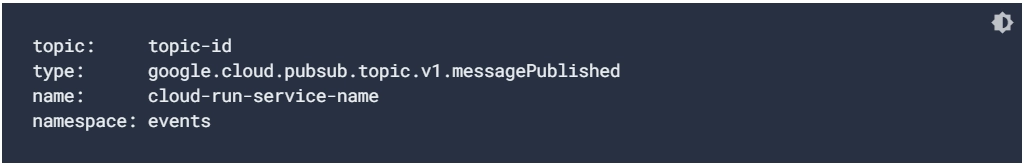

Step 2: To create the Eventarc trigger, you need the Pub/Sub topic ID. Get the topic ID for the existing Events for Cloud Run for Anthos trigger:

gcloud beta events triggers describe TRIGGER_ID \
  --platform gke --namespace EVENTS_NAMESPACE \
  --format="flattened(serialized_source.spec.topic,serialized_trigger.spec.filter.attributes.type,serialized_trigger.spec.subscriber.ref.name,serialized_trigger.spec.subscriber.ref.namespace)"

Replace TRIGGER_ID with the ID or fully qualified identifier of the existing Events for Cloud Run for Anthos trigger.

The output screen should look like the following:

Create an Eventarc trigger to replace the existing trigger

Before creating the Eventarc trigger, set up a user-managed service account and grant it specific roles so that Eventarc can manage events for Cloud Run for Anthos destinations.

Step 1: Create a GSA (Google Cloud Service Account):

TRIGGER_GSA=SERVICE_ACCOUNT_NAME

gcloud iam service-accounts create $TRIGGER_GSA

Grant the monitoring.metricWriter and pubsub.subscriber roles to the service account:

PROJECT_ID=$(gcloud config get-value project)

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member "serviceAccount:$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com" \
  --role "roles/pubsub.subscriber"

gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member "serviceAccount:$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com" \
  --role "roles/monitoring.metricWriter"

Step 2: Create a new Eventarc trigger based on the configuration of the existing Events for Cloud Run for Anthos trigger. All arguments, including the destination service, cluster, and Pub/Sub topic ID, should match the existing trigger.

gcloud eventarc triggers create EVENTARC_TRIGGER_NAME \
  --event-filters="type=google.cloud.pubsub.topic.v1.messagePublished" \
  --location LOCATION \
  --destination-gke-service=DESTINATION_SERVICE \
  --destination-gke-cluster=CLUSTER_NAME \
  --destination-gke-location=CLUSTER_LOCATION \
  --destination-gke-namespace=EVENTS_NAMESPACE \
  --destination-gke-path=/ \
  --service-account=$TRIGGER_GSA@$PROJECT_ID.iam.gserviceaccount.com \
  --transport-topic=projects/PROJECT_ID/topics/TOPIC_ID


Replace EVENTARC_TRIGGER_NAME with the name for the new Eventarc trigger and TOPIC_ID with the Pub/Sub topic ID that you retrieved previously.

Step 3: Confirm that the trigger was created successfully:

gcloud eventarc triggers list


Clean up after Migration

You can delete or remove the original Events for Cloud Run for Anthos trigger after testing and confirming the trigger's migration to Eventarc.

Delete the Events for Cloud Run for Anthos trigger:

gcloud beta events triggers delete TRIGGER_NAME \
  --platform gke \
  --namespace EVENTS_NAMESPACE \
  --quiet


An existing Events for Cloud Run for Anthos trigger has been transferred to Eventarc.
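The migration path described in this section minimizes event duplication but does not rule it out, so a receiver may occasionally see the same event more than once. One common way to tolerate this is to key processing on the event ID, which the Pub/Sub sample earlier reads from the `ce-id` header. The sketch below is a minimal illustration that assumes duplicate deliveries carry the same ID. `DeduplicatingReceiver` is a hypothetical class, and its in-memory set would not survive restarts or be shared across instances.

```python
class DeduplicatingReceiver:
    """Processes each event ID once and acknowledges repeated deliveries."""

    def __init__(self) -> None:
        self._seen_ids = set()  # in-memory only; lost on restart
        self.processed = []

    def handle(self, event_id: str, payload: str) -> int:
        """Return the HTTP status code to send back for this delivery."""
        if event_id in self._seen_ids:
            # Duplicate: acknowledge with a 2xx status but skip the work.
            return 204
        self._seen_ids.add(event_id)
        self.processed.append(payload)
        return 200


receiver = DeduplicatingReceiver()
statuses = [
    receiver.handle("event-1", "Hello World"),
    receiver.handle("event-1", "Hello World"),  # simulated duplicate
    receiver.handle("event-2", "Goodbye"),
]
print(statuses, receiver.processed)
```

Returning a 2xx status for the duplicate matters: any other status would be treated as a delivery failure and cause the event to be sent again.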

Frequently Asked Questions

What is GCP Eventarc?

Eventarc lets you deliver events from Google services, SaaS, and your own apps using loosely coupled services that react to state changes. Eventarc requires no infrastructure management, so you can optimize productivity and costs while building a modern, event-driven solution.

What is Cloud Run GCP?

Cloud Run is a managed compute platform that lets you run containers that are invoked through requests or events. Because Cloud Run is serverless, it abstracts away infrastructure management so that you can focus on building applications.

Is Cloud Run a PaaS?

Cloud Run is a PaaS-style platform that manages the entire container lifecycle. With Cloud Run for Anthos, which is Cloud Run with an extra layer of control, you can bring your own clusters and Pods from GKE.

Conclusion

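This article gave an overview of Eventarc. We covered developing an event receiver, receiving events using Cloud Audit Logs (creating a bucket, deploying a receiver service, and creating a trigger), receiving events using Pub/Sub messages, and migrating Pub/Sub triggers.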


Happy Learning!