Introduction

Deep neural networks (DNNs) have revolutionized various fields in recent years, achieving remarkable success in tasks such as image recognition and natural language processing. However, as DNNs become deeper and more complex, they demand significant computational resources and memory, which makes them impractical for resource-constrained environments like mobile devices and edge computing systems.

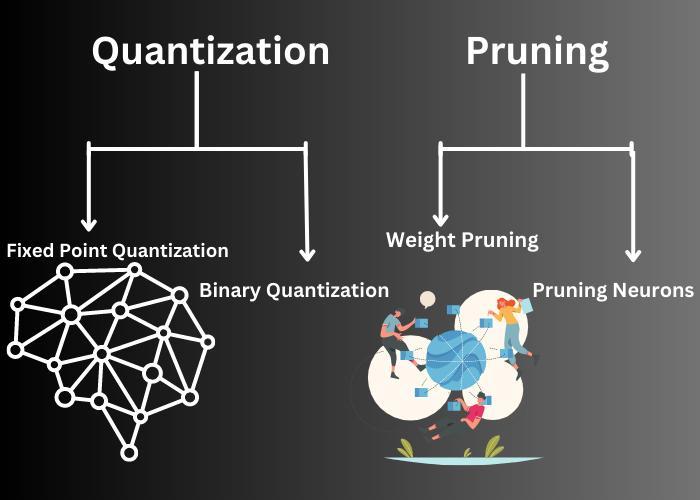

To address this challenge, researchers have devised techniques such as quantization and pruning, which optimize DNNs for efficiency with little or no loss in performance. This blog post explores these two techniques, quantization and pruning, which enable the development of efficient deep neural networks while maintaining accuracy.

Quantization

Quantization is a technique that reduces the precision of the numerical values in a DNN's parameters, which lowers memory usage and computational cost. In a standard DNN, parameters are typically represented as 32-bit floating-point numbers, which require substantial memory and processing power. Quantization represents these parameters with lower precision, such as 8-bit integers or even binary values, to achieve significant compression.
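To make the savings concrete, here is a rough back-of-the-envelope sketch in plain Python. The function name `model_size_bytes` and the 10-million-parameter model are assumptions chosen for illustration, and the calculation counts only raw parameter storage, not any metadata such as scales.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store num_params values at bits_per_param bits each."""
    total_bits = num_params * bits_per_param
    return (total_bits + 7) // 8  # round up to whole bytes


num_params = 10_000_000  # hypothetical model size, for illustration only

for bits in (32, 8, 1):
    size_mb = model_size_bytes(num_params, bits) / 1e6
    print(f"{bits:>2}-bit parameters: {size_mb:.2f} MB")
```

For this hypothetical model, the storage drops from 40 MB at 32 bits to 10 MB at 8 bits and 1.25 MB at 1 bit.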

Fixed-Point Quantization

Fixed-point quantization is one of the most common quantization methods. In this approach, each parameter is represented by a fixed number of bits, and the dynamic range of the values is scaled to fit. For example, a parameter stored in 8 bits instead of 32 takes a quarter of the memory, a fourfold reduction.

While fixed-point quantization leads to memory savings, it can introduce quantization errors that may impact the model's performance. To mitigate this, researchers often use dynamic range scaling and fine-tuning techniques to maintain the model's accuracy.
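The sketch below illustrates one simple variant of this idea: symmetric quantization with a single scale factor for the whole list of weights. It is a minimal example under stated assumptions, not a production scheme. The function names and the example weights are made up for illustration, and real frameworks often use per-channel scales or zero points instead.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers using one scale for the whole list."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    # Scale the dynamic range so the largest magnitude maps to qmax.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.003, 0.5, -0.44]  # made-up example weights

q, scale = quantize_symmetric(weights)
restored = dequantize(q, scale)

# Quantization error: rounding moves each value by at most half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("quantized:", q)
print("scale:", scale)
print("max error within half a step:", max_error <= scale / 2 + 1e-12)
```

Note how the small weight 0.003 collapses to 0 after quantization. This is the kind of error that fine-tuning is meant to compensate for.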

Binary Quantization

Binary quantization is an extreme form of quantization in which each parameter is represented by a single bit, taking the value +1 or -1. This significantly reduces memory requirements and computational complexity, but it makes preserving the model's accuracy challenging. Techniques like BinaryConnect and XNOR-Net have been proposed to address these issues.
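As a rough illustration, the sketch below binarizes a list of weights by sign and keeps a single scaling factor equal to the mean absolute weight, in the spirit of the scaling used in XNOR-Net. It also shows why the arithmetic becomes cheap: the dot product of two +1/-1 vectors can be computed from an XOR and a bit count. All function names and example values are assumptions for illustration, and the training procedures of BinaryConnect and XNOR-Net are not shown.

```python
def binarize(weights):
    """Return sign values (+1/-1) and a scale equal to the mean absolute weight."""
    signs = [1 if w >= 0 else -1 for w in weights]
    alpha = sum(abs(w) for w in weights) / len(weights)
    return signs, alpha


def pack_bits(signs):
    """Encode +1 as bit 1 and -1 as bit 0 inside a Python int."""
    bits = 0
    for i, s in enumerate(signs):
        if s == 1:
            bits |= 1 << i
    return bits


def binary_dot(bits_a, bits_b, n):
    """Dot product of two +1/-1 vectors of length n, given their packed bits."""
    differing = bin(bits_a ^ bits_b).count("1")  # positions where signs differ
    return n - 2 * differing  # agreements minus disagreements


weights = [0.4, -0.2, 0.1, -0.7]  # made-up example weights
inputs = [1, 1, -1, -1]           # already-binarized example inputs

signs, alpha = binarize(weights)
dot = binary_dot(pack_bits(signs), pack_bits(inputs), len(signs))

print("signs:", signs)
print("alpha:", alpha)
print("binary dot product:", dot)
print("scaled approximation:", alpha * dot)
```

Each weight now needs one bit of storage plus a shared scale, and the multiply-accumulate loop is replaced by bitwise operations.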