Introduction

Algorithms form the backbone of any coding interview. Thus it's very important to have a good grasp of this topic. Knowing the space and time complexities of each of the algorithms is as much important as knowing how to code them. But don't you worry about any of it. Ninjas are here for you, and today we will are going to discuss the time and space complexities of various algorithms.

Recommended topic about, kth largest element in an array and Euclid GCD Algorithm

Questions

Q1) What is the time complexity of the code given below?

#include<bits/stdc++.h>

using namespace std;

int i, j, k = 0;

while(i<=n){

j=2;

while(j<=n){

k = k + n / 2;

j = j * 2;

}

i++;

}

Answer: O(n * log(n))

Explanation: If we look closely, we can see that ‘j’ keeps doubling until it's less than or equal to ‘n’. The number of times which we can keep doubling a number until it's less than or equal to ‘n’ is log (n).

Let's look at a few examples.

‘j’ = 2, 4, 8, 16 for n = 16

Steps taken = log(16) = 4.

As a result, j will run for O(log n) steps.

For 'i', it takes n/2 iterations costing us O(n/2) time.

As a result, total time complexity is equal O(n/ 2 * log (n)) ~ O(n * log (n)).

Q2) Consider an array with a maximum capacity of 100 elements. In case one, the user adds one element to an array. In example 2, the user creates an array with 100 elements. What is the space complexity in both cases?

Answer: O(1), O(1)

Explanation:

To store one element, we need 100 memory spaces.

To store two elements, we need 100 memory spaces.

… as so on,

To store n element, we will still require 100 memory spaces.

Here, the memory is constant and independent from n. Thus the complexity is O(1) in both cases.

Q3) What is the worst-case time complexity of reporting all elements in the range [a, b] in a balanced binary search tree with n elements? Assume that there are k elements reported?

Answer: O(log (n) + k)

Explanation:

We must first check if a and b are present in BST for the range [a, b].

1. Checking if element 'a' is present in BST takes O (log n) time.

2. Checking if element 'b' is present in BST takes O (log n) time.

We must use in-order sorting from a to b to locate all the elements in the range [a, b].

In this method, every successor of a will be laid out until it reaches the predecessor of b, and all items between a and b will be reported.

In-order sorting will require O(k) time for ‘k’ elements.

As a result, O(log n) + O(log n) + O(k) = O(2 * logn + k) ~ O(logn + k) ~ O(logn + k).

Q4) What is the time complexity of the code snippet given below?

def f():

ans = 0

for i = 1 to n:

for j = i; j <= n; j += i:

ans += 1

print(ans)

Answer: O(n * log (n))

Explanation:

Here outer for loop (loop i) will run ‘n’ times.

when i=1, inner loop (loop j) will run n/1 times [for j = 1; j <= n; j += 1]

when i=2, inner loop will run n/2 times [for j = 2; j <= n; j += 2]

…upto i=n, inner loop will run n/n=1 time [for j = n; j <= n; j += n]

So, Total time= O(n+ n/2+ n/3+ …+ n/n)

=O(n(1+1/2 +1/3+…+1/n))

=O(n * logn)

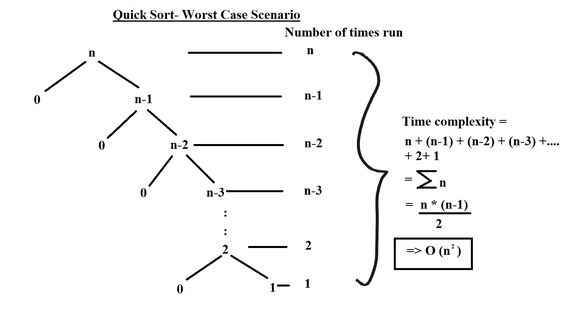

Q5) What is the worst-case time complexity of quicksort?

Answer: O(n * n)

Explanation: When we encounter the most unbalanced partitions imaginable, the original call takes n seconds, the recursive call on n - 1 element takes (n - 1) seconds, the recursive call on (n - 2) elements takes (n - 2) seconds, and so on. Quick Sort's worst-case time complexity would be O(n * n).

Q6) What is the space complexity of quicksort in all possible cases?

Answer: The recursion stack's space is used to determine the space complexity. In the worst-case scenario, the amount of space used will be O(n). The typical case space will be of the order of O. (log n) as in that case. It would be balanced. When the algorithm reaches its worst situation, when we need to perform n recursive calls to get a sorted list, the worst-case space complexity becomes O(n).

Q7) What is the time complexity of the code snippet given below?

def f()

ans = 0

for i = 1 to n:

for j = 1 to log(i):

ans += 1

print(ans)

Answer: O(n * log(n))

Explanation:

for ‘i’ = 1 - No of times the code will run is log(1)

for ‘i’ = 2 - No of times the code will run is - log(1) + log(2)

Similarly, for ‘n’ times - log(1) + log(2) + … log(n)

Hence the overall time complexity is O(n * log n)

Q8) What is the space complexity of the code snippet given below?

int arr = [];

for (int i = 0; i < n; ++i) {

for (int j = 0; j < n; ++j) {

arr[i * n + j](i + j);

}

}

Answer: O(n * n)

Explanation: We can see that the loop is running n * n times, and each time we are adding a new element in the array. Thus, the space complexity will be equal to O(n * n).

Q9) Below is the pseudo-code for a sorting algorithm, what is the time complexity of this algorithm?

SORT(A)

for i = 1 to n

key ← A [i]

j ← i – 1

while j > = 0 and A[j] > key

A[j+1] ← A[j]

j ← j – 1

End while

A[j+1] ← key

End for

Answer:

Best case - O(n)

Worst-case - O(n * n)

Explanation:

This is the code for insertion sort. Even though insertion sort is efficient, it will still perform the outer for loop if we feed it an already sorted array, needing n steps to sort an already sorted array of n elements, making its best case time complexity a linear function of n.

In an unsorted array, an element must be compared to all other elements, which means that every n element must be compared to all other n elements. As a result, it can be used for n x n, or n ^ 2 comparisons.

Q10) What is the time complexity of the below snippet?

int unknown(int n){

int i, j, k=0;

for (i=n/2; i<=n; i++)

for (j=2; j<=n; j=j*2)

k = k + n/2;

return (k);

}

Answer:

O(n * log(n))

Explanation:

The first loop will run at max n/2 times, whereas the nested loop will run for a total of log(n) times, thus the overall time complexity is O(n)* O(log(n)) ~ O(n * log(n)).

Check out this problem - Largest BST In Binary Tree