Introduction

Restaurant review analysis is an application of sentiment analysis, which draws on text analysis, computational linguistics, and biometrics to identify and extract subjective information.

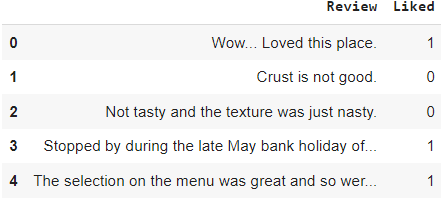

We will take reviews of a restaurant, written as short passages of text, and determine whether each customer is saying something good or something bad about the restaurant. I will use natural language processing for this. The task needs a large collection of reviews in a structured, labelled form, so I am downloading a data set from Kaggle.

Implementation

I am importing the data set, which is in TSV format. Each row holds a review and its category, separated by a tab character. The category is 0 or 1: zero signifies a bad review, and one represents a good review. First, I import the modules needed to work with NumPy arrays and data frames.

import numpy as np

import pandas as pd

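I will read the TSV file into a data frame. The read_csv function can load it directly when a tab is passed as the delimiter, so no conversion to CSV is needed. TSV stands for tab-separated values, while CSV stands for comma-separated values.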

dataset = pd.read_csv('Restaurant_Reviews.tsv',delimiter='\t')
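To make the file layout concrete, here is a minimal sketch that parses a few tab-separated lines with Python's standard csv module. The sample rows and the Label column name are made up for illustration and are not taken from the Kaggle file.

```python
import csv
import io

# Hypothetical sample in the same layout: review text, a tab, then 0 or 1.
sample_tsv = (
    "Review\tLabel\n"
    "The soup was wonderful, and so was the service.\t1\n"
    "Cold food and a long wait.\t0\n"
)

# delimiter="\t" splits on tabs, so commas inside a review stay in one field.
reader = csv.DictReader(io.StringIO(sample_tsv), delimiter="\t")
rows = [(row["Review"], int(row["Label"])) for row in reader]

for text, label in rows:
    print(label, text)
```

This is why reviews are usually shipped as TSV rather than CSV: review text often contains commas, but rarely contains tabs.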

I will clean the text because it contains many things that carry little sentiment, such as punctuation marks. Leaving them in would enlarge the vocabulary and decrease the algorithm's efficiency.

I will convert each word to lowercase and perform stemming to reduce it to its word stem. One reviewer may write a word in capital letters while another writes the same word in small letters. String comparison in Python is case-sensitive, so without lowercasing, these would be counted as different words.

# library to clean data

import re

# Natural Language Toolkit

import nltk

nltk.download('stopwords')

#to remove stopword

from nltk.corpus import stopwords

#for stemming purpose

from nltk.stem.porter import PorterStemmer

# initialise an empty list to collect the cleaned reviews

corpus = []

# create the PorterStemmer once; it reduces each word to its stem

ps = PorterStemmer()

# build the stop-word set once instead of on every iteration

stop_words = set(stopwords.words('english'))

for i in range(0, 1000):

    # column "Review", row i: replace every non-letter with a space

    review = re.sub('[^a-zA-Z]', ' ', dataset['Review'][i])

    # convert everything to lowercase

    review = review.lower()

    # split into a list of words (default delimiter is whitespace)

    review = review.split()

    # stem each word, skipping stop words

    review = [ps.stem(word) for word in review if word not in stop_words]

    # rejoin the words into a single string

    review = ' '.join(review)

    corpus.append(review)

Output

[nltk_data] Downloading package stopwords to /root/nltk_data...

[nltk_data] Package stopwords is already up-to-date!
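The effect of the cleaning loop is easier to see on a single review. The sketch below repeats the same steps using only the standard library. The sample sentence and the tiny STOP_WORDS set are stand-ins chosen for illustration (the real list comes from NLTK), and stemming is left out so that the example runs without NLTK installed.

```python
import re

# Hypothetical stand-in for NLTK's English stop-word list (much shorter).
STOP_WORDS = {"the", "was", "and", "is", "a", "it", "i", "this"}

def clean_review(text):
    """Keep letters only, lowercase, drop stop words, and rejoin."""
    letters_only = re.sub("[^a-zA-Z]", " ", text)
    words = letters_only.lower().split()
    kept = [word for word in words if word not in STOP_WORDS]
    return " ".join(kept)

print(clean_review("Wow... The food was AMAZING, and it is cheap!"))
# prints: wow food amazing cheap
```

In the full pipeline, a stemmer would then reduce each remaining word to its stem before the words are joined back together.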

I will perform tokenization to split the body of the text into sentences and words.

Then, I will build the bag of words as a sparse matrix, with one column for each distinct word that appears in the data set and no repeated terms.

#creating the bag of Words model

from sklearn.feature_extraction.text import CountVectorizer

# extract at most 1500 features

# max_features is a parameter to experiment with to get better results

cv = CountVectorizer(max_features = 1500)

# X contains the bag-of-words counts (independent variable)

X = cv.fit_transform(corpus).toarray()

# y contains answer if review is positive or negative

y = dataset.iloc[:,1].values
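As a rough picture of what the bag-of-words matrix holds, the following sketch builds one by hand with collections.Counter. The three cleaned reviews are made up, and this is a simplified illustration rather than how CountVectorizer is implemented; for instance, it ignores max_features.

```python
from collections import Counter

# Hypothetical cleaned reviews, similar in shape to the entries of corpus.
sample_corpus = ["love food love place", "food bad", "place good"]

# Vocabulary: every distinct word, sorted so the columns have a fixed order.
vocabulary = sorted({word for review in sample_corpus for word in review.split()})

# One row per review, one column per vocabulary word; each cell is a count.
matrix = []
for review in sample_corpus:
    counts = Counter(review.split())
    matrix.append([counts[word] for word in vocabulary])

print(vocabulary)  # prints: ['bad', 'food', 'good', 'love', 'place']
for row in matrix:
    print(row)
```

Each row is the numeric representation of one review, which is the form a classifier can train on.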

I will split the data into training and test sets. For this, we need the train_test_split function from sklearn.model_selection. Common splits are 70-30, 80-20, 85-15, or 75-25. Here I am using a 75-25 split. X is the independent variable, which holds the word counts, and y is the label: 1 for a positive review and 0 for a negative one.

#Splitting the dataset into the Training and Test set

from sklearn.model_selection import train_test_split

#experiment with "test_size" to get a better result

x_train, x_test, y_train, y_test = train_test_split(X, y, test_size = 0.25)
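Conceptually, a 75-25 split shuffles the rows and cuts the list at the three-quarter mark. The sketch below shows that idea in plain Python on stand-in data. split_rows is a hypothetical helper written for this example, not the scikit-learn function, and the fixed seed is only there to make the shuffle repeatable.

```python
import random

def split_rows(features, labels, test_size=0.25, seed=0):
    """Shuffle row indices, then cut them into train and test parts."""
    indices = list(range(len(features)))
    random.Random(seed).shuffle(indices)
    n_test = int(round(len(indices) * test_size))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(rows, idx):
        return [rows[i] for i in idx]

    return (pick(features, train_idx), pick(features, test_idx),
            pick(labels, train_idx), pick(labels, test_idx))

# Stand-in data: 1000 rows, the same number the cleaning loop processes.
features = [[i] for i in range(1000)]
labels = [i % 2 for i in range(1000)]

x_train, x_test, y_train, y_test = split_rows(features, labels)
print(len(x_train), len(x_test))  # prints: 750 250
```

The real train_test_split also accepts a random_state argument, which makes the split reproducible between runs.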

I will fit a random forest classifier, an ensemble model made of many decision trees.

#Fitting random forest classifier to the training set

from sklearn.ensemble import RandomForestClassifier

# n_estimators is the number of trees; experiment with it to get better results

model = RandomForestClassifier(n_estimators=501,criterion='entropy')

model.fit(x_train,y_train)
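The criterion='entropy' argument tells each tree to choose its splits by information gain. The sketch below computes Shannon entropy and the gain of one candidate split on made-up labels. entropy and information_gain are illustrative helpers written for this example, not scikit-learn functions.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())

def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    weighted = (len(left) * entropy(left) + len(right) * entropy(right)) / len(parent)
    return entropy(parent) - weighted

# Made-up labels: a node with four positive and four negative reviews.
parent = [1, 1, 1, 1, 0, 0, 0, 0]
# A candidate split, for example on whether a given word appears in the review.
left, right = [1, 1, 1, 0], [1, 0, 0, 0]

print(entropy(parent))                                  # prints: 1.0
print(round(information_gain(parent, left, right), 3))  # prints: 0.189
```

A split with higher gain separates good and bad reviews more cleanly, so the tree prefers it.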

Let's predict the final output using the predict() method.

y_pred = model.predict(x_test)

print(y_pred)

acc = round(model.score(x_test,y_test)*100,2)

print(str(acc)+'%')

Output

94.3%
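The score() method reports accuracy, the share of test reviews whose predicted label matches the true one. To show what that number summarises, this sketch computes accuracy and a confusion matrix by hand on made-up labels. The values below are illustrative and are not the model's actual predictions.

```python
# Made-up true labels and predictions for eight test reviews.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_hat = [1, 0, 0, 1, 0, 1, 1, 0]

# Accuracy: fraction of positions where prediction and truth agree.
correct = sum(t == p for t, p in zip(y_true, y_hat))
accuracy = correct / len(y_true)

# Confusion matrix: rows are true labels (0, 1), columns are predictions (0, 1).
confusion = [[0, 0], [0, 0]]
for t, p in zip(y_true, y_hat):
    confusion[t][p] += 1

print(f"{accuracy * 100:.2f}%")  # prints: 75.00%
print(confusion)                 # prints: [[3, 1], [1, 3]]
```

The off-diagonal cells show which kind of mistake the classifier makes, something a single accuracy figure hides.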