Merge Sort

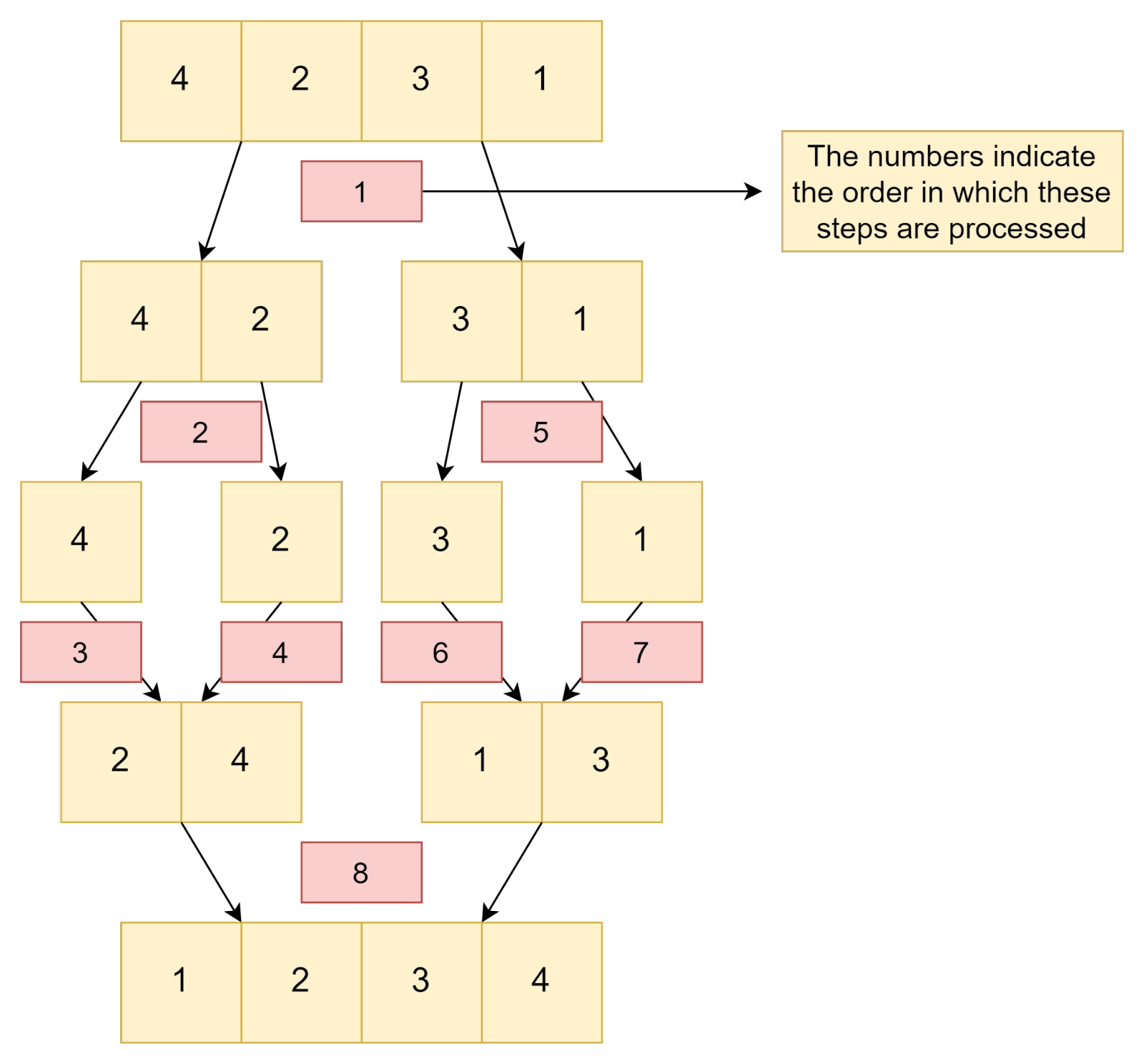

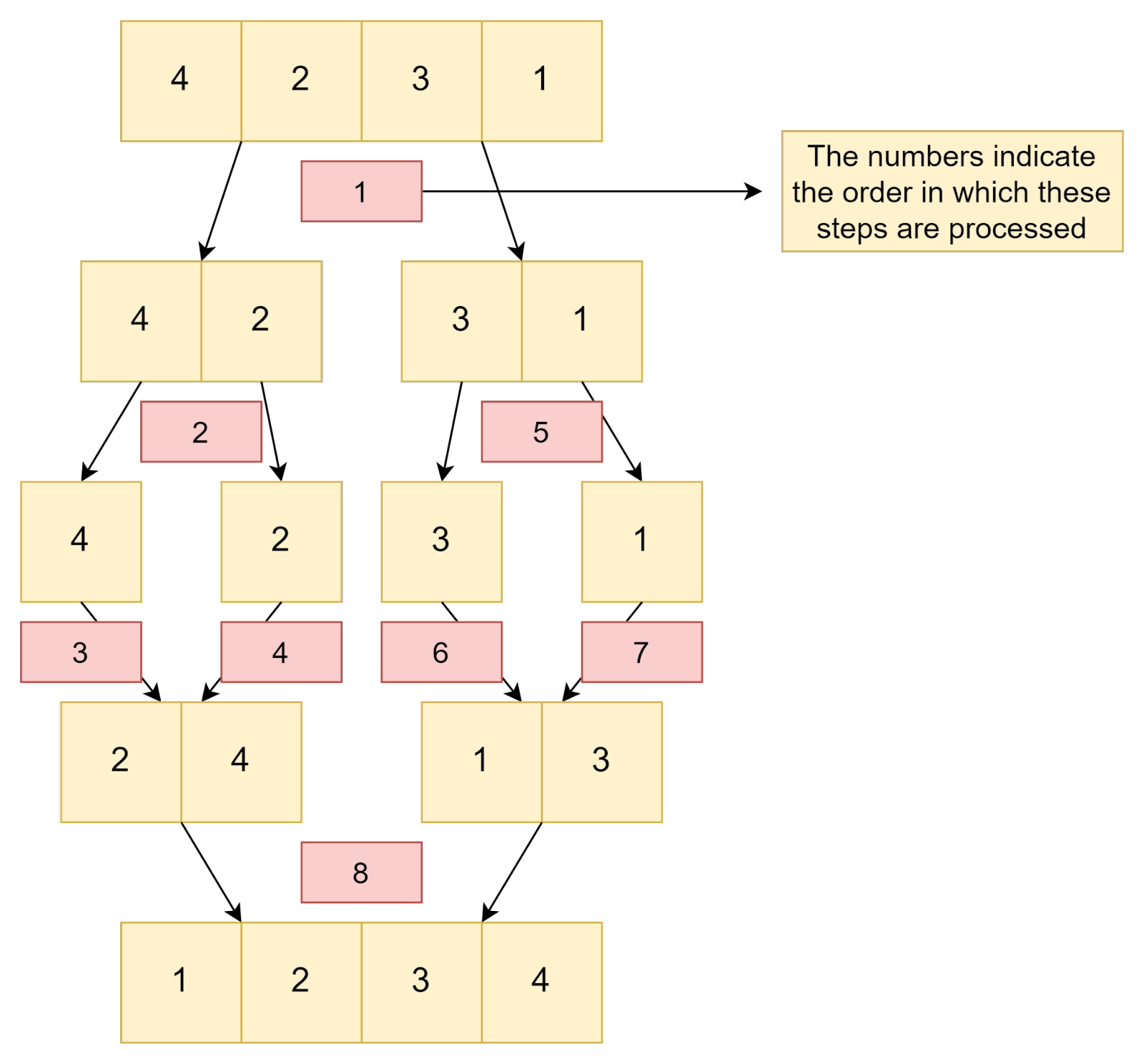

Merge Sort is a sorting algorithm based on the approach of divide and conquer. It breaks the array into two smaller halves of equal size, and then it merges both of the sorted halves. The merge function merges both the array here, assuming that both the halves are sorted.

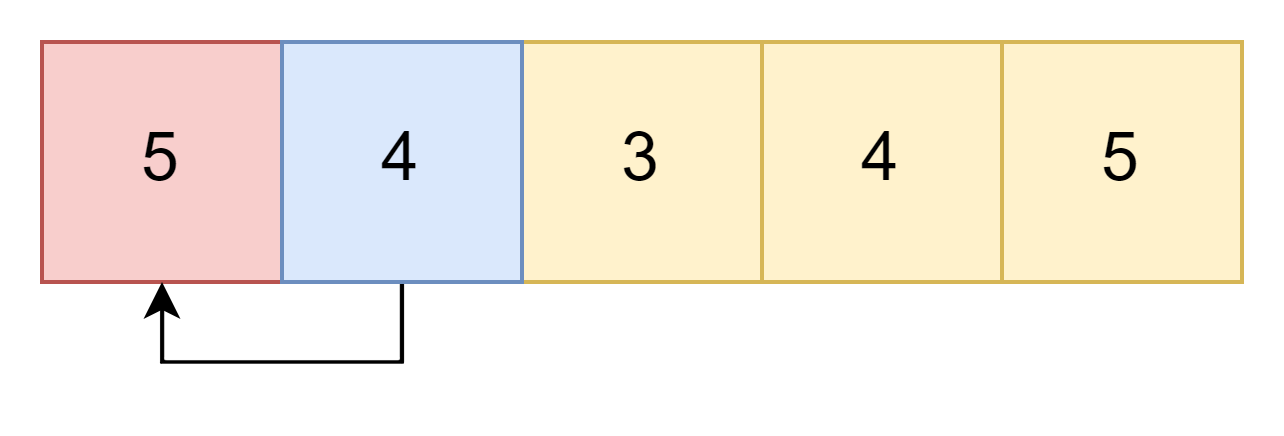

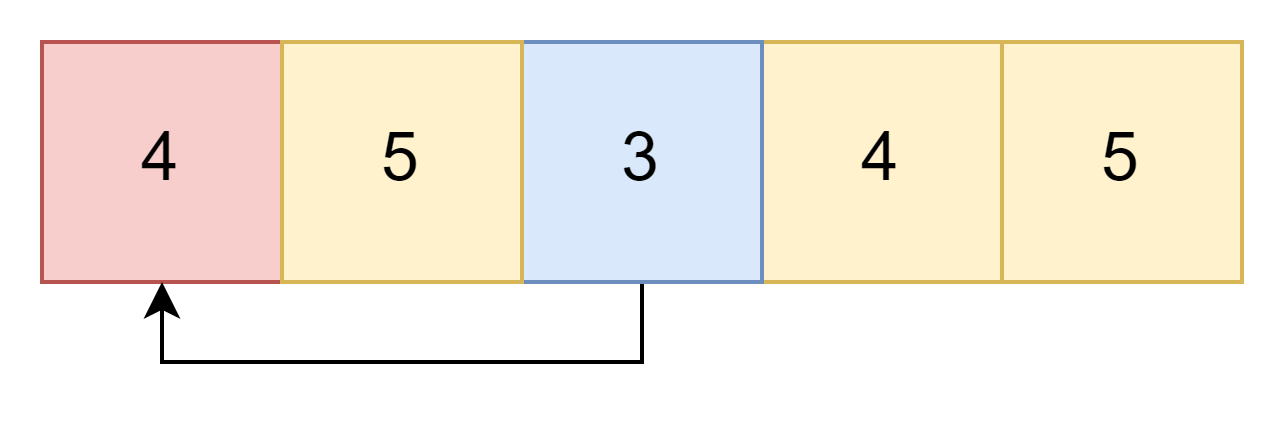

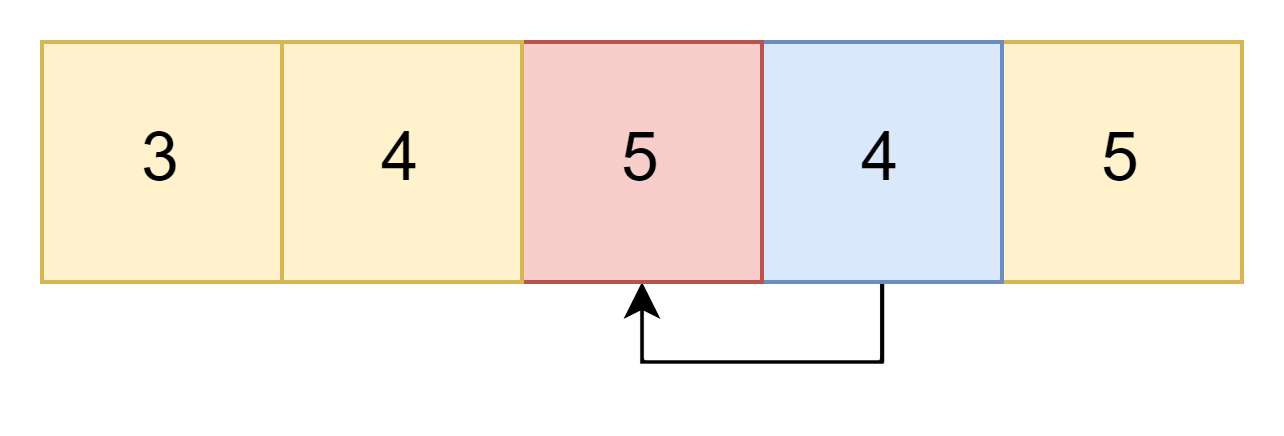

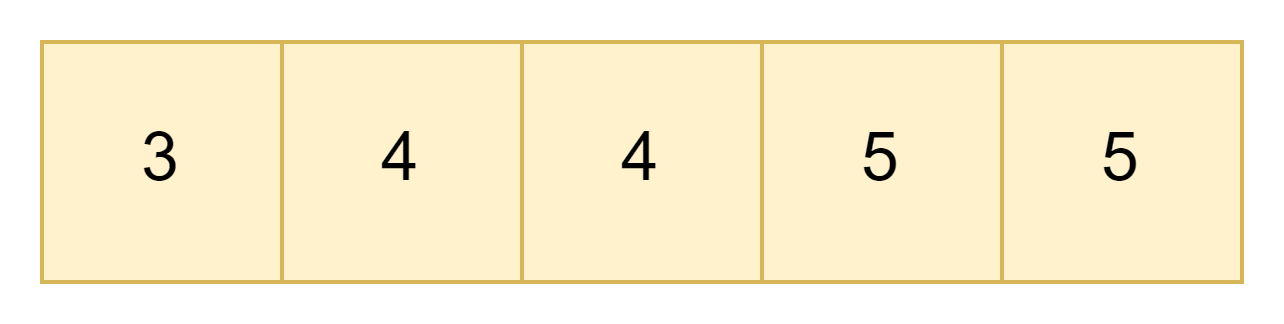

For example, if we want to sort this array:

You can refer to this blog for a clear understanding of Merge Sort.

Pseudocode for MergeSort

mergeSort(arr[], l, r)

If r > l

Finding the middle point to divide the array into two halves:

middle m = l + (r-l)/2

Calling mergeSort for the first half:

mergeSort(arr, l, m)

Calling mergeSort for the second half:

mergeSort(arr, m+1, r)

Now, merge the two halves sorted in the above steps:

merge(arr, l, m, r)

Algorithmic Complexities

Time Complexity: O(N*log(N))

The time complexity of merge sort for worst case, best case, and the average case is O(N*log(N)).

Space Complexity: O(N)

Advantages

- The division of the main problem into sub-problems has no major cost.

- Time complexity is better than many sorting algorithms.

Combined Algorithm

We can combine the advantages of both the sorting algorithms into a single algorithm and the time complexity of the combined algorithm would be

O([N + [K(log(N/K)]]).

Now let’s see how we got the time complexity of this combined algorithm.

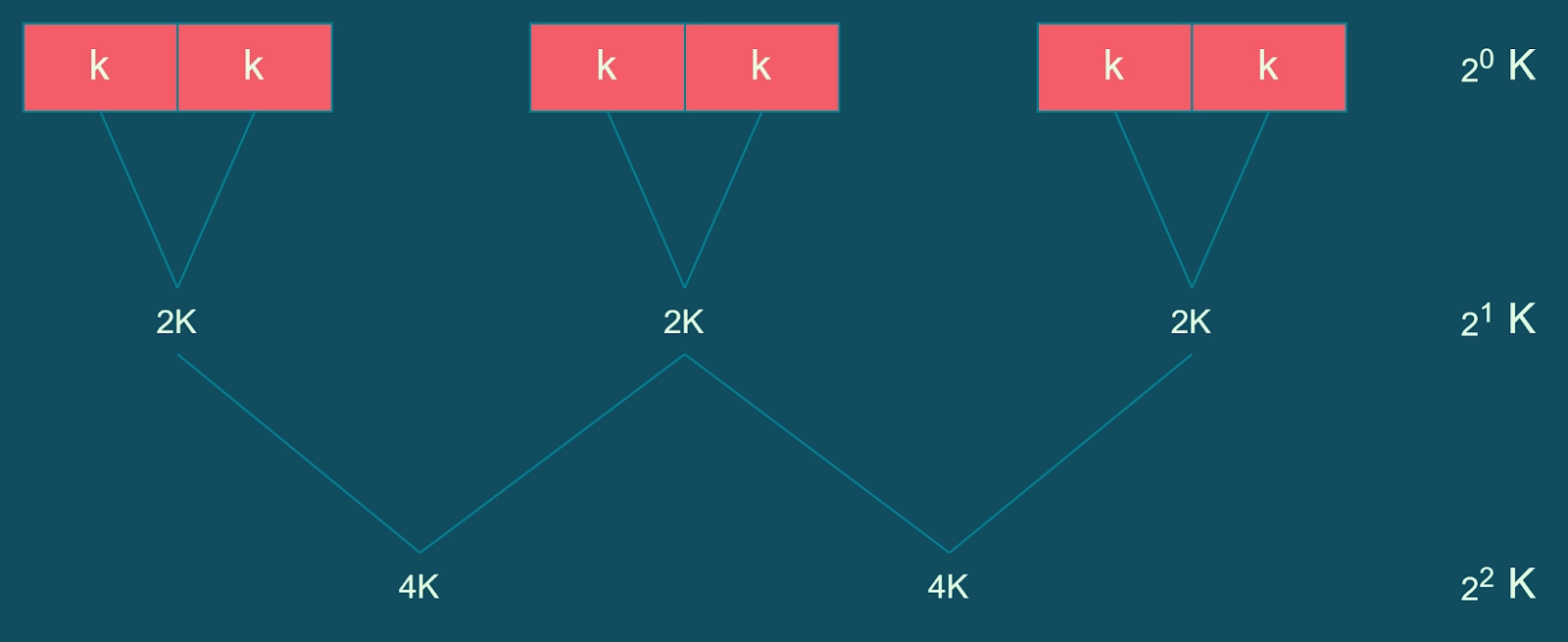

1. Division

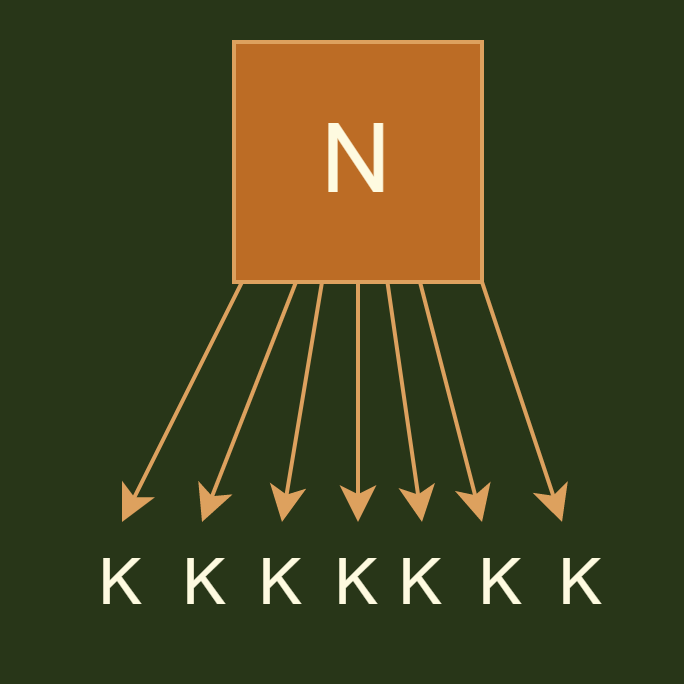

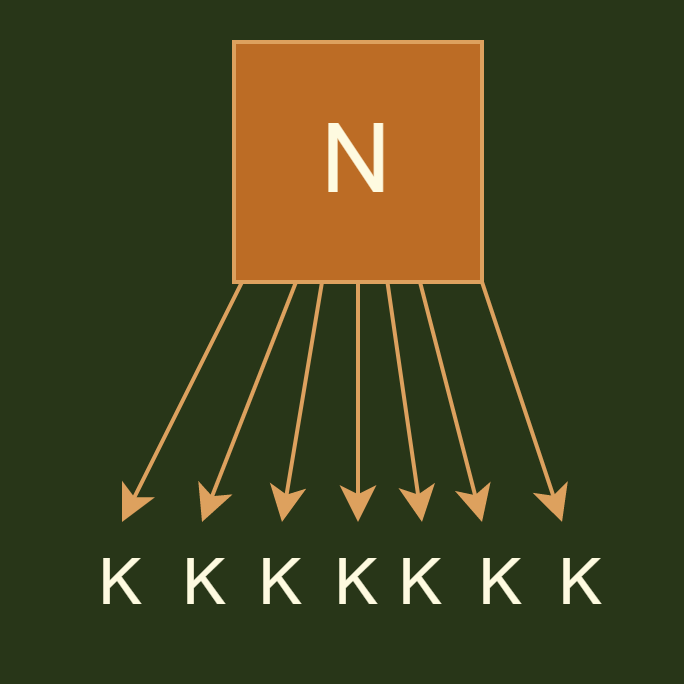

Firstly we will divide all the N elements into (N/K) groups of size K.

2. Sorting

We will perform an insertion sort for each subarray of size K to sort this subarray.

The total cost of insertion sort is

O(K) for the best case and O(K*K) for worst-case since there are (N/K) blocks of size K therefore, the best case would be (N/K) * K which would be equal to O(N), and for the worst-case O(N/K) * (K*K) == O(N*K)

3. Merging

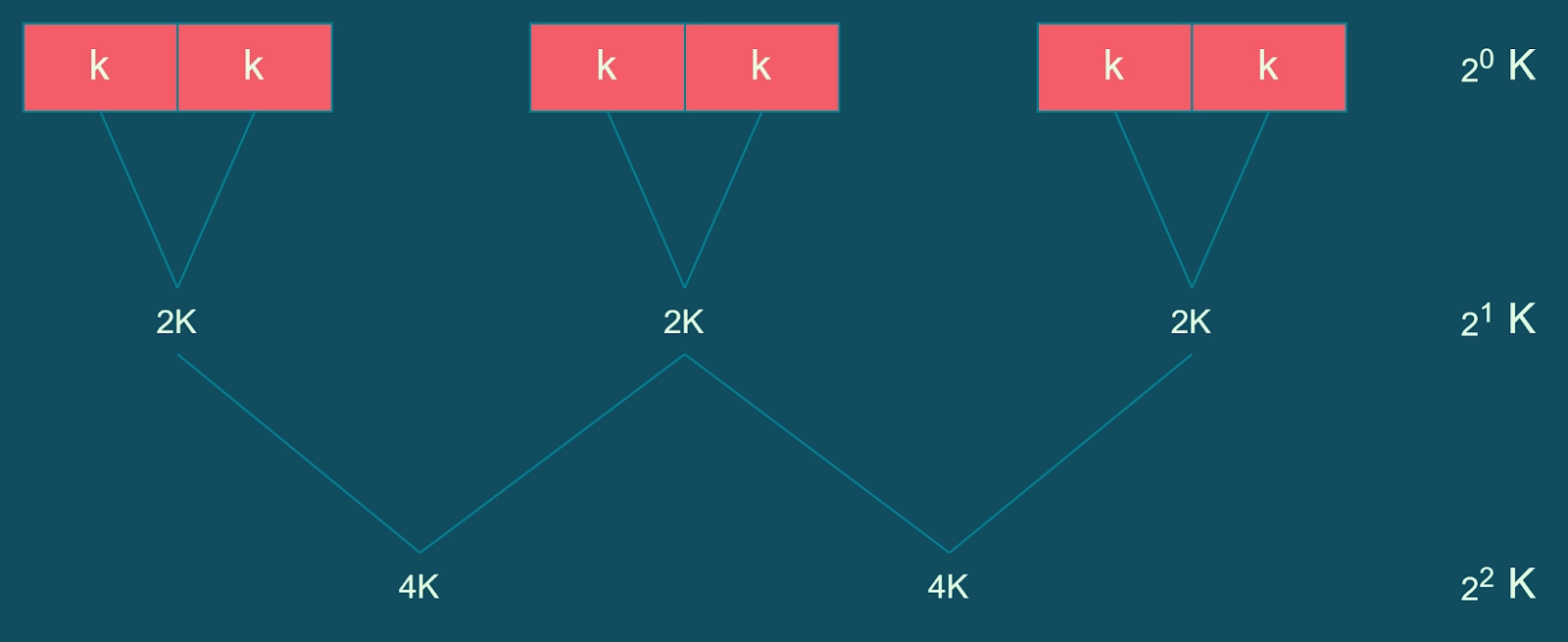

After applying insertion sort on the (N/K) subarrays of size K, we will now merge these groups using the merge sort technique.

Algorithmic Complexities

Time Complexity:

Suppose we do i iterations of merge sort:

(2^i) * K = N => (2^i) = N/K => i*(log(2)) = log(N/K) => i = log(N/K)

Time required to merge = O(N*log(N/K))

Therefore, the total time complexity of the combined algorithm is:

Best Case: N+N*log(N/K)

Worst Case: N*K + N*log(N/K)

Space Complexity: O(N)

If K = 1, the above algorithm will work as a merge sort, which is better in time complexity.

If K = N, the above algorithm will work as an insertion sort, better in space complexity.

Read More - Time Complexity of Sorting Algorithms and Euclid GCD Algorithm

FAQs

-

What is the space complexity of an algorithm?

Space complexity can be referred to as the extra space used by the algorithm to solve a particular problem.

-

Name any five sorting algorithms?

Five sorting algorithms are merge sort, bubble sort, insertion sort, quick sort, radix sort.

-

Name the sorting algorithm which has the most optimized time complexity?

Merge Sort is the most optimized sorting algorithm. Its time complexity is O(N*log(N)) , the average time complexity of quick sort is O(n*logn) but in worst case its time complexity becomes O(n^2).

Key takeaways

This article discussed sorting by combining Insertion Sort and Merge Sort algorithms. We have discussed insertion sort and mergeSort, and we have also discussed the time and space complexities of the merge sort, insertion sort. We have also discussed their combined sorting algorithm's time and space complexity.

Recommended Problem - Merge K Sorted Arrays

Until then, Keep Learning and Keep Coding.