Introduction

Alongside supervised and unsupervised learning, reinforcement learning is a third machine learning paradigm. Its goal is to train an agent to take the actions that maximize its cumulative reward. The agent learns this through trial and error: it acts, observes the resulting reward, and adjusts its behavior.

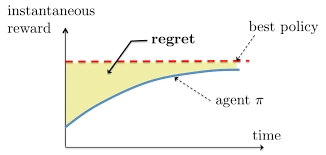

To do this, the agent has to find the right balance between exploring actions it has not tried enough and exploiting the actions it already knows to be rewarding.

The Exploration-Exploitation Tradeoff

- Exploitation is when the agent chooses the option it currently believes to be best, based on the success rates it has observed so far.

- Exploration is when the agent tries options whose outcomes it is still unsure about, in order to improve its estimates and possibly earn larger rewards.
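The two behaviors in the list above can be sketched in a few lines of Python. This is only an illustration: the option names and the success rates in `estimated_success` are made-up values, and `exploit` and `explore` are hypothetical helper names, not part of any library.

```python
import random

# Hypothetical success rates the agent has estimated so far for three options.
estimated_success = {"A": 0.62, "B": 0.48, "C": 0.55}

def exploit(estimates):
    """Pick the option with the highest estimated success rate."""
    return max(estimates, key=estimates.get)

def explore(estimates, rng=random):
    """Pick any option uniformly at random, ignoring the estimates."""
    return rng.choice(list(estimates))

print(exploit(estimated_success))  # prints "A", the current best estimate
print(explore(estimated_success))  # prints a randomly chosen option
```

Exploiting always returns the same option as long as the estimates do not change, while exploring can return any of them, which is how the agent gets a chance to correct a wrong estimate.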

A dilemma and a decision are two sides of the same situation. Take the example of two friends buying shares. One friend gets lucky and triples his investment, while the other makes no profit. At this point the second friend gets greedy and thinks he might get just as lucky if he invested in the same company as his friend.


So he starts investing in the same company as his friend. This action is called the greedy action, and the policy of always choosing it is called the greedy policy.

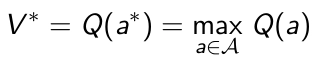

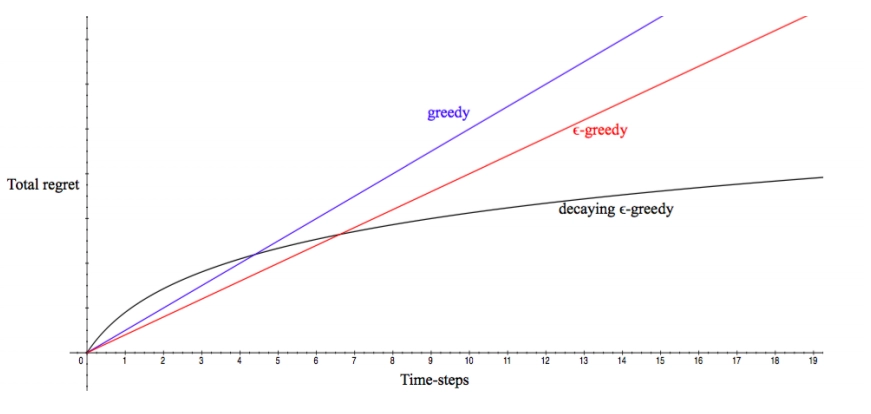

However, the share market is quite unpredictable: he cannot know whether the company's share price will rise, fall, or stay the same, so the greedy policy can fail. Similarly, in reinforcement learning the agent has only a partial understanding of future states and rewards, so it faces a dilemma: explore unknown actions, or exploit its limited knowledge to collect rewards. It cannot do both in the same step. Therefore, we apply the Epsilon Greedy policy to balance the tradeoff between exploration and exploitation: with a small probability epsilon the agent explores by picking a random action, and otherwise it exploits by picking the greedy action.
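As a rough illustration, the Epsilon Greedy policy can be sketched on a small multi-armed bandit: with probability `epsilon` the agent picks a random action, and otherwise it picks the action with the highest estimated value. Everything specific here is an assumption made for the example: the function names `epsilon_greedy` and `run_bandit`, the three reward probabilities, `epsilon=0.1`, and the sample-average update of the estimates.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """Return an action index: random with probability epsilon, else greedy."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: any action
    # exploit: index of the highest estimate (first one wins on ties)
    return max(range(len(q_values)), key=q_values.__getitem__)

def run_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    """Simulate a Bernoulli bandit and learn value estimates online."""
    rng = random.Random(seed)
    q = [0.0] * len(true_means)     # estimated value of each action
    counts = [0] * len(true_means)  # how often each action was taken
    for _ in range(steps):
        action = epsilon_greedy(q, epsilon, rng)
        # Assumed reward model: 1 with probability true_means[action], else 0.
        reward = 1.0 if rng.random() < true_means[action] else 0.0
        counts[action] += 1
        # Incremental sample average of the rewards seen for this action.
        q[action] += (reward - q[action]) / counts[action]
    return q, counts

q, counts = run_bandit([0.2, 0.5, 0.8])
print("estimates:", [round(v, 2) for v in q])
print("pull counts:", counts)
```

Setting `epsilon=0` reduces this to the purely greedy policy from the share-market example, and `epsilon=1` makes the agent explore on every step. Values in between trade a little immediate reward for better estimates.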