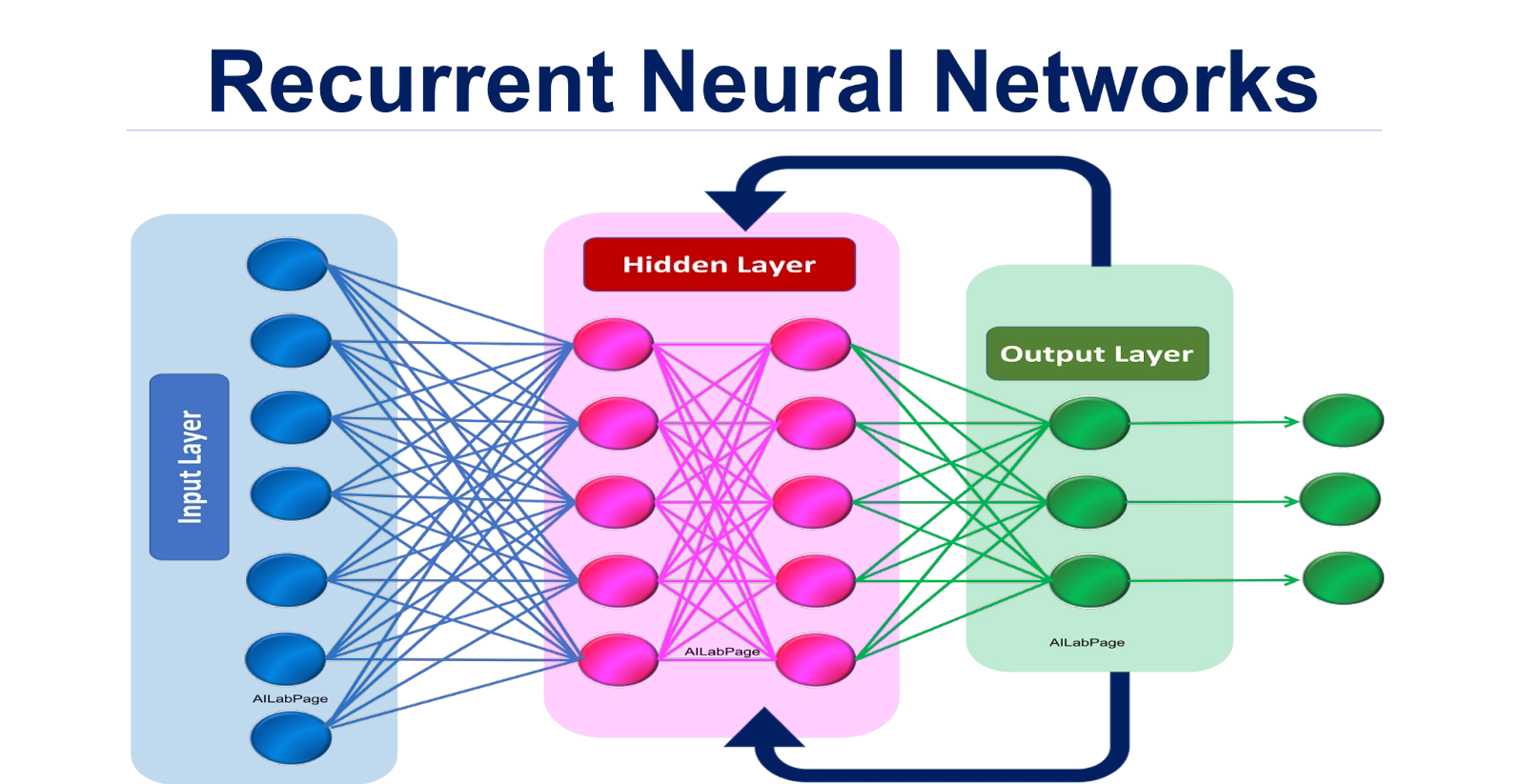

Types of RNN cells

There are two widely used types of gated RNN cells: LSTMs and GRUs. Both have gates, whose values lie between 0 and 1 for each input. The purpose of these gates is to retain some inputs and forget others, so these cells can both remember information from the past and let it go when required, which allows them to handle sequences better.

Now, let us look at the two most common cell types.

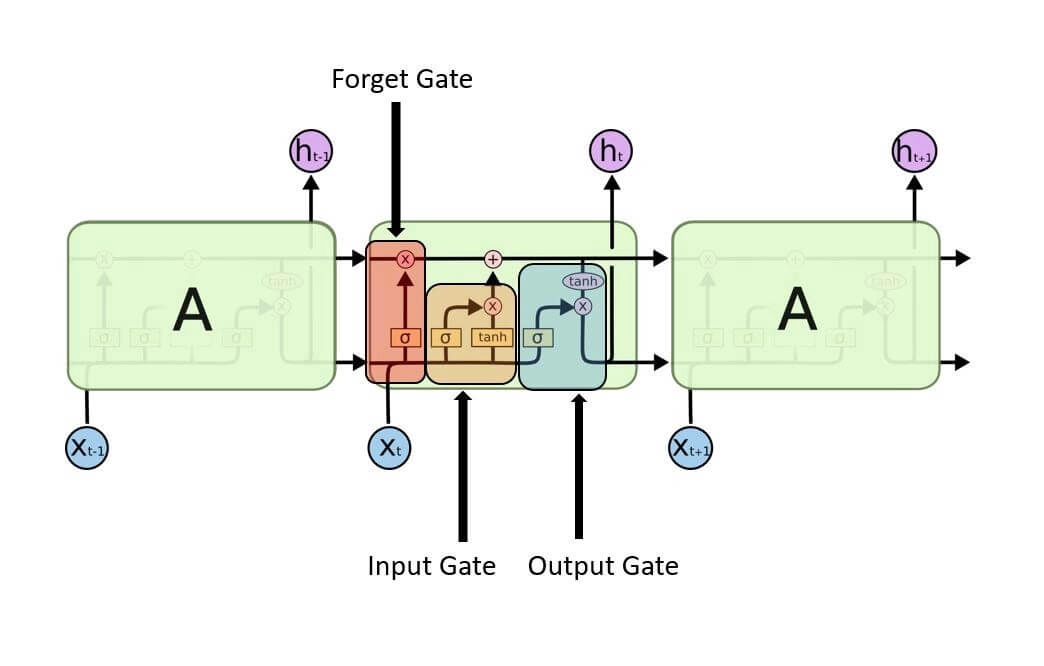

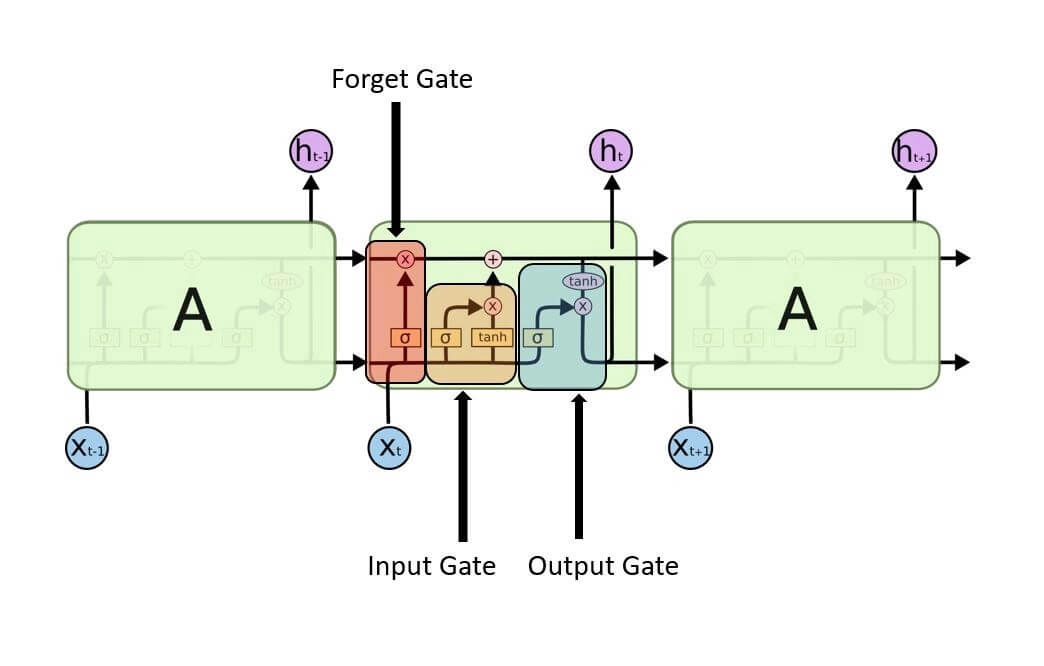

LSTM

LSTM is short for Long Short-Term Memory. It is an artificial recurrent neural network architecture used in the field of deep learning. Unlike standard feed-forward neural networks, an LSTM has feedback connections. It can process not only single data points but also entire data sequences, such as speech or video. LSTM cells have a more complicated structure than a plain recurrent neuron, which allows them to better regulate what to learn and what to forget from the different input sources.

Working of LSTM

An LSTM cell consists of three gates: the input gate, the output gate, and the forget gate.


Input gate

The input gate decides which values from the input should be used to modify the memory. A sigmoid function outputs a value between 0 and 1 for each entry, deciding how much of it to let through. A tanh function then weights the values passed, assigning each a level of importance ranging from -1 to 1.
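As a rough illustration of the input gate, the following minimal Python sketch applies a sigmoid gate and a tanh weighting element-wise. The function name `input_gate_update` and the pre-activation numbers are assumptions made for this example; in a trained cell they would come from learned weights applied to the previous state and the current input.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def input_gate_update(gate_pre, cand_pre):
    """Return the element-wise product of the gate and the weighted values.

    gate_pre and cand_pre are hypothetical pre-activations (plain lists).
    """
    i_t = [sigmoid(z) for z in gate_pre]    # near 0: block, near 1: let through
    g_t = [math.tanh(z) for z in cand_pre]  # importance weights in (-1, 1)
    return [i * g for i, g in zip(i_t, g_t)]


# First entry is let through, second is blocked, third is half open.
update = input_gate_update([10.0, -10.0, 0.0], [2.0, 2.0, -2.0])
print(update)
```

The blocked entry ends up close to zero no matter how large its tanh value is, which is how the gate keeps unwanted input out of the memory.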

Forget Gate

The forget gate decides which details should be discarded from the block. It is controlled by a sigmoid function: it looks at the previous hidden state (h_t-1) and the current input (x_t) and outputs a number between 0 (omit this) and 1 (keep this) for each number in the cell state C_t-1.
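The forget gate can be sketched in a few lines of Python. This is a toy example under stated assumptions: the weight matrix `W_f`, bias `b_f`, and all numbers are made up, and the layout (one row of weights per cell-state entry, applied to the concatenation of h_t-1 and x_t) is one common convention rather than the only one.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forget_gate(W_f, b_f, h_prev, x_t):
    """Compute f_t = sigmoid(W_f . [h_t-1, x_t] + b_f), one value per cell entry."""
    concat = h_prev + x_t  # list concatenation of previous state and current input
    return [
        sigmoid(sum(w * v for w, v in zip(row, concat)) + b)
        for row, b in zip(W_f, b_f)
    ]


# Toy sizes: hidden size 2, input size 1. All numbers are made up for illustration.
h_prev = [0.5, -0.5]
x_t = [1.0]
W_f = [[0.0, 0.0, 8.0],    # row 1 reacts positively to x_t -> keep
       [0.0, 0.0, -8.0]]   # row 2 reacts negatively to x_t -> omit
b_f = [0.0, 0.0]

f_t = forget_gate(W_f, b_f, h_prev, x_t)
c_prev = [3.0, 3.0]
c_kept = [f * c for f, c in zip(f_t, c_prev)]  # what survives of C_t-1
print(f_t, c_kept)
```

Here the first cell-state entry is kept almost entirely, while the second is almost completely erased.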

Output gate

The output is decided using the input and the memory of the block. A sigmoid function determines which values to let through, with outputs between 0 and 1. A tanh function applied to the memory weights the values, assigning each a level of importance ranging from -1 to 1, and the result is multiplied by the sigmoid output.
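Putting the three gates together, one LSTM time step might look like the sketch below. It follows the commonly used formulation (the new cell state is the forgotten old state plus the gated candidate, and the output is the output gate times tanh of the cell state); the parameter dictionary, its key names, and the all-zero weights are assumptions chosen so the result is easy to check by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(p, h_prev, c_prev, x_t):
    """One LSTM time step; p is a dict of hypothetical weights and biases."""
    z = h_prev + x_t  # concatenate previous hidden state and current input
    f = [sigmoid(a) for a in affine(p["W_f"], p["b_f"], z)]    # forget gate
    i = [sigmoid(a) for a in affine(p["W_i"], p["b_i"], z)]    # input gate
    g = [math.tanh(a) for a in affine(p["W_g"], p["b_g"], z)]  # candidate values
    o = [sigmoid(a) for a in affine(p["W_o"], p["b_o"], z)]    # output gate
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]       # gated output
    return h_t, c_t


# Toy parameters: hidden size 2, input size 1, all weights zero,
# so every gate is exactly 0.5 and the candidate is 0.
zeros_W = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
zeros_b = [0.0, 0.0]
params = {}
for name in ("f", "i", "g", "o"):
    params["W_" + name] = zeros_W
    params["b_" + name] = zeros_b

h_t, c_t = lstm_step(params, h_prev=[0.0, 0.0], c_prev=[1.0, -2.0], x_t=[0.3])
print(h_t, c_t)
```

With every gate at 0.5, the cell state is simply halved and the output is half of its tanh, which makes the role of each multiplication visible.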

GRU

The GRU (Gated Recurrent Unit) is a newer variant of the RNN cell and is similar to the LSTM. A GRU has no separate forget gate; instead, forgetting is handled by its update gate. It consists of only two gates: the update gate and the reset gate.


Update gate

The update gate plays a role similar to the input gate and forget gate of an LSTM combined. It decides what information to throw away and what new information to store.

Reset gate

The reset gate is supposed to decide how much past information must be forgotten.
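The two GRU gates can be sketched as a single time step in Python. This is a minimal sketch, not a reference implementation: the parameter names are hypothetical, and it assumes the convention in which an update gate near 1 overwrites the old state with the candidate (some references swap the roles of z and 1 - z).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def gru_step(p, h_prev, x_t):
    """One GRU time step; p is a dict of hypothetical weights and biases."""
    concat = h_prev + x_t
    z = [sigmoid(a) for a in affine(p["W_z"], p["b_z"], concat)]  # update gate
    r = [sigmoid(a) for a in affine(p["W_r"], p["b_r"], concat)]  # reset gate
    # The reset gate scales how much of the past enters the candidate state.
    reset_h = [rk * hk for rk, hk in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine(p["W_h"], p["b_h"], reset_h + x_t)]
    # The update gate blends the old state with the candidate.
    return [(1.0 - zk) * hk + zk * ck for zk, hk, ck in zip(z, h_prev, h_cand)]


# Toy setup: hidden size 2, input size 1, zero weights, so the candidate is 0.
zeros_W = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
base = {"W_z": zeros_W, "W_r": zeros_W, "W_h": zeros_W,
        "b_r": [0.0, 0.0], "b_h": [0.0, 0.0]}

keep = gru_step(dict(base, b_z=[-20.0, -20.0]), [1.0, -1.0], [0.3])     # z near 0
overwrite = gru_step(dict(base, b_z=[20.0, 20.0]), [1.0, -1.0], [0.3])  # z near 1
print(keep, overwrite)
```

When the update gate is nearly closed, the old state passes through almost unchanged; when it is nearly open, the state is replaced by the candidate.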

Applications

- Searching the entire parametric space: This approach begins with all possible connections and then prunes the redundant ones, leaving only the important ones. Since starting with ‘all’ possible links is computationally a nightmare, the actual search space in these methods is usually limited.


- Growing a cell one node at a time: This method relies on tactics used to build decision trees. After every iteration, a new node is added at the top of the graph. The tree grows from the output and ends at leaf nodes once both x_t and h_t-1 (the inputs) are reached.


- Genetic Algorithms: RNN cell architectures that are the star performers in the current generation are cross-bred to produce better cell architectures in the next generation.


Frequently Asked Questions

- What does an RNN give as output?

The output of an RNN layer typically contains a single vector per sample (unless it is configured to return the full sequence). This vector is the output of the RNN cell at the last timestep and contains information about the entire input sequence.

- What is a fully connected layer in an RNN?

A fully connected layer is the layer that maps the output of the recurrent layer (for example, an LSTM layer) to the desired output size.

- What is the activation function in an RNN?

The most commonly used activation functions in RNN modules are sigmoid, tanh, and ReLU.
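To make the first two answers concrete, here is a small Python sketch of a plain tanh RNN that returns only its last hidden state, followed by a fully connected layer that maps that state to the desired output size. The function names, weights, and toy batch are all made up for illustration; real layers learn their weights during training.

```python
import math


def rnn_last_state(sequence, W_x, W_h, b):
    """Run a plain tanh RNN over a sequence and return the last hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = [
            math.tanh(
                sum(w * x for w, x in zip(W_x[k], x_t))
                + sum(w * hp for w, hp in zip(W_h[k], h))
                + b[k]
            )
            for k in range(len(b))
        ]
    return h


def fully_connected(W, b, v):
    """Map a vector v to the desired output size (one row of W per output)."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


# Made-up weights: input size 1, hidden size 2, output size 1.
W_x = [[0.5], [-0.5]]
W_h = [[0.1, 0.0], [0.0, 0.1]]
b = [0.0, 0.0]
W_out = [[1.0, -1.0]]
b_out = [0.0]

batch = [
    [[1.0], [0.5], [-1.0]],         # sample 1: three timesteps
    [[0.2], [0.4], [0.6], [0.8]],   # sample 2: four timesteps
]
last_states = [rnn_last_state(seq, W_x, W_h, b) for seq in batch]
predictions = [fully_connected(W_out, b_out, h) for h in last_states]
print(last_states)
print(predictions)
```

Regardless of how many timesteps a sample has, the recurrent part yields one vector of hidden size 2 per sample, and the fully connected layer reduces it to a single output value.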

Key Takeaways

RNNs are well suited to processing sequence data for predictions but suffer from short-term memory. LSTMs and GRUs were created to mitigate short-term memory using mechanisms called gates. Gates are neural networks that regulate the flow of information through the sequence chain. LSTMs and GRUs are used in deep learning applications such as speech recognition, speech synthesis, and natural language understanding.