Forward Propagation

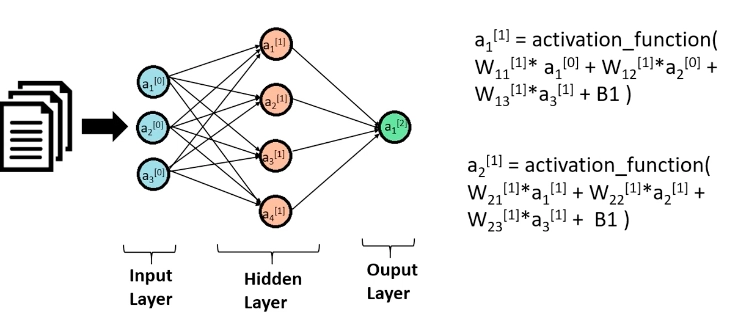

So, what is Forward Propagation? As discussed earlier, it's the first part of one training iteration. Data flows from the input layer through each subsequent layer, and the value of every neuron is computed along the way. This process is known as forward propagation.


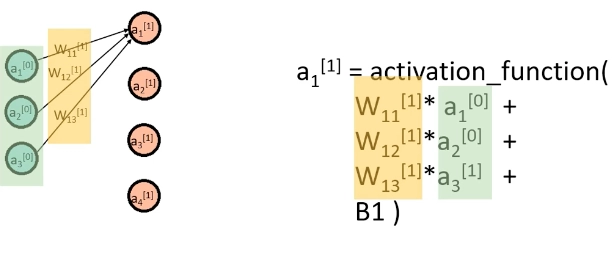

Let's begin with the notation. The number in square brackets is the layer index, with 0 for the input layer:

a1[0] denotes the first neuron of the input layer

a2[0] denotes the second neuron of the input layer and so on…

a1[1] denotes the first neuron of the hidden layer

a2[1] denotes the second neuron of the hidden layer and so on…

Lastly, a1[2] is the first and only neuron in the output layer. In general, the output layer can contain more than one neuron.


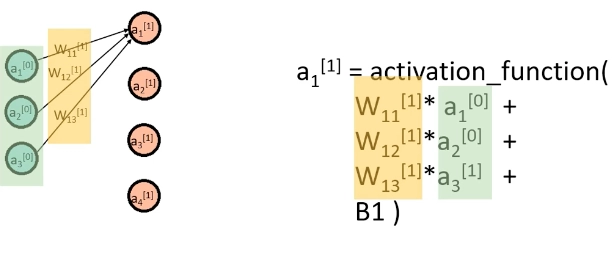

Here, each neuron in the input layer is connected to every neuron in the next layer. A layer like this is called fully connected.

The weight on the connection from one neuron to another is denoted by w.

For example, the weight between the second neuron of the first layer (a2[0]) and the first neuron of the second layer (a1[1]) is denoted by w12[1]. The first subscript indexes the receiving neuron, the second indexes the sending neuron, and [1] is the layer the weight feeds into. Since every neuron in the first layer connects to each neuron in the second layer, a1[1] is given by

a1[1] = f(w11[1]*a1[0] + w12[1]*a2[0] + w13[1]*a3[0] + B1)

where B1 is the bias term for this neuron and f is the activation function (for example, the sigmoid or ReLU function).
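To make the formula concrete, here is a minimal Python sketch that computes a1[1] for one neuron with three inputs. The input values, weights and bias are made up for illustration. The choice of a sigmoid activation is also an assumption; none of these values come from the figure.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation, used here as an example choice of f."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(weights, inputs, bias, activation=sigmoid):
    """Compute f(w1*a1 + w2*a2 + ... + B) for a single neuron."""
    if len(weights) != len(inputs):
        raise ValueError("each input needs exactly one weight")
    z = sum(w * a for w, a in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical values: a0 holds the input neurons a1[0], a2[0], a3[0];
# w1 holds the weights w11[1], w12[1], w13[1] feeding a1[1]; b1 plays the role of B1.
a0 = [0.5, -1.0, 2.0]
w1 = [0.2, 0.4, -0.1]
b1 = 0.1

a1_1 = neuron_output(w1, a0, b1)
print(round(a1_1, 4))  # z = -0.4, so this prints 0.4013
```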

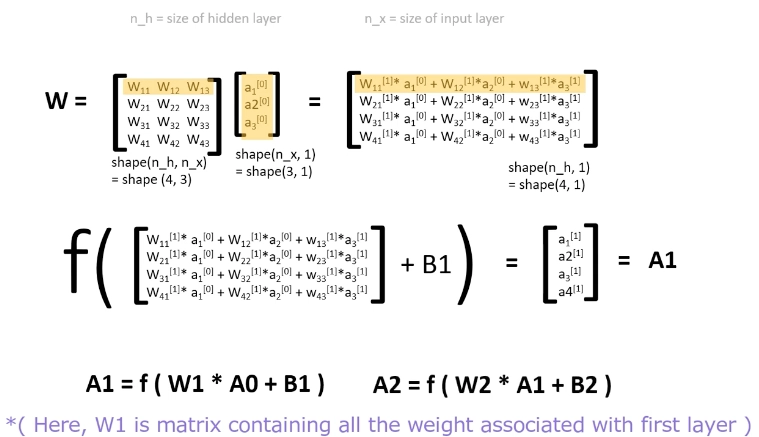

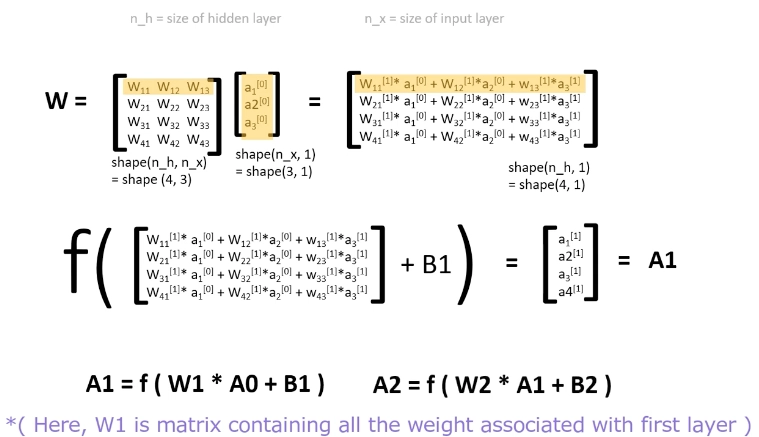

The other neurons of the layer are computed in the same way. The whole layer can therefore be expressed as a single matrix multiplication.


Here, W is the weight matrix of a layer, arranged row-wise. The first row holds the weights feeding the first neuron of that layer, the second row holds the weights feeding the second neuron, and so on. Multiply W by the column vector of neurons from the previous layer, add the bias term B(i), and pass the result through the activation function element by element. This gives the vector of all the neurons in the next layer.

Here, A1 denotes the vector of neurons in the second (hidden) layer, and W1 denotes the weight matrix connecting the first (input) layer to it. So A1 = f(W1·A0 + B1), where A0 is the vector of input neurons.
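The matrix form A1 = f(W1·A0 + B1) can be sketched in plain Python. Each row of W1 feeds one neuron of the layer, so computing the layer means taking one weighted sum per row. The layer sizes, weights and biases below are hypothetical. The sigmoid activation is again an assumed choice of f.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(W, a_prev, b, activation=sigmoid):
    """Compute A = f(W . a_prev + b) for one fully connected layer.

    W is a list of rows: row j holds the weights feeding neuron j of this
    layer, so len(W) is the number of neurons and len(W[j]) == len(a_prev).
    """
    if len(W) != len(b):
        raise ValueError("need one bias per neuron (row of W)")
    out = []
    for row, bias in zip(W, b):
        if len(row) != len(a_prev):
            raise ValueError("each row needs one weight per previous-layer neuron")
        z = sum(w * a for w, a in zip(row, a_prev)) + bias
        out.append(activation(z))
    return out

# Hypothetical layer with 3 inputs and 2 neurons.
# Row 0 holds w11[1], w12[1], w13[1]; row 1 holds w21[1], w22[1], w23[1].
A0 = [0.5, -1.0, 2.0]
W1 = [[0.2, 0.4, -0.1],
      [-0.3, 0.1, 0.25]]
B1 = [0.1, 0.0]

A1 = layer_forward(W1, A0, B1)
print([round(a, 4) for a in A1])  # prints [0.4013, 0.5622]
```

A library such as NumPy would perform the same product in a single call. Plain lists are used here only to keep every step visible.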

By repeating this step layer by layer, we can compute every subsequent layer up to the output layer.
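The sketch below runs a full forward pass by feeding each layer's output into the next layer. The weights are drawn at random, as they would be before the first training iteration. Several details are illustrative assumptions: the 3-4-1 layer sizes, the uniform range [-1, 1], the zero biases, the sigmoid activation and the helper names.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(W, a_prev, b, activation=sigmoid):
    # A = f(W . a_prev + b); row j of W feeds neuron j of this layer.
    return [activation(sum(w * a for w, a in zip(row, a_prev)) + bias)
            for row, bias in zip(W, b)]

def init_params(layer_sizes, seed=0):
    """Randomly initialise (W, b) for each pair of consecutive layers."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        params.append((W, b))
    return params

def forward(params, x):
    """Forward propagation: feed x through every layer, keeping all activations."""
    activations = [x]  # activations[0] is the input layer A0
    for W, b in params:
        activations.append(layer_forward(W, activations[-1], b))
    return activations

# Hypothetical network: 3 input neurons, 4 hidden neurons, 1 output neuron.
params = init_params([3, 4, 1], seed=42)
layers = forward(params, [0.5, -1.0, 2.0])
print([len(a) for a in layers])  # prints [3, 4, 1]
print(layers[-1])                # the single output neuron a1[2]
```

Keeping every layer's activations, rather than only the final output, is a deliberate choice. Backpropagation needs those intermediate values when it adjusts the weights.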

This layer-by-layer computation is called forward propagation. The natural next question is how the weights should be selected. They are chosen using feedback: the output generated by the network is compared with the actual output, and the resulting error is sent back through the network. The weights are then adjusted to minimise this error. This is known as backpropagation. You can check out our blog on Backpropagation to get a better understanding.
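For a single sigmoid neuron, the feedback loop reduces to a few lines. The sketch compares the output with the actual output using a squared error, then adjusts the weights one step in the direction that reduces that error (plain gradient descent). The error function, learning rate and values are assumptions made for illustration. This is not the full multi-layer backpropagation algorithm.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    # Forward pass of a single sigmoid neuron.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def squared_error(y_pred, y_true):
    return 0.5 * (y_pred - y_true) ** 2

def update_step(w, b, x, y_true, lr=0.5):
    """One gradient-descent step on E = 0.5 * (y - t)^2 for a single neuron."""
    y = predict(w, b, x)
    # dE/dz = (y - t) * sigmoid'(z), and sigmoid'(z) = y * (1 - y).
    delta = (y - y_true) * y * (1.0 - y)
    new_w = [wi - lr * delta * xi for wi, xi in zip(w, x)]
    new_b = b - lr * delta
    return new_w, new_b

x, target = [0.5, -1.0, 2.0], 1.0  # hypothetical input and actual output
w, b = [0.2, 0.4, -0.1], 0.1       # hypothetical starting weights and bias
before = squared_error(predict(w, b, x), target)
w, b = update_step(w, b, x, target)
after = squared_error(predict(w, b, x), target)
print(before > after)  # True: the error shrank after one update
```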


Frequently Asked Questions

- What is meant by forward propagation?

Ans. Computation of each layer in the neural network, starting from the input layer all the way to the output layer, is known as Forward Propagation.

- How are the weights of each layer selected?

Ans. Training starts with randomly selected weights. The weights are then gradually adjusted as the neural network receives feedback about the outputs it generates. The purpose of backpropagation is to adjust the weights so as to minimise the cost function.

- What is the difference between forward propagation and backpropagation?

Ans. Forward propagation computes the network's output from its input, layer by layer. Backpropagation sends the error feedback back through the network so that the weights can be corrected.

Key Learnings

Artificial Neural Networks form the basis of deep learning, so it's important to know exactly how ANNs work. This blog covers the principle behind forward propagation and the purpose it serves. It also explains how its objective differs from that of backpropagation. To understand ANNs in their entirety, we recommend visiting our other blogs on the topic. If you wish to build on your deep learning skills, you can check out our industry-oriented courses on deep learning, curated by industry experts.

Happy Learning!