How to use FLAIR for NLP

Installation

Flair is built on PyTorch, so you must install PyTorch before installing Flair. Follow the installation instructions on the official PyTorch website.

After that, run this command:

pip install flair

Flair Datatypes

Flair's data model is built around two types of objects:

- Sentence

- Token

import flair

from flair.data import Sentence

# take a sentence

s = Sentence('Coding Ninja is Awesome.')

print(s)

Output:

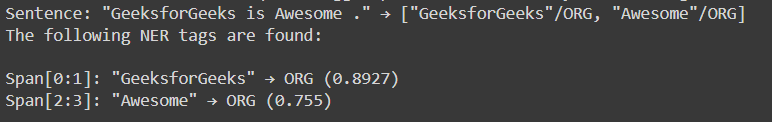

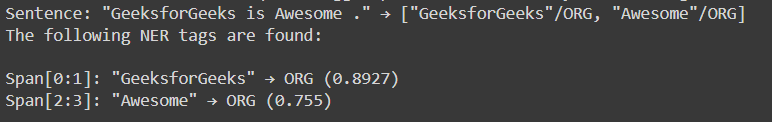
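To make the relationship between the two object types concrete, here is a minimal, library-free sketch of a sentence that holds a list of tokens. The `ToyToken` and `ToySentence` classes and the regex tokenizer are illustrative assumptions, not Flair's implementation; Flair's own `Sentence` uses its own tokenizer and also carries labels and embeddings.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ToyToken:
    """Hypothetical stand-in for a token: one word or punctuation mark."""
    idx: int
    text: str


@dataclass
class ToySentence:
    """Hypothetical stand-in for a sentence: raw text plus its tokens."""
    text: str
    tokens: list = field(default_factory=list)

    def __post_init__(self):
        # Split into runs of word characters or single punctuation marks.
        pieces = re.findall(r"\w+|[^\w\s]", self.text)
        self.tokens = [ToyToken(i, p) for i, p in enumerate(pieces)]

    def __iter__(self):
        return iter(self.tokens)

    def __len__(self):
        return len(self.tokens)


s = ToySentence("Coding Ninja is Awesome.")
token_texts = [t.text for t in s]
print(len(s), token_texts)
```

Iterating over the sentence yields one token at a time, which is the same pattern the Flair examples in this article use when they loop over a `Sentence`.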

NER Tags

To predict tags for a given sentence we will use a pre-trained model as shown below.

import flair

from flair.data import Sentence

from flair.models import SequenceTagger

# input a sentence

s = Sentence('Coding Ninjas is great.')

# loading NER tagger

tagger_NER = SequenceTagger.load('ner')

# run NER over sentence

tagger_NER.predict(s)

print(s)

print('The following NER tags are found:\n')

# iterate and print

for entity in s.get_spans('ner'):

    print(entity)
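The tagger reports entities as spans, that is, runs of one or more adjacent tokens that share a label. As a rough illustration of that idea, the sketch below groups per-token BIO tags into spans. The tags, the `bio_to_spans` helper and the `ORG` label on this sentence are made-up assumptions for the example; they are not output from Flair's model and not Flair's internal decoding.

```python
def bio_to_spans(tokens, tags):
    """Group tokens with B-/I- tags into (text, label) spans."""
    spans = []
    current_tokens, current_label = [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity starts; close any open one first.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [token], tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # Continue the open entity.
            current_tokens.append(token)
        else:
            # "O" (or an inconsistent I- tag) ends any open entity.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None
    if current_tokens:
        spans.append((" ".join(current_tokens), current_label))
    return spans


tokens = ["Coding", "Ninjas", "is", "great", "."]
tags = ["B-ORG", "I-ORG", "O", "O", "O"]  # hypothetical tags, not model output
spans = bio_to_spans(tokens, tags)
print(spans)
```

Each resulting span covers the full entity text rather than a single token, which is why the Flair example asks the sentence for spans instead of looping over tokens.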

Word Embeddings:

Word embeddings assign a vector (an embedding) to each individual word in a text. Flair offers a variety of word embeddings, including its own Flair embeddings. The examples below put a few of them into practice.

A) Classic Word Embeddings: These are static word embeddings. Each distinct word gets exactly one pre-computed embedding, regardless of the context it appears in. Most common word embeddings, including GloVe, fall into this category.

import flair

from flair.data import Sentence

from flair.embeddings import WordEmbeddings

# using glove embedding

GloVe_embedding = WordEmbeddings('glove')

# input a sentence

s = Sentence('Coding Ninjas has changed my life by giving me quality content.')

# embed the sentence

GloVe_embedding.embed(s)

# print the embedded tokens

for token in s:

    print(token)

    print(token.embedding)

Output:

Token[0]: "Coding"

tensor([-0.9485, -0.6832, 0.4411, 0.1044, -0.0971, -0.2315, 0.4491, 0.0338,

-0.0291, 0.7931, -0.1222, -0.0776, -0.8558, -0.3021, 0.4678, 1.3623,

0.4862, -0.4978, 0.5748, 0.2984, -1.3022, -0.6850, 0.9733, 0.2768,

0.5648, -0.7439, 0.5264, 0.0751, 0.0431, -0.1671, 0.5441, 0.2561,

-0.4419, -0.2748, 0.5930, -0.3694, -0.5157, 0.4142, 0.2500, -0.1992,

0.1126, -0.4630, -0.7945, -0.1299, -0.6545, 0.1559, -0.2571, -0.1784,

-0.8521, -0.0017, -0.3644, 0.4133, 0.3370, 0.7247, -0.1383, 0.1291,

0.0651, -0.0024, 0.5616, 0.5527, -0.0752, -0.3711, 0.4538, -0.4160,

0.4693, 0.5090, -0.4462, -0.4516, 0.2669, 0.1894, -0.8690, 0.3454,

0.5334, 0.1480, 0.9680, -0.2842, -0.0070, 0.2179, 0.0284, 0.0697,

-0.0954, -1.0090, -1.0657, -0.7193, -1.3071, 1.6410, -0.0777, -0.7094,

-0.7959, 0.1022, -0.1490, 0.5828, -0.6352, -0.0806, 0.4067, -0.3347,

0.1326, -0.8684, 0.0865, 0.5727])

Token[1]: "Ninjas"

tensor([ 0.1377, 0.2260, -0.1929, -0.3780, -0.7664, 0.6439, 0.3071, -0.6139,

-0.0262, -0.1599, 0.5059, 0.2083, -0.1994, 0.4609, -0.1929, 0.2725,

0.1978, -0.0470, -0.4616, -0.1050, 0.6107, -0.3809, 0.1142, 0.0899,

0.5405, 0.2280, -0.1065, 0.2831, -0.4941, -0.4357, 0.3478, -0.2997,

-0.8749, -0.1390, 0.6853, -0.2046, -0.2386, 0.3011, 0.6886, -0.8018,

0.0461, 0.7491, 0.2388, 0.2687, -0.2072, -0.0266, -0.6066, 0.5775,

0.4430, 0.2138, -1.3652, -0.0252, -0.0499, 0.6025, -0.3616, 0.2501,

0.5339, -0.2998, -0.2240, 0.0047, -0.3280, 0.2004, -0.1466, -0.2754,

0.1081, 0.3824, -0.6671, -0.0452, 0.0094, -0.0360, -0.2907, -0.3716,

-0.1948, 0.1394, -0.5052, -0.2369, 0.0577, 0.7543, 0.5573, -0.0993,

-0.2725, -0.5744, -0.5149, 0.5281, 0.4872, -0.2048, -0.2826, 0.9950,

0.4194, -0.6284, -0.1708, -0.2480, -0.4761, 0.1244, -0.1396, -1.0866,

-0.5709, 0.1172, 0.1468, -0.1035])

Token[2]: "has"

tensor([ 9.3736e-02, 5.6152e-01, 4.8364e-01, -4.5987e-01, 5.6067e-01,

-1.6940e-01, 1.8687e-02, 4.5529e-01, 6.5615e-02, 2.5181e-01,

-1.4251e-01, 1.0532e-01, 7.7865e-01, 1.4280e-01, -8.1140e-02,

-6.9555e-02, 3.2433e-01, 1.9611e-02, -1.5608e-01, 2.2235e-01,

3.5559e-01, 1.4713e-01, 1.9156e-01, 2.8030e-01, 2.7691e-01,

-2.0670e-01, -1.1378e-01, -4.8318e-01, -6.4248e-01, -3.5523e-01,

2.1939e-01, 1.2533e+00, -2.1164e-01, 9.1811e-01, 3.1986e-01,

4.8367e-01, 1.5322e-01, 5.6109e-01, -6.0692e-01, -2.8075e-02,

-9.2199e-01, -2.5583e-01, 6.6362e-01, -4.9082e-01, 3.4757e-01,

-4.8103e-02, 5.7283e-01, -6.2332e-01, 8.7508e-01, -5.0079e-01,

-1.2316e-01, -6.9096e-01, 1.0129e-01, 1.5160e+00, -1.7400e-01,

-2.8902e+00, -2.4541e-01, -1.7934e-01, 1.1001e+00, 1.4198e+00,

4.9132e-01, 3.0282e-01, 7.7149e-02, -9.7834e-02, 9.0586e-01,

-1.6150e-01, 5.5681e-01, 3.2817e-01, 4.9335e-01, 4.4815e-02,

5.7458e-01, -3.2663e-01, -2.9745e-01, 1.8070e-03, 2.4382e-01,

-5.1915e-01, -1.4392e-01, 2.7921e-01, -1.5964e+00, 3.7152e-01,

8.1129e-01, -1.3488e-01, -3.6534e-01, -2.2346e-02, -1.5091e+00,

-3.8727e-01, 3.0063e-01, -3.7562e-01, -1.8582e-01, -3.9748e-01,

-1.0719e-01, -1.2265e-01, -6.6462e-01, 1.2112e-01, -3.7281e-01,

6.0048e-01, -4.2683e-01, -8.1305e-01, 6.2397e-01, 7.3176e-01])

Token[3]: "changed"

tensor([ 5.9014e-01, -1.6524e-01, 2.9241e-01, 2.1487e-01, -1.7894e-01,

-9.6078e-02, 1.2266e-01, -6.0071e-02, 1.0477e-01, 1.0592e-01,

4.9439e-02, -7.5033e-02, 5.2681e-01, -3.1481e-01, 1.5618e-01,

-6.8104e-01, -3.9924e-02, 1.4993e-01, -2.7383e-01, -9.9363e-02,

2.4255e-01, -1.2560e-01, 2.1889e-01, 9.6639e-04, -1.9069e-01,

-3.2811e-01, 3.1624e-02, -8.4373e-01, 4.5889e-02, 7.1095e-02,

4.0193e-01, 1.2175e+00, -4.2473e-01, -9.8046e-02, -2.8234e-01,

3.4936e-01, -1.3179e-01, -3.5067e-01, -3.9365e-01, -6.8285e-01,

-1.4058e-01, -8.1491e-01, 5.9576e-02, 1.2649e-01, -5.6070e-01,

9.0764e-02, 2.9091e-01, 5.7168e-02, 1.0911e-01, -1.4859e+00,

1.6127e-01, -1.1542e-01, 7.7548e-01, 1.2050e+00, -1.4691e-01,

-1.9732e+00, -1.6329e-01, 1.4997e-03, 1.2589e+00, 5.0079e-01,

2.9990e-02, 7.1761e-01, -4.1721e-01, -1.8725e-01, 5.5176e-01,

-1.1622e-01, -3.0309e-01, -1.3413e-01, 1.6841e-01, 3.8622e-01,

-5.1597e-02, -4.4127e-03, -1.8865e-01, -3.1188e-01, 2.4158e-01,

-3.8708e-01, -4.6993e-02, 3.2547e-01, -4.8506e-01, -2.3362e-01,

-5.6920e-01, -5.0932e-01, -1.9597e-01, -9.7976e-02, -9.5955e-01,

-1.2310e-01, -3.1017e-01, -1.0086e+00, -3.7292e-01, 1.2004e-01,

-3.7904e-01, -1.0555e-01, -2.5156e-01, 4.2394e-01, -4.4824e-01,

1.1170e-01, -5.0723e-01, -3.1289e-02, -6.2690e-02, 1.4181e-01])

Token[4]: "my"

tensor([ 0.0803, -0.1086, 0.7207, -0.4514, -0.7496, 0.6378, -0.2571, 0.4161,

-0.0545, 0.3556, 0.3586, 0.5400, 0.4912, 0.2571, -0.2147, -0.4284,

-0.4232, 0.3872, -0.3569, 0.4012, -0.1985, 0.4345, -0.3648, 0.0717,

0.5332, 0.8456, -0.6754, -1.2527, 0.8376, -0.1593, 0.3780, 0.9454,

0.8307, 0.1943, -0.5845, 0.5828, -0.6256, 0.4904, 0.4327, -0.5425,

0.1045, -0.1626, 0.9900, -0.7422, -0.5978, 0.1019, -0.3357, -0.3909,

0.1513, -1.3533, -0.1126, 0.1435, 0.0381, 1.1167, -0.2308, -2.6394,

0.6685, 0.4845, 1.8796, 0.0803, 0.7373, 1.8058, -0.5193, 0.0041,

0.7699, 0.3688, 0.8114, 0.1694, -0.1192, -0.2650, 0.2269, 0.7694,

0.8520, -0.9777, 0.2318, 0.8814, -0.2709, -0.3991, -0.5719, 0.0756,

0.1809, 0.5904, -0.1343, -0.1206, -1.8157, -0.3550, -0.3172, -0.2704,

-0.6723, -0.0410, -0.4433, 0.3565, 1.0247, 0.4969, -0.5170, -0.4928,

-0.3340, -0.3484, 0.3147, 1.0087])

Token[5]: "life"

tensor([ 0.2516, 0.4589, 0.3027, 0.1246, 0.1506, 0.7373, -0.3143, -0.3131,

-0.4089, 0.0425, -0.4261, 0.4955, 0.0105, 0.2220, 0.0288, -0.5905,

0.5335, 0.1780, -0.2449, 0.9269, 0.2706, -0.0963, -0.0038, 0.0652,

0.5849, 0.3937, -0.4344, -1.0214, -0.1204, 0.3056, -0.1696, 0.1867,

-0.4401, -0.3458, -0.4686, 0.1854, -0.3038, 0.4990, -0.3754, -0.5204,

-0.5141, -0.1344, 0.1174, -0.1379, -0.2505, 0.4127, 0.0610, -0.1037,

0.3436, -0.5338, -0.0136, -0.5058, 0.4702, 1.5464, 0.2180, -2.2455,

0.3539, -0.1286, 1.5870, 0.7957, 0.1543, 1.2620, -0.2617, -0.6863,

1.1039, 0.3391, 0.8808, -0.2441, 0.2174, -0.2120, 0.0974, -0.1773,

0.0629, -0.1105, 0.2185, 0.0388, 0.1723, -0.5824, -0.8661, -0.0220,

-0.0118, 0.9020, 0.2041, 0.7052, -1.5673, 0.1123, -0.3544, -0.7339,

-0.2852, -0.7496, 0.4904, -0.4536, 0.8172, 0.1501, -0.3177, 0.0317,

0.0699, -0.7478, 0.1368, 0.1893])

Token[6]: "by"

tensor([-0.2087, -0.1174, 0.2648, -0.2834, 0.1958, 0.7446, -0.0389, 0.0285,

-0.4425, -0.3043, 0.2713, -0.5191, 0.5218, -0.7665, 0.2804, -0.4834,

-0.1563, -0.4970, -0.5102, -0.0365, 0.2058, -0.6136, 0.4639, 0.7350,

0.6681, -0.4443, -0.1760, -0.5478, -0.0135, 0.1633, 0.2815, 0.0542,

-0.1991, -0.1907, -0.4318, 0.1478, 0.2756, 0.1857, -0.4078, -0.1541,

-0.5885, -0.0085, -0.1418, 0.7061, 0.5403, -0.4331, 0.1750, -0.4621,

-0.3137, -0.3404, -0.2513, 0.6823, 0.3358, 1.5862, -0.3943, -2.9938,

-0.2977, 0.0421, 1.9075, -0.0726, -0.0922, 0.6613, 0.1387, 0.7877,

0.6931, -0.2218, 0.7171, 1.1453, 1.2153, 0.1420, -0.7991, 0.1697,

-0.3453, -0.5174, -0.1565, 0.1876, 0.1694, -0.0083, -1.4511, 0.0620,

1.1019, 0.0844, -0.3415, 0.4999, -1.1106, -0.1376, 0.1538, -0.0610,

-0.5383, -0.7894, -0.1257, -0.5738, -0.7348, 0.5477, -0.2846, -0.2435,

-0.2751, -0.3327, 0.2788, -0.8705])

Token[7]: "giving"

tensor([ 3.3218e-01, 2.5289e-02, 4.4659e-01, 2.9877e-01, 3.4465e-01,

5.1759e-01, -3.9559e-01, 9.8973e-02, -1.5446e-01, -6.2742e-01,

1.8752e-01, -4.1123e-01, -9.6717e-02, -5.0206e-02, 2.5483e-01,

-2.3937e-01, -2.8515e-01, -2.1644e-01, -2.2703e-02, 6.3781e-01,

-1.0152e-01, -7.9121e-02, -3.0515e-01, -2.2891e-01, 4.8011e-02,

1.2372e-01, -3.9784e-01, -2.1613e-01, -1.2284e-01, -4.0349e-02,

2.3041e-01, 3.9889e-01, -1.1838e-01, -2.3601e-01, -8.9005e-02,

-1.1504e-01, -1.9619e-01, 2.5139e-01, -7.6092e-01, 2.0833e-01,

-2.4797e-01, -1.9797e-01, 5.7382e-01, -1.1180e-01, -2.6223e-01,

-5.3075e-01, 7.9536e-02, -4.2935e-01, -3.1349e-01, -6.4805e-01,

-7.8088e-04, 2.6649e-01, -2.0073e-01, 9.7418e-01, -1.4793e-01,

-2.2970e+00, 4.1794e-01, -3.6036e-01, 1.6881e+00, 1.1836e-01,

5.6897e-02, 2.0981e-01, -1.3822e-01, 2.2690e-01, 3.0856e-01,

4.1016e-02, 3.6934e-01, 3.7889e-01, 1.7157e-02, -7.6909e-02,

-5.2752e-01, 5.3872e-03, -1.7535e-01, -4.0128e-01, 1.5650e-01,

6.5140e-01, -3.4173e-01, -4.0770e-01, -6.7466e-01, -2.4945e-01,

5.2996e-01, 1.4866e-02, -6.0091e-01, -3.0200e-01, -1.2516e+00,

1.3198e-01, 2.6113e-01, 3.4633e-01, -8.5451e-02, -1.1992e-01,

5.0940e-03, -8.0446e-01, 1.4242e-01, -6.1518e-01, -5.7138e-01,

-5.4933e-01, -1.5083e-01, 1.1212e-01, 4.3103e-01, 2.3263e-01])

Token[8]: "me"

tensor([ 5.6719e-02, 1.3333e-01, 7.2690e-01, -4.6336e-01, -5.9334e-01,

7.1746e-01, -1.1795e-01, 2.1614e-01, 4.3036e-01, -6.7053e-01,

5.7480e-01, 2.6827e-01, 2.4659e-02, 1.6066e-01, 2.0400e-01,

-3.9246e-01, -6.3294e-01, 6.2915e-01, -7.6340e-01, 1.1581e+00,

3.6218e-01, 3.1932e-01, -6.5613e-01, -4.7797e-01, 2.9885e-01,

6.2435e-01, -4.6060e-01, -9.6276e-01, 1.2214e+00, -2.3152e-01,

-6.8889e-02, 6.3519e-01, 7.7546e-01, 3.3128e-01, -3.5220e-01,

7.4236e-01, -6.6703e-01, 3.2260e-01, 4.3490e-01, -6.0154e-01,

-4.2067e-01, 2.1991e-02, 1.6378e-01, -9.5682e-01, -6.4464e-01,

-9.4111e-02, -2.7105e-01, -2.3312e-01, -3.8453e-01, -1.2665e+00,

-1.8289e-01, 5.0432e-01, -5.4260e-02, 1.1872e+00, -4.7143e-01,

-2.6562e+00, 4.4917e-01, 6.7218e-01, 1.4074e+00, 1.9179e-03,

4.0658e-01, 1.4287e+00, -1.0631e+00, -2.1713e-01, 4.7800e-01,

1.3170e-01, 1.2494e+00, 7.2980e-01, -2.0880e-01, 2.5449e-01,

1.2297e-01, 3.4922e-01, 2.4051e-01, -8.1023e-01, 5.2047e-01,

6.8801e-01, 6.7784e-02, -2.2132e-01, -1.2176e-01, -1.6238e-01,

5.3189e-01, -3.2943e-01, -5.3818e-01, -2.2957e-01, -1.4103e+00,

1.6494e-02, -1.3494e-01, -3.6251e-02, -7.7385e-01, -3.5178e-01,

-1.1230e-01, -3.7400e-01, 5.5139e-01, -1.9572e-01, -8.7050e-02,

-3.1469e-01, -4.2257e-01, -3.5286e-02, -2.4911e-02, 6.2131e-01])

Token[9]: "quality"

tensor([ 0.1103, 0.1253, 0.5215, 0.2598, -0.2896, -0.7153, -0.1205, -0.1618,

-0.5464, 0.5405, -0.3801, -0.6569, 0.3436, -0.2196, -0.2897, 0.0498,

0.7592, 0.1586, 0.4355, 0.6712, -0.7538, -0.3516, 0.1692, -0.6701,

-0.0801, 0.2232, 0.1115, -0.3422, -0.8780, -0.1048, 0.0118, 0.1775,

-0.4033, 0.2135, 0.2932, 0.3522, -0.4174, 0.5145, -0.5759, -0.5394,

0.2346, -0.8272, -0.3761, -0.1666, 0.2284, 0.1467, -0.0313, -0.7566,

-0.1011, -1.1077, 0.3854, -0.2678, -0.6217, 1.1614, 0.5684, -1.5594,

0.1835, 0.1143, 1.8400, -0.6741, 0.0077, 0.4336, -0.5318, 0.1763,

0.4175, -0.0989, 0.6215, -0.3858, 0.8044, -0.3297, 0.4026, 0.5385,

0.7844, 0.2654, 1.1350, 0.5131, -0.3069, 0.1659, -0.1746, -0.2624,

0.5942, 0.1902, -0.5581, -0.0366, -1.6606, 0.1614, 0.0476, -0.3759,

-0.6824, 0.2062, -0.0093, -0.3852, -0.4286, -0.0577, -0.5203, 0.4712,

-0.6065, -0.9291, 0.9071, 0.5735])

Token[10]: "content"

tensor([-0.1856, -0.1996, 0.1397, -0.4034, 0.5065, -0.0204, -0.1699, -0.2575,

0.1849, 0.1320, 0.5099, -0.4227, -0.1058, -1.1797, 0.1069, 0.1852,

0.2057, -0.1548, -0.0025, 0.4229, -0.6255, -0.4793, 0.3382, 0.3945,

-0.4465, 0.1249, 0.6977, 0.7655, -0.4459, 0.5499, 0.3186, 1.1514,

-0.5582, -0.6907, 0.9843, 0.3429, 0.0930, -0.0668, -0.9322, -0.2489,

0.1780, -0.4086, -0.7967, -0.0255, -0.1949, -0.4062, -0.7626, -0.3136,

0.2474, -0.6902, 0.9404, 0.2332, -0.3650, 0.7000, 0.0059, -1.4976,

-0.4290, -0.6553, 1.9299, -0.1564, 0.3897, 0.3707, -0.4576, -0.6196,

1.3286, 0.0638, 1.4521, -0.4421, 0.2418, -0.7970, 0.1790, 0.0750,

1.4354, 0.2880, -0.2649, 0.0510, 0.0991, -0.2074, -0.2811, -0.4952,

-0.3431, -0.6003, -0.1989, -0.0249, -1.5615, 0.1260, 0.0107, -0.0588,

-0.0590, 0.0678, -0.4709, 0.1068, -0.3152, -0.1949, -0.0503, -0.5000,

-0.2581, -0.9514, 0.9176, 0.8282])

Token[11]: "."

tensor([-0.3398, 0.2094, 0.4635, -0.6479, -0.3838, 0.0380, 0.1713, 0.1598,

0.4662, -0.0192, 0.4148, -0.3435, 0.2687, 0.0446, 0.4213, -0.4103,

0.1546, 0.0222, -0.6465, 0.2526, 0.0431, -0.1945, 0.4652, 0.4565,

0.6859, 0.0913, 0.2188, -0.7035, 0.1679, -0.3508, -0.1263, 0.6638,

-0.2582, 0.0365, -0.1361, 0.4025, 0.1429, 0.3813, -0.1228, -0.4589,

-0.2528, -0.3043, -0.1121, -0.2618, -0.2248, -0.4455, 0.2991, -0.8561,

-0.1450, -0.4909, 0.0083, -0.1749, 0.2752, 1.4401, -0.2124, -2.8435,

-0.2796, -0.4572, 1.6386, 0.7881, -0.5526, 0.6500, 0.0864, 0.3901,

1.0632, -0.3538, 0.4833, 0.3460, 0.8417, 0.0987, -0.2421, -0.2705,

0.0453, -0.4015, 0.1139, 0.0062, 0.0367, 0.0185, -1.0213, -0.2081,

0.6407, -0.0688, -0.5864, 0.3348, -1.1432, -0.1148, -0.2509, -0.4591,

-0.0968, -0.1795, -0.0634, -0.6741, -0.0689, 0.5360, -0.8777, 0.3180,

-0.3924, -0.2339, 0.4730, -0.0288])
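The defining property of classic embeddings is that a word maps to the same vector wherever it appears. The sketch below shows this with a tiny hand-written lookup table. The three-dimensional vectors, the zero vector for unknown words and the `embed_static` helper are invented for illustration and are unrelated to real GloVe values.

```python
# Hypothetical 3-dimensional vectors; the GloVe vectors printed above
# have 100 values each.
TOY_VECTORS = {
    "bank": [0.2, -0.1, 0.7],
    "river": [0.9, 0.3, -0.4],
    "money": [-0.5, 0.8, 0.1],
}
UNKNOWN = [0.0, 0.0, 0.0]  # assumption of this toy: unknown words get zeros


def embed_static(words):
    """Look up each word independently; context is never consulted."""
    return [TOY_VECTORS.get(w.lower(), UNKNOWN) for w in words]


a = embed_static("the river bank".split())
b = embed_static("money in the bank".split())
same = a[2] == b[3]  # compare the vector for "bank" in both sentences
print(same)
```

Because the lookup ignores neighbouring words, "bank" gets an identical vector in both sentences even though its meaning differs.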

B) Flair Embeddings: This technique is based on contextual string embeddings, which capture latent syntactic and semantic information. Each word's embedding is contextualized by the words around it, so the same word gets a different embedding depending on the surrounding text.

import flair

from flair.data import Sentence

from flair.embeddings import FlairEmbeddings

# using the forward Flair embedding

forward_flair_embedding = FlairEmbeddings('news-forward-fast')

# input the sentence

s = Sentence('Coding Ninjas has changed my life by giving me quality content.')

# embed words in the input sentence

forward_flair_embedding.embed(s)

# print the embedded tokens

for token in s:

    print(token)

    print(token.embedding)

Output:

Token[0]: "Coding"

tensor([ 8.8816e-06, -5.0592e-04, 4.5855e-03, ..., -2.2687e-09,

2.5091e-04, 1.5499e-03])

Token[1]: "Ninjas"

tensor([-4.4836e-03, -8.4255e-04, -4.2968e-03, ..., -3.7876e-09,

-3.7533e-06, 4.1339e-02])

Token[2]: "has"

tensor([-1.1524e-02, -7.5499e-05, 8.9049e-05, ..., -1.4477e-07,

8.1057e-06, 2.2234e-04])

Token[3]: "changed"

tensor([-8.2025e-03, -1.2155e-04, 1.0319e-02, ..., -3.9466e-10,

-3.1091e-06, 2.4481e-02])

Token[4]: "my"

tensor([-6.4847e-04, -1.4995e-06, -5.0240e-03, ..., -2.5867e-08,

-3.4497e-05, 7.9547e-03])

Token[5]: "life"

tensor([-3.1060e-03, -6.2251e-05, 3.0458e-02, ..., -7.6064e-09,

7.7145e-04, 1.7327e-02])

Token[6]: "by"

tensor([-4.5850e-03, -2.0143e-05, 1.0356e-03, ..., -3.8542e-09,

1.6641e-04, 1.2065e-03])

Token[7]: "giving"

tensor([-1.1326e-03, -1.0918e-04, 5.3230e-02, ..., -2.6280e-08,

1.0056e-05, 9.6235e-04])

Token[8]: "me"

tensor([-4.0165e-02, -9.0741e-05, 9.6876e-03, ..., -1.0702e-09,

-2.2489e-03, 1.4560e-01])

Token[9]: "quality"

tensor([-1.2143e-02, -2.0668e-05, -4.8624e-02, ..., -1.8722e-09,

-1.2585e-06, 8.7331e-03])

Token[10]: "content"

tensor([-2.1095e-03, -3.2740e-04, 1.7810e-02, ..., -4.6556e-09,

1.0749e-05, 2.5840e-02])

Token[11]: "."

tensor([ 7.3251e-04, -2.0609e-06, 6.5170e-04, ..., -2.0037e-08,

-2.5030e-06, 8.4512e-04])
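To see why a contextual embedding can differ for the same word, consider a toy model that reads the text one character at a time and keeps a running state; the embedding of a word is the state right after its last character, so everything read before the word influences it. The recurrence below is a deliberately simple, deterministic stand-in chosen for this sketch. It is not Flair's character language model, and `toy_forward_states` and `embed_contextual` are hypothetical helper names.

```python
def toy_forward_states(text, dim=4):
    """Run a tiny deterministic recurrence over characters, left to right.

    Returns the state after each character.
    """
    state = [0.0] * dim
    states = []
    for ch in text:
        code = ord(ch)
        # New state mixes the old state with a value derived from the character.
        state = [
            0.5 * state[i] + ((code * (i + 1)) % 17) / 17.0
            for i in range(dim)
        ]
        states.append(state)
    return states


def embed_contextual(text, word):
    """Embedding of `word` = state right after its last character."""
    start = text.index(word)  # first occurrence of the word
    end = start + len(word) - 1
    return toy_forward_states(text)[end]


v1 = embed_contextual("the river bank", "bank")
v2 = embed_contextual("money in the bank", "bank")
differs = v1 != v2
print(differs)
```

Here the two vectors for "bank" are not equal, because the characters that precede the word differ between the two sentences.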

C) Stacked Embeddings: These let you combine multiple embeddings into one. Let's see how to use GloVe together with forward and backward Flair embeddings:

import flair

from flair.data import Sentence

from flair.embeddings import FlairEmbeddings, WordEmbeddings

from flair.embeddings import StackedEmbeddings

# flair embeddings

forward_flair_embedding = FlairEmbeddings('news-forward-fast')

backward_flair_embedding = FlairEmbeddings('news-backward-fast')

# glove embedding

GloVe_embedding = WordEmbeddings('glove')

# create an object that combines the three embeddings

stacked_embeddings = StackedEmbeddings([
    forward_flair_embedding,
    backward_flair_embedding,
    GloVe_embedding,
])

# input the sentence

s = Sentence('Coding Ninjas has changed my life by giving me quality content.')

# embed the input sentence with the stacked embedding

stacked_embeddings.embed(s)

# print the embedded tokens

for token in s:

    print(token)

    print(token.embedding)

Output:

Token[0]: "Coding"

tensor([ 8.8816e-06, -5.0592e-04, 4.5855e-03, ..., -8.6836e-01,

8.6507e-02, 5.7270e-01])

Token[1]: "Ninjas"

tensor([-0.0045, -0.0008, -0.0043, ..., 0.1172, 0.1468, -0.1035])

Token[2]: "has"

tensor([-1.1524e-02, -7.5499e-05, 8.9049e-05, ..., -8.1305e-01,

6.2397e-01, 7.3176e-01])

Token[3]: "changed"

tensor([-8.2025e-03, -1.2155e-04, 1.0319e-02, ..., -3.1289e-02,

-6.2690e-02, 1.4181e-01])

Token[4]: "my"

tensor([-6.4847e-04, -1.4995e-06, -5.0240e-03, ..., -3.4842e-01,

3.1466e-01, 1.0087e+00])

Token[5]: "life"

tensor([-3.1060e-03, -6.2251e-05, 3.0458e-02, ..., -7.4780e-01,

1.3684e-01, 1.8927e-01])

Token[6]: "by"

tensor([-4.5850e-03, -2.0143e-05, 1.0356e-03, ..., -3.3267e-01,

2.7878e-01, -8.7050e-01])

Token[7]: "giving"

tensor([-1.1326e-03, -1.0918e-04, 5.3230e-02, ..., 1.1212e-01,

4.3103e-01, 2.3263e-01])

Token[8]: "me"

tensor([-4.0165e-02, -9.0741e-05, 9.6876e-03, ..., -3.5286e-02,

-2.4911e-02, 6.2131e-01])

Token[9]: "quality"

tensor([-1.2143e-02, -2.0668e-05, -4.8624e-02, ..., -9.2914e-01,

9.0714e-01, 5.7346e-01])

Token[10]: "content"

tensor([-2.1095e-03, -3.2740e-04, 1.7810e-02, ..., -9.5140e-01,

9.1759e-01, 8.2825e-01])

Token[11]: "."

tensor([ 7.3251e-04, -2.0609e-06, 6.5170e-04, ..., -2.3394e-01,

4.7298e-01, -2.8803e-02])
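Conceptually, stacking concatenates the vectors that each embedding produces for a token, so the stacked vector's length is the sum of the individual lengths. The sketch below shows this with plain lists; the `stack` helper and the small hand-written vectors are assumptions for illustration, not Flair code or real embedding values.

```python
def stack(*vectors_per_embedding):
    """Concatenate, token by token, the vectors from several embeddings."""
    stacked = []
    for token_vectors in zip(*vectors_per_embedding):
        combined = []
        for vec in token_vectors:
            combined.extend(vec)
        stacked.append(combined)
    return stacked


# Hypothetical outputs of three embeddings for a two-token sentence.
forward = [[0.1, 0.2], [0.3, 0.4]]
backward = [[0.5, 0.6], [0.7, 0.8]]
static = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]

stacked = stack(forward, backward, static)
print(len(stacked[0]))
print(stacked[0])
```

This is consistent with the output printed above: each stacked tensor begins with the same values as the forward Flair embedding and ends with the same values as the GloVe embedding for that token.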

Document Embeddings:

Unlike word embeddings, document embeddings provide a single embedding for the whole text. Flair offers the following document embeddings:

A) Transformer Document Embeddings

B) Sentence Transformer Document Embeddings

C) Document RNN Embeddings

D) Document Pool Embeddings

Let's look at how Document Pool Embeddings work.

Document Pool Embeddings: This is a very simple document embedding that pools the embeddings of all the words in the text and returns their average.

import flair

from flair.data import Sentence

from flair.embeddings import WordEmbeddings, DocumentPoolEmbeddings

# init the glove word embedding

GloVe_embedding = WordEmbeddings('glove')

# init the document embedding

doc_embeddings = DocumentPoolEmbeddings([GloVe_embedding])

# input the sentence

s = Sentence('Coding Ninjas has changed my life by giving me quality content.')

# embed the input sentence with the document embedding

doc_embeddings.embed(s)

# print the document embedding

print(s.embedding)

Output:

tensor([-2.5027e-03, 3.8810e-02, 3.8421e-01, -1.7375e-01, -1.0837e-01,

2.3367e-01, -2.8789e-02, 1.8458e-04, -3.4181e-02, 3.6676e-02,

1.5011e-01, -7.4053e-02, 1.4235e-01, -1.2873e-01, 9.5148e-02,

-1.1877e-01, 9.3658e-02, 1.0966e-02, -2.0236e-01, 3.9587e-01,

-7.4248e-02, -1.7542e-01, 1.3372e-01, 8.2817e-02, 2.9034e-01,

7.7647e-02, -6.4879e-02, -4.3748e-01, -3.3444e-02, -6.2901e-02,

1.9740e-01, 5.5336e-01, -1.9368e-01, -2.3447e-02, 4.4275e-02,

2.4168e-01, -2.0262e-01, 2.9198e-01, -1.9744e-01, -3.8078e-01,

-2.0090e-01, -2.3385e-01, 4.8811e-02, -1.6017e-01, -2.0675e-01,

-8.9702e-02, -6.5502e-02, -3.0937e-01, 4.9592e-03, -6.8379e-01,

-7.6543e-02, 3.8580e-02, 8.6789e-02, 1.1467e+00, -1.2379e-01,

-1.9347e+00, 1.0475e-01, -6.4031e-02, 1.3812e+00, 2.7994e-01,

1.0215e-01, 6.3930e-01, -2.3163e-01, -7.6195e-02, 6.8314e-01,

7.3629e-02, 4.7710e-01, 1.1621e-01, 3.2899e-01, -5.0072e-02,

-9.8042e-02, 9.1839e-02, 2.2936e-01, -2.2398e-01, 2.4192e-01,

1.3251e-01, -4.2875e-02, -5.4103e-03, -5.5485e-01, -9.6144e-02,

2.5825e-01, -1.2035e-01, -4.0800e-01, 4.2897e-02, -1.2341e+00,

7.2225e-02, -7.9506e-02, -2.2897e-01, -3.4404e-01, -2.3006e-01,

-1.2795e-01, -2.2462e-01, -8.6607e-02, 1.0467e-01, -3.1499e-01,

-1.6571e-01, -3.1184e-01, -4.2184e-01, 3.5239e-01, 3.2476e-01])
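The averaging step itself is simple enough to write out by hand. The sketch below mean-pools a list of token vectors into one document vector; `mean_pool` and the two-dimensional vectors are illustrative assumptions, not Flair's implementation.

```python
def mean_pool(token_vectors):
    """Average token vectors dimension by dimension."""
    n = len(token_vectors)
    dim = len(token_vectors[0])
    return [sum(vec[i] for vec in token_vectors) / n for i in range(dim)]


# Hypothetical 2-dimensional token vectors for a three-token text.
token_vectors = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
doc_vector = mean_pool(token_vectors)
print(doc_vector)
```

The same relationship is visible in the outputs in this article: the first value of the document vector printed above, about -0.0025, is the mean of the first values of the twelve GloVe token vectors printed earlier (for example -0.9485 for "Coding" and 0.1377 for "Ninjas").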

Frequently Asked Questions

1. What is the Flair model?

Flair is a robust open-source natural language processing library. Its most common applications include text extraction, word embedding, named entity recognition, part-of-speech tagging, and text classification. Flair ships with pre-trained models for many of these tasks.

2. What is a sentence for Flair?

A Sentence object holds a textual sentence as a list of Token objects.

3. Is Flair better than spaCy?

Whether Flair or spaCy is the better library depends entirely on the use case. spaCy is faster, but the optimizations in Flair make it a considerably better choice for several use cases. spaCy is also a very popular library with excellent documentation.

4. What is flair in NLP?

Zalando Research developed and open-sourced Flair, a simple natural language processing (NLP) library. Flair's framework is based on PyTorch, one of the most popular deep learning frameworks.

Conclusion

In a nutshell, Flair is a great library for NLP: it offers state-of-the-art models and can be used for a wide range of NLP applications and tasks.
