Introduction

The Media Translation API delivers real-time speech translation from audio data directly to your content and applications. Built on Google's machine learning technology, it improves accuracy, simplifies integration, and offers a range of features for tuning your translation results. Low-latency streaming translation improves the user experience, and simple internationalization lets you scale quickly.

The API improves translation accuracy by optimizing the model integration from audio to text, and it removes the friction of chaining several API requests. You make a single API call, and Media Translation handles the rest.

Translating streaming audio into text

Media Translation is the process of converting an audio file or a stream of speech into text in another language.

Setting up the project

To use Media Translation, you must first create a Google Cloud project and enable the Media Translation API for that project.

- If you're new to Google Cloud, create an account to evaluate how the products perform in real-world scenarios.
- On the project selector page of the Google Cloud console, select or create a Google Cloud project.
- Check that billing is enabled for your Cloud project.
- Enable the Media Translation API.
- Set up a service account:
  - In the console, go to the Create service account page.
  - Select your project.
  - In the Service account name field, enter a name. The console fills in the Service account ID field based on this name.

    In the Service account description field, enter a description, for example, "Service account for quickstart".
  - Click Create.
  - To give the service account access to your project, grant it the following role: Project > Owner.

    In the Select a role list, select a role.

    For additional roles, click + Add another role and add each additional role.
  - Click Continue.
  - Click Done to finish creating the service account.

    Keep your browser window open. You will use it in the next step.
- Create a key for your service account:
  - In the console, click the email address of the service account that you created.
  - Click Keys.
  - Click Add key, and then click Create new key.
  - Click Create. A JSON key file is downloaded to your computer.
  - Click Close.
- Set the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of the JSON file that contains your service account key. This variable applies only to the current shell session, so if you open a new session, you must set it again.
  - Linux or macOS:

    Replace KEY_PATH with the path of the JSON file that contains your service account key.

    export GOOGLE_APPLICATION_CREDENTIALS="KEY_PATH"
  - Windows:

    Replace KEY_PATH with the path of the JSON file that contains your service account key.

    For PowerShell:

    $env:GOOGLE_APPLICATION_CREDENTIALS="KEY_PATH"

    For command prompt:

    set GOOGLE_APPLICATION_CREDENTIALS=KEY_PATH
- Install and initialize the Google Cloud CLI.
- Install the client library for the language you want to use.
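
Before running the samples, it can help to confirm that the credentials variable is set and points at a usable key file. The following is a minimal sketch for Node.js; checkCredentials is a hypothetical helper written for this walkthrough, not part of any Google library, and it only inspects the type and client_email fields of the key file.

```javascript
const fs = require('fs');

// Hypothetical helper (not part of any Google library): reports whether the
// credentials variable is set and points at a parseable service account key.
function checkCredentials(env = process.env) {
  const keyPath = env.GOOGLE_APPLICATION_CREDENTIALS;
  if (!keyPath) {
    return {ok: false, reason: 'GOOGLE_APPLICATION_CREDENTIALS is not set'};
  }
  let raw;
  try {
    raw = fs.readFileSync(keyPath, 'utf8');
  } catch (err) {
    return {ok: false, reason: `cannot read key file: ${err.message}`};
  }
  let key;
  try {
    key = JSON.parse(raw);
  } catch (err) {
    return {ok: false, reason: 'key file is not valid JSON'};
  }
  if (key.type !== 'service_account') {
    return {ok: false, reason: 'key file is not a service account key'};
  }
  return {ok: true, clientEmail: key.client_email};
}

// Check the current shell session and print a one-line summary.
const result = checkCredentials();
console.log(result.ok ? `Using ${result.clientEmail}` : result.reason);
```

Passing the environment as a parameter keeps the check easy to exercise without touching the real shell session.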

Translate speech

The code examples below show how to translate speech from an audio file of up to five minutes or from a live microphone.
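
As a rough check against the five-minute limit, the duration of raw audio can be estimated from its size in bytes. The sketch below assumes headerless, mono LINEAR16 audio sampled at 16000 Hz; those defaults are assumptions, and linear16DurationSeconds is a hypothetical helper rather than part of the client library.

```javascript
// Hypothetical helper: estimates the duration of headerless 16-bit PCM audio.
function linear16DurationSeconds(byteLength, sampleRateHertz = 16000, channels = 1) {
  const bytesPerSample = 2; // LINEAR16 stores each sample in 16 bits
  return byteLength / (sampleRateHertz * channels * bytesPerSample);
}

const FIVE_MINUTES = 5 * 60;
// 9,600,000 bytes at 32,000 bytes per second is exactly 300 seconds.
const seconds = linear16DurationSeconds(9600000);
console.log(seconds, seconds <= FIVE_MINUTES); // 300 true
```

For a file on disk, the byte length could come from fs.statSync(filename).size. Audio with a container header, such as WAV, would need the header size subtracted first.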

Translating speech from an audio file

const fs = require('fs');

// Imports the Cloud Media Translation client library

const {

SpeechTranslationServiceClient,

} = require('@google-cloud/media-translation');

// Creates a client

const client = new SpeechTranslationServiceClient();

async function translate_from_file() {

/**

* TODO for developer, Uncomment the following lines before running the sample.

*/

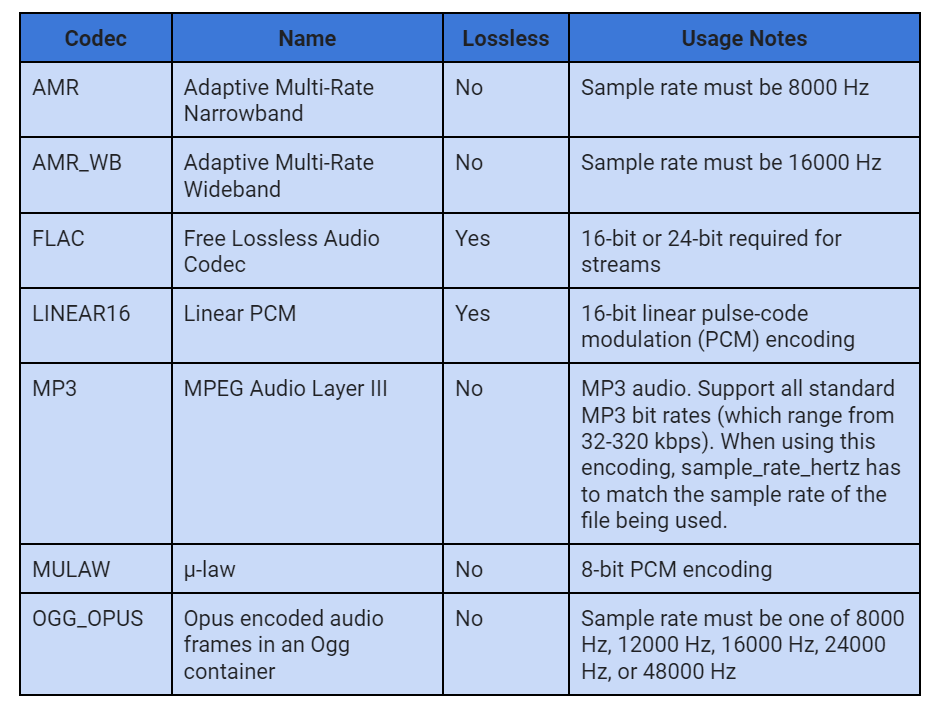

// const encoding = 'Encoding of the audio file, e.g. LINEAR16';

// const filename = 'Local path to audio file, e.g. /path/to/audio.raw';

// const targetLanguage = 'BCP-47 target language code, e.g. es-ES';

// const sourceLanguage = 'BCP-47 source language code, e.g. en-US';

const config = {

audioConfig: {

audioEncoding: encoding,

sourceLanguageCode: sourceLanguage,

targetLanguageCode: targetLanguage,

},

single_utterance: true,

};

// first request must simply contain a streaming config and no data

const initialRequest = {

streamingConfig: config,

audioContent: null,

};

const readStream = fs.createReadStream(filename, {

highWaterMark: 4096,

encoding: 'base64',

});

const chunks = [];

readStream

.on('data', chunk => {

const request = {

streamingConfig: config,

audioContent: chunk.toString(),

};

chunks.push(request);

})

.on('close', () => {

// Config-only requests should be first in the stream of requests

stream.write(initialRequest);

for (let i = 0; i < chunks.length; i++) {

stream.write(chunks[i]);

}

stream.end();

});

const stream = client.streamingTranslateSpeech().on('data', response => {

const {result} = response;

if (result.textTranslationResult.isFinal) {

console.log(

`\nFinal translation: ${result.textTranslationResult.translation}`

);

console.log(`Final recognition result: ${result.recognitionResult}`);

} else {

console.log(

`\nPartial translation: ${result.textTranslationResult.translation}`

);

console.log(`Partial recognition result: ${result.recognitionResult}`);

}

});
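
The request ordering used by the file sample above (one config-only request, then the audio in chunks) can be checked without calling the API. The following sketch builds the same sequence from an in-memory buffer; buildRequests and exampleConfig are hypothetical names introduced here, and the language codes are placeholder values.

```javascript
// Hypothetical helper: turns an in-memory audio buffer into the ordered list
// of requests that the file sample writes to the stream.
function buildRequests(config, audio, chunkSize = 4096) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError('chunkSize must be a positive integer');
  }
  // The config-only request goes first, mirroring initialRequest in the sample.
  const requests = [{streamingConfig: config, audioContent: null}];
  for (let offset = 0; offset < audio.length; offset += chunkSize) {
    const chunk = audio.subarray(offset, offset + chunkSize);
    requests.push({
      streamingConfig: config,
      audioContent: chunk.toString('base64'),
    });
  }
  return requests;
}

// Placeholder configuration, shaped like the config object in the sample.
const exampleConfig = {
  audioConfig: {
    audioEncoding: 'linear16',
    sourceLanguageCode: 'en-US',
    targetLanguageCode: 'es-ES',
  },
  singleUtterance: true,
};

// 10,000 bytes of silence split into 4,096-byte chunks gives 3 audio requests.
const requests = buildRequests(exampleConfig, Buffer.alloc(10000));
console.log(requests.length); // 4 (1 config request + 3 audio chunks)
```

Building the list up front keeps the ordering rule in one place; each entry could then be written to the stream in turn, followed by stream.end().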

Translating speech from a microphone

// Allow user input from terminal
const readline = require('readline');

const rl = readline.createInterface({
  input: process.stdin,
  output: process.stdout,
});

function doTranslationLoop() {
  rl.question("Press Enter to translate or type 'q' to quit: ", answer => {
    if (answer.toLowerCase() === 'q') {
      rl.close();
    } else {
      translateFromMicrophone();
    }
  });
}

// node-record-lpcm16 records audio from the microphone
const recorder = require('node-record-lpcm16');

// Imports the Cloud Media Translation client library
const {
  SpeechTranslationServiceClient,
} = require('@google-cloud/media-translation');

// Creates a client
const client = new SpeechTranslationServiceClient();

function translateFromMicrophone() {
  /**
   * TODO for developer: uncomment the following lines before running the sample.
   */
  //const encoding = 'linear16';
  //const sampleRateHertz = 16000;
  //const sourceLanguage = 'Language to translate from, as BCP-47 locale';
  //const targetLanguage = 'Language to translate to, as BCP-47 locale';

  console.log('Begin speaking ...');

  const config = {
    audioConfig: {
      audioEncoding: encoding,
      sourceLanguageCode: sourceLanguage,
      targetLanguageCode: targetLanguage,
    },
    singleUtterance: true,
  };

  // The first request must contain only the streaming config and no audio data
  const initialRequest = {
    streamingConfig: config,
    audioContent: null,
  };

  let currentTranslation = '';
  let currentRecognition = '';

  // Create the streaming translation call
  const stream = client
    .streamingTranslateSpeech()
    .on('error', e => {
      // gRPC status code 4 is DEADLINE_EXCEEDED
      if (e.code && e.code === 4) {
        console.log('Streaming translation reached its deadline.');
      } else {
        console.log(e);
      }
    })
    .on('data', response => {
      const {result, speechEventType} = response;
      if (speechEventType === 'END_OF_SINGLE_UTTERANCE') {
        // The utterance is over: print the last results and stop recording
        console.log(`\nFinal translation: ${currentTranslation}`);
        console.log(`Final recognition result: ${currentRecognition}`);

        stream.destroy();
        recording.stop();
      } else {
        currentTranslation = result.textTranslationResult.translation;
        currentRecognition = result.recognitionResult;
        console.log(`\nPartial translation: ${currentTranslation}`);
        console.log(`Partial recognition result: ${currentRecognition}`);
      }
    });

  let isFirst = true;

  // Start a recording and transmit microphone data to the Media Translation API
  const recording = recorder.record({
    sampleRateHertz: sampleRateHertz,
    threshold: 0, // silence threshold
    recordProgram: 'rec',
    silence: '5.0', // seconds of silence before ending
  });

  recording
    .stream()
    .on('data', chunk => {
      // Send the config-only request before the first audio chunk
      if (isFirst) {
        stream.write(initialRequest);
        isFirst = false;
      }
      const request = {
        streamingConfig: config,
        audioContent: chunk.toString('base64'),
      };
      if (!stream.destroyed) {
        stream.write(request);
      }
    })
    .on('close', () => {
      // Prompt again once the recording has stopped
      doTranslationLoop();
    });
}

doTranslationLoop();
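
The result handling in the microphone sample above can be separated from the streaming call and tried out with simulated responses. The following is a minimal sketch under that assumption: createResultTracker is a hypothetical helper, the field names are copied from the sample, and the response values are made up for illustration.

```javascript
// Hypothetical helper, not part of the client library: keeps the latest
// partial result and reports it as final when the utterance ends.
function createResultTracker() {
  let translation = '';
  let recognition = '';
  return function track(response) {
    const {result, speechEventType} = response;
    if (speechEventType === 'END_OF_SINGLE_UTTERANCE') {
      return {done: true, translation, recognition};
    }
    if (result && result.textTranslationResult) {
      translation = result.textTranslationResult.translation;
      recognition = result.recognitionResult;
    }
    return {done: false, translation, recognition};
  };
}

// Simulated responses, shaped like the ones the sample reads.
const track = createResultTracker();
const simulated = [
  {
    result: {
      textTranslationResult: {translation: 'Hola'},
      recognitionResult: 'Hello',
    },
  },
  {
    result: {
      textTranslationResult: {translation: 'Hola mundo'},
      recognitionResult: 'Hello world',
    },
  },
  {speechEventType: 'END_OF_SINGLE_UTTERANCE'},
];

// Each state records what was known after the corresponding response.
const states = simulated.map(response => track(response));
console.log(states[states.length - 1]);
```

The last state has done set to true and carries the most recent partial translation and recognition text, which is what the sample prints as the final result.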